A simple Airflow ETL pipeline, written in Python, that gathers various data on Reddit's top 100 subreddits. The Reddit API and its Python client, PRAW, are used for data retrieval.

This project aims to:

- Build a simple ETL system as a personal fun project

- Demonstrate basic Airflow skills and workflow, including data retrieval from an API service

The pipeline performs the following steps:

- Get the names of the top 100 subreddits

- Get details and data on each subreddit via the Reddit API

- Get hot, new, and top submissions for each subreddit via the Reddit API

- Load the retrieved data into MongoDB

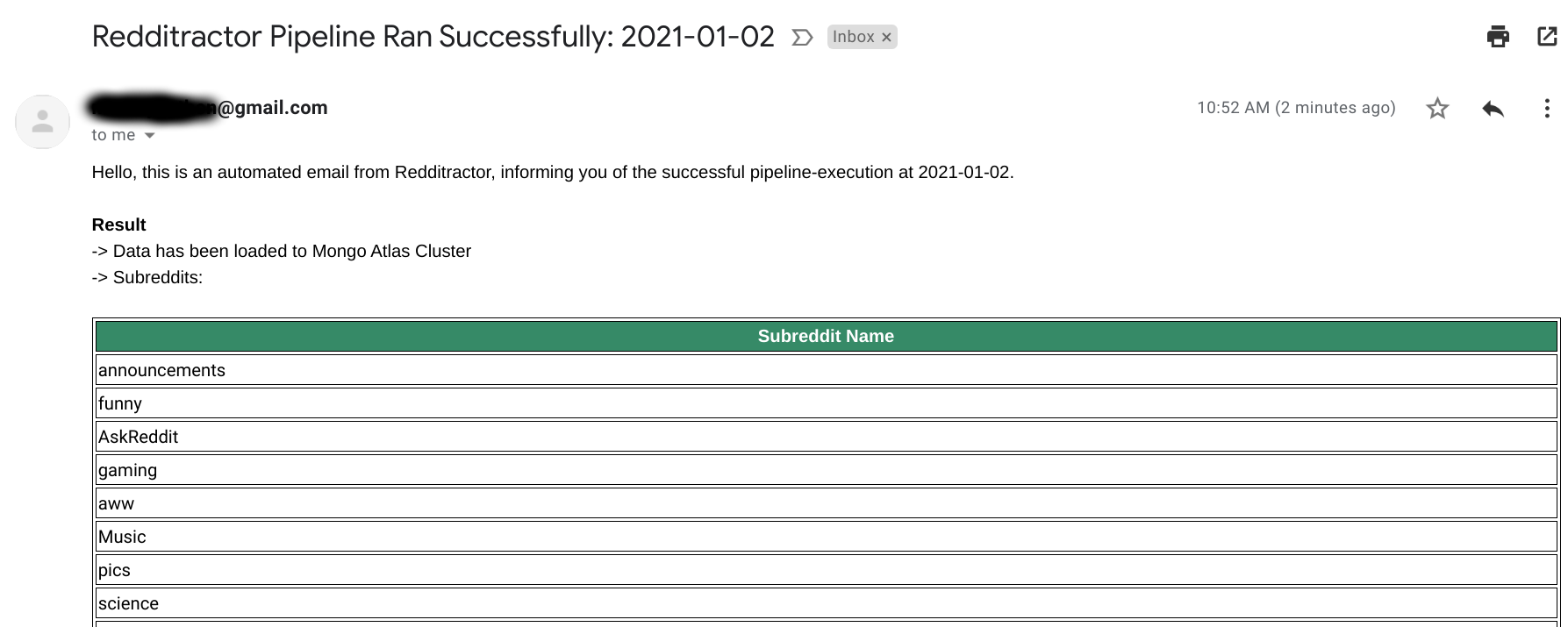

- Send an email report confirming the successful DAG run
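The submission-fetching step can be sketched roughly as follows. This is a minimal sketch, not the project's actual code: it assumes PRAW's `Subreddit` object, whose `hot`, `new`, and `top` listing methods accept a `limit` keyword, and the record fields and helper names (`fetch_submissions`, `submission_to_record`) are hypothetical choices for illustration. The function only relies on duck typing, so it can be exercised without network access.

```python
from typing import Any, Dict, List

# The three listings named in the pipeline steps. PRAW's Subreddit exposes
# each as a method of the same name that accepts a `limit` keyword.
LISTINGS = ("hot", "new", "top")


def submission_to_record(subreddit_name: str, listing: str, submission: Any) -> Dict[str, Any]:
    """Flatten a PRAW-style Submission into a plain dict that MongoDB can store.

    The chosen fields are a hypothetical selection, not the project's schema.
    """
    return {
        "subreddit": subreddit_name,
        "listing": listing,
        "id": submission.id,
        "title": submission.title,
        "score": submission.score,
        "num_comments": submission.num_comments,
        "created_utc": submission.created_utc,
    }


def fetch_submissions(subreddit: Any, limit: int = 10) -> List[Dict[str, Any]]:
    """Collect hot/new/top submissions from a PRAW-style Subreddit object.

    `subreddit` can be anything with a `display_name` attribute and
    hot/new/top methods taking `limit=`, e.g. praw.Reddit(...).subreddit("python").
    """
    records: List[Dict[str, Any]] = []
    for listing in LISTINGS:
        listing_method = getattr(subreddit, listing)  # e.g. subreddit.hot
        for submission in listing_method(limit=limit):
            records.append(submission_to_record(subreddit.display_name, listing, submission))
    return records
```

With PRAW installed, something like `reddit = praw.Reddit(client_id=..., client_secret=..., user_agent=...)` followed by `fetch_submissions(reddit.subreddit("python"), limit=25)` would fetch live data. Mapping `reddit_key`/`reddit_secret` to `client_id`/`client_secret` is an assumption, and the subreddit name, limit, and user agent are illustrative.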

In order for the pipeline to work properly, the following Airflow variables are required.

A MongoDB connection URI. Retrieved data will be stored in the MongoDB instance it points to.
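The load step could look roughly like the sketch below, assuming pymongo. In the DAG, the collection would come from something like `MongoClient(uri)["reddit"]["submissions"]`, where the database and collection names are hypothetical. The function only needs an object with an `insert_many` method, so a stand-in can replace a live database when testing.

```python
from typing import Any, Dict, Iterable


def load_records(collection: Any, records: Iterable[Dict[str, Any]]) -> int:
    """Insert records into a pymongo-style collection and return how many were sent.

    `collection` only needs an insert_many(list_of_dicts) method, which
    pymongo.collection.Collection provides.
    """
    # Copy each record: pymongo adds an "_id" key to the dicts it inserts.
    batch = [dict(record) for record in records]
    if not batch:
        # pymongo's insert_many rejects an empty list, so skip the call.
        return 0
    collection.insert_many(batch)
    return len(batch)
```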

Credentials for the Reddit API. The pipeline assumes the following structure for `reddit_credentials`:

```json
{
  "reddit_credentials": {
    "reddit_key": "YOUR_REDDIT_KEY",
    "reddit_secret": "YOUR_REDDIT_SECRET"
  }
}
```

The pipeline is configured to send an email with a result summary after each successful DAG run.

The following variables are required for it to work:

- `email_sender`: email address to send notifications from

- `email_receiver`: email address to send notifications to
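Before importing the variables into Airflow, a local JSON file holding them could be checked with a small helper like the one below. This is a hypothetical sketch: the helper name is invented, and the MongoDB URI variable is left out because its name is not given here. Inside a running DAG, Airflow's `Variable.get("reddit_credentials", deserialize_json=True)` would return the inner credentials object directly.

```python
import json
from typing import Any, Dict

# Variable names taken from the descriptions above; the MongoDB URI variable
# is omitted because its name is not specified.
REQUIRED_VARIABLES = ("reddit_credentials", "email_sender", "email_receiver")
REQUIRED_CREDENTIAL_KEYS = ("reddit_key", "reddit_secret")


def check_variables(variables_json: str) -> Dict[str, Any]:
    """Parse a JSON document of Airflow variables and verify the required entries."""
    variables = json.loads(variables_json)
    missing = [name for name in REQUIRED_VARIABLES if name not in variables]
    if missing:
        raise KeyError(f"missing Airflow variables: {missing}")
    credentials = variables["reddit_credentials"]
    if not isinstance(credentials, dict):
        raise TypeError("reddit_credentials must be a JSON object")
    missing_keys = [key for key in REQUIRED_CREDENTIAL_KEYS if key not in credentials]
    if missing_keys:
        raise KeyError(f"reddit_credentials is missing: {missing_keys}")
    return variables
```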

Here is a sample of the email report.
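The report's exact contents are not specified here. As a hypothetical sketch, a plain-text summary addressed with `email_sender` and `email_receiver` could be assembled with the standard library's `EmailMessage`. The subject line, the body layout, and the per-subreddit `counts` input are assumptions for illustration.

```python
from email.message import EmailMessage
from typing import Dict


def build_report(sender: str, receiver: str, counts: Dict[str, int]) -> EmailMessage:
    """Compose a plain-text summary email from per-subreddit record counts."""
    msg = EmailMessage()
    msg["Subject"] = f"Reddit ETL: {len(counts)} subreddits loaded"
    msg["From"] = sender
    msg["To"] = receiver
    # One line per subreddit, sorted by name, followed by a grand total.
    lines = [f"{name}: {count} records" for name, count in sorted(counts.items())]
    lines.append(f"Total: {sum(counts.values())} records")
    msg.set_content("\n".join(lines))
    return msg
```

The message could then be handed to `smtplib.SMTP(host).send_message(msg)`, where the SMTP host is deployment-specific and not given in this README.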

Docker containers are used for deployment (image: `puckel/docker-airflow:1.10.9`).

To deploy, run `docker-compose up` in the terminal.