Note: when choosing a medical imaging problem to solve with machine learning, it is tempting to assume that automated detection of certain conditions would be the most valuable thing to solve. However, this is not usually the case. Quite often, detecting whether a condition is present is not so difficult for a human observer who is already looking for it. The greatest value usually lies in productivity gains: helping prioritize the more important exams, focusing a human reader's attention on small details, or speeding up tedious tasks is usually much more valuable. Therefore, it is important to understand well the clinical use case in which the algorithm will be used, and to think of end-user value first.

- FDA cleared AI medical products that are related to radiology and other imaging domains

- The Cancer Imaging Archive

- TigerLily: Finding drug interactions in silico with the Graph

- How it fits into medicine and the healthcare system.

- How to use AI to solve 2D Imaging problems

- How to take AI from the bench to bedside to be used by doctors to improve patient lives

- X-ray: a 2D imaging technique that projects a type of radiation called x-rays down at the body from a single direction to capture a single image.

- Ultrasound: a 2D imaging technique that uses high-frequency sound waves to generate images.

- Computed Tomography (CT): a 3D imaging technique that emits x-rays from many different angles around the human body to capture more detail from more different angles.

- Magnetic Resonance Imaging (MRI): a 3D imaging technique that uses strong magnetic fields and radio waves to create images of areas of the body from all different angles.

- 2D imaging: an imaging technique in which pictures are taken from a single angle.

- 3D imaging: an imaging technique in which pictures are taken from different angles to create a volume of images.

- Picture Archiving and Communication System (PACS)

- Every imaging center and hospital has a PACS. These systems allow all medical imaging to be stored on the hospital's servers and transferred to different departments throughout the hospital.

- Diagnostic Imaging

- In diagnostic situations, a clinician orders an imaging study because they believe that a disease may be present based on the patient's symptoms. Diagnostic imaging can be performed in emergency settings as well as non-emergency settings.

- Clinician believes something is wrong with the patient

- Need imaging to verify

- e.g., diagnosing a brain tumor

- Screening

Screening studies are performed on populations of individuals who fall into risk groups for certain diseases. These tend to be diseases that are relatively common, have serious consequences, but also have the potential of being reversed if detected and treated early. For example, individuals who are above a certain age with a long smoking history are candidates for lung cancer screening which is performed using x-rays on an annual basis.

- Nothing acutely wrong with the patient

- Patient in risk group for particular diseases

- Imaging regularly for early detection

- e.g., regular colonoscopy screening because of family history.

- Track the growth of a tumor over time -> Segmentation

- Identify the area of the lung where the fluid is -> Localization

- Determine whether a tumor is malignant or benign -> Classification

- Model

- Heuristic

- Bernoulli Distribution

- Prior

- Likelihood

- Evidence

- Posterior

- Vocabulary

- Laplace Smoothing

- Tokenization

- Featurization

- Lemmatization

- Stemming

- Stop Word

- Vectorizer: Used in the featurization step. It transforms some input into something else. One example is a binary vectorizer, which transforms tokenized messages into a binary vector indicating which items in the vocabulary appear in the message.

- Featurization: The process of transforming raw inputs into something a model can perform training and prediction on.

Clinical stakeholders are radiologists, diagnosing clinicians and patients. Radiologists are likely the end-users of an AI application for 2D imaging. They care about low disruption to workflow and they play an important advisory role in the algorithm development process. Clinicians have less visibility into the inner workings of an algorithm. They also care about low disruption to workflows and they care about the interpretability of algorithm output.

Industry stakeholders include medical device companies, software companies, and hospitals. Many medical device companies typically have accompanying imaging software.

The main regulatory stakeholder in the medical imaging world is the Food and Drug Administration (FDA). The FDA treats AI algorithms as medical devices. Medical devices are broken down into three classes by the FDA, Class I, Class II, and Class III, based on the potential risk they present to the patient. A device's class dictates the safety controls, which in turn dictate which regulatory pathway it must go down. The two main regulatory pathways for medical devices are 510(k) and Pre-market Approval (PMA). Lower risk devices (Classes I & II) usually take a 510(k) submission pathway. Higher risk devices and algorithms (Class III) must go through PMA.

The patient is always a stakeholder when we are developing algorithms to read clinical imaging studies.

This tool will presumably help a radiologist to more accurately define the boundaries of a nodule and how they change over time, meaning it will be part of the radiologist's workflow and therefore they are a stakeholder.

The software developer who creates this algorithm will be a key stakeholder as they will be responsible for describing the algorithm to the FDA.

The hospital will likely be the purchaser of your algorithm, and they are a key stakeholder in that the algorithm must be beneficial to them financially in order to be adopted into their radiology practice.

- X-Ray: Type of 2D imaging that uses a type of radiation to take pictures of the body's internal structures.

- Computed Tomography: Type of 3D imaging that uses x-rays to take pictures at multiple angles of the body's internal structures.

- Magnetic Resonance Imaging: Type of 3D imaging that uses radio waves and strong magnetic fields at multiple angles to take pictures of the body's internal structures

- Mammogram: A type of 2D x-ray that is specialized for breast imaging

- Digital Pathology: A type of 2D imaging that involves the digitization of microscopy images of cell-level biological material

- Radiologist: A specialized type of clinician who is trained to read medical imaging data

- PACS: Picture archiving and communication system, used for storing and viewing medical images within and across hospitals

- Screening: A type of test that is performed on individuals who are in a risk group for a given disease

- Sensitivity: Proportion of actual positive cases that a test correctly identifies as positive

- Specificity: Proportion of actual negative cases that a test correctly identifies as negative
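As a minimal sketch of the last two glossary entries, sensitivity and specificity can be computed from true and predicted binary labels. The function name `sensitivity_specificity` and the example labels are illustrative assumptions, not part of the original notes.

```python
def sensitivity_specificity(y_true, y_pred):
    """Return (sensitivity, specificity) for binary labels, where 1 = disease present."""
    pairs = list(zip(y_true, y_pred))
    tp = sum(1 for t, p in pairs if t == 1 and p == 1)  # true positives
    fn = sum(1 for t, p in pairs if t == 1 and p == 0)  # missed positives
    tn = sum(1 for t, p in pairs if t == 0 and p == 0)  # true negatives
    fp = sum(1 for t, p in pairs if t == 0 and p == 1)  # false alarms
    sensitivity = tp / (tp + fn) if (tp + fn) else float("nan")
    specificity = tn / (tn + fp) if (tn + fp) else float("nan")
    return sensitivity, specificity


# Hypothetical labels for eight exams.
y_true = [1, 1, 1, 0, 0, 0, 0, 1]
y_pred = [1, 0, 1, 0, 0, 1, 0, 1]
sens, spec = sensitivity_specificity(y_true, y_pred)
```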

DICOM stands for "Digital Imaging and Communications in Medicine". It is the standard for the communication and management of medical imaging information and related data.

- DICOM ensures the "interoperability" of medical imaging systems by making it easier to perform the following actions on medical images:

- Produce, Store, Display, Send, Query, Process, Retrieve, Print

- By using DICOM, one can get derived structured documents and manage the related workflow more conveniently.

- A DICOM file is a medical imaging file in a format that conforms to the DICOM standard.

- A DICOM file contains information about the imaging acquisition method, the actual medical images, and patient information. It has a header component that contains information about the acquired image and an image component that is a set of pixel data representing the actual images.

- Protected Health Information (PHI) is part of DICOM, whereas clinical data and the radiologist's report are not part of DICOM.

- DICOM studies and series: With 2D imaging, a single 2D image is known as a single DICOM series. All image series combined comprise a study of the patient, known as a DICOM study.

For efficient algorithm training, the best practice is to pre-extract all data from DICOM headers into a dataframe.
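A minimal sketch of that pre-extraction step follows. It assumes each header is an object exposing standard DICOM attribute keywords (`PatientID`, `Modality`, and so on) as attributes, which is how a header read with `pydicom.dcmread(path, stop_before_pixels=True)` behaves. To stay self-contained, the example uses `SimpleNamespace` stand-ins with made-up values instead of real files, and the helper name `headers_to_rows` is hypothetical.

```python
from types import SimpleNamespace

# Header fields to extract; these are standard DICOM attribute keywords.
FIELDS = ["PatientID", "Modality", "BodyPartExamined", "Rows", "Columns"]


def headers_to_rows(datasets, fields=FIELDS):
    """Flatten header objects into a list of dicts, one row per image."""
    rows = []
    for ds in datasets:
        # Missing fields become None rather than raising an error.
        rows.append({name: getattr(ds, name, None) for name in fields})
    return rows


# Stand-ins for real headers (values are invented for illustration).
fake_headers = [
    SimpleNamespace(PatientID="p1", Modality="DX", BodyPartExamined="CHEST",
                    Rows=1024, Columns=1024),
    SimpleNamespace(PatientID="p2", Modality="CT", Rows=512, Columns=512),
]
rows = headers_to_rows(fake_headers)
```

Passing `rows` to `pandas.DataFrame` would then give one dataframe row per image, ready for filtering and exploration.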

The DICOM header has other applications besides training models. It can be used to mitigate the risks of the algorithm. It can also be used to optimize the image processing workflow.

Some metadata may come from the DICOM headers, patient history, and image labels. Once we have all of a dataset's metadata stored in a single place, we'll then want to explore data features.

Histograms help us look at distributions of single variables. Sometimes we only want to look at distributions within a single class of our data.

Scatterplots are useful for assessing relationships between two variables.

Pearson Correlation Coefficient measures how two variables are linearly related. The value ranges from -1 to 1. A value of 1 or -1 means the two variables are perfectly linearly related. A value of 0 implies there is no linear relationship between the two variables.
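For reference, the coefficient can be computed directly from its definition (covariance divided by the product of the standard deviations). This is a small illustrative sketch; the function name `pearson_r` and the sample values are assumptions.

```python
import math


def pearson_r(xs, ys):
    """Pearson correlation coefficient of two equal-length sequences."""
    n = len(xs)
    mean_x = sum(xs) / n
    mean_y = sum(ys) / n
    cov = sum((x - mean_x) * (y - mean_y) for x, y in zip(xs, ys))
    var_x = sum((x - mean_x) ** 2 for x in xs)
    var_y = sum((y - mean_y) ** 2 for y in ys)
    return cov / math.sqrt(var_x * var_y)


r_pos = pearson_r([1, 2, 3, 4], [2, 4, 6, 8])    # perfectly linear, increasing
r_neg = pearson_r([1, 2, 3, 4], [8, 6, 4, 2])    # perfectly linear, decreasing
r_none = pearson_r([1, 2, 3, 4], [1, -1, -1, 1])  # symmetric, no linear trend
```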

Co-Occurrence Matrices are useful for assessing how frequently different classifications co-occur together.

Not all 2D medical images are stored as a DICOM. Microscopy images are not stored in DICOM since they do not come from a digital machine. Instead, they are biological data and come from smeared physical cells from patients.

The first step of transforming microscopy into a digital image is to get the cell sample from a patient. Then cells are dyed into different colors based on their structure and viewed by a microscope. The microscopy data is then captured by a camera to form a digital image. This transformation technique is called digital pathology.

Once images are digitized, they can be processed with ML in the same way as you would with the pixel data extracted from DICOM.

Digital Imaging and Communications in Medicine (DICOM) is the standard for the communication and management of medical imaging information and related data

An object or distortion in an image that reduces its quality

An object in a medical image that is not biological material from the patient, such as a pacemaker or wire

Metadata: A set of data that describes another set of data

Pathologist: A special type of clinician who reads and interprets microscopy and digital pathology data

the distribution of all pixels' intensity values that comprise an image

Protected health information (PHI): any individually identifiable health information, including demographic data, insurance information, and other information used to identify a patient

The biggest difference between ML and DL is the concept of feature selection. Classical machine learning algorithms require predefined features in images, and designing those features takes a lot of time and effort. Deep learning was groundbreaking because it discovers important features on its own, taking this burden off algorithm researchers.

Otsu's method is often used for background extraction and classification. It takes the intensity distribution of an image and searches it to find the intensity threshold that minimizes the variance within each of the two classes. Once it finds that threshold, it considers every pixel on one side of the threshold to be one class and every pixel on the other side to be another class.
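The threshold search described above matches what is commonly called Otsu's method. The sketch below is a plain-Python illustration on a flat list of 8-bit intensities, not a production implementation. It maximizes the between-class variance, which is equivalent to minimizing the weighted within-class variance because the total variance is fixed. The function name and the toy pixel values are assumptions.

```python
def otsu_threshold(pixels, levels=256):
    """Return threshold t; pixels <= t form one class, pixels > t the other."""
    hist = [0] * levels
    for p in pixels:
        hist[p] += 1

    total = len(pixels)
    sum_all = sum(i * count for i, count in enumerate(hist))

    best_t, best_between = 0, -1.0
    w0, sum0 = 0, 0  # pixel count and intensity sum of the lower class
    for t in range(levels):
        w0 += hist[t]
        sum0 += t * hist[t]
        w1 = total - w0
        if w0 == 0:
            continue  # lower class still empty
        if w1 == 0:
            break     # upper class empty, nothing left to split
        mu0 = sum0 / w0
        mu1 = (sum_all - sum0) / w1
        between = w0 * w1 * (mu0 - mu1) ** 2  # proportional to between-class variance
        if between > best_between:
            best_t, best_between = t, between
    return best_t


# Toy "image": dark background near 10 and bright foreground near 200.
pixels = [10] * 50 + [12] * 50 + [200] * 50 + [205] * 50
t = otsu_threshold(pixels)
foreground_mask = [p > t for p in pixels]
```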

There are several sets of convolutional layers in a CNN model. Each layer is made up of a set of filters that are looking for features. Layers that come early in a CNN model look for very simple features such as directional lines and layers that come later look for complex features such as shapes.

Note that the input image size must match the size of the first set of convolutional layers.

U-Net is used for segmentation problems and it is more commonly used in 3D medical imaging. It's important to note that a limitation of 2D imaging is the inability to measure the volume of structures. 2D medical imaging only measures the area with respect to the angle of the image taken, which limits its utility in segmenting the whole area.

The gold standard for a particular type of data refers to the method that detects disease with the highest sensitivity and accuracy. Any new method that is developed can be compared to this to determine its performance. The gold standard is different for different diseases.

Oftentimes, the gold standard is unattainable for an algorithm developer, so you still need to establish a ground truth to compare your algorithm against.

Ground truths can be created in many different ways. Typical sources of ground truth are

- Limitations: difficult and expensive to obtain.

- Limitations: may not be accurate.

- Limitations: expensive and requires a lot of time to come up with labeling protocols.

- Limitations: may not be accurate.

The silver standard involves hiring several radiologists to each make their own diagnosis of an image. The final diagnosis is then determined by a voting system across all of the radiologists’ labels for each image. Note, sometimes radiologists’ experience levels are taken into account and votes are weighted by years of experience.
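The voting scheme can be sketched as a weighted majority vote. The function name `silver_standard_label`, the label strings, and the use of years of experience as weights are illustrative assumptions; ties are resolved in favor of the label seen first.

```python
from collections import defaultdict


def silver_standard_label(labels, weights=None):
    """Combine one label per reader into a single label by (weighted) voting."""
    if weights is None:
        weights = [1.0] * len(labels)  # plain majority vote
    totals = defaultdict(float)
    for label, weight in zip(labels, weights):
        totals[label] += weight
    # max() returns the first label with the highest total, so ties go to
    # whichever label appeared first in the input.
    return max(totals, key=totals.get)


reads = ["nodule", "normal", "nodule"]
unweighted = silver_standard_label(reads)
# Hypothetical years of experience for the three radiologists.
weighted = silver_standard_label(reads, weights=[2, 15, 3])
```

In the weighted case the single experienced reader outvotes the two junior readers, which shows how much the weighting choice can change the resulting ground truth.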

Intensity normalization is good practice and should always be done prior to using data for training. Making all of your intensity values fall within a small range that is close to zero helps the weights on your convolutional filters stay under control.

- zero-meaning: subtract the image's mean intensity value from every pixel.

- standardization: subtract the mean from each pixel and divide by the image’s standard deviation.
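Both normalization options can be sketched on a flat list of pixel intensities. This is a minimal standard-library illustration with assumed function names; real pipelines typically apply the same arithmetic to image arrays.

```python
import statistics


def zero_mean(pixels):
    """Subtract the image's mean intensity from every pixel."""
    mean = statistics.fmean(pixels)
    return [p - mean for p in pixels]


def standardize(pixels):
    """Subtract the mean and divide by the image's standard deviation."""
    mean = statistics.fmean(pixels)
    std = statistics.pstdev(pixels)
    if std == 0:
        return [0.0 for _ in pixels]  # constant image: avoid division by zero
    return [(p - mean) / std for p in pixels]


pixels = [0, 50, 100, 150, 200]
centered = zero_mean(pixels)
standardized = standardize(pixels)
```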

Image augmentation allows us to create different versions of the original data. Keras provides the ImageDataGenerator class for image augmentation.

Not all image augmentation methods are appropriate for medical imaging. A vertical flip should never be applied, and validation data should NEVER be augmented.

CNNs have an input layer that specifies the size of the image they can process. Keras's flow_from_directory has a target_size parameter to resize images.

- Remove potential noise from your images(e.g background extraction)

- Enforce some normalization across images(Zero-mean, standardization)

- Enlarge your dataset(Image Augmentation)

- Resize for your CNN architecture's required input

ImageDataGenerator(rescale = 1. / 255)

There is also a function called flow_from_dataframe that can be used instead of flow_from_directory. It is easier when all of the image file paths are stored in a dataframe, and it is otherwise identical in function to flow_from_directory. This may be a handy tool in your project.

Each time the entire training data is passed through the CNN, we call this one epoch. At the end of each epoch, the model uses a loss function to calculate how different its predictions are from the ground truth of the training images; this difference is the training loss. The network then uses the training loss to update the weights of its filters. This technique is called backpropagation.

At the end of each epoch, we also use that loss function to evaluate the loss on the validation set and obtain a validation loss that measures how the prediction matches the validation data. But we don’t update weights using validation loss. The validation set is just to test the performance of the model.

If the loss is small, it means the model did well classifying the images that it saw in that epoch.

If the training loss keeps going down while the validation loss stops decreasing after a few epochs, we say the model is overfitting. It suggests the model is still learning how to better classify the training data but NOT the validation data.
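One simple way to spot this pattern in recorded loss curves is to look for the epoch after which validation loss stops improving while training loss keeps falling. The sketch below is a heuristic under assumed names (`detect_overfitting`, `patience`); the loss values are invented for illustration.

```python
def detect_overfitting(train_loss, val_loss, patience=3):
    """Return the epoch with the best validation loss if validation loss then
    failed to improve for `patience` epochs while training loss kept falling;
    return None if no such pattern is found."""
    best_epoch = 0
    for epoch in range(1, len(val_loss)):
        if val_loss[epoch] < val_loss[best_epoch]:
            best_epoch = epoch  # validation is still improving
        elif epoch - best_epoch >= patience:
            # Validation has stalled; confirm training loss is still dropping.
            if train_loss[epoch] < train_loss[best_epoch]:
                return best_epoch
    return None


train = [1.0, 0.8, 0.6, 0.5, 0.4, 0.3, 0.2]
val = [1.1, 0.9, 0.8, 0.85, 0.9, 0.95, 1.0]
overfit_from = detect_overfitting(train, val)
healthy = detect_overfitting([1.0, 0.8, 0.6], [1.1, 0.9, 0.7])
```

The returned epoch is a reasonable candidate for the checkpoint to keep, since it is the last point where the model was still improving on data it was not trained on.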

To avoid overfitting, you can A) change your model’s architecture, or B) change some of the parameters. Some parameters you can change are:

- Learning rate

- Dropout

- More variation in training data

- Training set: Set of data that your ML or DL model uses to learn its parameters, usually 80% of your entire dataset

- Validation set: Set of data that the algorithm developer uses to establish whether or not their algorithm is learning the correct features and parameters

- Gold standard: The method that detects your disease with the highest sensitivity and accuracy.

- Ground truth: A label used to compare against your algorithm's output and establish its performance

- Silver standard: A method to create a ground truth that takes into account several different label sources

- Image augmentation: The process of altering training data slightly to expand the training dataset

- Fine-tuning: The process of using an existing algorithm's architecture and weights created for a different task, and re-training them for a new task

- Batch size: The number of images used at a time to train an algorithm

- Epoch: A single run of sending the entire set of training data through an algorithm

- Learning rate: The speed at which your optimizer function moves towards a minimum by updating algorithm weights through back-propagation

- Overfitting: A phenomenon that happens when an algorithm specifically learns features of a training dataset that do not generalize beyond that specific dataset

The FDA will require you to provide an intended use statement and an indications for use statement. The intended use statement tells the FDA exactly what your algorithm is used for, not what it could be used for. The FDA will use this statement to define the risk and class of your algorithm.

- e.g., assisting the radiologist in detecting breast abnormalities on mammograms

You can use the indications for use statement to make more specific suggestions about how your algorithm could be used. The indications for use statement describes the precise situations and reasons where and why you would use this device.

- e.g., screening mammography studies in women between the ages of 20 and 60 with no prior history of breast cancer

When the FDA talks about limitations, they want to know more about scenarios where your algorithm is not safe and effective to use. In other words, they want to know where your algorithm will fail.

If your algorithm needs to work in an emergency workflow, you need to consider computational limitations and inform the FDA that the algorithm does not achieve fast performance in the absence of certain types of computational infrastructure. This would let your end consumers know if the device is right for them.

After your algorithm is cleared by the FDA and released, the FDA continuously monitors it through a system called Medical Device Reporting. Any time one of your end-users discovers a malfunction in your software, they report this back to you, the manufacturer, and you are required to report it back to the FDA. Depending on the severity of the malfunction, and whether or not it is life-threatening, the FDA will either completely recall your device or require you to update its labeling and explicitly state new limitations that have been encountered.

Precision looks at the number of positive cases accurately identified by an algorithm divided by all of the cases identified as positive by the algorithm no matter whether they are identified right or wrong. This metric is also commonly referred to as the positive predictive value.

A high-precision test gives you more confidence that a positive test result is actually positive, since a high-precision test has few false positives. This metric, however, does not take false negatives into account. So a high-precision test could still miss a lot of positive cases. Because of this, high-precision tests don’t necessarily make for great stand-alone diagnostics but are beneficial when you want to confirm a suspected diagnosis.

Recall, also known as sensitivity, is the number of positive cases accurately identified by an algorithm divided by all of the actual positive cases. When a high-recall test returns a negative result, you can be confident that the result is truly negative, since a high-recall test has few false negatives. Recall does not take false positives into account though, so you may have high recall but still be labeling a lot of negative cases as positive. Because of this, high-recall tests are good for things like screening studies, where you want to make sure someone doesn’t have a disease, or worklist prioritization, where you want to make sure that people without the disease are being de-prioritized.

Optimizing one of these metrics usually comes at the expense of sacrificing the other.

CNN models output a probability ranging from 0 to 1 that indicates how likely the image is to belong to a class. We need a cut-off value, called a threshold, to decide whether the probability is high enough for the image to belong to that class. Recall and precision vary when a different threshold is chosen.

The precision-recall curve plots recall on the x-axis and precision on the y-axis. Each point along the curve represents precision and recall under a different threshold value.

For binary classification problems, the F1 score combines both precision and recall. F1 score allows us to better measure a test’s accuracy when there are class imbalances. Mathematically, it is the harmonic mean of precision and recall.
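To make the threshold trade-off concrete, the sketch below computes precision, recall, and F1 for model probabilities at a chosen threshold. The function name and the example probabilities are assumptions made for illustration.

```python
def precision_recall_f1(y_true, probs, threshold=0.5):
    """Binarize probabilities at `threshold`, then compute the three metrics."""
    preds = [1 if p >= threshold else 0 for p in probs]
    tp = sum(1 for t, p in zip(y_true, preds) if t == 1 and p == 1)
    fp = sum(1 for t, p in zip(y_true, preds) if t == 0 and p == 1)
    fn = sum(1 for t, p in zip(y_true, preds) if t == 1 and p == 0)
    precision = tp / (tp + fp) if (tp + fp) else 0.0
    recall = tp / (tp + fn) if (tp + fn) else 0.0
    # F1 is the harmonic mean of precision and recall.
    f1 = (2 * precision * recall / (precision + recall)
          if (precision + recall) else 0.0)
    return precision, recall, f1


y_true = [1, 1, 1, 0, 0, 0]
probs = [0.9, 0.6, 0.3, 0.7, 0.2, 0.1]
strict = precision_recall_f1(y_true, probs, threshold=0.8)    # favors precision
default = precision_recall_f1(y_true, probs, threshold=0.5)
lenient = precision_recall_f1(y_true, probs, threshold=0.25)  # favors recall
```

Sweeping the threshold over many values and plotting the resulting (recall, precision) pairs yields the precision-recall curve.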

You'll need to perform a standalone clinical assessment of your tool that uses an FDA validation set from a real-world clinical setting to prove to the FDA that your algorithm works. You will run this FDA validation set through your algorithm just ONCE.

You’ll need to identify a clinical partner who you can work with to gather the “BEST” data for your validation plan. This partner will collect data from a real-world clinical setting that you describe so that you can then see how your algorithm performs under these specifications.

You need to identify a clinical partner to gather the FDA validation set. First, you need to describe who you want the data from. Second, you need to specify what types of images you’re looking for.

You need to gather the ground truth that can be used to compare the model output tested on the FDA validation set. The choice of your ground truth method ties back to your intended use statement. Depending on the intended use of the algorithm, the ground truth can be very different.

For your validation plan, you need evidence to support your reasoning. As a result, you need a performance standard. This step usually involves a lot of literature searching.

Depending on the use case for your algorithm, part of your validation plan may need to include assessing how fast your algorithm can read a study.

Intended use statement: A statement given to the FDA that concisely describes what your algorithm does

510(k): An FDA regulatory pathway for medical devices of all three risk categories that is appropriate when a predicate device exists

PMA (Pre-market Approval): An FDA regulatory pathway for Class II and III devices that is mandated when a predicate device does not exist

Imaging modality: a device used to acquire a medical image

Magnetic Resonance Imaging scanner

Contrast resolution refers to the ability of any imaging modality to distinguish between differences in image intensity, and makes the most sense to optimize in this given scenario.

Contrast resolution: the ability of an imaging modality to distinguish between differences in image intensity

Spatial resolution: the ability of an imaging modality to differentiate between smaller objects

Surgeons, Interventional Radiologists, and other procedure-heavy fields use 3D medical imaging not only for diagnosis, but for guidance during an operation/procedure.

K-space: “raw” data generated by an MRI scanner. Images need to be reconstructed from it

Pulse sequence: a combination of magnetic fields and the sequence in which they are applied that results in a particular type of MR image

multi-planar reconstruction - extraction of non-primary imaging planes from a 3D volume

MRI Data construction

Extracting a 2D image in the coronal plane from an image that was acquired in the sagittal plane: extracting non-primary 2D planes from a volume

building 3D volume from voxels

Windowing: mapping the high-range intensity space to the 8-bit grayscale screen space

Registration: bringing two images into the same patient-centric coordinate system so that they can be overlaid on top of each other

Depending on the tasks you are dealing with, you might come across both registered and unregistered data in your datasets. Before starting to build models that leverage data for the same patient that comes from multiple modalities or multiple time points, you should think if your voxels match up in space or not. And if they don’t you might want to consider registering related volume pairs.
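Windowing, the mapping of a wide intensity range to 8-bit grayscale for display, can be sketched as a simple clip-and-rescale. The function name `apply_window` and the center and width values below are illustrative assumptions, and viewers that follow the DICOM standard may use a slightly different formula.

```python
def apply_window(values, center, width):
    """Clip intensities to [center - width/2, center + width/2] and rescale to 0..255."""
    low = center - width / 2
    high = center + width / 2
    out = []
    for v in values:
        clipped = min(max(v, low), high)  # everything outside the window saturates
        out.append(round((clipped - low) / (high - low) * 255))
    return out


# Hypothetical CT intensities and an assumed window of center 40, width 80.
raw = [-1000, 0, 20, 80, 3000]
display = apply_window(raw, center=40, width=80)
```

Values below the window map to black and values above it map to white, so a narrow window spends all 256 gray levels on the intensity range of interest.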

Vocabulary

Let us leave you with a little vocabulary of the many terms that have been introduced throughout this lesson:

Contrast resolution: the ability of an imaging modality to distinguish between differences in image intensity

Entity-Relationship Model

The DICOM standard defines Information Entities that represent various real-world entities and the relationships between them. The cornerstones of the DICOM standard are the following objects and relationships:

- Patient - is, naturally, the patient undergoing the imaging study. A patient object contains one or more studies.

- Study - a representation of a “medical study” performed on a patient. You can think of a study as a single visit to a hospital for the purpose of taking one or more images, usually within. A Study contains one or more series.

- Series - a representation of a single “acquisition sweep”. For example, the multiple slices that a CT scanner takes to compose a 3D image would be one image series. A set of MRI T1 images at different axial levels would also be called one image series. Series, among other things, consists of one or more instances.

- Instance - (or Image Information Entity instance) is an entity that represents a single scan, like a 2D image that is a result of filtered backprojection from CT or reconstruction at a given level for MR. Instances contain pixel data and metadata (Data Elements in DICOM lingo).
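The patient, study, series, and instance hierarchy described above can be sketched as a nested grouping of instance headers. The keys `PatientID`, `StudyInstanceUID`, `SeriesInstanceUID` and `SOPInstanceUID` are standard DICOM attribute keywords; the helper name `build_hierarchy` and the identifier values are made up for illustration.

```python
from collections import defaultdict


def build_hierarchy(instances):
    """Group instance headers as patient -> study -> series -> [instance UIDs]."""
    tree = defaultdict(lambda: defaultdict(lambda: defaultdict(list)))
    for inst in instances:
        patient = inst["PatientID"]
        study = inst["StudyInstanceUID"]
        series = inst["SeriesInstanceUID"]
        tree[patient][study][series].append(inst["SOPInstanceUID"])
    return tree


# Hypothetical headers: one patient, one study, two series.
instances = [
    {"PatientID": "P001", "StudyInstanceUID": "1.2.3",
     "SeriesInstanceUID": "1.2.3.1", "SOPInstanceUID": "1.2.3.1.1"},
    {"PatientID": "P001", "StudyInstanceUID": "1.2.3",
     "SeriesInstanceUID": "1.2.3.1", "SOPInstanceUID": "1.2.3.1.2"},
    {"PatientID": "P001", "StudyInstanceUID": "1.2.3",
     "SeriesInstanceUID": "1.2.3.2", "SOPInstanceUID": "1.2.3.2.1"},
]
tree = build_hierarchy(instances)
```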

NIFTI, which stands for Neuroimaging Informatics Technology Initiative, is an open standard. It started out as a format to store neurological imaging data and has slowly seen wider adoption across other types of biomedical imaging fields.

Some things that distinguish NIFTI from DICOM, though are:

- NIFTI is optimized to store serial data and thus can store entire image series (and even study) in a single file.

- NIFTI is not generated by scanners; therefore, it does not define nearly as many data elements as DICOM does. Compared to DICOM, there are barely any, and mostly they have to do with geometric aspects of the image. Therefore, NIFTI files by themselves cannot constitute a valid patient record but could be used to optimize storage, alongside some sort of patient info database.

- NIFTI files have fields that define units of measurements and while DICOM files store all dimensions in mm, it’s always a good idea to check what units of measurement are used by NIFTI.

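- When addressing voxels, DICOM and NIFTI orient their X, Y and Z axes differently: DICOM's patient coordinates follow the LPS convention (X increases toward the patient's left, Y toward the posterior, Z toward the superior), while NIFTI's world coordinates follow RAS (X toward the right, Y toward the anterior, Z toward the superior). Something to keep in mind, especially when mixing NIFTI and DICOM data.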

- NIFTI’s ability to store entire series in a single file is quite convenient when you are dealing with a curated research dataset. Also, NIFTI uses a different orientation of the cartesian coordinate system than DICOM.
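As a small illustration of the orientation difference, DICOM patient coordinates are conventionally LPS (left, posterior, superior) while NIFTI world coordinates are conventionally RAS (right, anterior, superior), so converting a point between the two amounts to negating the first two axes. The function name `lps_to_ras` and the sample point are assumptions.

```python
def lps_to_ras(point):
    """Convert an (x, y, z) point between LPS and RAS by flipping x and y.

    The same operation converts in both directions, because flipping
    two axes twice restores the original point.
    """
    x, y, z = point
    return (-x, -y, z)


dicom_point = (10.0, -5.0, 30.0)      # hypothetical LPS coordinates in mm
nifti_point = lps_to_ras(dicom_point)  # the same location in RAS
round_trip = lps_to_ras(nifti_point)
```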

- RSNA Intracranial Hemorrhage Detection Identify acute intracranial hemorrhage and its subtypes

- RSNA Pneumonia Detection Challenge Can you build an algorithm that automatically detects potential pneumonia cases?

- Data Science Bowl 2017 Can you improve lung cancer detection?

- RSNA-ASNR-MICCAI Brain Tumor Segmentation (BraTS) Challenge 2021

- windowing approaches

- See like a Radiologist with Systematic Windowing

- Some DICOM gotchas to be aware of (fastai)

- DICOM - Digital Imaging and Communications in Medicine. The standard defining the storage and communication of medical images.

- DICOM Information Object - representation of a real-world object (such as an MRI scan) per DICOM standard.

- IOD - Information Object Definition. Definition of an information object. Information Object Definition specifies what metadata fields have to be in place for a DICOM Information Object to be valid. IODs are published in the DICOM standard.

- Patient - a subject undergoing the imaging study.

- Study - a representation of a “medical study” performed on a patient. You can think of a study as a single visit to a hospital for the purpose of taking one or more images, usually within. A Study contains one or more series.

- Series - a representation of a single “acquisition sweep”. For example, the multiple slices that a CT scanner takes to compose a 3D image would be one image series. A set of MRI T1 images at different axial levels would also be called one image series. Series, among other things, consists of one or more instances.

- Instance - (or Image Information Entity instance) is an entity that represents a single scan, like a 2D image that is a result of filtered backprojection from CT or reconstruction at a given level for MR. Instances contain pixel data and metadata (Data Elements in DICOM lingo).

- SOP - Service-Object Pair. DICOM standard defines the concept of an Information Object, which is the representation of a real-world persistent object, such as an MRI image (DICOM Information Objects consist of Information Entities).

- Data Element - a DICOM metadata “field”, which is uniquely identified by a tuple of integer numbers called group id and element id.

- VR - Value Representation. This is the data type of a DICOM data element.

- Data Element Type - identifiers that are used by Information Object Definitions to specify if Data Elements are mandatory, conditional or optional.

- NIFTI - Neuroimaging Informatics Technology Initiative, is an open standard that is used to store various biomedical data, including 3D images.

When it comes to classification and object detection problems, the key to solving those is identifying relevant features in the images, or feature extraction. Not so long ago, machine learning methods relied on manual feature design. With the advent of CNNs, feature extraction is done automatically by the network, and the job of a machine learning engineer is to define the general shape of such features. As the name implies, features in Convolutional Neural Networks take the shape of convolutions. In the next section, let’s take a closer look at some of the types of convolutions that are used for 3D medical image analysis.

Classification - the problem of determining which one of several classes an image belongs to.

Object Detection - the problem of finding a (typically rectangular) region within an image that matches with one of several classes of interest.

2D Convolution is an operation where a convolutional filter is applied to a single 2D image. Applying a 2D convolution approach to a 3D medical image would mean applying it to every single slice of the image. A neural network can be constructed to either process slices one at a time, or to stack such convolutions into a stack of 2D feature maps. Such an approach is the fastest of all and uses the least memory, but fails to use any information about the topology of the image in the 3rd dimension.

2.5D Convolution is an approach where 2D convolutions are applied independently to areas around each voxel (either in neighboring planes or in orthogonal planes) and their results are summed up to form a 2D feature map. Such an approach leverages some 3-dimensional information.

3D Convolution is an approach where the convolutional kernel is 3-dimensional and thus combines information from all 3 dimensions into the feature map. This approach leverages the 3-dimensional nature of the image, but uses the most memory and compute resources.

Understanding these is essential to being able to put together efficient deep neural networks where convolutions together with downsampling are used to extract higher-order semantic features from the image.

Assuming that you keep input and output images in memory, what is the minimum amount of memory in bytes that you need to allocate in order to compute a convolutional feature map of size 28x28 from an image of size 30x30 using a 3x3 convolutional kernel? Assume 16 bits per pixel and 16 bits for each parameter of the kernel.

3386 "Correct, you need to store at least the original image - 30x30x2 bytes, the output image - 28x28x2 bytes, and the convolutional kernel - 3x3x2 bytes, which adds up to 1800 + 1568 + 18 = 3386 bytes. Now, you can try and compute how this requirement changes for 2.5d and 3d approaches."
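The arithmetic behind this kind of estimate can be sketched as a small helper. It assumes stride 1, no padding, the same number of bytes for every stored value, and the 3x3 kernel stated in the question; the 30-slice volume in the second call is a hypothetical extension, not part of the quiz.

```python
def conv_memory_bytes(input_shape, kernel_shape, bytes_per_value=2):
    """Bytes needed to hold the input, the 'valid' output, and the kernel."""
    def count(shape):
        n = 1
        for s in shape:
            n *= s
        return n

    # Without padding, each output dimension shrinks by (kernel size - 1).
    output_shape = tuple(i - k + 1 for i, k in zip(input_shape, kernel_shape))
    total_values = count(input_shape) + count(output_shape) + count(kernel_shape)
    return total_values * bytes_per_value


mem_2d = conv_memory_bytes((30, 30), (3, 3))          # 1800 + 1568 + 18 bytes
mem_3d = conv_memory_bytes((30, 30, 30), (3, 3, 3))   # hypothetical 30x30x30 volume
```

Under these assumptions the single 2D slice needs a few kilobytes, while the 3D case grows with the cube of the side length, which is why 3D convolutions are the most memory-hungry option.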

- A guide to convolution arithmetic for deep learning

- Improving Computer-aided Detection using Convolutional Neural Networks and Random View Aggregation

- A New 2.5D Representation for Lymph Node Detection using Random Sets of Deep Convolutional Neural Network Observations

- 3D multi-view convolutional neural networks for lung nodule classification

Segmentation - the problem of identifying which specific pixels within an image belong to a certain object of interest.

- Longitudinal follow up: Measuring volumes of things and monitoring how they change over time. These methods are very valuable in, e.g., oncology for tracking slow-growing tumors.

- Quantifying disease severity: Quite often, it is possible to identify structures in the organism whose size correlates well with the progression of the disease. For example, the size of the hippocampus can tell clinicians about the progression of Alzheimer's disease.

- Radiation Therapy Planning: One of the methods of treating cancer is exposing the tumor to ionizing radiation. In order to target the radiation, an accurate plan has to be created first, and this plan requires careful delineation of all affected organs on a CT scan.

- Novel Scenarios: Segmentation is a tedious process that is not often done in clinical practice. However, knowing the sizes and extents of the objects holds a lot of promise, especially when combined with other data types. For example, the field of radiogenomics studies how the quantitative information obtained from radiological images can be combined with the genetic-molecular features of the organism to discover information that was not accessible before.

A certain part of the population has risk factors that make them susceptible to early lung cancer - these could be things like smoking, routine exposure to certain substances, or family history. A combination of these risk factors makes people good candidates for routine lung cancer screening, since early detection can lead to very positive outcomes. Routine lung cancer screening is done by taking a low-dose CT image and then looking for dense areas in the lungs, or lung nodules. Quite often, if lung nodules are found, they need to be monitored to see how they grow, as the presence of nodules per se does not necessarily mean that intervention is needed. The most important question a radiologist would need to answer is: which nodules have increased in size since the last time a scan was taken?

Would a classification or segmentation algorithm be a good tool to assist the radiologist? Why?

The segmentation algorithm would make the radiologists’ job much easier since measuring volume needs accurate delineation of the extent of the nodules. However, in a comprehensive AI system, a classification or object detection algorithm may also assist in the screening process, providing a second read of the image and flagging those where nodules are initially found.
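As a rough illustration of why segmentation helps here, the sketch below computes nodule volume from a binary mask and compares two time points. The masks, the voxel spacing, and the function names are all hypothetical; real masks would come from a segmentation model and the spacing from the scan metadata.

```python
def mask_volume_mm3(mask, spacing_mm):
    """Volume of a binary mask: number of voxels times the volume of one voxel."""
    voxel_count = sum(v for slice_ in mask for row in slice_ for v in row)
    dz, dy, dx = spacing_mm
    return voxel_count * dz * dy * dx


def percent_change(baseline, follow_up):
    """Relative change between two measurements, in percent."""
    return (follow_up - baseline) / baseline * 100.0


# Hypothetical masks [slice][row][col] of the same nodule in two scans.
baseline_mask = [[[0, 1], [1, 1]], [[0, 0], [1, 0]]]    # 4 voxels
follow_up_mask = [[[1, 1], [1, 1]], [[0, 1], [1, 0]]]   # 6 voxels
spacing = (1.0, 0.5, 0.5)  # assumed voxel spacing in mm (z, y, x)

v0 = mask_volume_mm3(baseline_mask, spacing)
v1 = mask_volume_mm3(follow_up_mask, spacing)
growth = percent_change(v0, v1)
```

A classification output alone (nodule present or not) could not produce this number, which is the point the answer above makes.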

- unet

- Brain Tumor Segmentation | UNets | PyTorch | Monai

- Brain MRI segmentation

- U-Net: Convolutional Networks for Biomedical Image Segmentation

- Medical Segmentation Decathlon Generalisable 3D Semantic Segmentation

- notebook

- Upsampling

- Fully Convolutional Networks for Semantic Segmentation

- Hounsfield

- Radiogenomics: bridging imaging and genomics

- Ground Truth

- Autosegmentation of prostate anatomy for radiation treatment planning using deep decision forests of radiomic features

- Metrics for evaluating 3D medical image segmentation: analysis, selection, and tool

You're building a ML model to segment blood vessels in chest CT scans. Since blood vessels are only a few voxels in diameter, it's possible that the predicted shape might be very similar to ground truth but predicted voxels will not match GT precisely. Which of the following metrics would be best if you wanted to rate this type of prediction similarly to one that labels all the voxels precisely?

Hausdorff distance will assign similar scores to a result that matches up with all the voxels and to one where none of the voxels match up but the structure is very close to the target.
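The following sketch contrasts an overlap-based metric (Dice) with the Hausdorff distance on a toy thin structure. It is a brute-force implementation in plain Python, assuming both segmentations are given as non-empty sets of voxel coordinates; the vessel itself is hypothetical.

```python
import math


def dice(a, b):
    """Dice overlap between two sets of voxel coordinates."""
    return 2 * len(a & b) / (len(a) + len(b))


def hausdorff(a, b):
    """Symmetric Hausdorff distance between two non-empty coordinate sets."""
    def directed(src, dst):
        # Largest distance from a point in src to its nearest point in dst.
        return max(min(math.dist(p, q) for q in dst) for p in src)
    return max(directed(a, b), directed(b, a))


# Hypothetical thin vessel: a one-voxel-wide line along x.
ground_truth = {(x, 5, 5) for x in range(20)}
exact = set(ground_truth)                     # every voxel matches
shifted = {(x, 6, 5) for x in range(20)}      # same shape, one voxel off in y

dice_exact = dice(ground_truth, exact)
dice_shifted = dice(ground_truth, shifted)
hd_exact = hausdorff(ground_truth, exact)
hd_shifted = hausdorff(ground_truth, shifted)
```

In this example Dice drops from a perfect score to zero for the shifted prediction, while the Hausdorff distance only moves from 0 to 1 voxel, so the two predictions are rated as nearly equivalent.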

- Using deep learning to increase the resolution of low-res scans

- GANs for synthetic MRI

- A survey of deep learning methods for medical image registration

- Overview of opportunities for deep learning on MRIs

- Fast.ai - Python library for medical image analysis, with a focus on ML

- MedPy - a library for medical image processing with lots of various higher-order processing methods

- Deepmedic, a library for 3D CNNs for medical image segmentation

- A publication about a project dedicated to large-scale medical imaging ML model evaluation which includes a comprehensive overview of annotation tools and related problems (including inter-observer variability)

- a boilerplate for machine learning experiment

- tooling for data augmentation

- deep learning with a special section on computer vision by Alexander Smola et al. Alexander has a strong history of publications on machine learning algorithms and statistical analysis and is presently serving as a director for machine learning at Amazon Web Services in Palo Alto, CA

- a book on general concepts of machine learning by Christopher Bishop et al. Christopher has a distinguished career as a machine learning scientist and presently is in charge of Microsoft Research lab in Cambridge, UK, where I had the honor to work on project InnerEye for several years

- resource

- Interactive Organ Segmentation using Graph Cuts

- Probabilistic Graphical Models for Medical Image Segmentation

- Awesome Semantic Segmentation

DICOM networking comes in two flavors: DIMSE and DICOMWeb. The former is designed to support data exchange in protected clinical networks that are largely isolated from the Internet. The latter is a set of RESTful APIs that are designed to communicate over the Internet.

- DIMSE networking does not have a notion of authentication and is prevalent inside hospitals.

- DIMSE networking defines how DICOM Application Entities talk to each other on protected networks.

- DICOM Application Entities that talk to each other take on the roles of Service Class Provider (SCP), an AE that provides services over a DIMSE network, and Service Class User (SCU), an AE that requests service from an SCP. SCPs typically respond to requests and SCUs issue them. The full list of DIMSE services can be found in Part 7 of the DICOM Standard; the ones that you are most likely to run into are:

- C-Echo - “DICOM ping” - checks if the other party can speak DICOM

- C-Store - request to store an instance

- An Application Entity (AE) is an actor on a network (e.g. a medical imaging modality or a PACS) that can exchange DIMSE messages. It is defined by three parameters:

- Port

- IP Address

- Application Entity Title (AET) - an alphanumeric string
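The three parameters above can be captured in a small data structure. This is only a sketch of how one might represent an AE in configuration code: the class name, titles, and addresses are hypothetical, and the checks are basic sanity checks (DICOM limits AE titles to 16 characters) rather than a full validation of the standard.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class ApplicationEntity:
    """The three values that identify a DIMSE Application Entity."""
    ae_title: str
    host: str
    port: int

    def __post_init__(self):
        # DICOM limits AE titles to 16 characters; blank titles are rejected too.
        if not self.ae_title.strip() or len(self.ae_title) > 16:
            raise ValueError("AE title must be 1-16 characters")
        if not 1 <= self.port <= 65535:
            raise ValueError("port must be in 1..65535")


# Hypothetical peers: the archive answers requests (SCP), the scanner issues them (SCU).
pacs = ApplicationEntity("ARCHIVE_SCP", "10.0.0.5", 104)
ct = ApplicationEntity("CT_SCANNER_1", "10.0.0.17", 11112)
```

Both sides of a DIMSE connection typically need each other's triplet configured in advance, which is one reason deployments inside hospitals involve coordination with the PACS administrators.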

Clinical networking is an industry on its own and an AI engineer will probably be exposed to a very small subset of that. So, it’s important to understand the basics - what are the systems that are important to a clinical network and how do they all fit together.

- PACS - Picture Archiving and Communication System. An archive for medical images. A PACS product typically also includes “diagnostic workstations” - software for radiologists that is used for viewing and reporting on medical images.

- VNA - Vendor Neutral Archive. A PACS that is not tied to a particular equipment manufacturer. A newer generation of PACS. Often deployed in a cloud environment.

- EHR - Electronic Health Record. A system that stores clinical and administrative information about the patients. If you’ve been to a doctor’s office where they would pull your information on a computer screen and type up the information - it is an EHR system that they are interacting with. EHR system typically interfaces with all other data systems in the hospital and serves as a hub for all patient information. You may also see the acronym “EMR”, which typically refers to the electronic medical records stored by the EHR systems.

- RIS - Radiology Information System. Think of those as “mini-EHRs” for radiology departments. These systems hold patient data, but they are primarily used to schedule patient visits and manage certain administrative tasks like ordering and billing. RIS typically interacts with both PACS and EHR.

- HL7 - Health Level 7. A protocol used to exchange patient data between systems as well as data about physician orders (lab tests, imaging exams)

- FHIR - Fast Healthcare Interoperability Resources. Another protocol for healthcare data exchange. HL7 dates back to the '80s and many design decisions of this protocol start showing their age. You can think of FHIR as the new generation of HL7 built for the open web.

When you start thinking of deploying your AI algorithms, you will want to set some requirements as to data that is sent to these algorithms, and the environment they operate in. When defining those, you may want to think of the following:

- Series selection. As we’ve seen, modalities typically use C-STORE requests to send entire studies. How are you going to identify images/series that your algorithms will process?

- Imaging protocols. There are lots of ways images can be acquired - we’ve talked about MR pulse sequences, and there are also physiological parameters, like contrast media or FoV. How do you make sure that your algorithm processes images that are consistent with what it has been trained on?

- Workflow disruptions. If the algorithm introduces something new into the radiologists' workflow, how is this interaction going to happen?

- Interfaces with existing systems. If your algorithm produces an output, where does it go? What should the systems processing your algorithm’s output be capable of doing?
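One simple way to approach series selection is to filter on metadata before any pixel data reaches the model. The sketch below assumes each series has already been summarized as a dictionary keyed by DICOM attribute names (Modality, SeriesDescription, SeriesInstanceUID); the study contents, UIDs, and matching rule are hypothetical, and series descriptions vary between sites, so real deployments usually need site-specific rules.

```python
def select_series(series_list, modality, keywords):
    """Keep series whose modality and description match what the model expects."""
    selected = []
    for series in series_list:
        description = series.get("SeriesDescription", "").upper()
        if (series.get("Modality") == modality
                and all(k.upper() in description for k in keywords)):
            selected.append(series)
    return selected


# Hypothetical study as it might arrive via C-STORE, one dict per series.
incoming_study = [
    {"SeriesInstanceUID": "1.2.3.1", "Modality": "MR", "SeriesDescription": "AX T1 POST"},
    {"SeriesInstanceUID": "1.2.3.2", "Modality": "MR", "SeriesDescription": "AX T2 FLAIR"},
    {"SeriesInstanceUID": "1.2.3.3", "Modality": "SR", "SeriesDescription": "Dose report"},
]

to_process = select_series(incoming_study, "MR", ["T1", "POST"])
```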

- Digital Imaging and Communications in Medicine (DICOM)

- Understanding and Using DICOM, the Data Interchange Standard for Biomedical Imaging

- As usual, the DICOM standard is a great reference for DICOM networking

- dcmtk tool

FDA MEDICAL DEVICE

An instrument, apparatus, implement, machine, contrivance, implant, in vitro reagent, or other similar or related article, including a component part, or accessory which is:

- recognized in the official National Formulary, or the United States Pharmacopoeia, or any supplement to them,

- intended for use in the diagnosis of disease or other conditions, or in the cure, mitigation, treatment, or prevention of disease, in man or other animals, or

- intended to affect the structure or any function of the body of man or other animals, and which does not achieve its primary intended purposes through chemical action within or on the body of man or other animals …

- FDA Clearances

- FDA quality management

‘medical device’ means any instrument, apparatus, appliance, software, implant, reagent, material or other article intended by the manufacturer to be used, alone or in combination, for human beings for one or more of the following specific medical purposes:

- diagnosis, prevention, monitoring, prediction, prognosis, treatment or alleviation of disease,

- diagnosis, monitoring, treatment, alleviation of, or compensation for, an injury or disability,

- investigation, replacement or modification of the anatomy or of a physiological or pathological process or state,

- providing information by means of in vitro examination of specimens derived from the human body, including organ, blood and tissue donations,

- European and Canadian regulators require compliance

The pattern here is that something built with the purpose of diagnosing, preventing, or treating a disease is potentially a medical device that should conform with certain standards of safety and engineering rigor. The degree of rigor that the regulatory bodies require depends on the risk class of the device. For a device in a lower risk class (like a sterile bandage), it is often sufficient to just notify the respective regulatory body that the device is being launched into the market, while for high-risk devices (like an implantable defibrillator) there are requirements for clinical testing and engineering practices.

While “medical device” may sound like something that does not have much to do with software, the regulatory bodies actually include software in this notion as well, often operating with the concept of “software-as-a-medical-device”. Sometimes a distinction is made between a “medical device with embedded software” (like a CT scanner) and a “software-only medical device” (like a PACS).

The key thing that determines whether something is a medical device or not, and what class it is, is its “intended use”. The same device may have different risk classes (or not qualify as a medical device at all) depending on what use you have in mind for it. A key takeaway here is that the presence of an “AI algorithm” in a system that is used by clinicians does not automatically make it a medical device. You need to articulate the intended use of the system before you try to find out what the regulatory situation is for your product.

- HIPAA - Health Insurance Portability and Accountability Act - key legislation in the USA that among other things defines the concept of Protected Health Information and rules around handling it.

- GDPR - General Data Protection Regulation - European legislation that defines the principles of handling personal data, including health data.

- FDA’s guidance for software validation

- webinars on all things medical

- Article by American College of Radiology

- Proposed Regulatory Framework for Modifications to Artificial Intelligence/Machine Learning (AI/ML)-Based Software as a Medical Device

- PACS - Picture Archiving and Communication System. An archive for medical images. A PACS product typically also includes “diagnostic workstations” - software for radiologists that is used for viewing and reporting on medical images.

- VNA - Vendor Neutral Archive. A PACS that is not tied to a particular equipment manufacturer. A newer generation of PACS. Often deployed in a cloud environment.

- EHR - Electronic Health Record. A system that stores clinical and administrative information about the patients. EHR system typically interfaces with all other data systems in the hospital and serves as a hub for all patient information. You may also see the acronym “EMR”, which typically refers to the electronic medical records stored by the EHR systems or sometimes used interchangeably with EHR.

- RIS - Radiology Information System. Think of those as “mini-EHRs” for radiology departments. These systems hold patient data, but they are primarily used to schedule patient visits and manage certain administrative tasks like ordering and billing. RIS typically interacts with both PACS and EHR.

- HL7 - Health Level 7. A protocol used to exchange patient data between systems as well as data about physician orders (lab tests, imaging exams)

- FHIR - Fast Healthcare Interoperability Resources. Another protocol for healthcare data exchange. HL7 dates back to the '80s and many design decisions of this protocol start showing their age. You can think of FHIR as the new generation of HL7 built for the open web.

- DIMSE - DICOM Message Service Element. A definition of the network messages used by medical imaging systems to talk to each other. Often refers to the overall subset of the DICOM standard that defines how medical images are moved on local networks.

- DICOMWeb - RESTful API for storing and querying DICOM archives. A relatively recent update to the networking portion of the DICOM protocol.

- Application Entity - an actor on a network (e.g. a medical imaging modality or a PACS) that can talk DIMSE messages. Often the abbreviation “AE” is used. An Application Entity is uniquely defined by IP address, Port and an alphanumeric string called “Application Entity Title”.

- SCP - Service Class Provider - an AE that provides services over DIMSE network

- SCU - Service Class User - an AE that requests service from an SCP.

- FDA - Food and Drug Administration - a regulatory body in the USA that, among other things, creates and enforces regulations that define the operation of medical devices, including AI in medicine. Many regulatory agencies in other countries use regulatory frameworks very similar to that used by the FDA.

- HIPAA - Health Insurance Portability and Accountability Act - key legislation in the USA that among other things defines the concept of Protected Health Information and rules around handling it.

- GDPR - General Data Protection Regulation - European legislation that defines the principles of handling personal data, including health data.

EHR data is the data being collected when we see a doctor, pick up a prescription at the pharmacy, or even from a visit to the dentist. These are just a few of the examples where EHR data is collected.

This data is used for a variety of use cases, from personalizing healthcare to discovering novel drugs and treatments to helping providers diagnose patients better and reduce medical errors.

- Health Record: A patient's documentation of their healthcare encounters and the data created by the encounters across time

- EHR: Electronic Health Record

- HIPAA: Health Insurance Portability and Accountability Act

EHR is also commonly referred to as EMR: Electronic Medical Record

A few other fantastic uses of AI in EHR include:

- Mapping of our genes in Genomics

- Analyzing data from clinical trials

- Predicting a diagnosis for patients

Protected Health Information (PHI) is the part of HIPAA that protects the transmission of certain types of personally identifiable information such as name, address, and other info. Certain information in an electronic medical record is considered PHI and must comply with HIPAA standards around data security and privacy. This informs not only how you transmit and store data but also data usage rights and restrictions around building models for purposes other than the original use.

Covered Entities are industry organizations that HIPAA defines as belonging to one of three groups: health insurance plans, providers, or clearinghouses. You can see from the table the types of entities in each category.

These groups transmit protected health information and are subject to HIPAA regulations regarding these transmissions.

Other Covered Entities:

- Business Associates: A business associate is a person or entity that performs certain functions or activities that involve the use or disclosure of protected health information on behalf of, or provides services to, a covered entity.

- Covered entities can disclose to BAs under Privacy Rule

- Only for a purpose allowed by the covered entity

- Safeguard data against misuse

- Comply with other requirements of the covered entity under HIPAA Privacy Rule

- Business Associates Agreement/Addendum (BAA): the contract between a covered entity and a BA. Related resources: Covered Entities, Sample Business Associate Agreement, Business Associate Guidance

De-identifying a dataset refers to the removal of identifying fields like name, address from a dataset. De-Identification is done to reduce privacy risks to individuals and support the secondary use of data for research and such.

This is not something you should be doing on your own. HIPAA has two ways that you can use to de-identify a dataset.

The first method is the Expert Determination Method, in which a statistician determines that there is only a small risk that an individual could be identified.

The second method is called Safe Harbor and it refers to the removal of 18 identifiers like name, zip code, etc.

Limited Latitude: Very limited scope of work. EHR Data can only be used for the purpose granted. De-Identification Rationale

- Be aware of what you do not know

- Your organization likely has compliance protocols and rules

- There is required HIPAA training for those in the US

- Privacy is evolving

- Industrial methods are gaining more acceptance across different fields

- Healthcare methods are also gaining acceptance

Exploratory Data Analysis

EDA is a step in the data science process that is often overlooked in favor of the modeling and evaluation phases, which can be easier to quantify and benchmark.

CRISP-DM stands for “cross-industry standard process for data mining”. It is a common framework used for data science projects and includes a series of steps from business understanding to deployment.

EDA and CRISP-DM

As you can see from the image above, EDA falls in the Data Understanding phase of CRISP-DM.

- EDA can enable you to discover features or data transformations/aggregations that might have data leakage. This can save a tremendous amount of time and prevent you from building a flawed model.

- EDA can help you better translate and define modeling objectives and corresponding evaluation metrics from a machine learning/data science and business perspective.

- EDA can help inform strategies for handling missing/null/zero valued data. Missing values are a common issue with EHR data, and you will have to determine imputing strategies accordingly.

- EDA can help to identify subsets of features to utilize for feature engineering and modeling along with appropriate feature transformations based off of type (e.g. categorical vs numerical features)

- Normal is the well-known bell-curve that most people are familiar with and is also referred to as a Gaussian distribution

- The uniform distribution is where the unique values have almost the same frequency. This is important because it might indicate some issue with the data

- Skewed/unbalanced data distributions as the name indicates are where a smaller subset of values or a single value dominates

Missing values are especially common in healthcare where you may have incomplete records or some fields are sparsely populated

MCAR stands for Missing Completely at Random. This means that the data is missing due to something unrelated to the data and there is no systematic reason for the missing data. In other words, there is an equal probability that data is missing for all cases. This is often due to an instrumentation problem, like a broken instrument, or a process issue where some of the data is randomly missing.

MAR refers to Missing at Random. This is the opposite case, where there is some systematic relationship between the data and the probability of missing data. For example, there might be some missing demographics choices in surveys.

MNAR is Missing Not at Random, and this usually means there is a relationship between a value in the dataset and the missing values.

Understanding why data is missing helps with choosing the best imputing method to fill or drop the values in your dataset.

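    import pandas as pd

    # check_null_values is an illustrative name for the helper.
    def check_null_values(df):
        """Return the percentage of null and zero values in each column."""
        null_df = pd.DataFrame({'columns': df.columns,
                                'percent_null': df.isnull().sum() * 100 / len(df),
                                'percent_zero': df.isin([0]).sum() * 100 / len(df)
                                })
        return null_df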

Cardinality refers to the number of unique values that a feature has. It is relevant to EHR datasets because code sets such as diagnosis codes contain on the order of tens of thousands of unique codes. Cardinality only applies to categorical features, and high cardinality is a problem because it increases dimensionality and makes training models much more difficult and time-consuming.

To identify high-cardinality features:

- Determine if it is a categorical feature.

- Determine if it has a high number of unique values. This can be a bit subjective, but we can probably agree that a field with 2 unique values would not have high cardinality, whereas a field like diagnosis codes, which might have tens of thousands of unique values, would.

- Use the nunique() method to return the number of unique values for the categorical features above.
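A cardinality check along these lines could look as follows in pandas. The helper name, the threshold of 50, and the toy DataFrame are assumptions for illustration; treating every non-numeric column as categorical is also a simplification.

```python
import pandas as pd


def high_cardinality_columns(df, threshold=50):
    """Return non-numeric columns with more unique values than the threshold."""
    categorical = df.select_dtypes(exclude="number")  # simplification: non-numeric = categorical
    counts = categorical.nunique()                    # unique values per column
    return counts[counts > threshold].sort_values(ascending=False)


# Hypothetical encounter table: 2 genders, 100 distinct diagnosis codes.
df = pd.DataFrame({
    "gender": ["F", "M"] * 50,
    "primary_diagnosis_code": [f"D{i:03d}" for i in range(100)],
    "age": list(range(100)),
})

result = high_cardinality_columns(df)
```

Here only the diagnosis code column is flagged. The numeric age column is ignored even though it has many unique values, since cardinality in this sense applies to categorical features.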

The reason that demographic analysis is so important, especially in healthcare, is that we need our clinical trials and machine learning models to be representative of the general population. While this is not always completely possible given limited trials and very rare conditions, it is something we need to strive for, and we should identify as early as possible if there may be an issue.

If we don't have a properly representative demographic dataset, we wouldn't know how a drug or prediction might impact a certain age, race or gender, which could lead to significant issues for those not represented.

While there are many reasons for this, you need to make sure that your datasets will be representative of the population you will be attempting to serve. If they are not, the models created later will not generalize well in production.

- Diagnosis Codes

- ICD10-CM

- Procedure Codes

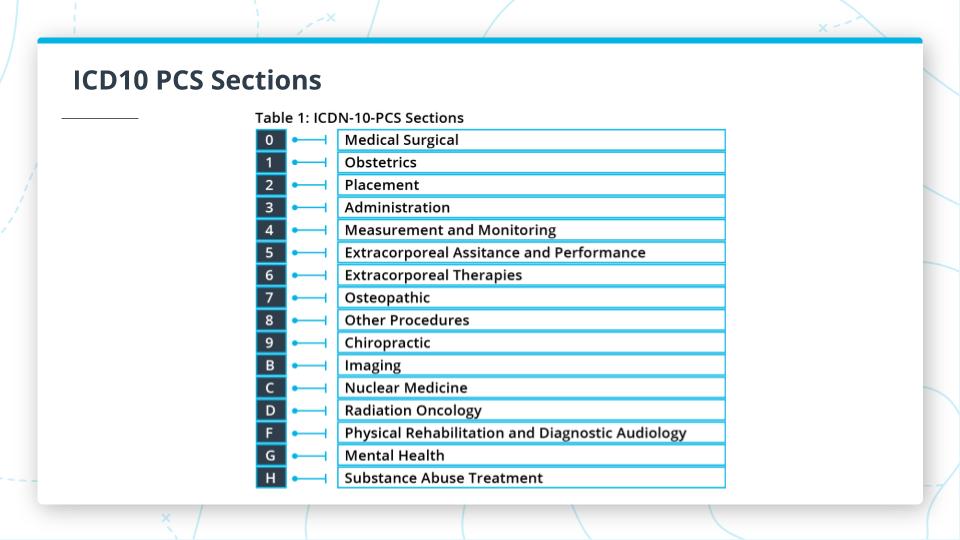

- ICD10 - PCS

- CPT

- HCPCS

- Medication Codes

- NDC Codes

- RXNorm

- Using these codes to group and categorize your datasets

- Using CCS

There are many different providers and EHR systems around the world. There needs to be a standard way to encode common diagnoses, medications, procedures, and lab test results across all these providers and systems. We will focus on some of the most common code sets that allow for some of the most high-value analysis.

In Healthcare, the different diagnosis code sets are extremely important and can sometimes be tricky to deal with. But don't worry, when you are done with this section, you'll be able to crack the code like a pro!

Let’s imagine that you see a doctor and you have some symptoms such as coughing and a stomach ache and then your doctor magically comes up with a diagnosis. As we will learn later this diagnosis is a key piece of information that connects so much of the encounter experience together.

ICD10

- ICD10: International Classification of Diseases 10

- Standard developed by the WHO (World Health Organization), now in its 10th revision

ICD10-CM

- ICD10-CM: International Classification of Diseases 10 - Clinical Modification

- Diagnosis code standard used in the U.S.

- Maintained by the U.S. CDC

- Contains a wide variety of diseases and conditions

- Used for medical claims, disease epidemic, and mortality tracking

ICD10-CM code structure: [ XXX ].XXX X

The first part is the category of the diagnosis. It is the first three characters and could be, for example, an S code for the injury category. There are 21 different categories, and these range from diseases of the respiratory system to injury, poisoning, and certain other consequences of external causes.

The second part is the etiology, anatomic site, and manifestation part, which can be up to 3 more characters and is essentially the cause of a condition or disease or the location of the condition.

Finally, the third part is the extension, which is the last character and can be tricky because it can often be null or have an X placeholder. It is often used with injury-related codes, referring to the episode of care.

Again we will go over this more in-depth in the next section, but see if you can use your intuition to figure out the correct code for normal first pregnancy in the third trimester?

The answer for this one is Z34.03, where Z34 stands for encounter for supervision of normal first pregnancy and the 03 indicates the 3rd trimester.
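The three-part layout described above can be split mechanically. This is a minimal sketch that only looks at character positions (3 for the category, up to 3 for etiology/site/manifestation, 1 for the extension); it does not check that a code actually exists, and the function and key names are made up for illustration.

```python
def parse_icd10cm(code):
    """Split an ICD10-CM code into category, detail, and extension by position."""
    normalized = code.strip().upper().replace(".", "")
    if not 3 <= len(normalized) <= 7:
        raise ValueError("expected 3 to 7 characters besides the dot")
    return {
        "category": normalized[:3],                      # e.g. Z34
        "etiology_site_manifestation": normalized[3:6],  # up to 3 characters
        "extension": normalized[6:7],                    # empty when absent
    }


pregnancy = parse_icd10cm("Z34.03")
# An injury-style code with all 7 characters, used only to show the layout.
injury = parse_icd10cm("S52.521A")
```

For Z34.03 the extension comes back empty, which matches the note above that the last character is often null.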

At a high level, it is important to distinguish which code is taking up the most resources or is the most critical, and there are a few terms that you should become familiar with.

Principal Diagnosis Code: The diagnosis that is found after hospitalization to be the one chiefly responsible for the admission. This can be an important distinction since the admitting diagnosis code can differ widely from the final principal diagnosis. For the most part, these terms are used interchangeably, but it's good to be aware of the differences and the need to dig into the details when necessary.

For example, if a patient were to have a knee replacement surgery but had type 2 diabetes as a prior condition, the secondary diagnosis code of type 2 diabetes would be included in the medical record.

Note: Secondary diagnosis codes can include many additional codes

One great method for grouping codes is the str.startswith() method. While there are a lot of medical codes, the fact that they follow a clear categorization system works in your favor here.

Essentially, you use str.startswith() by providing the leading characters you are looking for in your code, for example str.startswith('4') or str.startswith('D35'). While this is probably just a good review for you, the important part here is that you know how to find the right codes to search for in the first place.
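A short sketch of prefix-based grouping with Python's built-in str.startswith(), which also accepts a tuple of prefixes. The helper name and the sample code strings are illustrative only.

```python
def codes_with_prefix(codes, prefixes):
    """Return the codes that start with any of the given prefixes."""
    prefixes = tuple(p.upper() for p in prefixes)   # startswith accepts a tuple
    return [c for c in codes if c.upper().startswith(prefixes)]


# Sample code strings for illustration only.
codes = ["Z34.03", "D35.2", "S52.521A", "D35.00", "Z34.90"]

d35_group = codes_with_prefix(codes, ["D35"])
z_or_s_group = codes_with_prefix(codes, ["Z34", "S"])
```

On a pandas column the same idea is available as `Series.str.startswith`, so a boolean mask such as `df["code"].str.startswith("D35")` can be used to filter rows (the column name is an assumption).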

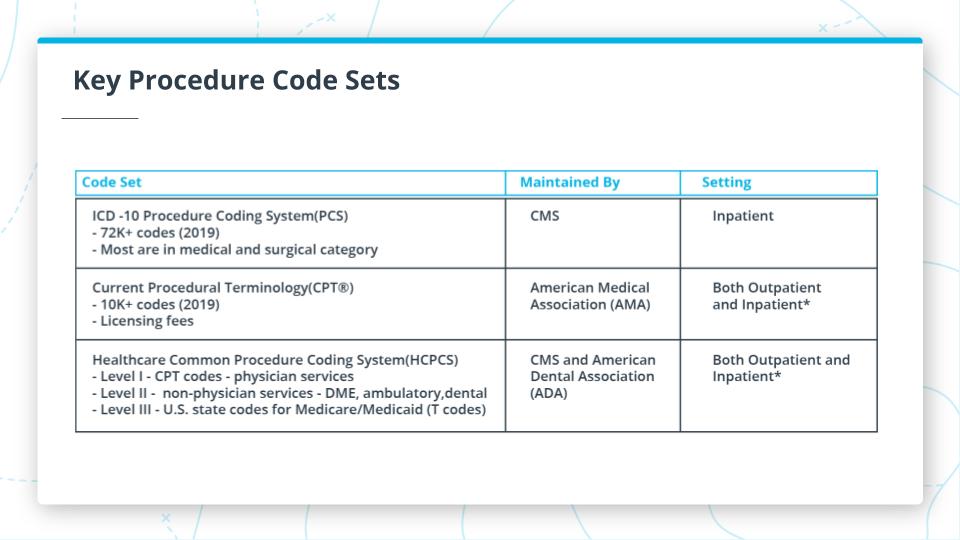

Procedure Codes: the categorization of the medical codes during an encounter. It's important to note if a procedure is inpatient or outpatient.

Key Procedure Code Sets

The graphic above shows some additional information about 3 of the important code sets. Here are the key points about each of them.

- ICD10 PCS: Procedure Coding System

- Only for Inpatient

- 72,000+ codes as of 2019

- Focus on medical and surgical

- CPT: Current Procedural Terminology

- Outpatient focused but can apply to physician visits in ambulatory settings

- 10,000+ codes as of 2019

- Focus on professional services by physician

- HCPCS: Healthcare Common Procedure Coding System

- Inpatient and outpatient

- Has 3 levels

- L1: CPT Codes

- L2: Non-physician services

- DME: Durable Medical Equipment

- Ambulatory Services

- Dental

- L3: Medicare/Medicaid related

ICD10-PCS Code Structure

- 7 alphanumeric characters [A-Z, 0-9]

- 1st character is the Section

- Reference Section codes categories in the table above

- Subsequent characters relate to the Section and give:

- Body System

- Body Part

- Approach

- Device used for a procedure

CPT Codes CPT codes have the following structure:

- Up to 5 numbers

- 3 Categories

- Category 1: Billable codes

- 10 sections largely split along biological systems which are broken out in the image above

- Category 2:

- Five characters that end in F

- Non-billable

- Performance measure focused on physicals and patient history

- Category 3:

- Services and procedures using emerging technology

- Understanding CPT Codes

- CMS.gov Database Download for CPT Codes

- CMS.gov HCPCS Codes Information

- HCPCS Wikipedia

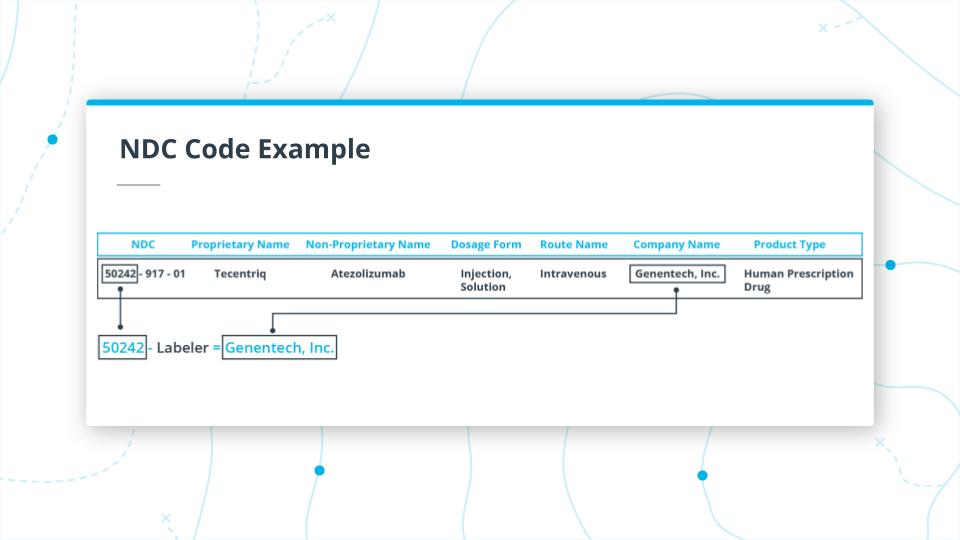

Medication Code Key Points

NDC: National Drug Code

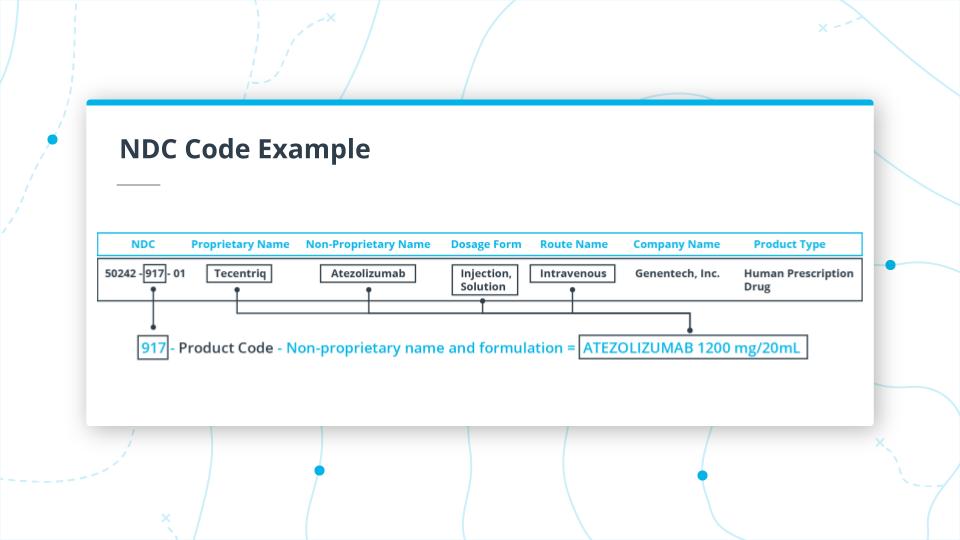

In this section, we discussed the NDC Codes. These codes have been in place since 1972, and are maintained by the FDA.

NDC Code Structure

- 10- to 11-digit code

- multiple configurations

- 3 Parts

- Labeler: Drug manufacturer

- Product code: the actual drug details

- Package code: form and size of medication

In the image above, you can see the first part of the NDC code for Tecentriq.

The first part of the code, 50242, is the Labeler, which maps to the manufacturer of the drug (which in this case is Genentech, Inc).

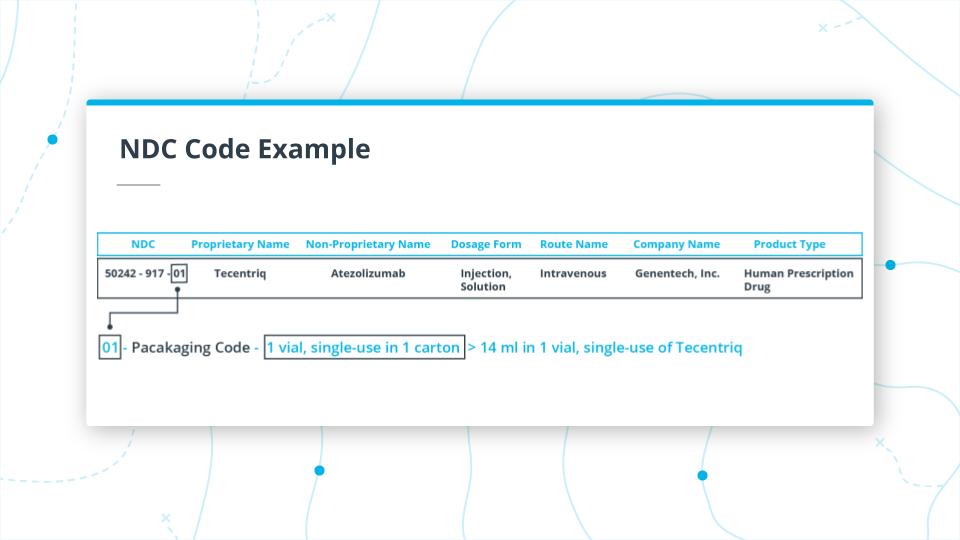

In the example above, we are looking at the middle section of the code 917.

917 is the Product Code. In this case, it maps the hard-to-pronounce Non-Proprietary Name, ATEZOLIZUMAB 1200mg/20ml, to the Proprietary Name, Tecentriq.

It also indicates that the drug dosage form is Injection, Solution and the route of administration is Intravenous.

Note that to group all drugs by generic, you have to use the Non-Proprietary Name.

Finally, look at the last two digits, 01.

This is the packaging code, and in this instance indicates that the package is a 14ml single vial in 1 carton for single use.

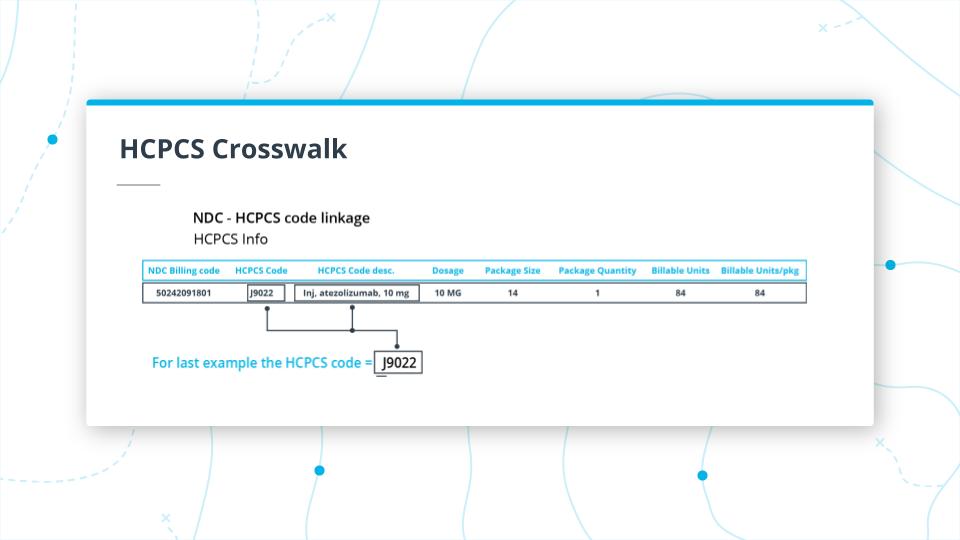
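The three-part structure walked through above can be sketched as a small parser. `split_ndc` and `to_11_digit` are hypothetical helpers. The zero-padding to a 5-4-2 layout is an assumption based on the common billing convention for turning a 10-digit NDC into an 11-digit one; it is not described in the notes above.

```python
def split_ndc(ndc: str) -> dict:
    """Split a hyphenated NDC into labeler, product, and package segments."""
    labeler, product, package = ndc.strip().split("-")
    return {"labeler": labeler, "product": product, "package": package}


def to_11_digit(ndc: str) -> str:
    """Zero-pad each segment to the assumed 5-4-2 layout and drop hyphens."""
    parts = split_ndc(ndc)
    return (
        parts["labeler"].zfill(5)
        + parts["product"].zfill(4)
        + parts["package"].zfill(2)
    )


tecentriq = split_ndc("50242-917-01")
print(tecentriq)                     # labeler 50242, product 917, package 01
print(to_11_digit("50242-917-01"))   # 50242091701
```

Keeping the hyphens for as long as possible is a deliberate choice: once they are dropped from a 10-digit code, the segment boundaries can no longer be recovered unambiguously.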

Crosswalk: A connection between two different code sets or versions of drugs in the same code set.

A common term you will hear for medical code sets is a crosswalk.

NDC codes can be connected to HCPCS codes, and depending on the data source you are looking at, a mapping between these codes may be available.

For the previous example, there is a crosswalk between the Tecentriq NDC code and the HCPCS code J9022. Note that the HCPCS code starts with a J: “J” codes tend to be injected drugs, though by definition they are simply drugs that are not taken orally. You can see that the HCPCS code carries over some of the information from the NDC code.
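In code, a crosswalk is often just a lookup table joined onto the data. The sketch below assumes pandas and uses the single NDC-to-HCPCS pair from the Tecentriq example; the second NDC is a made-up placeholder that exists only to show what an unmapped code looks like, and the column names are hypothetical.

```python
import pandas as pd

# Medication rows to be mapped. The second NDC is a placeholder, not a real code.
claims = pd.DataFrame({"ndc": ["50242-917-01", "00000-0000-00"]})

# Crosswalk table holding the one pair mentioned in the text.
crosswalk = pd.DataFrame({"ndc": ["50242-917-01"], "hcpcs": ["J9022"]})

# A left join keeps every claim row; indicator=True flags rows with no match.
mapped = claims.merge(crosswalk, on="ndc", how="left", indicator=True)
unmapped = mapped[mapped["_merge"] == "left_only"]

print(mapped[["ndc", "hcpcs"]])
print("codes without a crosswalk entry:", list(unmapped["ndc"]))
```

Checking the unmapped rows explicitly matters in practice, because a crosswalk from one data source rarely covers every code seen in another.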

One of the major challenges with NDC codes is normalizing or grouping codes by common types of drugs. For example, let’s take acetaminophen. You would think it would be relatively straightforward to group records whenever someone has the NDC code for this drug. However, as you can see from this table, which shows only a sample of the results from NDC List, there are many drug codes that contain acetaminophen in combination with other drugs. This can make drugs very difficult to deal with.
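A minimal sketch of this grouping problem, assuming pandas: the rows below are made up for illustration (the NDC values are placeholders, not real codes), and splitting the non-proprietary name on "AND" or commas is a simplifying assumption about how combination products are named.

```python
import re

import pandas as pd

# Made-up rows for illustration only; the NDC values are placeholders.
drugs = pd.DataFrame({
    "ndc": ["PLACEHOLDER-1", "PLACEHOLDER-2", "PLACEHOLDER-3"],
    "nonproprietary_name": [
        "ACETAMINOPHEN",
        "ACETAMINOPHEN AND CODEINE PHOSPHATE",
        "IBUPROFEN",
    ],
})

# Split each name into ingredients (assumed separators: "AND" or a comma).
drugs["ingredients"] = drugs["nonproprietary_name"].apply(
    lambda name: re.split(r"\s+AND\s+|,\s*", name)
)
drugs["has_acetaminophen"] = drugs["ingredients"].apply(
    lambda items: "ACETAMINOPHEN" in items
)
drugs["is_combination"] = drugs["ingredients"].apply(len) > 1

print(drugs[["nonproprietary_name", "has_acetaminophen", "is_combination"]])
```

Whether a combination product should count toward an "acetaminophen" group depends on the analysis, which is why the two flags are kept separate here.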

To address this problem, the NIH developed a normalized naming system called RxNorm, which does what its name implies and groups medications together. This is important because providers, pharmacies, and payers all send EHR records with this data but might use different names, which makes it difficult to communicate between different systems. To illustrate this issue, take a look at the drug Naproxen and just a few examples of the different names for naproxen that all refer to the same thing. While there is a crosswalk between NDC codes and RxNorm, some issues remain: depending on the system you are dealing with, it could use one code set or the other.

CCS: Clinical Classifications Software

As mentioned earlier, there is a tremendous challenge in taking the 77K+ ICD10-PCS codes and grouping them into meaningful categories at scale. To address this, a government-industry partnership called the Healthcare Cost and Utilization Project (HCUP) created a categorization system called Clinical Classifications Software, or CCS. It can be used to map diagnosis or procedure codes from ICD code sets, and it has single-level and multi-level options for mapping these codes.

- Mutually exclusive categories

- 285 categories for diagnoses

- 231 categories for procedures

As an example using single-level codes:

- Operations on the cardiovascular system are codes 43-63

- Heart valve procedures is code 43.
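The single-level example above can be sketched as a simple range check. `ccs_procedure_group` is a hypothetical helper that encodes only the two facts stated in the list (codes 43-63 and code 43); a real mapping would come from the published CCS tables.

```python
def ccs_procedure_group(ccs_code: int) -> str:
    """Map a single-level CCS procedure code to a broader group (sketch)."""
    if 43 <= ccs_code <= 63:
        return "Operations on the cardiovascular system"
    return "not covered by this sketch"


# 43 is the heart valve procedures category from the example above.
procedure_ccs = [43, 50, 63, 70]
groups = [ccs_procedure_group(code) for code in procedure_ccs]
print(dict(zip(procedure_ccs, groups)))
```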

EHR Code Sets Recap Key Points

In this lesson, you learned about a key component of EHR data: code sets. As a quick reminder, EHR code sets allow for the comparison of data across various EHR systems. You first learned about diagnosis codes, in particular ICD10-CM: what this code set is, why it's important, and how to read the codes. You also applied that knowledge by grouping data using EHR codes and building an embedding with visualizations. Hopefully, ICD10-CM codes will be easy to work with from now on. Then you reduced the cardinality of a dataset using procedure codes after learning more about ICD10-PCS, CPT, and HCPCS. You should be able to break down medication codes too, and to apply your knowledge to the challenges of working with medication code sets. Finally, you put all of this code knowledge to work by grouping and categorizing these code sets with CCS. I know that there was a lot of information in this lesson, but now it's time to move on to learning some magic to transform EHR data! Into what? You'll have to head to the next lesson to find out.

Advanced EHR Data Representation: Graph Convolutional Transformer

We have learned about the complexity of information found within code sets such as diagnosis, procedure, and medication codes. However, there is even more benefit in using these code sets together to understand connections between codes within a given encounter and trends across the longitudinal view of a patient’s medical history. You can also compare at a population level to extract useful trends, insights, and even prognostic capabilities.

One paper that I recommend reviewing came out of work from Google Health and DeepMind, where the authors create a Graph Convolutional Transformer, or GCT.

GCT leverages the now commonly used Transformer Architecture with graph embedding representations of code sets such as diagnosis, procedure, lab, and medication codes.

The advantage of this approach over the standard bag-of-features approach is that it retains connections between features such as

- diagnosis code for a symptom

- the connection to a medication code prescribed

- a procedure code that was performed.

It does this by utilizing a conditional probability matrix, which you can see illustrated in figure 3 of the GCT paper. The paper is definitely worth your time to read through, even though it is a bit outside the scope of this course, and it should help clarify how these codes are used together in real-world healthcare data.

Encounter: “An interaction between a patient and healthcare provider(s) for the purpose of providing healthcare service(s) or assessing the health status of a patient.”

The definition of an encounter commonly used for EHR records comes from Health Level Seven International (HL7), the organization that sets the international standards for healthcare data. As the definition states, it is essentially an interaction between a patient and one or more healthcare professionals. It usually refers to doctor's visits and hospital stays.

- Create a column list for the columns you would use to group. Likely these would be:

- "encounter_id"

- "patient_id"

- "principal_diagnosis_code"

- Create a column list for the other columns not in the grouping

- Transform your data into a new dataframe. You can use the groupby() and agg() functions for this

- Then do a quick inspection of the result by grabbing one of the patient records and comparing the output of the original dataframe with the newly transformed encounter dataframe.
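The steps above can be sketched with pandas as follows. The dataframe is a toy stand-in: the three grouping column names come from the list above, while `procedure_code`, `ndc_code`, and all of the values are hypothetical. Collecting the non-grouping columns into lists is one possible aggregation, not the only one.

```python
import pandas as pd

# Toy line-level data: encounter 1 spans two rows. All values are made up.
line_df = pd.DataFrame({
    "encounter_id": [1, 1, 2, 3],
    "patient_id": ["A", "A", "A", "B"],
    "principal_diagnosis_code": ["I10", "I10", "E11.9", "J45.909"],
    "procedure_code": ["P1", "P2", "P3", "P4"],
    "ndc_code": ["N1", "N2", "N3", "N4"],
})

# Step 1: columns that define one encounter.
grouping_cols = ["encounter_id", "patient_id", "principal_diagnosis_code"]

# Step 2: every other column gets aggregated.
other_cols = [c for c in line_df.columns if c not in grouping_cols]

# Step 3: one row per encounter, with the other columns collected into lists.
encounter_df = line_df.groupby(grouping_cols)[other_cols].agg(list).reset_index()

# Step 4: compare one patient before and after the transformation.
print(line_df[line_df["patient_id"] == "A"])
print(encounter_df[encounter_df["patient_id"] == "A"])
```

After the transformation, patient A has two rows (one per encounter) instead of three, and no procedure or medication codes have been dropped.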

- Encounter Definition

- Scalable and accurate deep learning with electronic health records

- Google patent

- FHIR

- longitudinal ehr

- A Bayesian Approach to Modelling Longitudinal Data in Electronic Health Records

Data Leakage: Inadvertently sharing data between test and training datasets.

Data leakage is a massive problem as your model will perform fantastically during training and fail miserably in production.

An example of where this can occur is when you have a longitudinal dataset and encounters from the same patient end up in different splits. You may inadvertently leak information about a patient you will be testing on into your training data, essentially giving your model some of the answers. Preventing data leakage is therefore very important to ensure your model can generalize in production.

It is important to have some ways to assess whether you have split your data right.

Here are a few ways to do this.

- Make sure that a single patient's data is not in more than one partition, to avoid possible data leakage.

- Check that the total number of unique patients across the splits is equal to the total number of unique patients in the original dataset. This ensures that no patient information is lost in the splitting and that the counts are correct.

- Check that the total number of rows in the original dataset is equal to the sum of rows across all three dataset partitions.

len(original_df) == len(train_df) + len(val_df) + len(test_df) should evaluate to True
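The three checks above can be sketched in code. `split_by_patient` is a hypothetical helper that assigns whole patients, rather than individual rows, to each partition; the 60/20/20 fractions, the fixed seed, and the toy dataframe are assumptions made for illustration.

```python
import numpy as np
import pandas as pd


def split_by_patient(df, patient_col="patient_id", fractions=(0.6, 0.2), seed=0):
    """Split rows into train/val/test so that each patient lands in one split."""
    rng = np.random.default_rng(seed)
    patients = rng.permutation(df[patient_col].unique())
    n_train = int(round(fractions[0] * len(patients)))
    n_val = int(round(fractions[1] * len(patients)))
    train_ids = patients[:n_train].tolist()
    val_ids = patients[n_train:n_train + n_val].tolist()
    test_ids = patients[n_train + n_val:].tolist()
    return (
        df[df[patient_col].isin(train_ids)],
        df[df[patient_col].isin(val_ids)],
        df[df[patient_col].isin(test_ids)],
    )


# Toy data: 10 patients with 3 encounters each.
original_df = pd.DataFrame({
    "patient_id": np.repeat(np.arange(10), 3),
    "encounter_id": np.arange(30),
})
train_df, val_df, test_df = split_by_patient(original_df)

train_p, val_p, test_p = (set(d["patient_id"]) for d in (train_df, val_df, test_df))

# Check 1: no patient appears in more than one partition.
no_overlap = (
    train_p.isdisjoint(val_p) and train_p.isdisjoint(test_p) and val_p.isdisjoint(test_p)
)
# Check 2: no patients were lost.
patients_match = len(train_p | val_p | test_p) == original_df["patient_id"].nunique()
# Check 3: no rows were lost or duplicated.
rows_match = len(original_df) == len(train_df) + len(val_df) + len(test_df)

print(no_overlap, patients_match, rows_match)
```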

- An ROC curve (receiver operating characteristic curve) is a graph showing the performance of a classification model at all classification thresholds.

- A note on the evaluation of novel biomarkers: do not rely on integrated discrimination improvement and net reclassification index

- Brier Score

- The Brier score does not evaluate the clinical utility of diagnostic tests or prediction models
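To make "all classification thresholds" concrete, the sketch below computes ROC points by sweeping a threshold over the predicted scores, using only NumPy. `roc_points` and `brier_score` are hypothetical helpers, the four labels and scores are made up, and the Brier score is included using its usual definition as the mean squared difference between predicted probability and outcome.

```python
import numpy as np


def roc_points(y_true, y_score):
    """Return (fpr, tpr, thresholds), sweeping thresholds from high to low."""
    y_true = np.asarray(y_true)
    y_score = np.asarray(y_score)
    # Start above every score so the curve begins at (0, 0).
    thresholds = np.r_[np.inf, np.sort(np.unique(y_score))[::-1]]
    n_pos = (y_true == 1).sum()
    n_neg = (y_true == 0).sum()
    tpr = np.array([((y_score >= t) & (y_true == 1)).sum() / n_pos for t in thresholds])
    fpr = np.array([((y_score >= t) & (y_true == 0)).sum() / n_neg for t in thresholds])
    return fpr, tpr, thresholds


def brier_score(y_true, y_prob):
    """Mean squared difference between predicted probability and outcome."""
    return float(np.mean((np.asarray(y_prob) - np.asarray(y_true)) ** 2))


y_true = [0, 0, 1, 1]
y_score = [0.1, 0.4, 0.35, 0.8]

fpr, tpr, thresholds = roc_points(y_true, y_score)
# Area under the curve via the trapezoidal rule.
auc = float(np.sum(np.diff(fpr) * (tpr[1:] + tpr[:-1]) / 2))
print("AUC:", auc)
print("Brier:", brier_score(y_true, y_score))
```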

- Aleatoric:

- Statistical Uncertainty - a natural, random process

- Known Unknowns

Aleatoric uncertainty is otherwise known as statistical uncertainty and covers the known unknowns. This type of uncertainty is inherent: it is part of the stochasticity that naturally exists. An example is rolling a die, which will always have an element of randomness to it.

- Epistemic:

- Systematic Uncertainty - lack of measurement, knowledge

- Unknown Unknowns

Epistemic uncertainty is also known as systematic uncertainty and covers the unknown unknowns. This type of uncertainty can be reduced by adding parameters/features that measure something in more detail or provide more knowledge.
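One common way to illustrate the difference, sketched here with NumPy and the die example: the spread of individual rolls stays the same no matter how many rolls are observed (aleatoric), while the uncertainty about the estimated average shrinks as more is observed (a simple stand-in for epistemic uncertainty). Note that this sketch reduces uncertainty by adding data rather than features, which is an assumption of the illustration; the seed and sample sizes are arbitrary.

```python
import numpy as np

rng = np.random.default_rng(0)


def summarize(n_rolls):
    """Return (spread of single rolls, standard error of the estimated mean)."""
    rolls = rng.integers(1, 7, size=n_rolls)  # fair six-sided die: 1..6
    spread = rolls.std(ddof=1)                # aleatoric: does not shrink
    std_error = spread / np.sqrt(n_rolls)     # shrinks as knowledge grows
    return spread, std_error


small = summarize(100)
large = summarize(10_000)
print("100 rolls:    spread=%.3f  std_error=%.3f" % small)
print("10,000 rolls: spread=%.3f  std_error=%.3f" % large)
```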

Building a Basic Uncertainty Estimation Model with TensorFlow Probability

- Using the MPG model from earlier, create an uncertainty estimation model with TF Probability.

- In particular, we will focus on building a model that accounts for Aleatoric Uncertainty.

NOTE: Before we go into the walkthrough, I want to note that TF Probability has not reached v1 yet, and its documentation and standard patterns are still evolving. That said, I wanted to expose you to a tool that might be good to have on your radar; this library can abstract away some of the challenging math behind the scenes.

- Known Unknowns

- 2 Main Model Changes

- Add a second unit to the last dense layer before passing it to the TensorFlow Probability layer, so that the model covers both the predictor y and the heteroscedasticity (unequal scattering) of the data.

      tf.keras.layers.Dense(1 + 1)

- DistributionLambda is a special Keras layer that uses a Python lambda to construct a distribution based on the layer inputs; the output of this final layer of the model is passed into the loss function. The model returns a distribution described by both a mean and a standard deviation. The 'loc' argument is the mean, taken from the first output unit. The 'scale' argument is the standard deviation, computed from the second output unit through a softplus so that it stays positive; in this case it increases slightly with a positive slope. It is important to note that here we have prior knowledge that the relationship between the label and the data is linear. For a more dynamic, flexible approach you can use the VariationalGaussianProcess layer, which is beyond the scope of this course.

      tfp.layers.DistributionLambda(
          lambda t: tfp.distributions.Normal(
              loc=t[..., :1],
              scale=1e-3 + tf.math.softplus(0.1 * t[..., 1:])))

- We can use different loss functions such as mean squared error (MSE) or negative log-likelihood (negloglik). Note that if we use the standard MSE metric, the scale (standard deviation) will be fixed. Below is code from the regression tutorial for using the negative log-likelihood loss; minimizing it is a way to maximize the probability of the continuous labels.

      negloglik = lambda y, rv_y: -rv_y.log_prob(y)
      # loss_metric is assumed to be defined earlier in the walkthrough
      model.compile(optimizer='adam', loss=negloglik, metrics=[loss_metric])

- Extract the mean and standard deviation for each prediction by passing the test dataset to the probability model. Then call mean() or stddev() to extract these tensors.

      yhat = prob_model(x_tst)
      m = yhat.mean()
      s = yhat.stddev()
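To see what the negative log-likelihood loss and the softplus-transformed scale compute, here is a NumPy-only sketch that mirrors the pieces above without requiring TensorFlow. `softplus` and `normal_negloglik` are hypothetical helpers, and the `raw` array is a made-up stand-in for the two outputs of the `Dense(1 + 1)` layer.

```python
import numpy as np


def softplus(x):
    """Numerically stable log(1 + exp(x)); always positive."""
    return np.logaddexp(0.0, x)


def normal_negloglik(y, loc, scale):
    """Negative log-density of y under Normal(loc, scale)."""
    return 0.5 * np.log(2 * np.pi * scale ** 2) + (y - loc) ** 2 / (2 * scale ** 2)


# Made-up stand-in for Dense(1 + 1) outputs: column 0 -> mean, column 1 -> raw scale.
raw = np.array([[2.0, -1.0], [3.0, 4.0]])
loc = raw[..., :1]
scale = 1e-3 + softplus(0.1 * raw[..., 1:])  # same transform as in the layer above

y = np.array([[2.5], [2.0]])
nll = normal_negloglik(y, loc, scale)
print("scale:", scale.ravel())
print("per-example negative log-likelihood:", nll.ravel())

# With the scale fixed at 1, the loss is half the squared error plus a constant,
# which is why an MSE loss corresponds to a fixed standard deviation.
fixed = normal_negloglik(y, loc, 1.0)
print(np.allclose(fixed, 0.5 * (y - loc) ** 2 + 0.5 * np.log(2 * np.pi)))
```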

- Unknown Unknowns

- Add a TensorFlow Probability DenseVariational layer with prior and posterior functions. Below are examples adapted from the TensorFlow Probability Regression tutorial notebook.

      import numpy as np
      import tensorflow as tf
      import tensorflow_probability as tfp

      # Posterior: independent Normals with a trainable mean and scale per weight.
      def posterior_mean_field(kernel_size, bias_size=0, dtype=None):
          n = kernel_size + bias_size
          c = np.log(np.expm1(1.))  # softplus(c) == 1, so the scale starts near 1
          return tf.keras.Sequential([
              tfp.layers.VariableLayer(2 * n, dtype=dtype),
              tfp.layers.DistributionLambda(lambda t: tfp.distributions.Independent(
                  tfp.distributions.Normal(loc=t[..., :n],
                                           scale=1e-5 + tf.nn.softplus(c + t[..., n:])),
                  reinterpreted_batch_ndims=1)),
          ])

      # Prior: independent Normals with a trainable mean and a fixed scale of 1.
      def prior_trainable(kernel_size, bias_size=0, dtype=None):
          n = kernel_size + bias_size
          return tf.keras.Sequential([
              tfp.layers.VariableLayer(n, dtype=dtype),
              tfp.layers.DistributionLambda(lambda t: tfp.distributions.Independent(
                  tfp.distributions.Normal(loc=t, scale=1),
                  reinterpreted_batch_ndims=1)),
          ])

- Here is how the 'posterior_mean_field' and 'prior_trainable' functions are added as arguments to the DenseVariational layer that precedes the DistributionLambda layer we covered earlier.

tf.keras.layers.Dense(75, activation='relu'),