A curated (still actively updated) list of practical guide resources for large language models (LLMs). These resources aim to help practitioners navigate the vast landscape of LLMs and their applications in natural language processing (NLP). If you find any resources in our repository helpful, please feel free to use them (and don't forget to cite our paper!)


We used PowerPoint to create the figure and have released the source .pptx file for our GIF figure. We welcome pull requests to refine this figure, and if you find the source helpful, please cite our paper.

@article{yang2023harnessing, title={Harnessing the Power of LLMs in Practice: A Survey on ChatGPT and Beyond}, author={Jingfeng Yang and Hongye Jin and Ruixiang Tang and Xiaotian Han and Qizhang Feng and Haoming Jiang and Bing Yin and Xia Hu}, year={2023}, eprint={2304.13712}, archivePrefix={arXiv}, primaryClass={cs.CL} }

We build an evolutionary tree of modern large language models (LLMs) that traces the development of language models in recent years and highlights some of the best-known models, shown in the following figure:

- BERT "BERT: Pre-training of Deep Bidirectional Transformers for Language Understanding". 2018. [Paper]

- RoBERTa "RoBERTa: A Robustly Optimized BERT Pretraining Approach". 2019. [Paper]

- DistilBERT "DistilBERT, a distilled version of BERT: smaller, faster, cheaper and lighter". 2019. [Paper]

- ALBERT "ALBERT: A Lite BERT for Self-supervised Learning of Language Representations". 2019. [Paper]

- ELECTRA "ELECTRA: Pre-training Text Encoders as Discriminators Rather Than Generators". 2020. [Paper]

- T5 "Exploring the Limits of Transfer Learning with a Unified Text-to-Text Transformer". Colin Raffel et al. JMLR 2020. [Paper]

- GLM "GLM-130B: An Open Bilingual Pre-trained Model". 2022. [Paper]

- AlexaTM "AlexaTM 20B: Few-Shot Learning Using a Large-Scale Multilingual Seq2Seq Model". Saleh Soltan et al. arXiv 2022. [Paper]

- GPT-3 "Language Models are Few-Shot Learners". NeurIPS 2020. [Paper]

- OPT "OPT: Open Pre-trained Transformer Language Models". 2022. [Paper]

- PaLM "PaLM: Scaling Language Modeling with Pathways". Aakanksha Chowdhery et al. arXiv 2022. [Paper]

- BLOOM "BLOOM: A 176B-Parameter Open-Access Multilingual Language Model". 2022. [Paper]

- MT-NLG "Using DeepSpeed and Megatron to Train Megatron-Turing NLG 530B, A Large-Scale Generative Language Model". 2022. [Paper]

- GLaM "GLaM: Efficient Scaling of Language Models with Mixture-of-Experts". ICML 2022. [Paper]

- Gopher "Scaling Language Models: Methods, Analysis & Insights from Training Gopher". 2021. [Paper]

- Chinchilla "Training Compute-Optimal Large Language Models". 2022. [Paper]

- LaMDA "LaMDA: Language Models for Dialog Applications". 2022. [Paper]

- LLaMA "LLaMA: Open and Efficient Foundation Language Models". 2023. [Paper]

- GPT-4 "GPT-4 Technical Report". 2023. [Paper]

- BloombergGPT "BloombergGPT: A Large Language Model for Finance". 2023. [Paper]

- GPT-NeoX-20B "GPT-NeoX-20B: An Open-Source Autoregressive Language Model". 2022. [Paper]

- How does the pre-training objective affect what large language models learn about linguistic properties?, ACL 2022. Paper

- Scaling laws for neural language models, 2020. Paper

- Data-centric artificial intelligence: A survey, 2023. Paper

- Benchmarking zero-shot text classification: Datasets, evaluation and entailment approach, EMNLP 2019. Paper

- Language Models are Few-Shot Learners, NeurIPS 2020. Paper

- Does Synthetic Data Generation of LLMs Help Clinical Text Mining?, 2023. Paper

- Shortcut learning of large language models in natural language understanding: A survey, 2023. Paper

- On the Robustness of ChatGPT: An Adversarial and Out-of-distribution Perspective, 2023. Paper

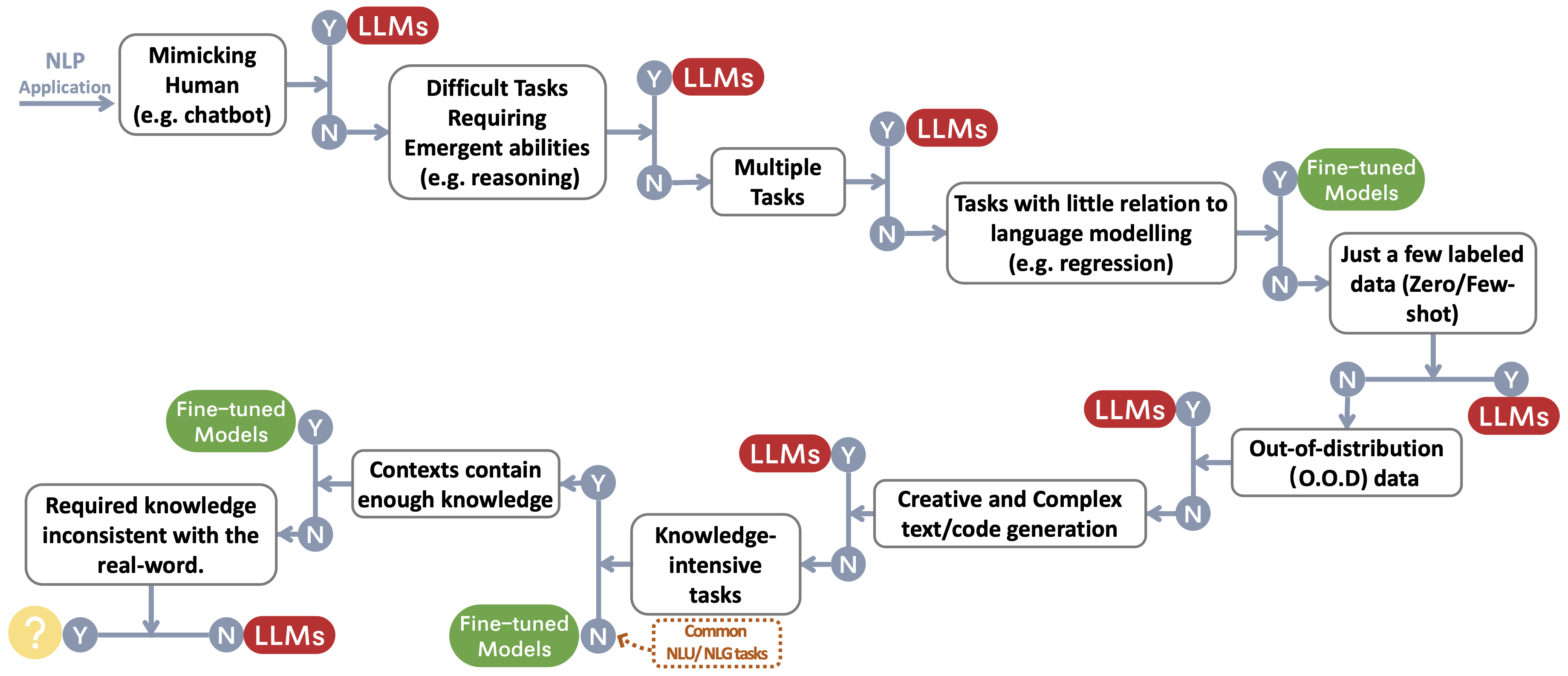

We build a decision flow for choosing between LLMs and fine-tuned models for users' NLP applications. The decision flow helps users assess whether the downstream NLP application at hand meets specific conditions and, based on that evaluation, determine whether an LLM or a fine-tuned model is the more suitable choice.
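As a rough illustration of how such a decision flow can be encoded, the sketch below turns a few rules of thumb into a function. The `TaskProfile` fields, the `choose_model` helper, and the order of the checks are all hypothetical simplifications; they are not the exact conditions in the figure, so treat this as a sketch under stated assumptions rather than the survey's procedure.

```python
from dataclasses import dataclass


@dataclass
class TaskProfile:
    # Hypothetical, simplified criteria for illustration only;
    # they are NOT the exact questions asked in the decision-flow figure.
    has_abundant_labeled_data: bool
    test_data_in_distribution: bool
    needs_open_ended_generation: bool
    needs_world_knowledge_or_reasoning: bool
    tight_latency_or_cost_budget: bool


def choose_model(task: TaskProfile) -> str:
    """Return "LLM" or "fine-tuned model" using illustrative rules of thumb."""
    # Hard deployment constraints are checked first: smaller fine-tuned
    # models are usually cheaper to serve and faster to respond.
    if task.tight_latency_or_cost_budget:
        return "fine-tuned model"
    # Open-ended generation, knowledge-intensive, and reasoning tasks
    # tend to benefit from the breadth of a large pretrained model.
    if task.needs_open_ended_generation or task.needs_world_knowledge_or_reasoning:
        return "LLM"
    # Traditional NLU with plenty of in-distribution labels:
    # a fine-tuned model is often sufficient and competitive.
    if task.has_abundant_labeled_data and task.test_data_in_distribution:
        return "fine-tuned model"
    # Few labels or out-of-distribution test data: lean on the
    # LLM's few-shot / zero-shot generalization.
    return "LLM"


if __name__ == "__main__":
    sentiment_task = TaskProfile(
        has_abundant_labeled_data=True,
        test_data_in_distribution=True,
        needs_open_ended_generation=False,
        needs_world_knowledge_or_reasoning=False,
        tight_latency_or_cost_budget=False,
    )
    print(choose_model(sentiment_task))  # fine-tuned model
```

Checking deployment constraints first is one design choice among several; a real decision flow might weigh cost and latency against quality instead of treating them as a hard override.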

- No use case

- Use case

- Use case

- No use case

- Use case

- No use case

Scaling up LLMs (e.g., in parameter count and training compute) can greatly empower pretrained language models. As a model scales up, it generally becomes more capable across a range of tasks. On some metrics, performance follows a power-law relationship with model scale.
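To make the power-law claim concrete, here is a minimal sketch of loss as a function of model size, assuming the form L(N) = (N_c / N)^alpha_N from Kaplan et al. (2020). The constants alpha_N ≈ 0.076 and N_c ≈ 8.8 × 10^13 (non-embedding parameters) are the approximate values reported there, used here purely for illustration; real measurements are noisy and the law only holds over a limited range and when data and compute are not the bottleneck. The helper names are hypothetical.

```python
import math

# Approximate constants reported by Kaplan et al. (2020) for test loss
# (in nats) vs. non-embedding parameter count; illustrative only.
ALPHA_N = 0.076
N_C = 8.8e13


def loss_from_params(n_params: float) -> float:
    """Power law L(N) = (N_c / N) ** alpha_N."""
    return (N_C / n_params) ** ALPHA_N


def fit_power_law_exponent(ns, losses) -> float:
    """Estimate alpha_N via least squares of log(L) on log(N).

    A power law is a straight line in log-log space with slope -alpha_N,
    so the negated slope recovers the exponent.
    """
    xs = [math.log(n) for n in ns]
    ys = [math.log(loss) for loss in losses]
    x_mean = sum(xs) / len(xs)
    y_mean = sum(ys) / len(ys)
    cov = sum((x - x_mean) * (y - y_mean) for x, y in zip(xs, ys))
    var = sum((x - x_mean) ** 2 for x in xs)
    return -cov / var


sizes = [1e6, 1e7, 1e8, 1e9, 1e10, 1e11]
losses = [loss_from_params(n) for n in sizes]
for n, loss in zip(sizes, losses):
    print(f"N = {n:.0e}  ->  predicted loss = {loss:.3f}")
print("recovered exponent:", round(fit_power_law_exponent(sizes, losses), 3))  # prints 0.076
```

Each 10x increase in parameters multiplies the predicted loss by the same constant factor, 10^(-0.076), which is the signature of a power law. Note that this describes pretraining loss; downstream task metrics do not always track it smoothly.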

- Use Cases with Reasoning

- Arithmetic reasoning

- Problem solving

- Commonsense reasoning

- Use Cases with Emergent Abilities

- Word manipulation

- Logical deduction

- Logical sequence

- Logic grid puzzles

- Simple math problems

- Coding abilities

- Concept understanding

- No-Use Cases

- Redefine-math

- Into-the-unknown

- Memo-trap

- NegationQA

- No use case

- Use case

- Cost

- Latency

- Parameter-Efficient Fine-Tuning

- Robustness and Calibration

- Fairness and Bias

- Spurious biases

- Calibrate before use: Improving few-shot performance of language models, ICML 2021. Paper