Easily bootstrap your own 3-node k8s cluster with Vagrant for testing and development

This is a modified version of the original tutorial from kubernetes.io.

Any PRs are highly welcome.

The purpose of this project is to create your own cluster for development and testing.

Do not use such a cluster in production!

You can use minikube or microk8s (and many similar projects), but they usually do not reflect the "real" cluster conditions you may encounter with cloud providers or manually created cloud clusters.

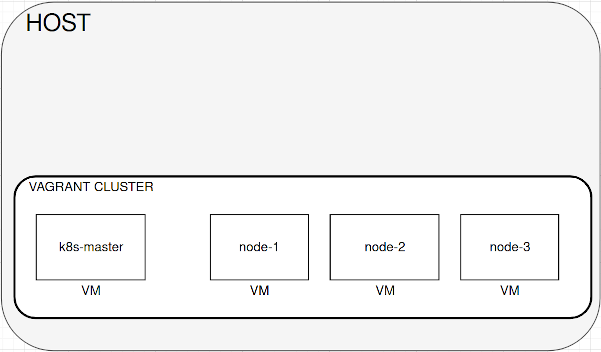

The cluster uses Vagrant with 4 separate VMs (this can be changed in the Vagrantfile): 1 master and 3 nodes.

They communicate with each other over an internal Vagrant network, which simulates a normal private network in a single datacenter. In this cluster you have to provide PersistentVolumes yourself (we will use an NFS share for this) and configure, for example, your own Docker registry.

Because of this, the cluster reflects a real cluster much better than, for instance, minikube does.

The whole cluster is intended to run on a single machine, hence:

- it's not an HA cluster

- etcd resides only on the master; normally it should be a separate HA cluster too, ideally on completely separate instances

- TLS certs are self-signed

This cluster is intended to run only in VMs on your host, so its security depends mostly on the host's security. However, be aware that:

- the Docker daemon on the master and node VMs listens on a TCP interface, which is highly insecure

- the Docker registry is insecure (and runs on your host, if you follow this tutorial)

- the vagrant user can sudo to root without a password by default

- the NFS share can easily be exposed outside the host by a simple mistake

- a public Vagrant VM image can be malicious and harm your host

- public Docker images can be malicious and harm your VMs or host

- TLS certs are self-signed

It's highly recommended to bootstrap this cluster on a host that is not directly exposed to the Internet via a public IP address (for example, within a NAT network behind a router/firewall). In such an infrastructure, insecure registries, shares and VMs are less likely to be compromised.

Host requirements:

- 8 vCPU cores recommended (2 vCPUs per VM); more is better

- at least 16 GB of RAM for the cluster (4 GB per VM); more is better

- Vagrant with the VirtualBox provider (only tested with VirtualBox)

- Ansible (to bootstrap the cluster once the VMs are ready)

- Docker and docker-compose, to deploy a private insecure Docker registry for the cluster (alternatively, you can use Helm to deploy a similar registry directly within the cluster)

- an NFS server (backing PersistentVolumes for pods); you need to set up your own local NFS server
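A minimal preflight sketch for these numbers, assuming a Linux host (it reads nproc and /proc/meminfo). The function name check_host_resources is hypothetical and not part of this repo. Memory is rounded to the nearest GiB because MemTotal is usually a little below the installed RAM.

```shell
# Hypothetical preflight helper (not part of this repo).
# Usage: check_host_resources MIN_CPUS MIN_MEM_GIB
# Assumes a Linux host: nproc (coreutils) and /proc/meminfo.
check_host_resources() {
    local min_cpus="$1" min_mem_gib="$2" cpus mem_kib mem_gib status=0

    # Logical CPUs available to this process.
    cpus=$(nproc)

    # MemTotal is reported in kB and is usually a bit below the installed RAM,
    # so round to the nearest GiB instead of truncating.
    mem_kib=$(awk '/^MemTotal:/ { print $2 }' /proc/meminfo)
    mem_gib=$(( (mem_kib + 524288) / 1048576 ))

    if [ "$cpus" -lt "$min_cpus" ]; then
        echo "WARN: $cpus vCPUs found, $min_cpus recommended" >&2
        status=1
    fi
    if [ "$mem_gib" -lt "$min_mem_gib" ]; then
        echo "WARN: about $mem_gib GiB RAM found, $min_mem_gib GiB recommended" >&2
        status=1
    fi
    return "$status"
}
```

For the recommended sizing of this cluster:

$ check_host_resources 8 16 && echo "host looks big enough"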

Before you start, I highly recommend following this great tutorial from Kelsey Hightower (Kubernetes The Hard Way) to understand how a Kubernetes cluster works and how to bootstrap it correctly from scratch. It will also give you a better understanding of how this vagrant-kubernetes-cluster works.

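The cluster will use the following addresses on its internal Vagrant network. Note that 15.0.0.0/16 is not an RFC 1918 private range, even though it is used privately here: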

- 15.0.0.1 for host

- 15.0.0.5 for master

- 15.0.0.11-13 for nodes

Knowing the IP addresses is not required to bootstrap and operate this cluster (mostly you can use the vagrant ssh or vagrant scp commands to interact with the VMs), but it can be helpful when you have to debug something later.

This configuration was successfully tested on a Manjaro Linux host, with Ubuntu 18.04.3 LTS as the VM OS.

Install the following (using your distribution's packages):

- docker (don't forget to add yourself to the docker group to be able to use docker as a regular user)

- ansible (I've tested version 2.9.2 with Python 3.8)

- docker-compose

- vagrant (with the VirtualBox provider; tested version 2.2.6)

- nfs-server (the package name depends on the distribution, e.g. nfs-utils on Arch/Manjaro or nfs-kernel-server on Debian/Ubuntu)

- kubectl

- helm
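To confirm that everything from the list above is on your PATH, a small sketch could look like this. The helpers missing_tools and in_group are hypothetical. exportfs stands in for the NFS server package here; on some distributions it lives in /usr/sbin and is only on root's PATH.

```shell
# Hypothetical helpers (not part of this repo).

# Prints every command name given as an argument that is not on PATH;
# returns non-zero if at least one is missing.
missing_tools() {
    local tool missing=0
    for tool in "$@"; do
        if ! command -v "$tool" >/dev/null 2>&1; then
            echo "$tool"
            missing=1
        fi
    done
    return "$missing"
}

# Succeeds if the current user is a member of the named group.
# A new group membership only shows up after logging in again.
in_group() {
    local g
    for g in $(id -nG); do
        [ "$g" = "$1" ] && return 0
    done
    return 1
}
```

For example:

$ missing_tools docker docker-compose ansible vagrant kubectl helm exportfs

$ in_group docker || echo "add yourself to the docker group and log in again"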

Once you have vagrant, install the vagrant-scp plugin by typing:

$ vagrant plugin install vagrant-scp

After that, you should have both the vagrant ssh and vagrant scp commands available to interact with the VMs.
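If you script the setup, the plugin installation can be made idempotent. The sketch below assumes that vagrant plugin list prints one installed plugin per line, starting with the plugin name. has_vagrant_plugin is a hypothetical helper.

```shell
# Hypothetical helper (not part of this repo): reads "vagrant plugin list"
# output on stdin and succeeds if a line starts with the given plugin name.
has_vagrant_plugin() {
    awk -v name="$1" '$1 == name { found = 1 } END { exit !found }'
}
```

Install the plugin only when it is missing:

$ vagrant plugin list | has_vagrant_plugin vagrant-scp || vagrant plugin install vagrant-scp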

One directory on the host system will be used as an NFS share. In my case this is /home/nfs.

Add the following line to /etc/exports on your host system:

/home/nfs 15.0.0.0/16(rw,sync,crossmnt,insecure,no_root_squash,no_subtree_check)

Then type (as root):

# exportfs -avr

You should get:

exporting 15.0.0.0/16:/home/nfs
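Editing /etc/exports by hand is fine once. If you re-run your setup, a guard against duplicate lines helps. Below is a sketch using the hypothetical helper ensure_export_line. It takes the exports file as a parameter, so you can try it on a scratch file first.

```shell
# Hypothetical helper (not part of this repo).
# Usage: ensure_export_line FILE LINE
# Appends LINE to FILE only if that exact line is not already present,
# so running it several times leaves a single copy.
ensure_export_line() {
    local file="$1" line="$2"
    if ! grep -qxF -- "$line" "$file" 2>/dev/null; then
        printf '%s\n' "$line" >> "$file"
    fi
}
```

From a root shell where the function is defined:

# mkdir -p /home/nfs

# ensure_export_line /etc/exports '/home/nfs 15.0.0.0/16(rw,sync,crossmnt,insecure,no_root_squash,no_subtree_check)'

# exportfs -avr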

NOTE: If your host already has a network interface in 15.0.0.0/16 for a different purpose, you have to change the playbooks and the Vagrantfile. Furthermore, if your host is within a NAT network that already uses 15.0.0.0/16 addresses, it's not a good idea to keep using this range for the NFS export (without firewall rules, you would expose your NFS share to that network).
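A quick way to spot such a conflict before running vagrant up is to look for host addresses already inside 15.0.0.0/16. This sketch uses the hypothetical helper check_15_0_free. It only inspects local interfaces via ip -o -4 addr show, so it cannot detect other machines on your NAT network using that range. After vagrant up, the host side of the Vagrant network is expected to hold 15.0.0.1 itself, so the check is only meaningful beforehand.

```shell
# Hypothetical helper (not part of this repo): reads "ip -o -4 addr show"
# output on stdin, prints every interface address already inside 15.0.0.0/16
# and returns non-zero if it found any.
check_15_0_free() {
    awk '$3 == "inet" {
             split($4, addr, "/")          # "15.0.0.1/16" -> "15.0.0.1"
             if (addr[1] ~ /^15\.0\./) {
                 print "in use: " $2 " " addr[1]
                 busy = 1
             }
         }
         END { exit (busy ? 1 : 0) }'
}
```

For example:

$ ip -o -4 addr show | check_15_0_free && echo "15.0.0.0/16 looks free"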

I've used a standard docker-compose template for the registry (in the docker-registry directory of this repo); just go to this directory and type:

$ docker-compose up -d

To check that it works correctly, you can use curl:

$ curl -X GET http://localhost:5000/v2/_catalog

If it works correctly, you should get:

{"repositories":[]}

Alternatively, you can deploy the registry with the docker-registry Helm chart.
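The registry container may need a moment after docker-compose up -d before it answers requests. A small retry sketch lets a setup script wait for it. retry is a hypothetical helper, not part of this repo.

```shell
# Hypothetical helper (not part of this repo).
# Usage: retry ATTEMPTS DELAY_SECONDS COMMAND [ARGS...]
# Runs COMMAND until it succeeds or ATTEMPTS runs are used up.
retry() {
    local attempts="$1" delay="$2" n=1
    shift 2
    until "$@"; do
        if [ "$n" -ge "$attempts" ]; then
            echo "retry: giving up after $n attempts: $*" >&2
            return 1
        fi
        n=$((n + 1))
        sleep "$delay"
    done
    return 0
}
```

For example, to wait up to about 20 seconds for the registry (curl -f makes HTTP errors count as failures):

$ retry 10 2 curl -fsS http://localhost:5000/v2/_catalog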

In my case, the Vagrant directory is ~/vagrant/vagrant-kubernetes-cluster, and I will use it throughout this tutorial; it contains the files from this repository. You can clone it with:

$ git clone https://github.com/mateusz-szczyrzyca/vagrant-kubernetes-cluster.git

Now go to the repository directory (the Vagrantfile should be there) and type:

$ vagrant up

If everything is OK, Vagrant will start 4 VMs and Ansible will configure them to work as a cluster. This will take a while.
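Once vagrant up has returned, the VM states can also be checked from a script. This sketch assumes the comma-separated vagrant status --machine-readable format (timestamp,target,type,data). all_vms_running is a hypothetical helper.

```shell
# Hypothetical helper (not part of this repo): reads
# "vagrant status --machine-readable" on stdin, whose lines have the form
# timestamp,target,type,data. Succeeds only if at least one "state" line
# exists and every one of them says "running"; prints the VMs that do not.
all_vms_running() {
    awk -F, '$3 == "state" {
                 n++
                 if ($4 != "running") { print $2 ": " $4; bad = 1 }
             }
             END { exit (n == 0 || bad) ? 1 : 0 }'
}
```

For example:

$ vagrant status --machine-readable | all_vms_running && echo "all VMs are running"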

You can check whether the VMs are running properly by typing vagrant status:

$ vagrant status

Current machine states:

k8s-master running (virtualbox)

node-1 running (virtualbox)

node-2 running (virtualbox)

node-3 running (virtualbox)

This environment represents multiple VMs. The VMs are all listed

above with their current state. For more information about a specific

VM, run vagrant status NAME.

When all machines are running and there were no errors during the Ansible configuration, type:

$ vagrant scp k8s-master:/home/vagrant/.kube/config .

This copies the /home/vagrant/.kube/config file, which contains the certificates needed by kubectl, from the k8s-master VM to your host machine.

Now point kubectl to this file:

$ export KUBECONFIG=$PWD/config

And now kubectl should work properly:

$ kubectl get nodes -o wide

You should now see the nodes of the cluster:

NAME STATUS ROLES AGE VERSION INTERNAL-IP EXTERNAL-IP OS-IMAGE KERNEL-VERSION CONTAINER-RUNTIME

k8s-master Ready master 20m v1.17.0 10.0.2.15 <none> Ubuntu 18.04.3 LTS 4.15.0-72-generic docker://19.3.5

node-1 Ready <none> 17m v1.17.0 15.0.0.11 <none> Ubuntu 18.04.3 LTS 4.15.0-72-generic docker://19.3.5

node-2 Ready <none> 15m v1.17.0 15.0.0.12 <none> Ubuntu 18.04.3 LTS 4.15.0-72-generic docker://19.3.5

node-3 Ready <none> 13m v1.17.0 15.0.0.13 <none> Ubuntu 18.04.3 LTS 4.15.0-72-generic docker://19.3.5

It means the cluster is working properly!
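kubectl itself can block until the nodes report Ready, for example with $ kubectl wait --for=condition=Ready node --all --timeout=300s. If you prefer to check the plain listing in a script, here is a sketch with the hypothetical helper all_nodes_ready. It assumes STATUS is the second column of kubectl get nodes --no-headers, as in the output above.

```shell
# Hypothetical helper (not part of this repo): reads
# "kubectl get nodes --no-headers" on stdin. Succeeds only if at least one
# node is listed and every STATUS column is exactly "Ready"; anything else
# (e.g. "NotReady" or "Ready,SchedulingDisabled") is printed and counts as a failure.
all_nodes_ready() {
    awk 'NF == 0 { next }
         {
             n++
             if ($2 != "Ready") { print $1 ": " $2; bad = 1 }
         }
         END { exit (n == 0 || bad) ? 1 : 0 }'
}
```

For example:

$ kubectl get nodes --no-headers | all_nodes_ready && echo "all nodes are Ready"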

You can also use vagrant ssh to connect to a specific VM, for example:

$ vagrant ssh node-2

Now it's time to use our NFS share for PersistentVolumes in the cluster.

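To do this, we will install nfs-client-provisioner from its Helm chart. Helm 3 has no chart repositories configured by default, so if the stable repository is not added yet, add it first:

$ helm repo add stable https://charts.helm.sh/stable

Then install the chart: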

$ helm install stable/nfs-client-provisioner --generate-name --set nfs.server="15.0.0.1" --set nfs.path="/home/nfs"

If your NFS server address or path differs from the one used in this tutorial, modify this command accordingly.

Now we can check if we have a new pod:

$ kubectl get pods -o wide

NAME READY STATUS RESTARTS AGE IP NODE NOMINATED NODE READINESS GATES

nfs-client-provisioner-1577712423-b9bcf4778-sw2xm 1/1 Running 0 10s 192.168.139.65 node-3 <none> <none>

This pod adds a new storage class, nfs-client. Check it with:

$ kubectl get sc

NAME PROVISIONER RECLAIMPOLICY VOLUMEBINDINGMODE ALLOWVOLUMEEXPANSION AGE

nfs-client cluster.local/nfs-client-provisioner-1577712423 Delete Immediate true 12m

We need to make this storage class the default for our cluster with the command:

$ kubectl patch storageclass nfs-client -p '{"metadata": {"annotations":{"storageclass.kubernetes.io/is-default-class":"true"}}}'
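To check the default class from a script instead of reading the table, here is a sketch with the hypothetical helper default_storageclass. It assumes the "(default)" marker appears right after the class name in kubectl get storageclass --no-headers, as in the listings in this tutorial. If it prints more than one name, more than one class carries the is-default-class annotation.

```shell
# Hypothetical helper (not part of this repo): reads
# "kubectl get storageclass --no-headers" on stdin and prints the name of
# every class marked "(default)" (normally exactly one).
default_storageclass() {
    awk '$2 == "(default)" { print $1 }'
}
```

After the patch, this is expected to print nfs-client:

$ kubectl get storageclass --no-headers | default_storageclass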

Now run kubectl get sc again to check that this class is now the default:

$ kubectl get sc

NAME PROVISIONER RECLAIMPOLICY VOLUMEBINDINGMODE ALLOWVOLUMEEXPANSION AGE

nfs-client (default) cluster.local/nfs-client-provisioner-1577712423 Delete Immediate true 12m

To attach to the Docker daemon exposed by the master (or the nodes), set this environment variable:

$ export DOCKER_HOST="tcp://15.0.0.5:2375"

Now you can use the cluster's Docker daemon:

$ docker ps
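Switching between the VMs' daemons and your local one can be wrapped in a small function. This is a sketch: use_vm_docker is hypothetical, the IPs follow this tutorial's addressing plan, and port 2375 on the nodes is an assumption, since only the master's address is given above.

```shell
# Hypothetical helper (not part of this repo): points the docker CLI at one
# VM's daemon, or back at the local daemon with "host". IPs follow this
# tutorial's addressing plan; port 2375 on the nodes is an assumption.
use_vm_docker() {
    local ip
    case "$1" in
        k8s-master) ip=15.0.0.5 ;;
        node-1)     ip=15.0.0.11 ;;
        node-2)     ip=15.0.0.12 ;;
        node-3)     ip=15.0.0.13 ;;
        host)       unset DOCKER_HOST; return 0 ;;
        *)          echo "unknown VM: $1" >&2; return 1 ;;
    esac
    # Plain TCP without TLS (see the security notes at the top).
    export DOCKER_HOST="tcp://$ip:2375"
}
```

For example:

$ use_vm_docker node-2 && docker ps

$ use_vm_docker host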

Our private insecure registry is available at 15.0.0.1:5000. All VMs (master and nodes) are already configured to accept it as an additional insecure registry, so with DOCKER_HOST pointing at one of them we can push images there.

To build an image that the cluster can use, run docker build with the following syntax:

$ docker build . -t 15.0.0.1:5000/my-kubernetes-app

where my-kubernetes-app is the name of your application.

This command also tags the newly built image, so you can then push it to the private registry with:

$ docker push 15.0.0.1:5000/my-kubernetes-app
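The build, push and verify cycle can be bundled into a few helpers. This is a minimal sketch. image_ref, build_and_push, catalog_lists and the REGISTRY variable are hypothetical names. The catalog check is a plain string match on the JSON from /v2/_catalog, not a real JSON parser.

```shell
# Hypothetical helpers (not part of this repo) for the build/push cycle.
# REGISTRY defaults to the tutorial's insecure registry and can be overridden.
REGISTRY="${REGISTRY:-15.0.0.1:5000}"

# Prints the full image reference: registry/name:tag (tag defaults to latest).
image_ref() {
    printf '%s/%s:%s\n' "$REGISTRY" "$1" "${2:-latest}"
}

# Builds the image from the current directory and pushes it to the registry.
# With DOCKER_HOST pointing at a VM, both steps run on that VM's daemon,
# which is already configured to accept the insecure registry.
build_and_push() {
    local ref
    ref=$(image_ref "$1" "${2:-}")
    docker build -t "$ref" . && docker push "$ref"
}

# Reads the JSON from the registry's /v2/_catalog endpoint on stdin and
# succeeds if the quoted repository name appears in it.
catalog_lists() {
    grep -qF "\"$1\""
}
```

For example, from your application's directory:

$ build_and_push my-kubernetes-app

$ curl -s http://localhost:5000/v2/_catalog | catalog_lists my-kubernetes-app && echo "image is in the registry"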

If your Docker registry is working, the new image is now stored there and can be referenced in Kubernetes YAML files like this:

image: 15.0.0.1:5000/my-kubernetes-app

Now you can build and deploy your app to the cluster.
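As an illustration, a minimal Deployment for such an image could be generated and applied from the shell. This is a sketch: deployment_manifest, its labels and the default replica count are hypothetical choices. apps/v1 is the Deployment API group available in the Kubernetes 1.17 release used here.

```shell
# Hypothetical helper (not part of this repo).
# Usage: deployment_manifest NAME IMAGE [REPLICAS]
# Prints a minimal Deployment manifest; the "app" label is illustrative.
deployment_manifest() {
    local name="$1" image="$2" replicas="${3:-1}"
    cat <<EOF
apiVersion: apps/v1
kind: Deployment
metadata:
  name: $name
spec:
  replicas: $replicas
  selector:
    matchLabels:
      app: $name
  template:
    metadata:
      labels:
        app: $name
    spec:
      containers:
        - name: $name
          image: $image
EOF
}
```

kubectl apply -f - reads the manifest from stdin:

$ deployment_manifest my-kubernetes-app 15.0.0.1:5000/my-kubernetes-app | kubectl apply -f -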

Finally, consider the following scenarios for further practice:

- try to disconnect/reconnect nodes and watch what happens to the cluster

- add more PersistentVolumes and use them at the same time with different pods

- use proper TLS certs (use Let's Encrypt to generate them)

- store/use some secrets

- bootstrap another such cluster on another machine (if you have one) on the same network as the current host; if you connect the clusters to each other, you can "simulate" a multi-master cluster spanning different datacenters.
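For the PersistentVolume exercise, a claim against the default class (nfs-client once it has been marked as default) could be generated like this. This is a minimal sketch: pvc_manifest, the claim name and the size are hypothetical. ReadWriteMany is chosen because an NFS-backed volume can be mounted by several pods at once.

```shell
# Hypothetical helper (not part of this repo).
# Usage: pvc_manifest NAME [SIZE]
# Prints a PersistentVolumeClaim without storageClassName, so the cluster's
# default storage class is used.
pvc_manifest() {
    local name="$1" size="${2:-1Gi}"
    cat <<EOF
apiVersion: v1
kind: PersistentVolumeClaim
metadata:
  name: $name
spec:
  accessModes:
    - ReadWriteMany
  resources:
    requests:
      storage: $size
EOF
}
```

Apply it, then check that the claim becomes Bound once the provisioner has created the volume:

$ pvc_manifest shared-data 1Gi | kubectl apply -f -

$ kubectl get pvc shared-data

Pods can then share it by referencing claimName: shared-data in a persistentVolumeClaim volume.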