Code for the paper "Attributable and Scalable Opinion Summarization", Tom Hosking, Hao Tang & Mirella Lapata (ACL 2023).

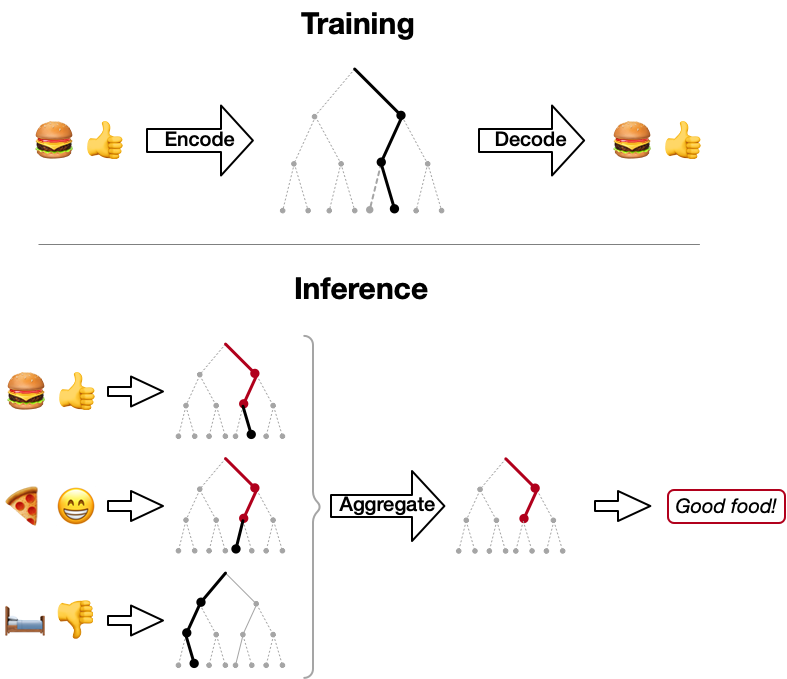

By representing sentences from reviews as paths through a discrete hierarchy, we can generate abstractive summaries that are informative and attributable, and that scale to hundreds of input reviews.

Create a fresh environment:

conda create -n herculesenv python=3.9

conda activate herculesenv

or

python3 -m venv herculesenv

source herculesenv/bin/activate

Then install dependencies:

pip install -r requirements.txt

Download data/models:

- Space -> `./data/opagg/`
- AmaSum -> `./data/opagg/`
- Trained checkpoints -> `./models`
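Before running evaluation or training, it can help to confirm that the downloads ended up in the directories listed above. The following is a minimal sketch, not part of the repository: the helper name `missing_dirs` is hypothetical, and it only checks that `./data/opagg/` and `./models` exist and are non-empty, not that their contents are complete.

```python
from pathlib import Path

# Directories named in the download list above.
EXPECTED_DIRS = ("data/opagg", "models")


def missing_dirs(root=".", expected=EXPECTED_DIRS):
    """Return the expected directories that are absent or empty under `root`.

    Hypothetical helper for illustration; it is not provided by the repository.
    """
    missing = []
    for rel in expected:
        path = Path(root) / rel
        # Treat a directory with no entries the same as a missing one.
        if not path.is_dir() or not any(path.iterdir()):
            missing.append(rel)
    return missing
```

Calling `missing_dirs()` from the repository root returns an empty list once both downloads are unpacked in place.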

Tested with Python 3.9.

See ./examples/Space-Eval.ipynb

or

from torchseq.utils.model_loader import model_from_path

from torchseq.metric_hooks.hrq_agg import HRQAggregationMetricHook

model_slug = 'hercules_space' # Which model to load?

instance = model_from_path('./models/' + model_slug, output_path='./runs/', data_path='./data/', silent=True)

scores, res = HRQAggregationMetricHook.eval_generate_summaries_and_score(instance.config, instance, test=True)

print("Model {:}: Abstractive R2 = {:0.2f}, Extractive R2 = {:0.2f}".format(model_slug, scores['abstractive']['rouge2'], scores['extractive']['rouge2']))

To train on SPACE, download the datasets (as above), then run:

torchseq --train --reload_after_train --validate --config ./configs/hercules_space.json
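If you prefer to launch training from a Python script (for example, as part of a larger pipeline), the command above can be wrapped with `subprocess`. This is a rough sketch under stated assumptions: `build_train_command` and `run_training` are hypothetical names, and the only flags used are the ones shown in the command above.

```python
import subprocess
from pathlib import Path


def build_train_command(config_path):
    """Build the torchseq training command shown above as an argument list."""
    return [
        "torchseq",
        "--train",
        "--reload_after_train",
        "--validate",
        "--config",
        str(config_path),
    ]


def run_training(config_path):
    """Check that the config exists, then launch training (hypothetical wrapper)."""
    if not Path(config_path).is_file():
        raise FileNotFoundError(f"Config not found: {config_path}")
    # check=True raises CalledProcessError if torchseq exits with a non-zero status.
    subprocess.run(build_train_command(config_path), check=True)
```

For example, `run_training("./configs/hercules_space.json")` is equivalent to the shell command above, but fails early with a clear error if the config path is wrong.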

To train on your own dataset, you will need to:

- Install `allennlp==2.10.1` and `allennlp-models==2.10.1` via pip (ignore the warnings about version conflicts)
- Make a copy of your dataset in a format expected by the script below
- Run the dataset filtering scripts `./scripts/opagg_filter_space.py` and `./scripts/opagg_filter_space_eval.py`
- Run the script to generate training pairs `./scripts/generate_opagg_pairs.py`
- Make a copy of one of the training configs and update to point at your data
- Finally, train the model!

torchseq --train --reload_after_train --validate --config ./configs/{YOUR_CONFIG}.json
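The "copy a config and point it at your data" step can be scripted. The sketch below makes no assumptions about which keys the training configs contain: it copies a JSON config and rewrites every string value that contains a given substring. The function names and the example substrings (`space`, `my_dataset`) are hypothetical; check the resulting file by hand, since a blind substring replacement may also touch values you did not intend to change.

```python
import json
from pathlib import Path


def replace_in_strings(node, old, new):
    """Recursively replace `old` with `new` in every string value of a JSON-like object."""
    if isinstance(node, dict):
        return {key: replace_in_strings(value, old, new) for key, value in node.items()}
    if isinstance(node, list):
        return [replace_in_strings(value, old, new) for value in node]
    if isinstance(node, str):
        return node.replace(old, new)
    # Numbers, booleans and None are returned unchanged.
    return node


def derive_config(src_path, dst_path, old, new):
    """Write a copy of the config at `src_path` to `dst_path` with `old` replaced by `new`."""
    config = json.loads(Path(src_path).read_text())
    updated = replace_in_strings(config, old, new)
    Path(dst_path).write_text(json.dumps(updated, indent=4))
    return updated
```

A call such as `derive_config("./configs/hercules_space.json", "./configs/my_config.json", "space", "my_dataset")` would produce a starting point that you can then edit and pass to `torchseq` via `--config`.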

Please feel free to raise a GitHub issue or email me if you run into any difficulties!

@inproceedings{hosking-etal-2023-attributable,
    title = "Attributable and Scalable Opinion Summarization",
    author = "Hosking, Tom and
      Tang, Hao and
      Lapata, Mirella",
    booktitle = "Proceedings of the 61st Annual Meeting of the Association for Computational Linguistics (Volume 1: Long Papers)",
    month = jul,
    year = "2023",
    address = "Toronto, Canada",
    publisher = "Association for Computational Linguistics",
    url = "https://aclanthology.org/2023.acl-long.473",
    pages = "8488--8505",
}