Human-in-the-loop De novo Drug Design by Preference Learning (HIL-DD)

About

This directory contains the code and resources of the following paper:

"Human-in-the-loop De novo Drug Design by Preference Learning". Under review.

-

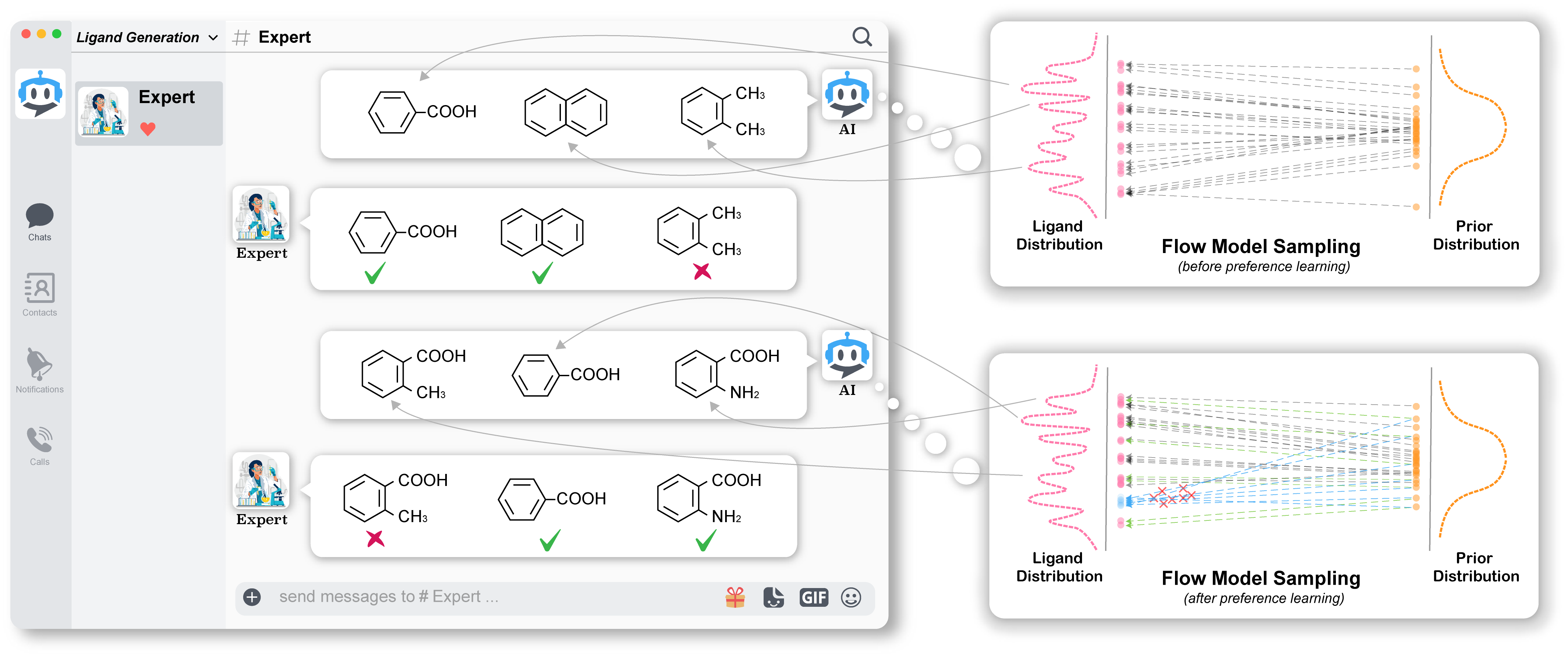

HIL-DD is a new Human-In-the-Loop Drug Design framework that enables human experts and AI to co-design molecules in 3D space conditioned on a protein pocket.

- The backbone model is a surprisingly simple generative model called rectified flow (RF), which is based on ordinary differential equations (ODEs) [1-2]. By combining RF with equivariant graph neural networks (EGNNs) [3], we create an equivariant rectified flow model (ERFM).

- Our HIL-DD framework is built upon ERFM. It takes molecules generated by ERFM conditioned on a protein pocket as input and incorporates human experts' preferences to generate new molecules that reflect those preferences.

- Our experimental results are based on the CrossDocked dataset [4], which is available here.

- If you have any issues using this software, please do not hesitate to contact Youming Zhao (youming0.zhao@gmail.com). We will try our best to assist you. Any feedback is highly appreciated.

Overview of the Model

We introduce HIL-DD to bridge the gap between human experts and AI.

Step 1. Construct an equivariant rectified flow model (ERFM) and train it on the CrossDocked dataset

In this step, we combine EGNNs and RF to create the ERFM. The ERFM is then trained on the CrossDocked dataset using protein pockets as a condition.
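The equivariance property that EGNNs contribute can be illustrated with a minimal sketch. This is not the ERFM architecture: the function name and the fixed weighting function below are hypothetical stand-ins for the learned networks, chosen only to show that a coordinate update built from relative positions and squared distances commutes with rotations and translations.

```python
import numpy as np


def egnn_style_coord_update(x, weight_fn=lambda d2: 1.0 / (1.0 + d2)):
    """Toy EGNN-style coordinate update (hypothetical, not the ERFM layer).

    x: (N, 3) array of atom coordinates, N >= 2.
    weight_fn: stand-in for a learned network acting on squared distances.
    """
    diff = x[:, None, :] - x[None, :, :]            # diff[i, j] = x_i - x_j
    d2 = np.sum(diff ** 2, axis=-1, keepdims=True)  # invariant squared distances
    # Each atom moves along its relative position vectors, scaled by weights
    # that depend only on rotation- and translation-invariant distances.
    return x + np.sum(diff * weight_fn(d2), axis=1) / (x.shape[0] - 1)


rng = np.random.default_rng(0)
coords = rng.normal(size=(5, 3))
rotation, _ = np.linalg.qr(rng.normal(size=(3, 3)))  # random orthogonal matrix
shift = rng.normal(size=3)

# Transforming the input and then updating matches updating and then transforming.
transformed_then_updated = egnn_style_coord_update(coords @ rotation.T + shift)
updated_then_transformed = egnn_style_coord_update(coords) @ rotation.T + shift
```

In this sketch the two results agree up to floating-point error, which is the property that lets a model generate molecules independently of how the pocket is oriented in space.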

Step 2. Generate samples

We utilize a well-trained ERFM to generate molecules conditioned on a protein pocket of interest.
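Sampling with a rectified flow amounts to integrating an ODE from noise (t = 0) to data (t = 1). The sketch below shows the idea with a plain Euler integrator. It is only an illustration under stated assumptions: the function names are hypothetical, and `toy_velocity` is a closed-form velocity field for a single fixed target point, standing in for the trained network that ERFM would use.

```python
import numpy as np


def rf_interpolate(x0, x1, t):
    """Straight-line path x_t = (1 - t) * x0 + t * x1 between noise x0 and data x1."""
    return (1.0 - t) * x0 + t * x1


def rf_velocity_target(x0, x1):
    """Regression target for the velocity field along the straight path."""
    return x1 - x0


def euler_sample(velocity_fn, x0, num_steps=100):
    """Integrate dx/dt = velocity_fn(x, t) from t = 0 to t = 1 with Euler steps."""
    x = np.asarray(x0, dtype=float).copy()
    dt = 1.0 / num_steps
    for step in range(num_steps):
        t = step * dt
        x = x + dt * velocity_fn(x, t)
    return x


def toy_velocity(x, t, target=np.array([1.0, -2.0, 0.5])):
    """Exact velocity field when every data point equals `target` (toy assumption)."""
    return (target - x) / (1.0 - t)


noise = np.zeros(3)
sample = euler_sample(toy_velocity, noise, num_steps=10)
```

With this toy field the Euler integrator lands on the target point; with a learned velocity field the same loop would produce a generated sample instead.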

Step 3. Propose promising molecules as positive samples and unpromising molecules as negative samples

Given the generated samples and a specific preference, say binding affinity, we select molecules with high binding affinity (measured by Vina score in our work) as promising samples and molecules with low binding affinity as unpromising samples.
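As a rough illustration of this step, the sketch below splits samples by Vina score. The function name is hypothetical, and the rule is an assumption: since a more negative Vina score indicates stronger predicted binding, scores at or below the lower threshold are treated as promising and scores at or above the upper threshold as unpromising. The values -9 and -7 are the default Vina thresholds mentioned in this README; the actual selection logic lives in select_proposals.py and may differ.

```python
def split_by_vina(vina_scores, good_below=-9.0, bad_above=-7.0):
    """Return indices of promising and unpromising samples (illustrative only).

    vina_scores: Vina scores, where more negative means stronger predicted binding.
    good_below:  scores at or below this value are treated as promising (assumption).
    bad_above:   scores at or above this value are treated as unpromising (assumption).
    """
    positive = [i for i, score in enumerate(vina_scores) if score <= good_below]
    negative = [i for i, score in enumerate(vina_scores) if score >= bad_above]
    return positive, negative


scores = [-10.2, -8.0, -6.5, -9.0, -7.0]
positive_idx, negative_idx = split_by_vina(scores)
```

Samples that fall between the two thresholds are left out of both groups in this sketch, which keeps a margin between the two classes.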

Step 4. Employ our HIL-DD algorithm to finetune ERFM

With the human annotations obtained from the previous step, we finetune the well-trained ERFM using the HIL-DD algorithm.

For more detailed information, please refer to our paper.

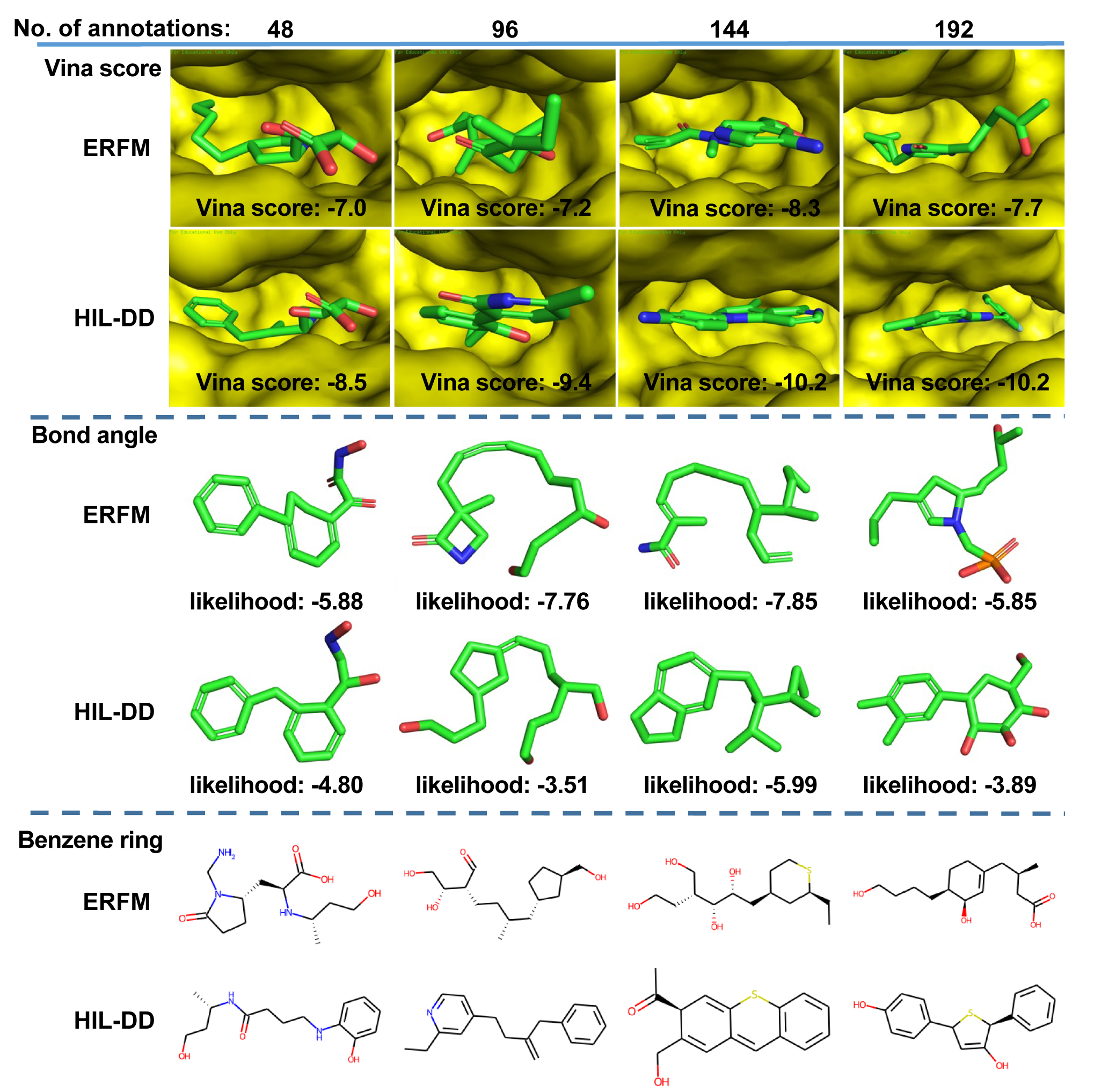

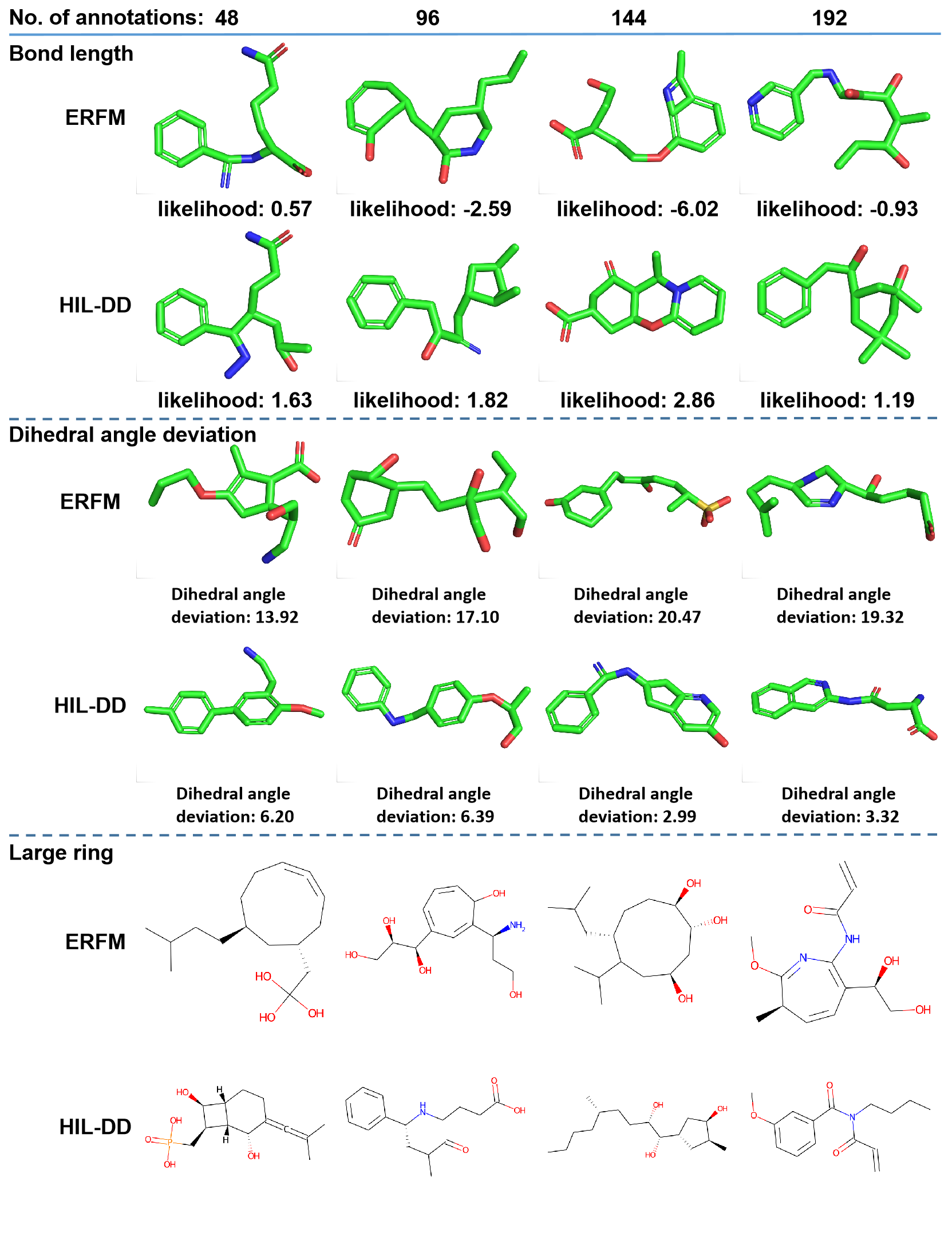

Generated molecules of ERFM and HIL-DD

In this section, we present the molecules generated by HIL-DD for six preferences: Vina score, bond angle, benzene ring, bond length, dihedral angle deviation, and avoiding large rings. We also provide the molecules generated by ERFM from the same noise that HIL-DD used to generate its molecules. These examples demonstrate that HIL-DD can capture human preferences well.

Sub-directories

- [configs] contains configuration files for training, sampling, and finetuning.

- [statistics] includes statistics about the training data, such as the bond-length, bond-angle, and dihedral-angle distributions.

- [datasets] contains files for preprocessing data.

- [models] contains the architectures of ERFM and the classifier.

- [toy-experiment] contains code for conducting a toy experiment to validate our algorithm.

- [utils] contains various helper functions.

Toy experiment

Before you check out our ERFM and HIL-DD, you can try to run the toy experiment. You will see the beauty of preference learning in a few minutes.

Dependencies

We recommend using Anaconda to create an environment for installing all dependencies. If you have Anaconda installed, please run the following command to install all packages. Normally, this can be done within a few minutes:

conda create --name HIL-DD --file configs/spec-file.txt

The main dependencies are as follows:

- Python==3.10

- PyTorch==1.12.1

- PyTorch Geometric==2.1.0

- NumPy==1.23.3

- OpenBabel==3.1.1

- RDKit==2022.03.5

- QVina==2.1.0

- SciPy==1.9.1

Vina Docking Score Calculation

To calculate Vina docking scores, you need to download the full protein pocket files from here and place them in the configs folder. Then, unzip the files.

Prepare Receptor Files

If all your experiments are based on the CrossDocked dataset, please skip the following two steps.

If you want to compute the binding affinity for the generated molecules conditioned on your own pocket, it is recommended to create a separate environment to install MGLTools. This is because MGLTools and OpenBabel may not be compatible.

- Put the untailored PDB file under the examples/ folder and run the following command:

  python utils/prepare_receptor4.py -r examples/xxxx_full.pdb -o examples/xxxx_full.pdbqt

- Put the tailored PDB file under examples/.

Data

We trained and tested ERFM and HIL-DD using the same datasets as SBDD, Pocket2Mol, and TargetDiff.

If you only want to sample molecules for the pockets of the CrossDocked test set, we have stored those pockets in configs/test_data_list.pt, so you can skip the following steps.

- Download the dataset archive crossdocked_pocket10.tar.gz and the split file split_by_name.pt from this link and place them under data/.

- Extract the TAR archive using the command:

  tar -xzvf crossdocked_pocket10.tar.gz

Please note that it may take approximately 2 hours to preprocess the data when training ERFM or HIL-DD for the first time.

Prepare proposals for HIL-DD

To prepare proposals for HIL-DD, please follow the steps below:

- Choose a protein pocket of interest either from the test set or from another dataset. If the protein pocket of interest is a member of the CrossDocked test set, refer to this .csv file for the corresponding PDB ID.

- To sample molecules from the chosen protein pocket, use the following command:

  python sampling.py --device cuda --config configs/sampling.yml --pocket_id 4 --num_samples 1000

  Make sure to replace the --pocket_id value with the index of the desired pocket.

  Run this command 13 times to generate 13 result files. These result files will be used to select good and bad samples.

  Note that if you don't mind the samples overlapping among the 12 preference injections, you can run the command only 3 times.

- Move all the result files from logs_sampling/datetime/sample-results/datetime.pt to a new folder named tmp/samples_pocket4.

- Calculate the metrics for the samples using the following command:

  python cal_metric_one_pocket.py tmp/samples_pocket4

- Select the good and bad molecules using the command:

  python select_proposals.py tmp/samples_pocket4 tmp/samples_pocket4_proposals

  In the select_proposals.py file, you can specify the lower and upper thresholds for various preferences such as Vina score, bond angle, bond length, benzene ring, large ring, and dihedral angle deviation. By default, the thresholds for Vina score are -7 and -9. For more details, please refer to the last lines of the select_proposals.py file.

  The minimum number of positive and negative samples is determined by config.pref.num_positive_samples x config.pref.proposal_factor and config.pref.num_negative_samples x config.pref.proposal_factor, respectively.
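The repeated sampling runs and the collection of result files described above could be scripted roughly as follows. This is a sketch, not part of the repository: the helper names are hypothetical, the command-line flags are the ones shown in this README, and the glob pattern assumes the logs_sampling/datetime/sample-results/datetime.pt layout described above.

```python
import shutil
import subprocess
from pathlib import Path


def build_sampling_command(pocket_id, num_samples=1000, device="cuda",
                           config="configs/sampling.yml"):
    """Assemble the sampling.py invocation shown in this README as an argument list."""
    return ["python", "sampling.py", "--device", device, "--config", config,
            "--pocket_id", str(pocket_id), "--num_samples", str(num_samples)]


def run_sampling(pocket_id, num_runs=13):
    """Run the sampling command num_runs times, stopping at the first failure."""
    for _ in range(num_runs):
        subprocess.run(build_sampling_command(pocket_id), check=True)


def collect_results(log_root, dest_dir):
    """Move every <log_root>/<run>/sample-results/*.pt file into dest_dir."""
    dest = Path(dest_dir)
    dest.mkdir(parents=True, exist_ok=True)
    moved = []
    for pt_file in sorted(Path(log_root).glob("*/sample-results/*.pt")):
        target = dest / pt_file.name
        shutil.move(str(pt_file), str(target))
        moved.append(target)
    return moved


# Example usage (not executed here, since it launches the actual sampling jobs):
#   run_sampling(pocket_id=4, num_runs=13)
#   collect_results("logs_sampling", "tmp/samples_pocket4")
```

The collected folder can then be passed to cal_metric_one_pocket.py and select_proposals.py as shown in the steps above.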

Code Usage

To train ERFM, use the following command:

python train_ERFM.py --device cuda --config configs/config_ERFM.yml

To sample with a pretrained ERFM for all 100 pockets in the CrossDocked test set, run the following command:

python sampling.py --device cuda --config configs/sampling.yml

To sample with a protein pocket that is not in the CrossDocked test set, make sure to place your PDB file under the examples/ directory. Then, execute the following command:

python sampling4pocket.py --device cuda --config configs/sampling.yml --pdb_path examples/2V3R.pdb

If you need to calculate the binding affinity, ensure that you have the complete protein pocket file in the examples/ directory. Then, run the command as shown below:

python sampling4pocket.py --device cuda --config configs/sampling.yml --pdb_path examples/2V3R.pdb --receptor_path examples/2V3R_full.pdbqt

To finetune a pretrained ERFM, use the following command:

python HIL_DD_pref.py --device cuda --config configs/config_pref.yml

License

HIL-DD is licensed under the Apache License, Version 2.0: http://www.apache.org/licenses/LICENSE-2.0

Reference

[1]. Liu, Xingchao, et al. "Flow Straight and Fast: Learning to Generate and Transfer Data with Rectified Flow." ICLR (2023).

[2]. Liu, Qiang. "Rectified flow: A marginal preserving approach to optimal transport." arXiv preprint arXiv:2209.14577 (2022).

[3]. Satorras, Victor Garcia, et al. "E(n) equivariant graph neural networks." ICML (2021).

[4]. Francoeur, Paul G., et al. "Three-dimensional convolutional neural networks and a cross-docked data set for structure-based drug design." Journal of Chemical Information and Modeling (2020).