Goal: Better understand LoRA [1] and whether low-rank matrices can be used directly instead of full-rank weight matrices.

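Main idea of LoRA: We assume that updating a pre-trained model only requires low-rank updates, i.e. for a pre-trained weight matrix $W_0 \in \mathbb{R}^{d \times k}$ the update is constrained to $W_0 + \Delta W = W_0 + BA$ with $B \in \mathbb{R}^{d \times r}$, $A \in \mathbb{R}^{r \times k}$ and rank $r \ll \min(d, k)$.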
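For reference, the LoRA update can be sketched as a wrapper around a frozen pre-trained `nn.Linear`. This is a minimal illustration, not the code from [1] or from this repository. Following the LoRA paper, $A$ gets a random Gaussian initialization and $B$ starts at zero, so $\Delta W = BA = 0$ at the start of fine-tuning, and the update is scaled by $\alpha / r$. The class name `LoRALinear`, the standard deviation `0.02` for $A$, the default `alpha=1.0` and the layer sizes are assumptions made for the sketch.

```python
import torch
import torch.nn as nn


class LoRALinear(nn.Module):
    """Hypothetical sketch: frozen pre-trained W_0 plus a trainable low-rank update B A."""

    def __init__(self, base: nn.Linear, r: int, alpha: float = 1.0):
        super().__init__()
        self.base = base
        for p in self.base.parameters():
            p.requires_grad_(False)  # W_0 and its bias stay frozen
        d, k = base.out_features, base.in_features
        self.A = nn.Parameter(torch.randn(r, k) * 0.02)  # Gaussian init (std 0.02 is assumed)
        self.B = nn.Parameter(torch.zeros(d, r))  # zero init, so B A = 0 at the start
        self.scaling = alpha / r

    def forward(self, x: torch.Tensor) -> torch.Tensor:
        # h = W_0 x + (alpha / r) * B A x, written for row-vector batches x of shape (n, k)
        return self.base(x) + self.scaling * (x @ self.A.T @ self.B.T)


torch.manual_seed(0)
base = nn.Linear(784, 512)
layer = LoRALinear(base, r=8)
x = torch.randn(4, 784)
# Because B starts at zero, the adapted layer initially reproduces the pre-trained layer.
print(torch.allclose(layer(x), base(x)))  # True
trainable = sum(p.numel() for p in layer.parameters() if p.requires_grad)
print(trainable)  # 8 * 784 + 512 * 8 = 10368
```

Only the factors $A$ and $B$ receive gradients; the pre-trained matrix is never modified in place.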

Question: Can we also use this low-rank approximation directly and replace $W_0$ with $BA$?
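A quick count shows what such a replacement would save: a dense matrix $W \in \mathbb{R}^{d_{out} \times d_{in}}$ has $d_{out} d_{in}$ entries, whereas the two factors of a rank-$r$ product have $r(d_{in} + d_{out})$ entries. The sizes below (784 → 512, the first layer of the PyTorch quickstart network) and the ranks are illustrative choices, not the settings used in the experiments.

```python
def full_rank_params(d_in: int, d_out: int) -> int:
    """Number of entries in a dense weight matrix W of shape (d_out, d_in)."""
    return d_out * d_in


def low_rank_params(d_in: int, d_out: int, r: int) -> int:
    """Number of entries in the factors A (d_out x r) and B (r x d_in)."""
    return r * (d_in + d_out)


d_in, d_out = 784, 512
full = full_rank_params(d_in, d_out)
for r in (4, 16, 64, 256):
    low = low_rank_params(d_in, d_out, r)
    print(f"r={r:3d}: {low:6d} vs {full} parameters ({low / full:.1%})")
```

With these sizes the factorization only saves parameters for $r < d_{in} d_{out} / (d_{in} + d_{out}) \approx 309.7$; above that, the two factors are larger than the dense matrix.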

To answer this question, we compare three different layer types.

- Full-rank linear layers (method 1):

  $$\text{Layer}(x) = \sigma(Wx + b) \quad \text{with } W \in \mathbb{R}^{d_{out} \times d_{in}}$$

- LoRA layers (method 2, custom weight initialization):

  $$\text{Layer}(x) = \sigma(ABx + b) \quad \text{with } A \in \mathbb{R}^{d_{out} \times r},\ B \in \mathbb{R}^{r \times d_{in}}$$

- Autoencoder (AE) layers (method 3, default weight initialization):

  $$\text{Layer}(x) = \sigma(\text{Layer}_2(\text{Layer}_1(x)))$$

  with

  $$\text{Layer}_1(x) = Bx \quad \text{with } B \in \mathbb{R}^{r \times d_{in}}, \qquad \text{Layer}_2(y) = Ay + b \quad \text{with } A \in \mathbb{R}^{d_{out} \times r}.$$

Note that the second and third approaches compute the same function, $\sigma(ABx + b)$; they differ only in the weight initialization. The third approach uses the default weight initialization of PyTorch, while the second approach employs a weight initialization that depends on the rank $r$.
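The three layer types can be sketched in PyTorch as follows. This is a minimal illustration, not the repository's code: the class names are hypothetical, ReLU stands in for $\sigma$, and because the rank-dependent initialization of method 2 is not specified here, `LowRankLayer` uses an assumed scheme that gives the product $AB$ the same entry variance as a default `nn.Linear` weight.

```python
import torch
import torch.nn as nn


class FullRankLayer(nn.Module):
    """Method 1: sigma(W x + b) with a dense W of shape (d_out, d_in), default PyTorch init."""

    def __init__(self, d_in: int, d_out: int):
        super().__init__()
        self.linear = nn.Linear(d_in, d_out)

    def forward(self, x: torch.Tensor) -> torch.Tensor:
        return torch.relu(self.linear(x))


class LowRankLayer(nn.Module):
    """Method 2: sigma(A B x + b) with A (d_out x r), B (r x d_in) and a rank-dependent init."""

    def __init__(self, d_in: int, d_out: int, r: int):
        super().__init__()
        self.A = nn.Parameter(torch.empty(d_out, r))
        self.B = nn.Parameter(torch.empty(r, d_in))
        self.b = nn.Parameter(torch.zeros(d_out))  # zero bias is an assumption
        # Hypothetical rank-dependent init: Var(A) = 1/r and Var(B) = 1/(3 d_in), so every entry
        # of A B has variance r * (1/r) * 1/(3 d_in) = 1/(3 d_in), matching a default nn.Linear weight.
        nn.init.normal_(self.A, std=r ** -0.5)
        nn.init.normal_(self.B, std=(3 * d_in) ** -0.5)

    def forward(self, x: torch.Tensor) -> torch.Tensor:
        # x has shape (n, d_in); applying B first and then A never forms the full d_out x d_in matrix
        return torch.relu(x @ self.B.T @ self.A.T + self.b)


class AELayer(nn.Module):
    """Method 3: sigma(Layer_2(Layer_1(x))) built from two default-initialized nn.Linear modules."""

    def __init__(self, d_in: int, d_out: int, r: int):
        super().__init__()
        self.layer1 = nn.Linear(d_in, r, bias=False)  # B, no bias
        self.layer2 = nn.Linear(r, d_out)  # A and b

    def forward(self, x: torch.Tensor) -> torch.Tensor:
        return torch.relu(self.layer2(self.layer1(x)))


torch.manual_seed(0)
x = torch.randn(8, 784)  # stand-in for a batch of flattened 28 x 28 images
layers = {
    "full-rank (method 1)": FullRankLayer(784, 512),
    "LoRA (method 2)": LowRankLayer(784, 512, r=16),
    "AE (method 3)": AELayer(784, 512, r=16),
}
for name, layer in layers.items():
    n_params = sum(p.numel() for p in layer.parameters())
    print(f"{name}: output shape {tuple(layer(x).shape)}, {n_params} parameters")
```

For $r = 16$, methods 2 and 3 both have 21,248 parameters (versus 401,920 for the full-rank layer), which reflects that they describe the same function class and differ only in how $A$ and $B$ are initialized.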

To test these three approaches, we plug them into a simple FashionMNIST classifier from the PyTorch tutorials [2]. The baseline implementation (method 1) is a fully connected neural network with

```sh
cd src; sh run.sh
```

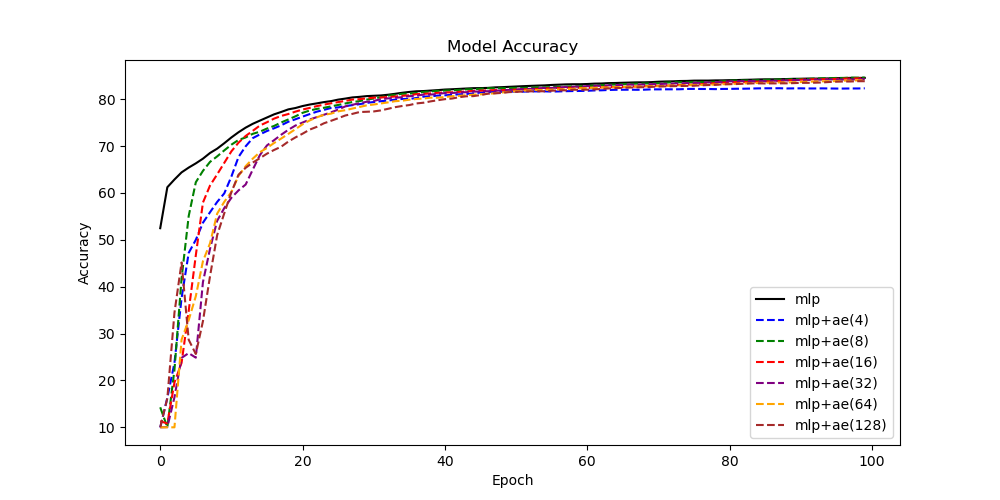

We start by comparing the autoencoder layers (method 3) with the full-rank baseline (method 1).

We see that the autoencoder layers start with an accuracy of around

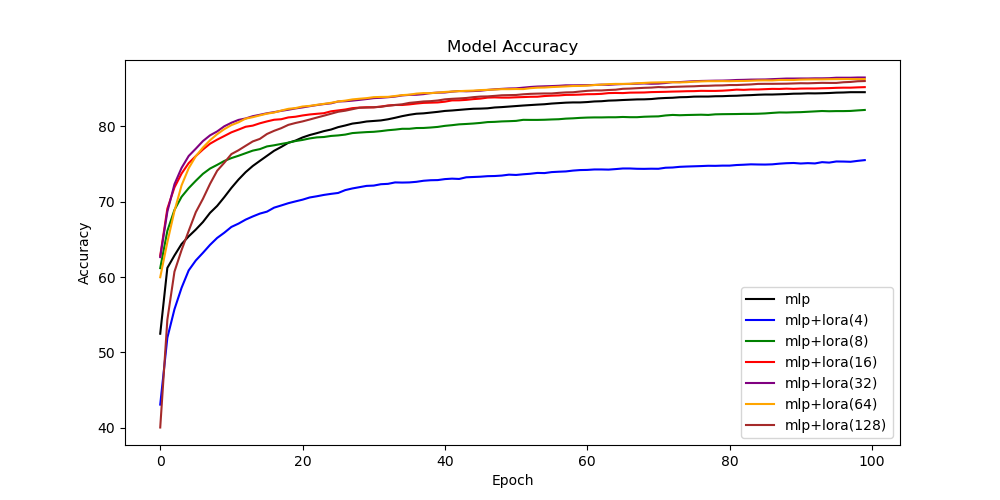

Next, we compare the LoRA layers (method 2) with the full-rank baseline (method 1).

Here, we observe that the initialization of the LoRA layers (method 2) is clearly better than the default initialization (method 3): the initial accuracy is closer to the baseline and training converges faster. Moreover, we see that using too small a rank, e.g.
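One plausible contributing factor (our hypothesis; the experiments above do not isolate it) is the scale of the effective weight $AB$ at initialization. PyTorch's default `nn.Linear` initialization draws each weight from $\mathcal{U}(-1/\sqrt{n}, 1/\sqrt{n})$, where $n$ is the layer's fan-in, which has variance $1/(3n)$. In an AE layer, $B$ has fan-in $d_{in}$ and $A$ has fan-in $r$, so each entry of $AB$ is a sum of $r$ independent products and

$$\operatorname{Var}[(AB)_{ij}] = r \cdot \frac{1}{3r} \cdot \frac{1}{3 d_{in}} = \frac{1}{9 d_{in}},$$

three times smaller than the $1/(3 d_{in})$ of a default full-rank layer, regardless of $r$. The snippet below checks this with a small Monte Carlo estimate; the sizes $d_{in} = 784$, $r = 16$ and the sample count are arbitrary choices.

```python
import random
import statistics


def default_linear_var(fan_in: int) -> float:
    """Variance of U(-1/sqrt(fan_in), 1/sqrt(fan_in)), PyTorch's default nn.Linear weight init."""
    return 1.0 / (3 * fan_in)


def ae_product_var(d_in: int, r: int) -> float:
    """Analytic variance of one entry of A @ B when both factors use the default init."""
    return r * default_linear_var(r) * default_linear_var(d_in)


def sample_ae_product_entry(d_in: int, r: int, rng: random.Random) -> float:
    """Draw one entry (A @ B)_ij = sum_k A_ik B_kj with A (fan-in r) and B (fan-in d_in)."""
    bound_a, bound_b = r ** -0.5, d_in ** -0.5
    return sum(rng.uniform(-bound_a, bound_a) * rng.uniform(-bound_b, bound_b) for _ in range(r))


d_in, r = 784, 16
rng = random.Random(0)
samples = [sample_ae_product_entry(d_in, r, rng) for _ in range(20000)]
empirical = statistics.pvariance(samples)
print(f"full-rank default:  {default_linear_var(d_in):.3e}")
print(f"AE product, exact:  {ae_product_var(d_in, r):.3e}")
print(f"AE product, sample: {empirical:.3e}")
print(f"ratio full / AE:    {default_linear_var(d_in) / ae_product_var(d_in, r):.2f}")
```

A rank-dependent initialization can compensate for this shrinkage, for example by choosing the variances of $A$ and $B$ so that their product matches $1/(3 d_{in})$.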

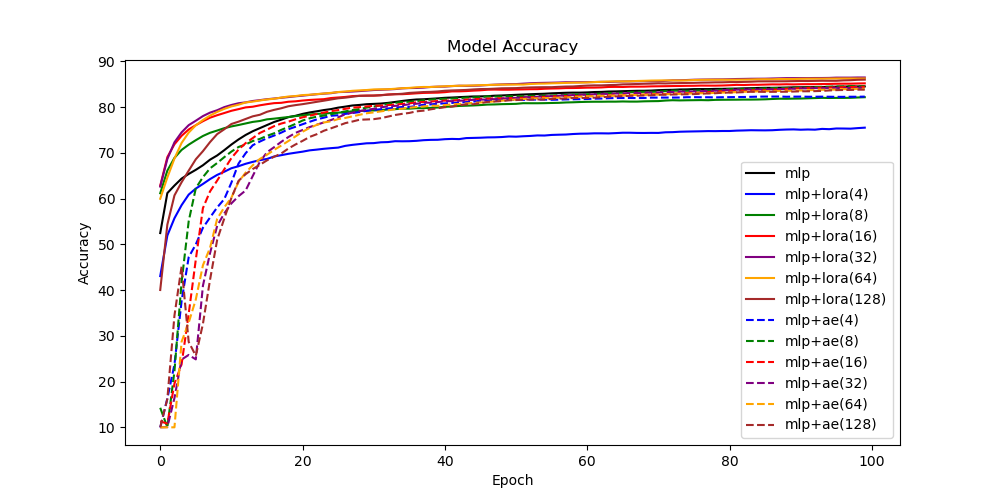

Finally, we compare all three methods in a single plot.

Overall, we observe that for this simple FashionMNIST test case it is beneficial to use low-rank representations of the weight matrices directly, as long as they are initialized appropriately. It remains an open question whether the same holds for more difficult problems.

There has been a lot of other research on LoRA. Some related papers include:

- LoRA+ [3]: improved optimization through separate, properly scaled learning rates for $A$ and $B$
- ReLoRA [4]: low-rank pre-training
- AdaLoRA [5]: adaptively changing the rank $r$ during training
- GaLore [6]: low-rank projection of the gradient of the weight matrix $W$, which makes it possible to pre-train LLaMA 7B on a consumer GPU
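The LoRA+ idea of separate learning rates for the two factors maps directly onto PyTorch optimizer parameter groups. The sketch below uses the LoRA paper's notation ($A$ applied to the input first, $B$ on the output side and zero-initialized), with $B$ receiving the larger learning rate as proposed in [3]. The sizes, the base learning rate `1e-3` and the ratio `16` are illustrative assumptions; [3] should be consulted for how the ratio is actually chosen.

```python
import torch
import torch.nn as nn

# Hypothetical low-rank factors in the LoRA paper's notation: delta_W = B @ A.
d, k, r = 512, 784, 8
A = nn.Parameter(torch.randn(r, k) * 0.02)  # input-side factor
B = nn.Parameter(torch.zeros(d, r))  # output-side factor, zero-initialized as in LoRA

base_lr = 1e-3  # illustrative learning rate for A
lr_ratio = 16.0  # illustrative ratio lr_B / lr_A

# One parameter group per factor, each with its own learning rate.
optimizer = torch.optim.AdamW(
    [
        {"params": [A], "lr": base_lr},
        {"params": [B], "lr": base_lr * lr_ratio},
    ]
)

for group in optimizer.param_groups:
    print(group["lr"])
```

Any other optimizer in `torch.optim` accepts the same list of parameter-group dictionaries, so the ratio can be changed without touching the model code.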

[1] Edward J Hu, Yelong Shen, Phillip Wallis, Zeyuan Allen-Zhu, Yuanzhi Li, Shean Wang, Lu Wang, & Weizhu Chen (2022). LoRA: Low-Rank Adaptation of Large Language Models. In: International Conference on Learning Representations. Code: https://github.com/microsoft/LoRA.

[2] https://pytorch.org/tutorials/beginner/basics/quickstart_tutorial.html

[3] Soufiane Hayou, Nikhil Ghosh, & Bin Yu (2024). LoRA+: Efficient Low Rank Adaptation of Large Models. arXiv preprint. Code: https://github.com/nikhil-ghosh-berkeley/loraplus.

[4] Vladislav Lialin, Sherin Muckatira, Namrata Shivagunde, & Anna Rumshisky (2024). ReLoRA: High-Rank Training Through Low-Rank Updates. In: The Twelfth International Conference on Learning Representations. Code: https://github.com/Guitaricet/relora.

[5] Qingru Zhang, Minshuo Chen, Alexander Bukharin, Nikos Karampatziakis, Pengcheng He, Yu Cheng, Weizhu Chen, & Tuo Zhao (2023). AdaLoRA: Adaptive Budget Allocation for Parameter-Efficient Fine-Tuning. arXiv preprint. Code: https://github.com/qingruzhang/adalora.

[6] Jiawei Zhao, Zhenyu Zhang, Beidi Chen, Zhangyang Wang, Anima Anandkumar, & Yuandong Tian (2024). GaLore: Memory-Efficient LLM Training by Gradient Low-Rank Projection. arXiv preprint. Code: https://github.com/jiaweizzhao/GaLore.