
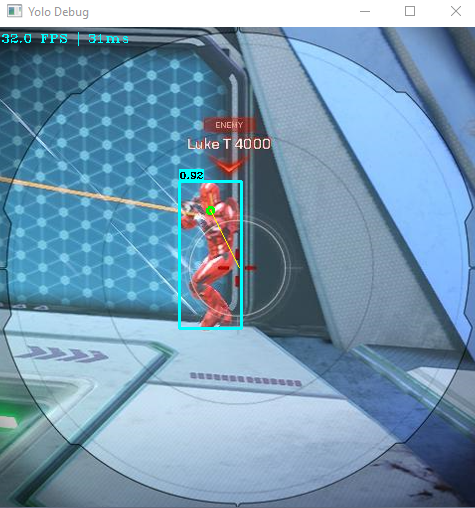

This project started as an experiment: is it possible to "cheat" in FPS games using object detection?

I already knew that object detection extracts valuable information from images and is used in many sectors, from social media algorithms to basic quality control. I've had the opportunity to work with image recognition, and in my spare time I have also built my own scrapers that classify images for various use cases. But all of that works on still images from different sources, where there is no real hurry to get the inference results.

I wanted to find out whether it would be feasible to run inference on an FPS game in real time and then use the inference results to our advantage. The biggest question was whether this would be practical, i.e. fast enough: the inference would need to run on the same machine as the game, and the game itself would be competing for the GPU.

Traditional video game hacking works by reading process memory, and anti-cheats try to detect and block these reads. Object detection takes a totally different approach: no memory reading at all, so it could potentially go undetected by anti-cheat. Another issue is how to send input to the game without triggering any flags. The main goal of this project is to showcase a POC proving that this is indeed currently possible with relatively affordable equipment.

I do not condone any hacking: it ruins the fun for you and for other players. This project was created just to show that it is possible to "cheat" using object detection. This is also my first bigger Python project and my first time ever using multiprocessing and threads, so the code could surely benefit from optimization. In the end I'm happy with its performance: I managed to run inference at ~28 ms (~30 FPS) while the game was running at high settings at 140+ FPS.

I won't go into details on how to create your own custom models, as there are far better tutorials on that. If you are going to create your own model, you should have at least an intermediate understanding of image recognition. Creating a valid dataset and analyzing the model's results can be challenging if you are totally new to it. That said, the YOLOv5 GitHub repository is a good starting point.

Here is a list of the programs and platforms I used for this project:

- YOLOv5 Object detection (custom model)

- Google Colab to train my model; it offers free GPUs (e.g. my 300-epoch run took only 3 h)

- CVAT to label my datasets

- Roboflow to enrich these datasets

- Interception_py for mouse hooking (more on why later)

This repo contains two pretrained models that detect enemies in Splitgate.

best.pt is trained on 600+ images for 300 epochs.

bestv2.pt is then refined from best.pt with 1500+ images and another 300 epochs.

These models only work on Splitgate - if you want to test this out in different games, you will need to create your own models.

The Interception driver was chosen because it hooks into your mouse at the OS level, has low latency, and does not trigger the virtual_mouse flag, which anti-cheat software may look for.

If you aren't comfortable installing that driver, you will need to alter inter.py to use e.g. pyautogui or mouse instead; however, latency might become an issue.

If you are getting inference issues with YOLOv5, or it isn't finding any targets, try downgrading PyTorch's CUDA version. For example, I have CUDA 11.7+ installed but needed to use the PyTorch build for CUDA 10.2.
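Before downgrading, it can help to confirm which CUDA version your installed PyTorch build was compiled against, since that can differ from the CUDA toolkit installed on your system. Below is a minimal sketch using standard PyTorch attributes. The function name `torch_cuda_report` is just illustrative and not part of this repo.

```python
def torch_cuda_report():
    """Return basic PyTorch/CUDA build info, or None if PyTorch is not installed."""
    try:
        import torch
    except ImportError:
        return None
    report = {
        "torch": torch.__version__,
        # CUDA version this PyTorch build was compiled with (None for CPU-only builds)
        "cuda_build": torch.version.cuda,
        # Whether PyTorch can actually see a usable CUDA device right now
        "cuda_available": torch.cuda.is_available(),
    }
    if report["cuda_available"]:
        report["device"] = torch.cuda.get_device_name(0)
    return report


if __name__ == "__main__":
    print(torch_cuda_report())
```

If `cuda_build` does not match what you expect, reinstalling PyTorch with a different CUDA build is the downgrade described above.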

Once the steps above are done, you only need to clone the repo:

git clone https://github.com/matias-kovero/ObjectDetection.git

I have stripped the smooth aim, as I believe it would harm Splitgate's community; I don't want to distribute a plug-and-play repository. This was only meant to show that this is currently possible. Of course, if you have the skill set you could add your own aim functions, but that is up to you. Currently the code has a really simple aim movement that will more likely get you flagged, but it still proves my point that you can "hack" with this.

main.py is the main entry point, and its parameters are defined there. Example usage:

python .\main.py --source 480

You can change --source and --img for better performance (a smaller --img means faster inference, but accuracy suffers).

If you have a beefy GPU and want better accuracy, try setting --img to 640 and --source to whatever you like.
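As a rough rule of thumb (an assumption, not a measurement from this project), detector cost grows with the number of input pixels. Going from a 480 to a 640 square input therefore processes about 1.78x as many pixels.

YOLOv5 also expects the inference size to be a multiple of the model's maximum stride (32 for the standard models) and rounds other values up. Whether main.py passes --img through that check is not stated here. The helper names below are hypothetical.

```python
import math


def round_to_stride(img_size: int, stride: int = 32) -> int:
    """Round an inference size up to the next multiple of the model stride."""
    return math.ceil(img_size / stride) * stride


def relative_pixels(img_a: int, img_b: int) -> float:
    """How many times more pixels a square img_a input has than a square img_b input."""
    return (img_a / img_b) ** 2


print(round_to_stride(480))  # 480
print(round_to_stride(500))  # 512
print(f"{relative_pixels(640, 480):.2f}")  # 1.78
```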

If you are creating your own dataset, you might find gather.py useful, as I used it to gather my images. It is currently set to take a screenshot while the left mouse button is pressed. After that it waits out a 10-second cooldown before allowing a new screenshot. The source code should be readable, so adapt it as you like.
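The capture rule described above (screenshot on left click, then a 10-second cooldown) boils down to a simple rate limiter. Here is a minimal sketch of that gate on its own. The `Cooldown` class is hypothetical and not taken from gather.py, and the actual mouse hook and screenshot calls are deliberately left out.

```python
import time


class Cooldown:
    """Allow an action at most once per `seconds` interval (hypothetical helper)."""

    def __init__(self, seconds: float, clock=time.monotonic):
        self.seconds = seconds
        self.clock = clock  # injectable so the gate can be tested without waiting
        self._last = None   # time of the last allowed action, None if never

    def ready(self) -> bool:
        """Return True (and restart the cooldown) if enough time has passed."""
        now = self.clock()
        if self._last is None or now - self._last >= self.seconds:
            self._last = now
            return True
        return False


# Usage idea: `pressed` and `take_screenshot` stand in for your own capture code.
# gate = Cooldown(10.0)
# if pressed and gate.ready():
#     take_screenshot()
```

Checking the button state first (`pressed and gate.ready()`) means the cooldown only restarts when a screenshot is actually taken. A monotonic clock avoids surprises if the system time changes mid-session.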

python .\gather.py

I was surprised how "easily" you could run inference and play the game with a relatively low-budget GPU.

My 1660 Super managed to run inference with about 28 ms delay (~30 FPS), and hooking it up with smooth aim created a disgusting aimbot.
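To put these numbers in perspective, here is the arithmetic as a small sketch (function names are illustrative). A 28 ms inference corresponds to at most about 36 inferences per second, in line with the ~30 FPS quoted above. At 140 game FPS a new frame is rendered roughly every 7 ms, so each detection result is about four rendered frames old by the time it is available.

```python
def ms_to_fps(latency_ms: float) -> float:
    """Convert a per-frame latency in milliseconds to frames per second."""
    return 1000.0 / latency_ms


def frames_per_inference(inference_ms: float, game_fps: float) -> float:
    """How many game frames are rendered during one inference pass."""
    return inference_ms * game_fps / 1000.0


print(f"{ms_to_fps(28):.1f}")                  # 35.7
print(f"{frames_per_inference(28, 140):.1f}")  # 3.9
```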

I really don't know how anti-cheats could detect this kind of cheating. The biggest issue is still how to send human-like mouse movement to the game.