Self-driving bots for the Robotini racing simulator with deep reinforcement learning (RL). The deep RL algorithm used to train these bots is deep (recurrent) deterministic policy gradients (DDPG/RDPG) from the TensorFlow Agents framework.

Simulator socket connection and camera frame processing code is partially based on the codecamp-2021-robotini-car-template project (not (yet?) public).

However, this project moves all socket communication to separate OS processes and implements message passing with concurrent queues.

This is to avoid having the TensorFlow Python API runtime compete for thread time with the socket communication logic, because Python's global interpreter lock (GIL) lets only one thread execute Python bytecode at a time.
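The process split described above can be sketched roughly as follows. This is a minimal illustration, not the project's actual code: `socket_reader`, `collect_frames`, the newline-delimited message format, and the use of `socket.socketpair()` as a stand-in for the simulator connection are all assumptions made for the example.

```python
import multiprocessing as mp
import socket


def socket_reader(conn, frames):
    """Child process: read newline-delimited messages and enqueue them."""
    with conn, conn.makefile("rb") as stream:
        for line in stream:
            frames.put(line.rstrip(b"\n"))
    frames.put(None)  # sentinel: the peer stopped sending


def collect_frames(messages):
    """Send messages over a local socket pair and read them back via a queue."""
    # Assumption: the "fork" start method (Linux/macOS) keeps the sketch short.
    ctx = mp.get_context("fork")
    ours, theirs = socket.socketpair()  # stand-in for the simulator socket
    frames = ctx.Queue()
    reader = ctx.Process(target=socket_reader, args=(theirs, frames), daemon=True)
    reader.start()
    theirs.close()  # the child process holds its own copy

    for message in messages:
        ours.sendall(message + b"\n")
    ours.shutdown(socket.SHUT_WR)  # signal end-of-stream to the reader
    ours.close()

    received = []
    while True:
        item = frames.get(timeout=5)
        if item is None:
            break
        received.append(item)
    reader.join(timeout=5)
    return received


if __name__ == "__main__":
    print(collect_frames([b"frame-0", b"frame-1"]))
```

The consumer (here the main process, in the real system the TensorFlow step loop) only touches the queue, so blocking socket reads never hold the GIL of the process that runs the training code.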

All the custom multiprocessing scribble could probably be replaced with a few lines of external library code, e.g. Celery.

Screen recordings from a 20-hour training session.

At the beginning, the agent has no policy for choosing actions based on inputs:

eval-step0.mp4

After 2 hours of training, the agent has learned to avoid the walls but is still making mistakes:

eval-step2000.mp4

After 20 hours of training, the agent is driving somewhat ok:

eval-step16000.mp4

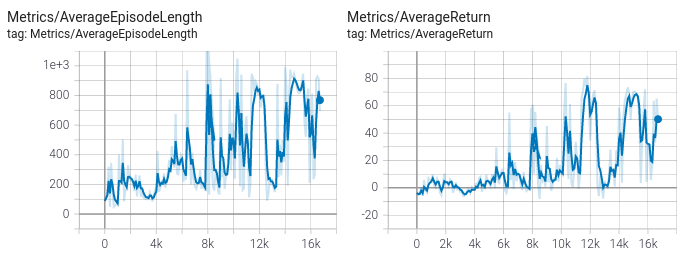

TensorBoard provides visualizations for all metrics (only 2 shown here):

Here we can see how the average episode length (number of steps from spawn to crash or full lap) and average return (discounted sum of rewards for one episode) improve over time. Horizontal axis is the number of training steps.
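For reference, the return of a single episode can be computed as below. This is a small sketch of the standard definition; the function name and the discount factor `gamma=0.99` are assumptions for illustration, not values taken from this project's configuration.

```python
def discounted_return(rewards, gamma=0.99):
    """Discounted sum of rewards: r_0 + gamma * r_1 + gamma^2 * r_2 + ..."""
    total = 0.0
    # Accumulate from the last step backwards so each step is discounted once more.
    for reward in reversed(rewards):
        total = reward + gamma * total
    return total


if __name__ == "__main__":
    # Three steps with reward 1 each and gamma = 0.5: 1 + 0.5 + 0.25
    print(discounted_return([1.0, 1.0, 1.0], gamma=0.5))
```

The average return shown in TensorBoard is then the mean of this quantity over the evaluated episodes.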

By looking at the metrics at step 16k, we can see a global minimum of approx. 400 avg. episode length and 20 avg. return during evaluation.

Therefore, the agent driving car env7_step16000_eval seen in the video under "After 16000 training steps" might not be the optimal choice for a trained agent.

This repository contains an agent from training step 11800.

web-ui.mp4

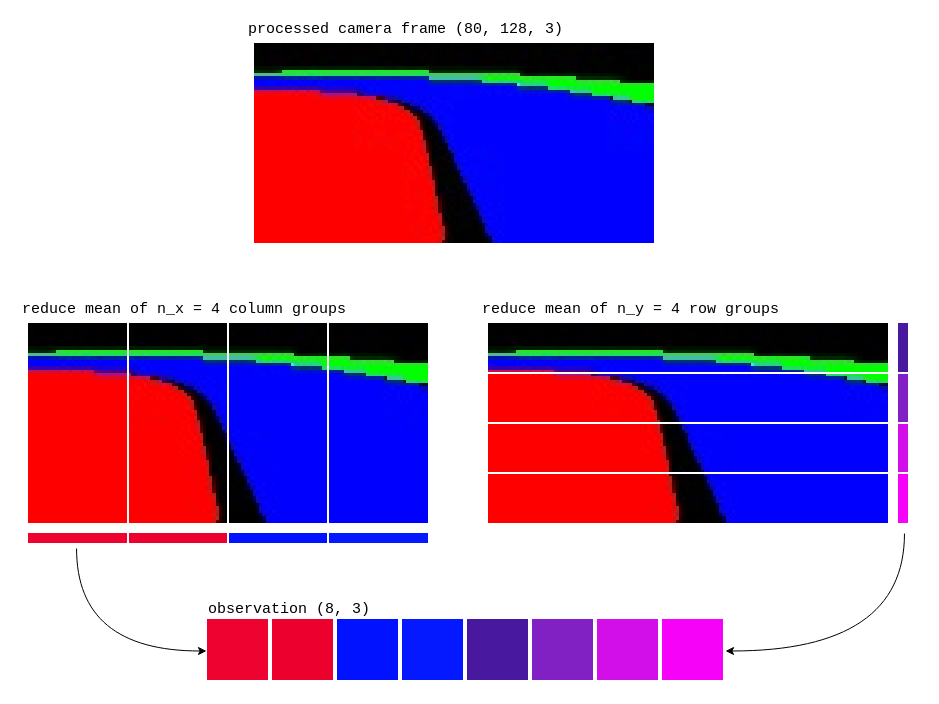

The neural network sees 8 RGB pixels as input at each time step, also called the observation.

These are computed by averaging 4 non-overlapping row and column groups of the input camera frame:
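As a rough sketch of this averaging (the repository's actual feature code may differ), the frame can be split into 4 horizontal bands and 4 vertical bands, with each band averaged down to one RGB pixel. The function name `frame_to_observation`, the `(height, width, 3)` frame layout, and the reading of "row and column groups" as 4 + 4 bands are assumptions.

```python
import numpy as np


def frame_to_observation(frame, groups=4):
    """Reduce an (H, W, 3) frame to 2 * groups mean RGB pixels."""
    frame = np.asarray(frame, dtype=np.float32)
    # Non-overlapping bands of rows and of columns.
    row_bands = np.array_split(frame, groups, axis=0)
    col_bands = np.array_split(frame, groups, axis=1)
    # Average every band over both spatial axes, leaving one RGB triple each.
    row_means = [band.mean(axis=(0, 1)) for band in row_bands]
    col_means = [band.mean(axis=(0, 1)) for band in col_bands]
    return np.stack(row_means + col_means)  # shape: (2 * groups, 3)


if __name__ == "__main__":
    frame = np.zeros((8, 8, 3))
    frame[:2] = 1.0  # top two rows are white
    print(frame_to_observation(frame).shape)
```

With `groups=4` this yields the 8 RGB pixels mentioned above: 4 from the row bands followed by 4 from the column bands.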

Experience gathering is done in parallel:

explore-step16000.mp4

- Linux or macOS

- Robotini simulator - fork that exports additional car data over the logging socket.

- Unity - for running the simulator

- Redis - Fast in-memory key-value store, used as a concurrent cache for storing car state.

- Python >= 3.6 + all deps from `requirements.txt`

Training a new agent can take several hours even on fast hardware due to the slow, real-time data collection. There are no guarantees that an agent trained on one track will work on other tracks.

If you still want to try training a new agent from scratch, here's how to get started:

- Start the simulator (use the fork) using this config file.

- If using a single machine for both training and running the simulator, set `SIMULATOR_HOST := localhost` in the Makefile. Otherwise, set the address of the simulator machine.

- On the training machine, run these commands in separate terminals:

  - `make start_redis`
  - `make train_ddpg`
  - `make start_tensorboard`
  - `make start_monitor`

- Open two tabs in a browser and go to:

  - `localhost:8080` - live feed
  - `localhost:6006` - TensorBoard

- `./tf-data` contains TensorBoard logging data, saved agents/policies, and checkpoints, i.e. all training state. It can (and should) be deleted between unrelated training runs. Note: if a long training session produced a good agent, remember to back it up from `./tf-data/saved_policies` before deleting. Perhaps even back up the whole `tf-data` directory.

- Interrupting or terminating the training script may (rarely) leave processes running. Check e.g. with `ps ax | grep 'scripts/train_ddpg.py'` or `pgrep -f train_ddpg.py` and clean up unwanted processes with e.g. `pkill -ef 'python3.9 scripts/train_ddpg.py'` (adjust the pattern depending on the `ps ax` output).

- Every N training steps, the complete training state is written to `./tf-data/checkpoints`. This makes the training state persistent and allows you to continue training from the saved checkpoint. Note that this includes the full, pre-allocated replay buffer, which might make each checkpoint up to 2 GiB in size.

- For end-to-end debugging of the whole system, try `make run_debug_policy`. This will run a trivial hand-written policy that computes the amount of turn from RGB colors (see `robotini_ddpg.features.compute_turn_from_color_mass`).

- If the training log gets filled with `camera frame buffer is empty, skipping step` warnings, then the TensorFlow environment step loop is processing frames faster than we read them from the simulator over the socket. Known causes are that the simulator is under heavy load or that the machine is sleeping. Also, the simulator dumps spectator logs to `race.log`, which can grow large if the simulator is running for a long time.

- I have no idea if the hyperparameters in `./scripts/config.yml` are optimal. Some tweaking could perhaps improve the results and training speed drastically.