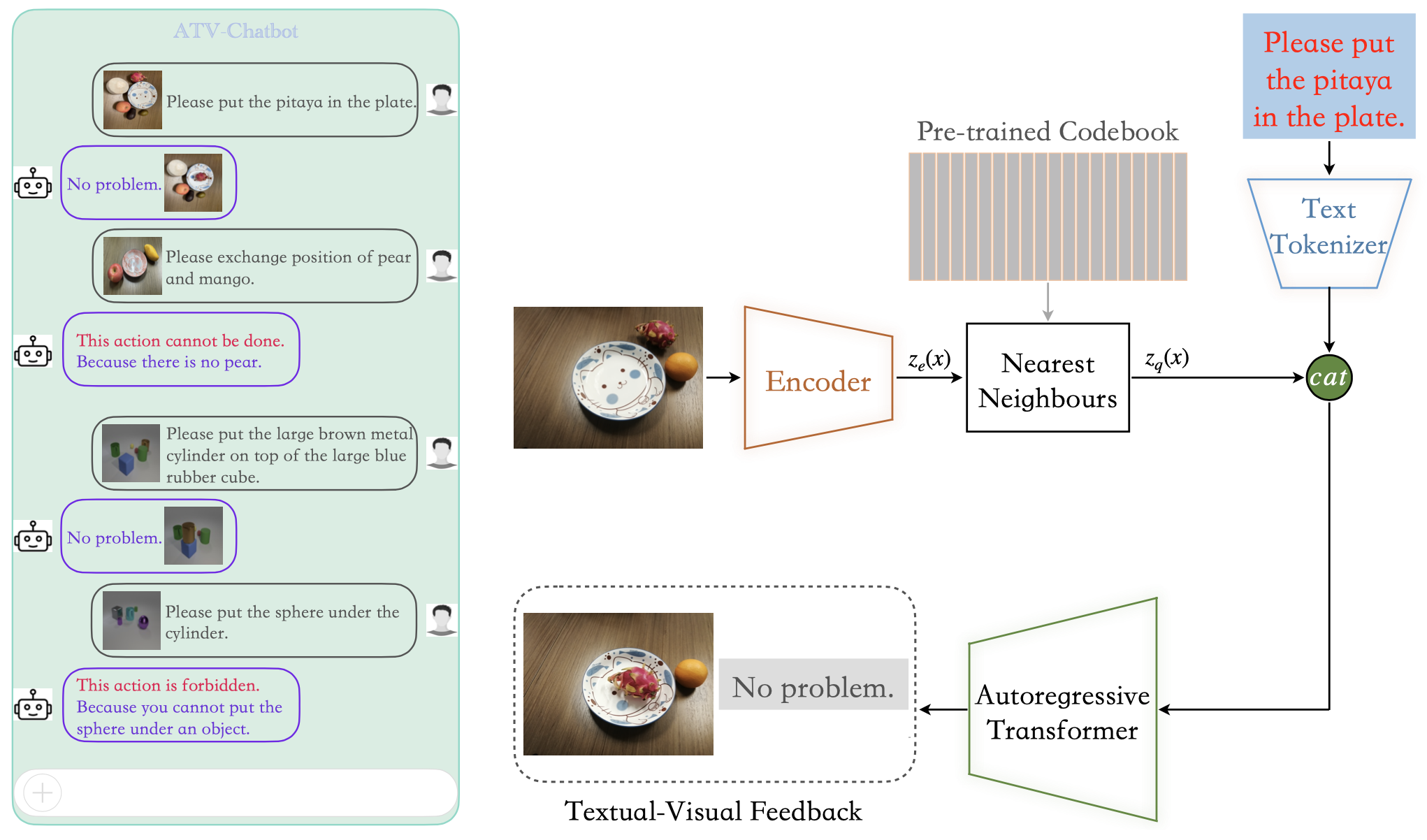

The official repository for Accountable Textual-Visual Chat Learns to Reject Human Instructions in Image Re-creation.

- Python 3.8

- matplotlib == 3.1.1

- numpy == 1.19.4

- pandas == 0.25.1

- scikit_learn == 0.21.3

- torch == 1.8.0

We provide an environment file, environment.yml, containing the required dependencies. Clone the repo and run the following command in the repository root:

conda env create -f environment.yml
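Once the environment is created, it can be useful to confirm that the installed packages match the pins listed above. The following is a minimal sketch, not part of the repository: the helper name `check_pins` is hypothetical, and it assumes the distribution name for scikit_learn is `scikit-learn` and that a local build suffix such as `+cu111` on the torch version can be ignored.

```python
from importlib import metadata

# Pins copied from the requirements list above (distribution name -> version).
# "scikit-learn" is assumed to be the installed distribution name for scikit_learn.
REQUIRED = {
    "matplotlib": "3.1.1",
    "numpy": "1.19.4",
    "pandas": "0.25.1",
    "scikit-learn": "0.21.3",
    "torch": "1.8.0",
}


def check_pins(required, get_version=metadata.version):
    """Return {name: (wanted, found)} for each missing or mismatched package."""
    problems = {}
    for name, wanted in required.items():
        try:
            found = get_version(name)
        except metadata.PackageNotFoundError:
            found = None
        # Ignore local build tags such as "+cu111" on torch wheels.
        if found is None or found.split("+")[0] != wanted:
            problems[name] = (wanted, found)
    return problems


if __name__ == "__main__":
    for name, (wanted, found) in check_pins(REQUIRED).items():
        print(f"{name}: wanted {wanted}, found {found}")
```

Run inside the activated environment, the script prints one line per package that is missing or differs from its pin, and nothing if every pin is satisfied.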

Please refer to DOWNLOAD.md for dataset preparation.

Please refer to pretrained-models to download the released models.

- To train the first stage:

bash dist_train_vae.sh ${DATA_NAME} ${NODES} ${GPUS}

- To train the second stage:

bash dist_train_atvc.sh ${VAE_PATH} ${DATA_NAME} ${NODES} ${GPUS}

where:

- ${VAE_PATH}: path of the pretrained VAE model.

- ${DATA_NAME}: dataset for training, e.g. CLEVR-ATVC, Fruit-ATVC.

- ${NODES}: number of nodes.

- ${GPUS}: number of GPUs per node.
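When launching both stages from a Python driver, the argument order of the two scripts can be captured in one place. This is only a sketch under assumptions: `train_command` is a hypothetical helper that is not in the repository, and the values `vae.pt`, one node, and eight GPUs in the usage lines are placeholders.

```python
import shlex


def train_command(stage, data_name, nodes, gpus, vae_path=None):
    """Return the argv list for one of the two training scripts.

    The argument order mirrors the commands shown above; this helper
    itself is hypothetical and not shipped with the repository.
    """
    if stage == 1:
        return ["bash", "dist_train_vae.sh", data_name, str(nodes), str(gpus)]
    if stage == 2:
        if vae_path is None:
            raise ValueError("stage 2 needs the path of the pretrained VAE model")
        return ["bash", "dist_train_atvc.sh", vae_path, data_name,
                str(nodes), str(gpus)]
    raise ValueError(f"unknown stage: {stage}")


if __name__ == "__main__":
    # Placeholder values: 1 node, 8 GPUs, and a VAE checkpoint named vae.pt.
    print(shlex.join(train_command(1, "CLEVR-ATVC", 1, 8)))
    print(shlex.join(train_command(2, "CLEVR-ATVC", 1, 8, vae_path="vae.pt")))
```

The returned list can be passed directly to `subprocess.run(..., check=True)` from the repository root, which avoids building a shell string by hand.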

- To test image reconstruction ability of the first stage:

bash gen_vae.sh ${GPU} ${VAE_PATH} ${IMAGE_PATH}

- To test the final ATVC model:

bash gen_atvc.sh ${GPU} ${ATVC_PATH} ${TEXT_QUERY} ${IMAGE_PATH}

where:

- ${GPU}: id of one GPU, e.g. 0.

- ${VAE_PATH}: path of the pretrained VAE model.

- ${IMAGE_PATH}: image path for reconstruction, e.g. input.png.

- ${ATVC_PATH}: path of the pretrained ATVC model.

- ${TEXT_QUERY}: text-based query, e.g. "Please put the small blue cube on top of the small yellow cylinder.".
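Because the text query contains spaces, it has to reach gen_atvc.sh as a single argument. The sketch below shows one way to build and quote the command from Python; `gen_atvc_command` is a hypothetical helper, `atvc.pt` is a placeholder checkpoint name, and whether the script forwards the query unchanged internally is not verified here.

```python
import shlex


def gen_atvc_command(gpu, atvc_path, text_query, image_path):
    """Return the argv list for gen_atvc.sh, keeping the query as one argument.

    Hypothetical helper; the argument order follows the command shown above.
    """
    return ["bash", "gen_atvc.sh", str(gpu), atvc_path, text_query, image_path]


if __name__ == "__main__":
    cmd = gen_atvc_command(
        0,
        "atvc.pt",  # placeholder path for the pretrained ATVC model
        "Please put the small blue cube on top of the small yellow cylinder.",
        "input.png",
    )
    # shlex.join quotes the query so it can be pasted into a shell safely.
    print(shlex.join(cmd))
```

Passing the list form to `subprocess.run` sidesteps shell quoting entirely; the `shlex.join` output is only needed when the command is copied into a terminal.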

ATVC is released under the Apache 2.0 license.

If you find this code useful for your research, please cite our paper:

@article{zhang2023accountable,
  title={Accountable Textual-Visual Chat Learns to Reject Human Instructions in Image Re-creation},
  author={Zhang, Zhiwei and Liu, Yuliang},
  journal={arXiv preprint arXiv:2303.05983},
  year={2023}
}

Our code builds on DALLE-pytorch and CLIP. We would like to thank everyone who helped label text-image pairs and participated in the human evaluation experiments. We hope our explorations and findings contribute valuable insights into the accountability of textual-visual generative models.

This project is developed by Zhiwei Zhang (@zzw-zwzhang) and Yuliang Liu (@Yuliang-Liu).