The Most Educated Places in the U.S.

Earlier this week I came across an article on the most educated places in the US. I thought it would be cool to try to recreate their findings and expand upon by taking into account the uncertainty of the survey results.

Let's get started.

Setup

%matplotlib inlineimport matplotlib.pyplot as plt

import numpy as np

import pandas as pd

import requests

import seaborn as sns

import usRead data

We need two variables for each place in the US:

- Percent of population over 25 with a bachelor's degree or higher (called educational attainment from now on)

- Total population

We'll also grab the margin of error for these variables. We'll use them later.

def state_and_place(df):

"""Extracts state and place from the original identification variable."""

columns = (

df

.id

.astype(str)

.str.extract('(?P<state_fips>\d{1,2})(?P<place_fips>\d{5})', expand=True)

.astype(int)

)

return df.join(columns)def read_acs(year):

"""Reads and formats ACS educational attainment data for a given year."""

filename = '../data/ACS_{year}_5YR_S1501/ACS_{year}_5YR_S1501_with_ann.csv'.format(year=str(year)[-2:])

na_values = ('**', '-', '+', '***', '*****', 'N', '(X)')

variables = {

'Id2': 'id',

'Geography': 'place',

"Total; Estimate; Percent bachelor's degree or higher": 'pct_bachelor_plus',

"Total; Margin of Error; Percent bachelor's degree or higher": 'pct_bachelor_plus_moe'

}

return (

pd

.read_csv(

filename,

usecols=variables.keys(),

na_values=na_values,

# https://www.census.gov/geo/maps-data/data/tiger/char_encoding.html

encoding='ISO-8859-1',

skiprows=1

)

.rename(columns=variables)

.pipe(state_and_place)

.drop('id', axis=1)

.set_index(['state_fips', 'place_fips'])

)def read_population(year):

"""Collects and formats population data from the Census API for a given year."""

url = 'http://api.census.gov/data/{}/acs5?get=B01003_001E,B01003_001M&for=place:*'.format(year)

r = requests.get(url)

data = r.json()

columns = {

'B01003_001E': 'population',

'B01003_001M': 'population_moe',

'state': 'state_fips',

'place': 'place_fips'

}

return (

pd.DataFrame(data=data[1:], columns=data[0])

.rename(columns=columns)

.apply(lambda s: s.astype(int))

.set_index(['state_fips', 'place_fips'])

)def state(df):

"""Converts state FIPS codes to state names."""

data = (

df

.index

.get_level_values('state_fips')

.astype(str)

.str.rjust(2, fillchar='0')

)

s = pd.Series(data=data, index=df.index)

return s.apply(lambda x: us.states.lookup(x).name)def read_data(year):

"""Reads and formats desired ACS data for a given year."""

acs = read_acs(year)

population = read_population(year)

return (

acs

.join(population)

.assign(state=state)

.set_index(['state', 'place'])

.sort_index(axis=1)

)df = read_data(2012)Let's check out the dataset

Whenever I work with a dataset for the first time I like to do a few things:

- Check out the shape/composition.

- Look at the top few rows.

- Look at the bottom two rows.

- Get a sense of how things are distributed.

# Composition

df.info()<class 'pandas.core.frame.DataFrame'>

MultiIndex: 29256 entries, (Alabama, Abanda CDP, Alabama) to (Wyoming, Y-O Ranch CDP, Wyoming)

Data columns (total 4 columns):

pct_bachelor_plus 29029 non-null float64

pct_bachelor_plus_moe 29029 non-null float64

population 29256 non-null int32

population_moe 29256 non-null int32

dtypes: float64(2), int32(2)

memory usage: 914.2+ KB

# Top

df.head()| pct_bachelor_plus | pct_bachelor_plus_moe | population | population_moe | ||

|---|---|---|---|---|---|

| state | place | ||||

| Alabama | Abanda CDP, Alabama | 0.0 | 45.8 | 42 | 31 |

| Abbeville city, Alabama | 16.9 | 5.7 | 2699 | 18 | |

| Adamsville city, Alabama | 12.3 | 3.9 | 4537 | 26 | |

| Addison town, Alabama | 17.8 | 10.5 | 826 | 284 | |

| Akron town, Alabama | 7.9 | 8.7 | 369 | 141 |

# Bottom

df.tail()| pct_bachelor_plus | pct_bachelor_plus_moe | population | population_moe | ||

|---|---|---|---|---|---|

| state | place | ||||

| Wyoming | Woods Landing-Jelm CDP, Wyoming | 40.6 | 17.7 | 91 | 102 |

| Worland city, Wyoming | 20.4 | 3.9 | 5426 | 18 | |

| Wright town, Wyoming | 14.3 | 4.3 | 2216 | 382 | |

| Yoder town, Wyoming | 15.9 | 15.0 | 149 | 104 | |

| Y-O Ranch CDP, Wyoming | 0.0 | 16.1 | 223 | 109 |

# Summary

df.dropna().describe()| pct_bachelor_plus | pct_bachelor_plus_moe | population | population_moe | |

|---|---|---|---|---|

| count | 29029.000000 | 29029.000000 | 2.902900e+04 | 29029.000000 |

| mean | 20.028689 | 9.109429 | 7.903848e+03 | 190.236625 |

| std | 16.372341 | 11.247205 | 6.613706e+04 | 221.437532 |

| min | 0.000000 | 0.200000 | 1.000000e+00 | 0.000000 |

| 25% | 9.100000 | 4.000000 | 3.460000e+02 | 48.000000 |

| 50% | 15.700000 | 5.800000 | 1.106000e+03 | 123.000000 |

| 75% | 26.000000 | 9.200000 | 4.100000e+03 | 245.000000 |

| max | 100.000000 | 100.000000 | 8.199221e+06 | 3536.000000 |

Population vs. Attainment

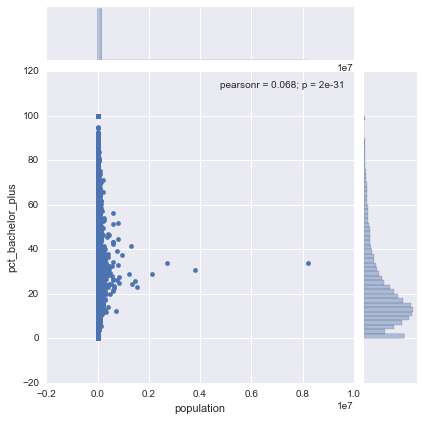

We can see right away that we're dealing with some pretty heavily skewed data. Most places are teeny-tiny, while some are massive (I see you New York). Most also tend to have educational attainment rates (i.e., percent of the population over 25 with bachelor's degrees) around 10-20%, though some go all the way to 100%! More on that later.

sns.jointplot('population', 'pct_bachelor_plus', data=df);Recreating the original metric

To make sure I'm starting off right, I wanted to try to recreate the original ranking. Fortunately the author was explicit with his methodology, so recreating was a breeze.

colleges = (

'Stanford CDP, California',

'University at Buffalo CDP, New York',

'University of Pittsburgh Johnstown CDP, Pennsylvania',

'Mississippi State CDP, Mississippi',

'St. Vincent College CDP, Pennsylvania',

'University CDP, Mississippi'

)def highest_attainment_orig(df, filter_=True):

"""Gets the place with the higehst educational attainment in each state."""

if filter_:

# Only include places with at least 1,000 people, excluding colleges

subset = df.loc[(df.population > 1000) & (df.index.map(lambda x: x[1] not in colleges))]

else:

subset = df

# Find the place with the higehst attainment by state

idx = (

subset

.groupby(level='state')

.pct_bachelor_plus

.transform('max')

.eq(subset.pct_bachelor_plus)

)

return subset.loc[idx].reset_index('state', drop=True)(

df

.pipe(highest_attainment_orig)

.pct_bachelor_plus

.sort_values(ascending=False)

)place

Chevy Chase town, Maryland 92.7

Ladera CDP, California 89.3

Winnetka village, Illinois 88.4

Mission Hills city, Kansas 88.1

Short Hills CDP, New Jersey 87.1

Belle Meade city, Tennessee 86.4

Lost Creek CDP, Texas 86.3

Scarsdale village, New York 84.9

Castleton CDP, Vermont 84.2

Shorewood Hills village, Wisconsin 83.6

Yarrow Point town, Washington 83.1

Old Greenwich CDP, Connecticut 83.0

Hanover CDP, New Hampshire 83.0

Mountain Brook city, Alabama 82.9

Ladue city, Missouri 82.6

Druid Hills CDP, Georgia 81.8

The Village of Indian Hill city, Ohio 81.2

McLean CDP, Virginia 81.2

Edgeworth borough, Pennsylvania 81.2

Wellesley CDP, Massachusetts 80.8

Pine Brook Hill CDP, Colorado 80.5

Kiawah Island town, South Carolina 80.5

Meridian Hills town, Indiana 79.5

Indian Hills city, Kentucky 79.5

University Heights city, Iowa 78.8

Moose Wilson Road CDP, Wyoming 78.3

East Grand Rapids city, Michigan 77.5

Nichols Hills city, Oklahoma 76.9

Fearrington Village CDP, North Carolina 76.0

Key Biscayne village, Florida 76.0

Sandia Heights CDP, New Mexico 73.6

North Oaks city, Minnesota 73.3

Emigration Canyon CDP, Utah 71.8

Paradise Valley town, Arizona 71.6

Rehoboth Beach city, Delaware 68.9

Kingston CDP, Rhode Island 67.7

Cumberland Center CDP, Maine 67.6

Lake Oswego city, Oregon 66.5

Oak Hills Place CDP, Louisiana 66.4

Hidden Spring CDP, Idaho 66.2

Madison city, Mississippi 63.1

Maunawili CDP, Hawaii 62.3

Goldstream CDP, Alaska 57.7

Dakota Dunes CDP, South Dakota 56.3

Big Sky CDP, Montana 54.3

Shepherdstown town, West Virginia 51.4

Goshen town, Arkansas 51.3

Washington city, District of Columbia 51.2

Incline Village CDP, Nevada 47.6

Wayne city, Nebraska 44.4

Fargo city, North Dakota 39.0

Name: pct_bachelor_plus, dtype: float64

Here's the original list, for comparison:

The Problem: Uncertainty

One thing I was curious about was why the author decided to include only places with more than 1,000 people. What does this list look like if we include all places?

(

df

.pipe(highest_attainment_orig, filter_=False)

.sort_values('pct_bachelor_plus', ascending=False)

)| pct_bachelor_plus | pct_bachelor_plus_moe | population | population_moe | |

|---|---|---|---|---|

| place | ||||

| Lakeview North CDP, Wyoming | 100.0 | 85.6 | 12 | 19 |

| Gloster CDP, Louisiana | 100.0 | 68.8 | 20 | 31 |

| Allen CDP, Maryland | 100.0 | 24.6 | 168 | 170 |

| Zion CDP, Oklahoma | 100.0 | 100.0 | 2 | 4 |

| Ocean CDP, Maryland | 100.0 | 100.0 | 7 | 10 |

| Seconsett Island CDP, Massachusetts | 100.0 | 65.6 | 22 | 26 |

| Prairie City CDP, South Dakota | 100.0 | 100.0 | 2 | 3 |

| Bridger CDP, Montana | 100.0 | 52.0 | 53 | 75 |

| Turtle Lake CDP, Montana | 100.0 | 51.0 | 70 | 111 |

| Mendeltna CDP, Alaska | 100.0 | 71.2 | 19 | 29 |

| Allamuchy CDP, New Jersey | 100.0 | 37.3 | 105 | 137 |

| Blawenburg CDP, New Jersey | 100.0 | 9.3 | 515 | 209 |

| Port Colden CDP, New Jersey | 100.0 | 45.9 | 45 | 71 |

| Zarephath CDP, New Jersey | 100.0 | 62.8 | 72 | 106 |

| Cobre CDP, New Mexico | 100.0 | 64.7 | 21 | 33 |

| Lumberton CDP, New Mexico | 100.0 | 74.1 | 20 | 41 |

| Manzano CDP, New Mexico | 100.0 | 100.0 | 7 | 11 |

| Pinos Altos CDP, New Mexico | 100.0 | 63.2 | 22 | 35 |

| Regina CDP, New Mexico | 100.0 | 100.0 | 5 | 8 |

| Weed CDP, New Mexico | 100.0 | 48.8 | 37 | 61 |

| Dering Harbor village, New York | 100.0 | 100.0 | 6 | 9 |

| Paul Smiths CDP, New York | 100.0 | 82.2 | 600 | 138 |

| Thousand Island Park CDP, New York | 100.0 | 32.9 | 77 | 73 |

| Bellfountain CDP, Oregon | 100.0 | 100.0 | 15 | 21 |

| Brush Creek CDP, Oklahoma | 100.0 | 37.5 | 69 | 103 |

| Campo Verde CDP, Texas | 100.0 | 92.0 | 12 | 18 |

| Little Orleans CDP, Maryland | 100.0 | 82.2 | 14 | 20 |

| Lotsee town, Oklahoma | 100.0 | 100.0 | 3 | 5 |

| Carmet CDP, California | 100.0 | 85.1 | 14 | 19 |

| Oakville CDP, California | 100.0 | 36.4 | 76 | 103 |

| ... | ... | ... | ... | ... |

| Remington CDP, Ohio | 89.7 | 15.2 | 199 | 119 |

| Mission Woods city, Kansas | 88.5 | 7.0 | 175 | 36 |

| Winnetka village, Illinois | 88.4 | 2.1 | 12210 | 54 |

| Grandfather village, North Carolina | 88.2 | 9.1 | 85 | 38 |

| Pippa Passes city, Kentucky | 88.2 | 11.5 | 605 | 215 |

| Barton Hills village, Michigan | 88.1 | 5.6 | 286 | 62 |

| University of Pittsburgh Johnstown CDP, Pennsylvania | 87.5 | 70.3 | 1453 | 254 |

| Belle Meade city, Tennessee | 86.4 | 3.3 | 3016 | 105 |

| Castleton CDP, Vermont | 84.2 | 12.6 | 1167 | 271 |

| Henderson Point CDP, Mississippi | 84.0 | 24.1 | 50 | 39 |

| Shorewood Hills village, Wisconsin | 83.6 | 5.7 | 1720 | 81 |

| Hanover CDP, New Hampshire | 83.0 | 4.8 | 8608 | 248 |

| Old Greenwich CDP, Connecticut | 83.0 | 3.8 | 6622 | 431 |

| Mountain Brook city, Alabama | 82.9 | 2.3 | 20398 | 30 |

| Ladue city, Missouri | 82.6 | 2.9 | 8524 | 49 |

| Druid Hills CDP, Georgia | 81.8 | 3.0 | 14321 | 743 |

| East Amana CDP, Iowa | 80.9 | 43.7 | 60 | 52 |

| Kiawah Island town, South Carolina | 80.5 | 5.8 | 1448 | 239 |

| Golf village, Florida | 80.3 | 7.4 | 291 | 73 |

| Woodland city, Minnesota | 79.8 | 7.2 | 453 | 94 |

| Glenbrook CDP, Nevada | 78.7 | 13.6 | 178 | 105 |

| Henlopen Acres town, Delaware | 78.3 | 10.9 | 116 | 32 |

| Keystone CDP, Nebraska | 76.1 | 29.8 | 109 | 124 |

| Hansboro city, North Dakota | 75.0 | 35.4 | 12 | 9 |

| Bluff CDP, Utah | 74.6 | 20.2 | 346 | 134 |

| Watch Hill CDP, Rhode Island | 71.1 | 13.9 | 207 | 91 |

| Cousins Island CDP, Maine | 68.4 | 14.7 | 615 | 156 |

| Hidden Spring CDP, Idaho | 66.2 | 10.4 | 2077 | 312 |

| Cammack Village city, Arkansas | 62.2 | 7.4 | 858 | 186 |

| Washington city, District of Columbia | 51.2 | 0.5 | 605759 | 0 |

76 rows × 4 columns

That's a lot of 100%s. One thing to notice is that the confidence interval for these places are massive. Zion CDP, Oklahoma, for example, has a 90% CI of [0, 100]. The interval includes every value this variable can take! While filtering these small, highly-uncertain places is a good heuristic, there are more robust ways to handle this isuse.

One way is to use the 90% least plausible value, defined as the value such that there is only a 10% chance the true parameter is lower. In other words, the smartest places, according to this procedure, are the places that are most likely to have a high percentage of people with educational attainment. This works well because even if we completely overestimate the educational attainment rates we can be sure the top places are still on top.

The great thing about the American Community Survey (ACS) is that they provide margins of error (MoE) for all of their estimate variables. Best of all, their MoE are for 90% confidence intervals. All we need to do is subtract the MoE from the estimate!

A more conservative approach

def lower_bound(df, column):

"""Gets the 90% confidence interval lower bound for a variable."""

lower = df[column] - df['{}_moe'.format(column)]

# Minimum value is 0

lower.loc[lower < 0] = 0

return lowerdef add_lower_bounds(df):

"""Adds the lower bounds for population and educational attainment."""

return (

df

.assign(

population_lower=lambda df: df.pipe(lower_bound, 'population'),

pct_bachelor_plus_lower=lambda df: df.pipe(lower_bound, 'pct_bachelor_plus')

)

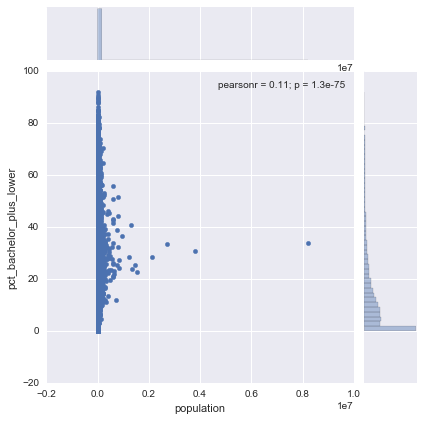

)df = df.pipe(add_lower_bounds)sns.jointplot('population', 'pct_bachelor_plus_lower', data=df);You'll notice that the educational attainment distribution is even more skewed than before! This is because so many places are small and therefore have a lot of uncertainty associated with their estimates. These places will be unlikely to show up on our list (as they shouldn't).

def highest_attainment(df):

"""Find the place with the higehst attainment by state, using the lower bound."""

subset = df.loc[df.index.map(lambda x: x[1] not in colleges)]

idx = (

subset

.groupby(level='state')

.pct_bachelor_plus_lower

.transform('max')

.eq(subset.pct_bachelor_plus_lower)

)

return (

subset

.loc[idx]

.reset_index('state', drop=True)

)def plot_highest(df):

"""Creates an errorbar plot for attainment data."""

highest = df.sort_values('pct_bachelor_plus_lower')

plt.figure(figsize=(16, 10))

plt.errorbar(

highest.pct_bachelor_plus,

np.arange(len(highest)),

xerr=highest.pct_bachelor_plus_moe,

fmt='o'

)

plt.xlim(40, 100)

plt.ylim(-1, len(highest))

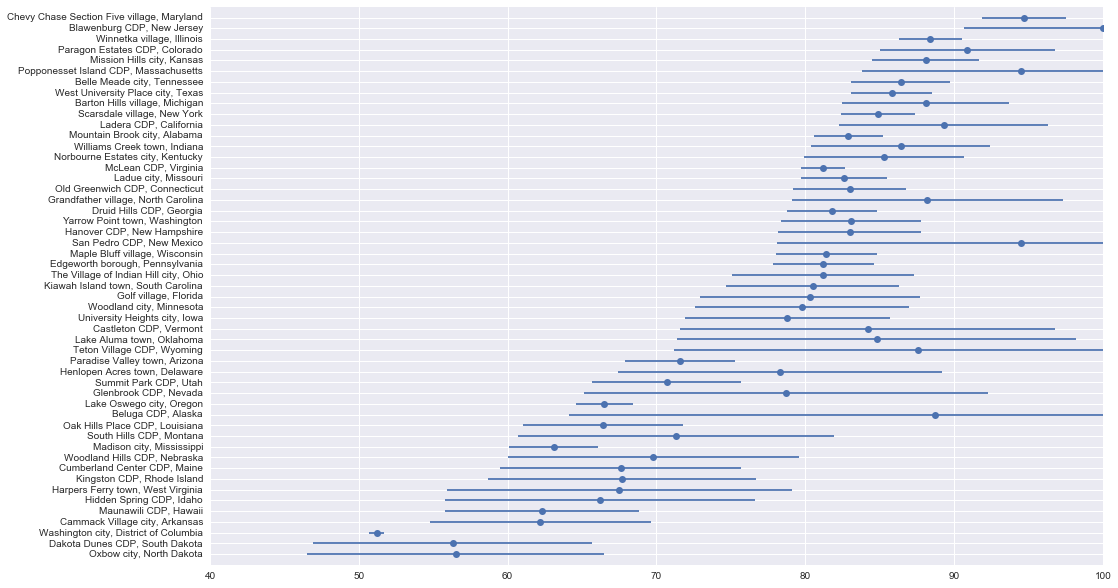

plt.yticks(np.arange(len(highest)), highest.index)df.pipe(highest_attainment).pipe(plot_highest)In the plot above we have the point estimate (the dot) and its confidence interval, ordered by the 90% least plausible value (i.e., the lower end of the CI). Compared to the original results, about half of the top places have changed.

How do things look now?

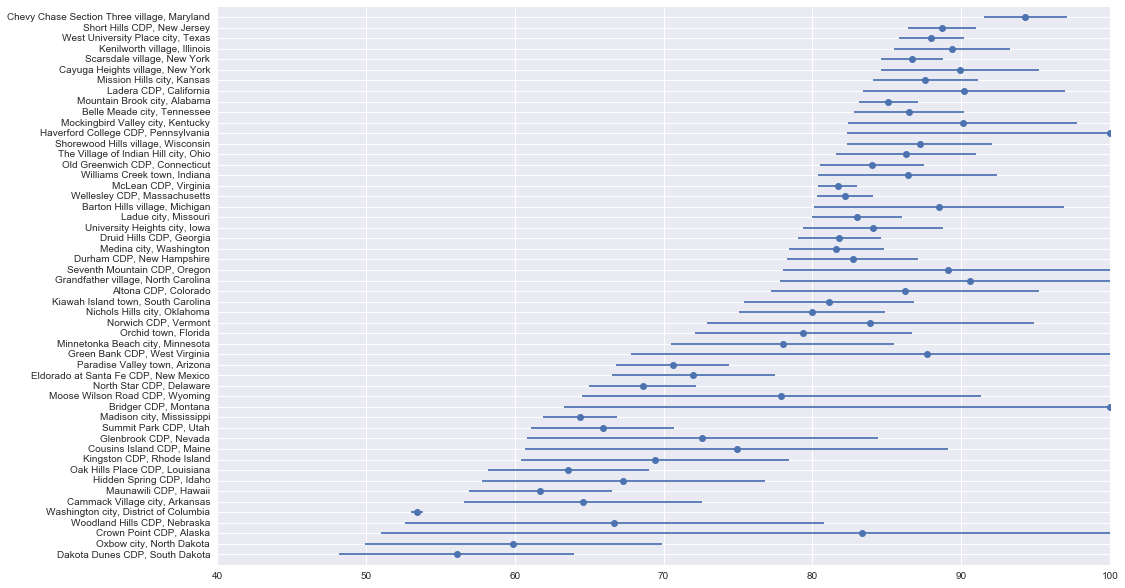

The original data is a few years old. Let's see how things look with 2010-2014 data.

df_14 = read_data(2014)df_14.pipe(add_lower_bounds).pipe(highest_attainment).pipe(plot_highest)Improvements

I don't think places are a good unit of analysis for this kind of work. Many are subsets of larger cities, which can be misleading.

An alternative would be to use metropolitian/micropolitan statistical areas and see how things change. The issue there is than smaller geographies are underrepresented (or not represented at all).

I'd like to try a hybrid approach: Use MSA where possible, otherwise use place. That allows us to acknowledge agglomeration effects, where applicable. I would need to find a mapping of places to MSAs. If that doesn't exist (quick Google search suggest it doesn't), I'd have to create one using Census TIGER shapefiles, which is easy to do with something like PostGIS or QGIS.

Acknowledgements

Huge thanks goes to Andy Kiersz, the author of the original article, for the inspiration.

Much of the discussion on uncertainty comes from Cameron Davidson-Pilon's book Bayesian Methods for Hackers. Can't recommend it enough.