This repository uses terraform and nomad to set up a cluster orchestration system. It provides an extended example based on the main nomad terraform module.

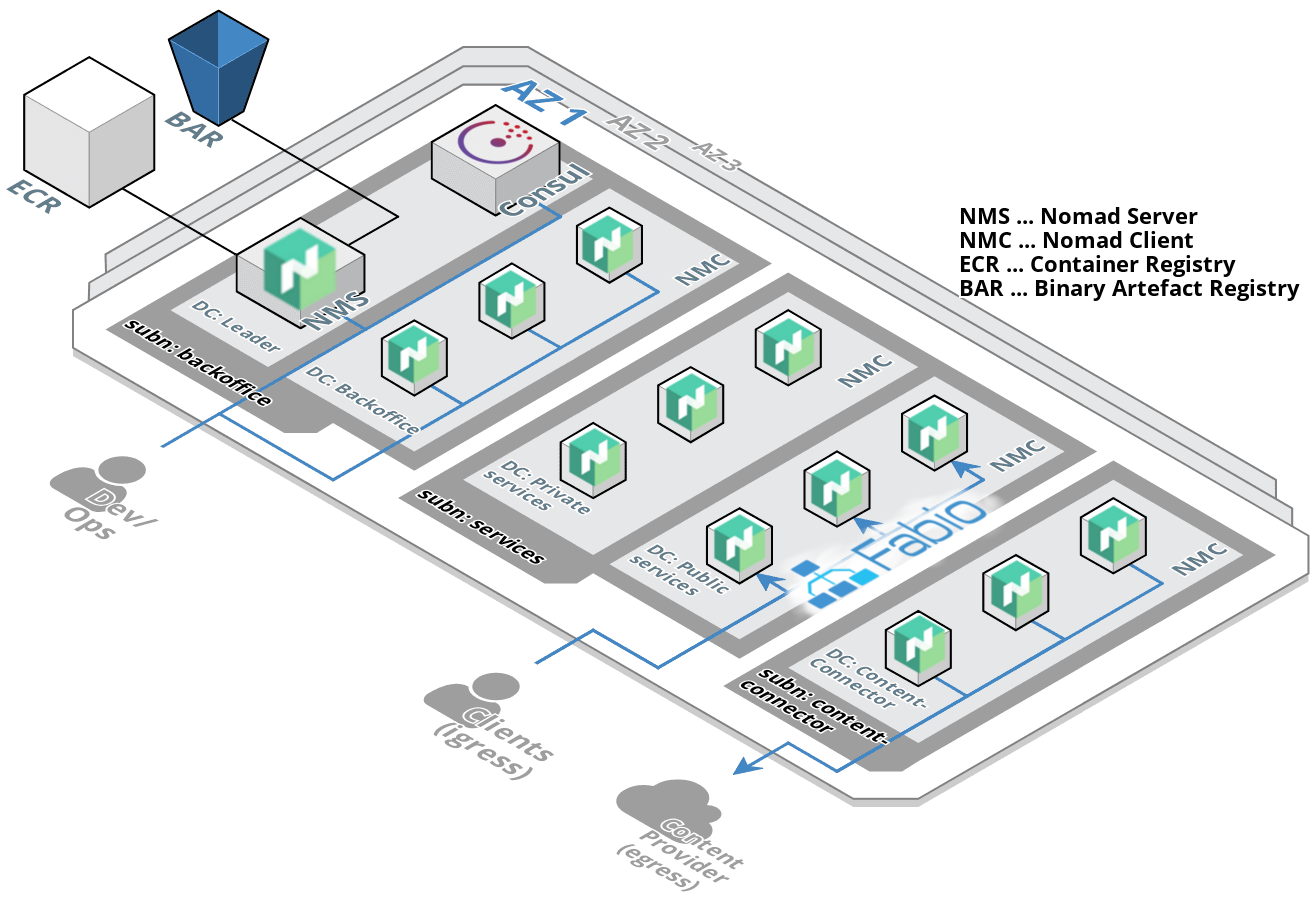

The COS (Cluster Orchestration System) consists of three core components.

- A cluster of nomad servers (NMS, the leaders).

- Several nomad clients (NMC).

- A cluster of consul servers used as a service registry. Together with the consul agents on each of the instances, consul serves as the service discovery system.

The nomad instances are organized in so-called data-centers. A data-center is a group of nomad instances and can be specified as the destination of a nomad job deployment.

The COS organizes its nodes in five different data-centers.

- DC leader: Contains the nomad servers (NMS).

- DC backoffice: Contains those nomad clients (NMC) that provide the basic functionality needed to run services. For example, the prometheus servers and Grafana run there.

- DC public-services: Contains public facing services. These are services the clients directly get in touch with. They process ingress traffic. Thus an ingress load-balancer like fabio runs on those nodes.

- DC private-services: Contains services which are used internally. Those services do the real work, but need no access from or to the internet.

- DC content-connector: Contains services which obtain or scrape data from external sources. They usually load data from content-providers.
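To illustrate how a job selects one of these data-centers, the following sketch writes a minimal nomad job file. It assumes that the data-center is registered under the name `public-services`; the job name, group name, task name and the `nginx:1.25` image are placeholders chosen for illustration and are not part of this repository.

```shell
# Write a minimal job file that targets a single data-center.
# Assumption: the data-center is registered under the name "public-services".
cat > example.nomad <<'EOF'
job "example" {
  # Only nomad clients in this data-center are eligible to run the job.
  datacenters = ["public-services"]

  group "web" {
    task "server" {
      driver = "docker"

      config {
        # Placeholder image from Docker Hub.
        image = "nginx:1.25"
      }
    }
  }
}
EOF
```

The resulting file can then be checked with `nomad plan example.nomad` and submitted with `nomad run example.nomad`.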

The data-centers of the COS live in three different subnets.

- Backoffice: This is the most important subnet, since it contains the most critical instances, like the nomad servers. Thus it is restricted the most and has no access to the internet (neither ingress nor egress).

- Services: This subnet contains the services that need no egress access to the internet. Ingress access is only granted for some of them over an ALB, but not directly.

- Content-Connector: This subnet contains services that need egress access to the internet in order to obtain data from content-providers.

This Cluster Orchestration System can pull docker images from public docker registries like Docker Hub and from AWS ECR.

Regarding AWS ECR, it is only possible to pull from the registry of the AWS account and region where this COS is deployed. Thus you have to create an ECR repository in the same region and account and push your docker images there.
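Pushing an image to such a registry could look like the sketch below. The account ID, region, repository name and tag are placeholders (assumptions), and the sketch presumes a recent AWS CLI that supports `aws ecr get-login-password` as well as a locally built image named `my-service:1.0.0`.

```shell
# Placeholders (assumptions): replace with the account and region of your COS.
AWS_ACCOUNT_ID="123456789012"
AWS_REGION="eu-central-1"
REPO_NAME="my-service"
IMAGE_TAG="1.0.0"

# Registry host of the account and region, and the full image reference.
ECR_REGISTRY="${AWS_ACCOUNT_ID}.dkr.ecr.${AWS_REGION}.amazonaws.com"
ECR_IMAGE="${ECR_REGISTRY}/${REPO_NAME}:${IMAGE_TAG}"

push_to_ecr() {
  # Create the repository (returns an error if it already exists).
  aws ecr create-repository --repository-name "$REPO_NAME" --region "$AWS_REGION"

  # Log the local docker client in to the registry.
  aws ecr get-login-password --region "$AWS_REGION" \
    | docker login --username AWS --password-stdin "$ECR_REGISTRY"

  # Tag the locally built image for ECR and push it.
  docker tag "${REPO_NAME}:${IMAGE_TAG}" "$ECR_IMAGE"
  docker push "$ECR_IMAGE"
}
```

Calling `push_to_ecr` performs the push; the value of `$ECR_IMAGE` is what a nomad job would then reference as its docker image.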

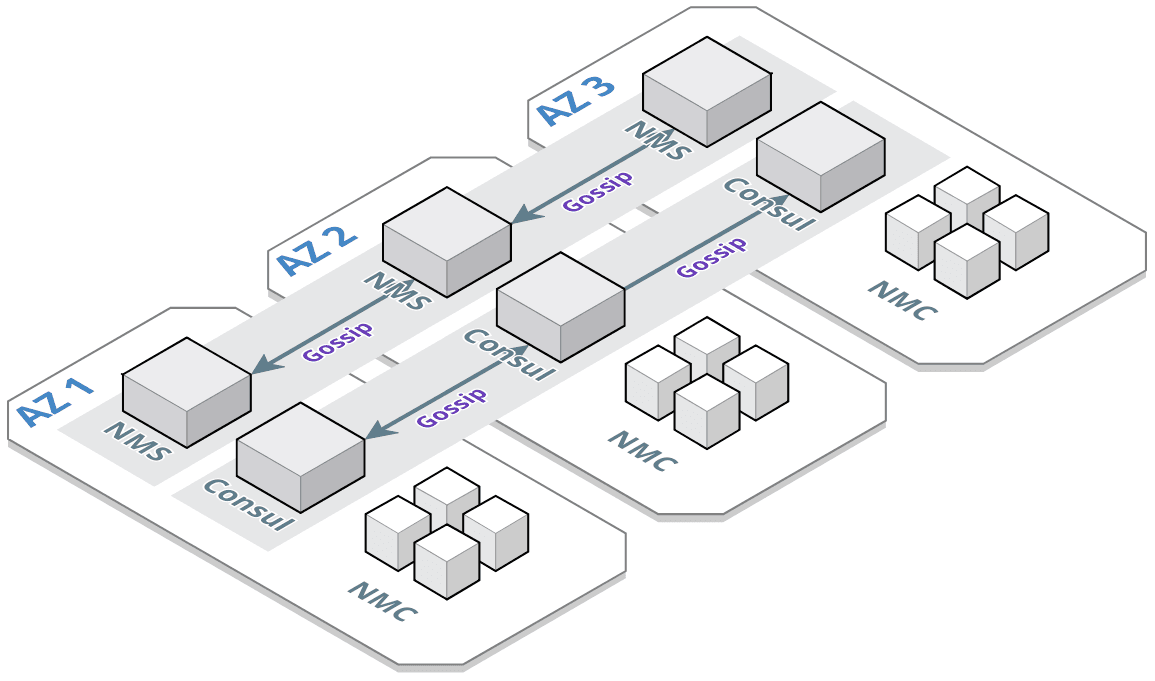

The consul-servers as well as the nomad-servers are built in a high-availability setup. At least three consul- and nomad-servers are deployed in different availability-zones. The nomad-clients are deployed in three different AZs as well.

Provides detailed documentation for this module.

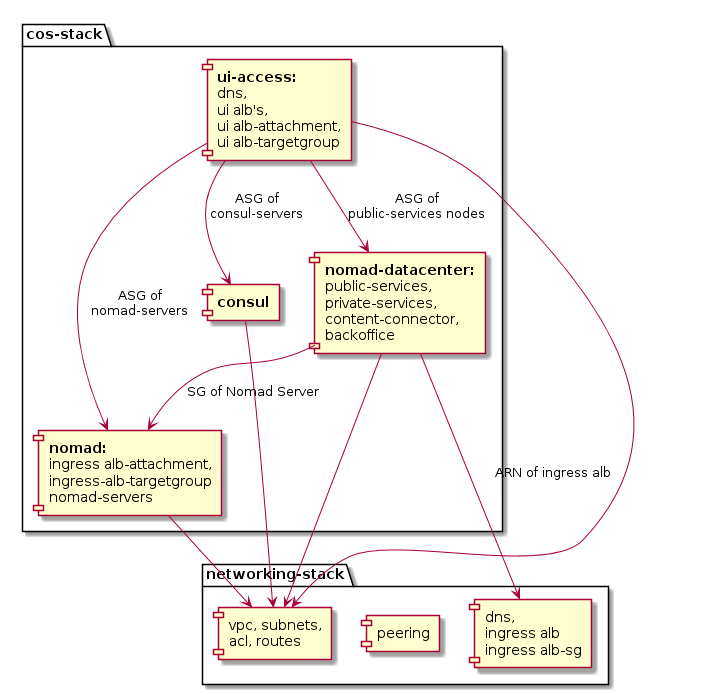

Provides an example instantiation of this module. The root-example builds up a fully working nomad-cluster including the underlying networking, the nomad servers and clients, and a consul cluster for service discovery.

Terraform modules for separate aspects of the cluster orchestration system.

- nomad: Module that creates a cluster of nomad masters.

- nomad-datacenter: Module that creates a cluster of nomad clients for a specific data-center.

- consul: Module building up a consul cluster.

- ui-access: Module building up ALBs to grant access to the nomad-, consul- and fabio-ui.

- sgrules: Module connecting security groups of instances appropriately to grant the minimal needed access.

- ami: Module for creating an AMI having nomad, consul and docker installed (based on Amazon Linux AMI 2017.09.1).

- ami2: Module for creating an AMI having nomad, consul and docker installed (based on Amazon Linux 2 LTS Candidate AMI 2017.12.0).

- networking: This module is only used to support the examples. It is not part of the main cos module.

The picture shows the dependencies within the modules of the cos-stack and the dependencies to the networking-stack.

`nomad monitor -log-level error|warn|info|debug|trace -node-id <node_id> | -server-id <server_id>` (supported since nomad 0.10.2)

If you have deployed the cluster with https endpoints for the ui-albs and have created a self-signed certificate, you might get errors from the nomad CLI complaining about an invalid certificate (x509: certificate is..). To fix this, you have to integrate the custom root-CA you used for signing your certificate appropriately into your system.

To do so, store the PEM encoded CA cert-file locally and tell nomad where to find it.

There are two options:

- `-ca-cert=<path>` flag or `NOMAD_CACERT` environment variable

- `-ca-path=<path>` flag or `NOMAD_CAPATH` environment variable
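As a sketch of the environment-variable option: the certificate path and the address below are hypothetical placeholders, so adjust them to wherever you stored the CA file and to the https endpoint of your nomad ui-alb.

```shell
# Assumption: the PEM encoded root-CA was saved to this hypothetical path.
export NOMAD_CACERT="$HOME/.nomad/root-ca.pem"

# Assumption: hypothetical https address of the nomad ui-alb.
export NOMAD_ADDR="https://nomad.example.com"
```

With both variables exported, calls such as `nomad plan jobfile.nomad` verify the server certificate against that CA. The per-call alternative is to pass the flag directly, for example `nomad plan -ca-cert="$HOME/.nomad/root-ca.pem" jobfile.nomad`.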

To overcome certificate verification issues you can also (not recommended) temporarily skip the certificate verification when using the nomad CLI.

- `-tls-skip-verify`: As additional parameter in your CLI calls, e.g. `nomad plan -tls-skip-verify jobfile.nomad`

- `NOMAD_SKIP_VERIFY`: Just set the environment variable to 1 with `export NOMAD_SKIP_VERIFY=1` and then call your CLI commands as usual, e.g. `nomad plan jobfile.nomad`