- M. Beekenkamp, A. Bhagavathula, P. LaDuca.

Separable Physics-Informed Neural Networks (SPINNs), originally proposed in the paper “Separable PINN: Mitigating the Curse of Dimensionality in Physics-Informed Neural Networks” by Cho et al., are an architectural overhaul of conventional PINNs for approximating solutions to partial differential equations (PDEs). The architecture operates on a per-axis basis, which allows the authors to leverage forward-mode automatic differentiation (AD). Compared to conventional PINNs, which use point-wise processing, SPINNs offer a notable reduction in training time whilst maintaining accuracy.
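To make the per-axis idea concrete, here is a minimal JAX sketch of a separable forward pass and a forward-mode derivative along one axis. It is an illustration only and is not taken from this repository or from the authors' code: the helper names (`init_mlp`, `mlp`, `spinn_forward`, `spinn_dx`), the layer widths, the rank, and the grid sizes are all assumptions chosen for the example.

```python
import jax
import jax.numpy as jnp

def init_mlp(key, sizes):
    """Initialise a small MLP as a list of (W, b) pairs (hypothetical helper)."""
    params = []
    for d_in, d_out in zip(sizes[:-1], sizes[1:]):
        key, sub = jax.random.split(key)
        W = jax.random.normal(sub, (d_in, d_out)) / jnp.sqrt(d_in)
        params.append((W, jnp.zeros(d_out)))
    return params

def mlp(params, x):
    """Map 1-D coordinates of shape (N, 1) to features of shape (N, r)."""
    for W, b in params[:-1]:
        x = jnp.tanh(x @ W + b)
    W, b = params[-1]
    return x @ W + b

def spinn_forward(params_x, params_y, x, y):
    """Evaluate u on the full x-by-y grid from per-axis features."""
    fx = mlp(params_x, x)                    # (Nx, r): one pass per x coordinate
    fy = mlp(params_y, y)                    # (Ny, r): one pass per y coordinate
    return jnp.einsum("ir,jr->ij", fx, fy)   # (Nx, Ny): sum over the rank index

def spinn_dx(params_x, params_y, x, y):
    """du/dx on the grid via a single forward-mode pass over the x network."""
    # Each output row depends only on its own input row, so a tangent of ones
    # yields the derivative of every row with respect to its own coordinate.
    _, dfx = jax.jvp(lambda x_: mlp(params_x, x_), (x,), (jnp.ones_like(x),))
    return jnp.einsum("ir,jr->ij", dfx, mlp(params_y, y))

# Arbitrary sizes chosen for illustration.
key_x, key_y = jax.random.split(jax.random.PRNGKey(0))
params_x = init_mlp(key_x, [1, 32, 16])
params_y = init_mlp(key_y, [1, 32, 16])
x = jnp.linspace(0.0, 1.0, 64).reshape(-1, 1)
y = jnp.linspace(0.0, 1.0, 48).reshape(-1, 1)

u = spinn_forward(params_x, params_y, x, y)   # 64 * 48 grid values
u_x = spinn_dx(params_x, params_y, x, y)      # same shape as u
```

In this sketch the 64 × 48 grid of outputs comes from only 64 + 48 network evaluations, whereas a point-wise network would evaluate every grid point separately.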

Although the code is referenced in the paper, Cho et al. did not release it until after we completed this project. This repository is our implementation of the architecture proposed by the authors. Using a two-dimensional heat equation as the test problem, we demonstrate the improvements described in the paper.
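For orientation, a two-dimensional heat equation is commonly written as below, alongside the separable form that a SPINN uses to approximate its solution as a sum of products of per-axis functions. The diffusivity $\alpha$, the rank $r$, and the choice of two spatial axes plus time are assumptions made here for illustration; the exact equation, domain, and boundary conditions used in this repository are not stated in this section.

```math
\frac{\partial u}{\partial t} = \alpha \left( \frac{\partial^2 u}{\partial x^2} + \frac{\partial^2 u}{\partial y^2} \right),
\qquad
\hat{u}(x, y, t) = \sum_{j=1}^{r} f_j^{(x)}(x)\, f_j^{(y)}(y)\, f_j^{(t)}(t)
```

Each factor $f_j^{(\cdot)}$ is the $j$-th output of a small network that takes a single coordinate as input, so a derivative along one axis only involves the network for that axis.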

The performance of the Simple PINN, SA-PINN, and SPINN models was evaluated using

| Algorithm | Total Loss | | Time [ms/iter] |
|---|---|---|---|
| Simple PINN | | | |
| SA-PINN | | | |
| SPINN | | | |

The table above summarises the results obtained for the three different network architectures. As shown in the table, the SPINN model achieved the best performance across all the metrics used for the benchmarking process. The SPINN model achieved a total loss of

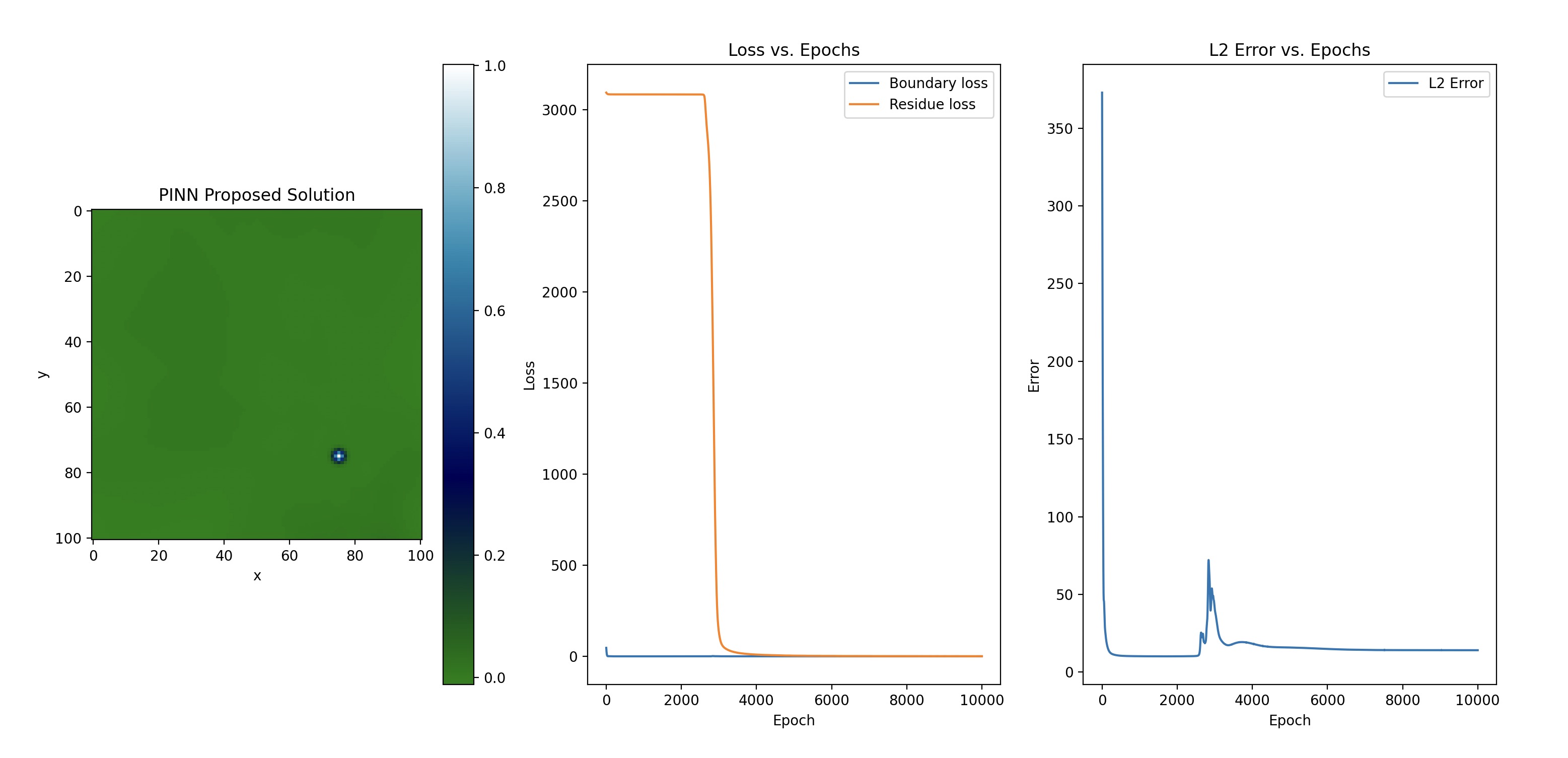

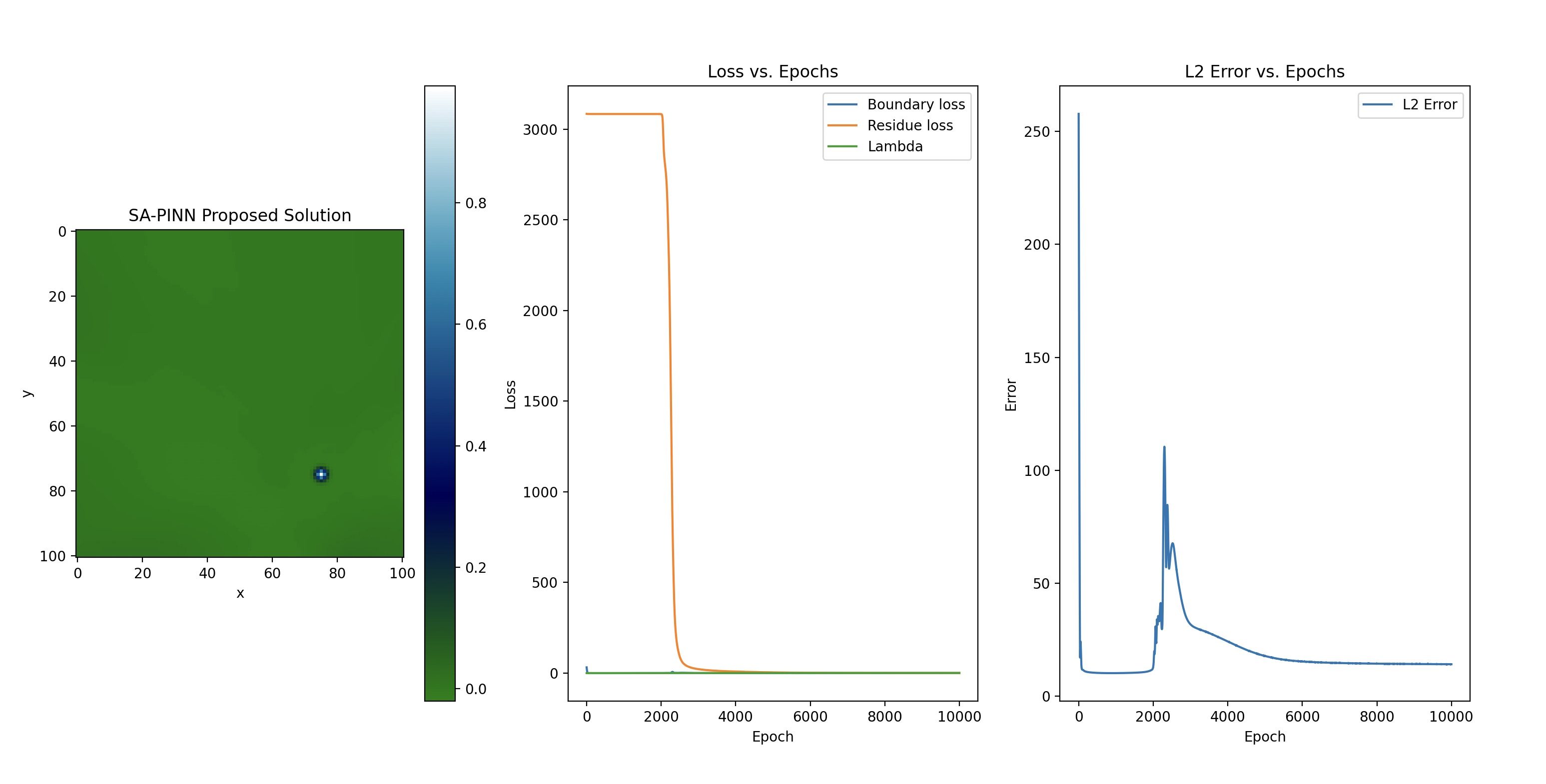

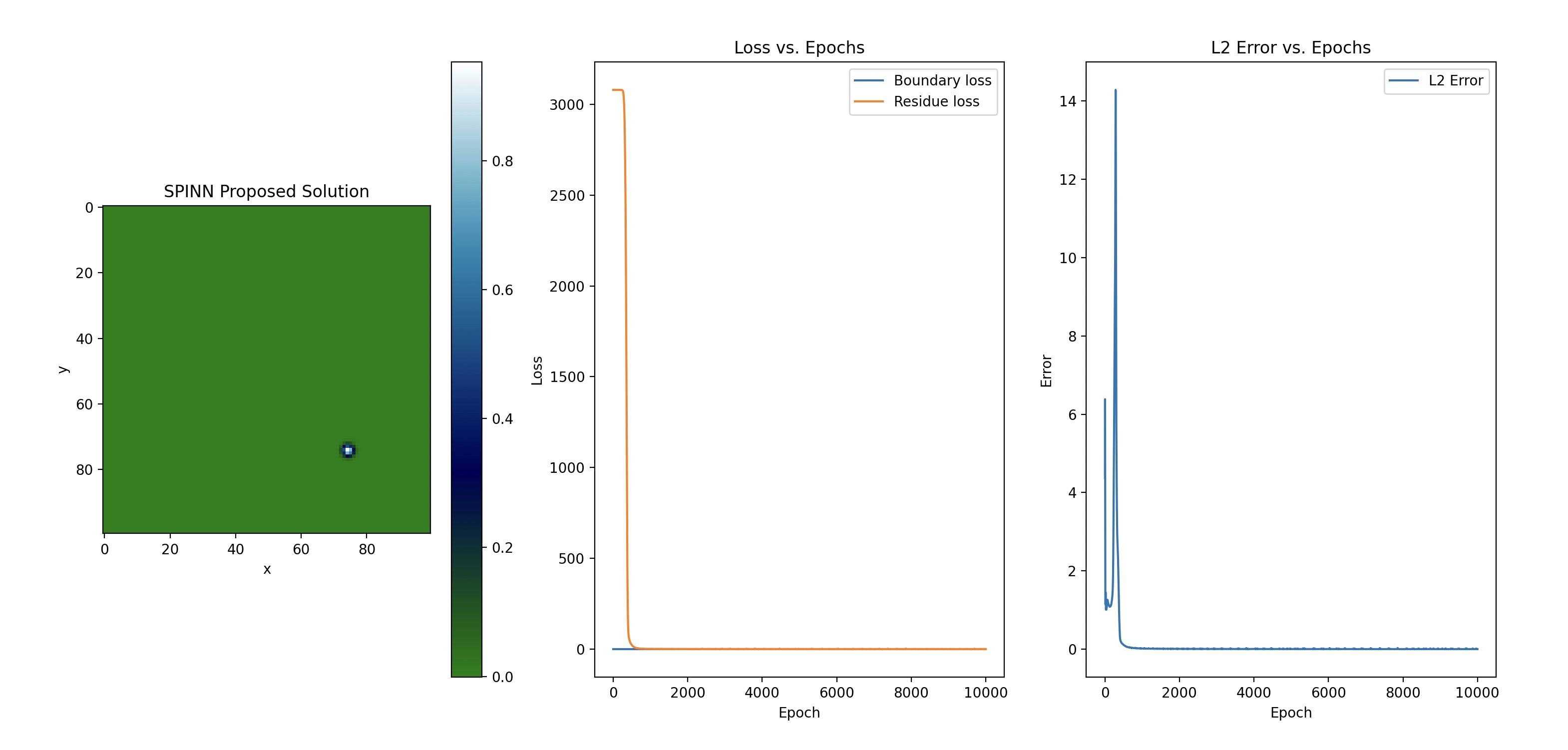

The images show the error plots for the Simple PINN, SA-PINN, and SPINN models, respectively. These plots show that the SPINN model achieved the lowest error throughout the training process. Additionally, the SPINN model converged significantly faster than the Simple PINN and SA-PINN models, as shown in the time-per-iteration column in Table 1. Moreover, where the Simple PINN and SA-PINN struggled with over-fitting, the SPINN model kept improving relative to the reference solution.

Cho, Junwoo, et al. "Separable PINN: Mitigating the Curse of Dimensionality in Physics-Informed Neural Networks." arXiv preprint arXiv:2211.08761 (2022).