Conferences and conventions are hotspots for making connections. Professionals in attendance often share the same interests and can make valuable business and personal connections with one another. At the same time, these events draw large crowds, and amid all the excitement and energy it's often hard to make these connections. To help attendees make connections, we are building the infrastructure for a service that can inform attendees if they have attended the same booths and presentations at an event.

You work for a company that is building an app that uses location data from mobile devices. Your company has built a proof-of-concept (POC) application named UdaTracker to ingest location data. The POC was built with the core functionality of ingesting location data and identifying individuals who have been in close geographic proximity.
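The core POC idea, flagging people whose location records fall close together in both space and time, can be sketched in plain Python. This is an illustrative in-memory version only: the `Location` fields, the 5-meter radius and the 10-minute window are assumptions, not values taken from UdaTracker.

```python
from dataclasses import dataclass
from datetime import datetime, timedelta
from itertools import combinations
from math import asin, cos, radians, sin, sqrt

EARTH_RADIUS_M = 6_371_000  # mean Earth radius in meters


@dataclass(frozen=True)
class Location:
    # Hypothetical record shape: one location reading for one person.
    person_id: int
    latitude: float
    longitude: float
    creation_time: datetime


def haversine_m(a: Location, b: Location) -> float:
    """Great-circle distance between two locations, in meters."""
    lat1, lon1, lat2, lon2 = map(radians, (a.latitude, a.longitude, b.latitude, b.longitude))
    h = sin((lat2 - lat1) / 2) ** 2 + cos(lat1) * cos(lat2) * sin((lon2 - lon1) / 2) ** 2
    return 2 * EARTH_RADIUS_M * asin(sqrt(h))


def find_connections(locations, meters=5.0, window=timedelta(minutes=10)):
    """Return sorted (person_a, person_b) pairs seen within `meters` and `window` of each other."""
    pairs = set()
    for a, b in combinations(locations, 2):
        if a.person_id == b.person_id:
            continue  # a person is not a connection of themselves
        if abs(a.creation_time - b.creation_time) > window:
            continue  # too far apart in time
        if haversine_m(a, b) <= meters:
            pairs.add(tuple(sorted((a.person_id, b.person_id))))
    return sorted(pairs)


t = datetime(2020, 1, 1, 10, 0)
locs = [
    Location(1, 37.5534, -122.2906, t),
    Location(2, 37.55341, -122.29061, t + timedelta(minutes=2)),  # about 1.4 m away
    Location(3, 37.5534, -122.2906, t + timedelta(hours=2)),  # same spot, but hours later
]
print(find_connections(locs))  # [(1, 2)]
```

Comparing every pair is quadratic in the number of records, which is exactly the kind of cost that becomes a problem at the data volumes the MVP must handle; in the project itself, geographic queries are the job of PostGIS.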

Management loved the POC, and now that there is buy-in, we want to enhance this application. You have been tasked with turning the POC into a minimum viable product (MVP) that can handle the large volume of location data that will be ingested.

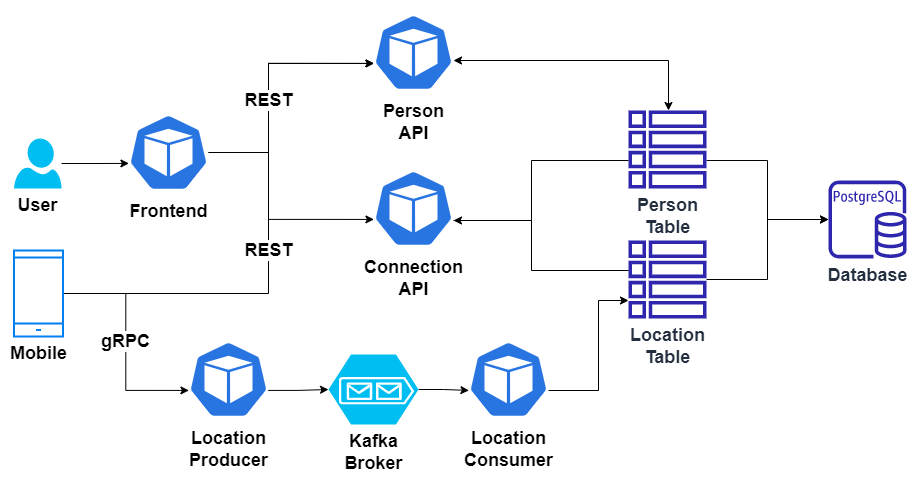

To do so, you will refactor this application into a microservice architecture using the message passing techniques you have learned in this course. It’s easy to get lost in the countless optimizations and changes that could be made: your priority should be to approach the task as an architect and refactor the application into microservices. File organization and code linting are important, but they don’t affect the core functionality and can be tagged as TODOs for now!

The project uses the following technologies:

- Flask - API web server

- SQLAlchemy - Database ORM

- PostgreSQL - Relational database

- PostGIS - Spatial plug-in for PostgreSQL enabling geographic queries

- Vagrant - Tool for managing virtual deployed environments

- VirtualBox - Hypervisor allowing you to run multiple operating systems

- K3s - Lightweight distribution of K8s to easily develop against a local cluster

The project is set up so that you can get it up and running on Kubernetes.

Install the following tools to set up your environment:

- Install Docker

- Set up a DockerHub account

- Set up kubectl

- Install VirtualBox with at least version 6.0

- Install Vagrant with at least version 2.0

To run the services locally for development, start PostGIS, ZooKeeper and Kafka with Docker Compose:

docker-compose up -d

Install the migrate CLI from https://github.com/golang-migrate/migrate/tree/master/cmd/migrate, then run the database migrations:

export DB_USERNAME="postgres" && export DB_PASSWORD="postgres" && export DB_HOST="localhost" && export DB_PORT="5432" && export DB_NAME="postgres"

export DATABASE="postgres://$DB_USERNAME:$DB_PASSWORD@$DB_HOST:$DB_PORT/$DB_NAME?sslmode=disable"

migrate -path="./db/" -database "$DATABASE" up
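The DATABASE URL above follows the postgres://user:password@host:port/dbname form, and it silently breaks if it references a variable that was never exported. A small Python helper that assembles the same string from the DB_* variables makes that failure loud; the function name and the choice to percent-encode the credentials are illustrative, not part of the project.

```python
import os
from urllib.parse import quote

REQUIRED = ("DB_USERNAME", "DB_PASSWORD", "DB_HOST", "DB_PORT", "DB_NAME")


def database_url(env=os.environ) -> str:
    """Build the PostgreSQL connection URL used by migrate from the DB_* variables."""
    missing = [name for name in REQUIRED if not env.get(name)]
    if missing:
        raise RuntimeError(f"missing environment variables: {', '.join(missing)}")
    # Percent-encode credentials so characters such as '@' or ':' cannot break the URL.
    user = quote(env["DB_USERNAME"], safe="")
    password = quote(env["DB_PASSWORD"], safe="")
    return (
        f"postgres://{user}:{password}@{env['DB_HOST']}:{env['DB_PORT']}"
        f"/{env['DB_NAME']}?sslmode=disable"
    )
```

With the variables exported as above, database_url() returns postgres://postgres:postgres@localhost:5432/postgres?sslmode=disable.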

Run each of the following in a separate terminal:

- Run Kafka Location Consumer

export DB_USERNAME="postgres" && export DB_PASSWORD="postgres" && export DB_HOST="localhost" && export DB_PORT="5432" && export DB_NAME="postgres"

KAFKA_CONSUMER="127.0.0.1:9092" python ./modules/location-consumer/app/udaconnect/consumer.py

- Run Kafka Location Producer

KAFKA_PRODUCER="127.0.0.1:9092" python ./modules/location-producer/app/udaconnect/producer.py

- Run Connection API on port 30040

export DB_USERNAME="postgres" && export DB_PASSWORD="postgres" && export DB_HOST="localhost" && export DB_PORT="5432" && export DB_NAME="postgres"

cd ./modules/connection && flask run --host 0.0.0.0 --port 30040

- Run Person API on port 30010

export DB_USERNAME="postgres" && export DB_PASSWORD="postgres" && export DB_HOST="localhost" && export DB_PORT="5432" && export DB_NAME="postgres"

cd ./modules/person && flask run --host 0.0.0.0 --port 30010

- Run Frontend on port 3000; the UI is then available at http://localhost:3000

cd modules/frontend && npm i && npm start
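The location producer and consumer above share no code or database connection; they only exchange messages through Kafka, which is the message-passing decoupling this refactor is about. The sketch below shows that contract with an in-memory queue.Queue standing in for the Kafka topic so it runs without a broker. The JSON field names are hypothetical and may not match the project's actual message schema.

```python
import json
import queue
from datetime import datetime, timezone

# Stand-in for a Kafka topic: the only thing producer and consumer share.
topic: "queue.Queue[bytes]" = queue.Queue()


def produce_location(person_id: int, latitude: float, longitude: float) -> None:
    """Serialize one location reading and publish it (hypothetical schema)."""
    message = {
        "person_id": person_id,
        "latitude": latitude,
        "longitude": longitude,
        "creation_time": datetime.now(timezone.utc).isoformat(),
    }
    topic.put(json.dumps(message).encode("utf-8"))


def consume_locations(store: list) -> int:
    """Drain the topic, validate each message, append valid ones to `store`; return how many were stored."""
    stored = 0
    while True:
        try:
            raw = topic.get_nowait()
        except queue.Empty:
            return stored
        data = json.loads(raw.decode("utf-8"))
        if not -90 <= data["latitude"] <= 90 or not -180 <= data["longitude"] <= 180:
            continue  # drop malformed coordinates instead of failing the whole batch
        store.append(data)  # stand-in for the database insert
        stored += 1


db = []
produce_location(1, 37.5534, -122.2906)
produce_location(2, 123.0, 0.0)  # invalid latitude, dropped by the consumer
handled = consume_locations(db)
print(handled, len(db))  # 1 1
```

In the real services, the put and get calls would presumably be replaced by a Kafka client's send and poll calls, pointed at the broker address passed in through KAFKA_PRODUCER and KAFKA_CONSUMER.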

To run the application on Kubernetes, you will need a K8s cluster running locally and kubectl to interface with it. We will be using Vagrant with VirtualBox to run K3s.

In this project's root, run vagrant up.

$ vagrant up

The command will take a while and will leverage VirtualBox to load an openSUSE OS and automatically install K3s. When we are taking a break from development, we can run vagrant suspend to conserve some of our system's resources and vagrant resume when we want to bring them back up. Some useful vagrant commands can be found in this cheatsheet.

After vagrant up is done, you will SSH into the Vagrant environment and retrieve the Kubernetes config file used by kubectl. We want to copy the contents of this file into our local environment so that kubectl knows how to communicate with the K3s cluster.

$ vagrant ssh

You will now be connected inside the virtual OS. Run sudo cat /etc/rancher/k3s/k3s.yaml to print out the contents of the file. You should see output similar to the one shown below. Note that the output below is just for reference: every configuration is unique and you should NOT copy it.

Copy the output of your own command to your clipboard; we will be pasting it soon!

$ sudo cat /etc/rancher/k3s/k3s.yaml

```yaml
apiVersion: v1
clusters:
- cluster:
    certificate-authority-data: LS0tLS1CRUdJTiBDRVJUSUZJQ0FURS0tLS0tCk1JSUJWekNCL3FBREFnRUNBZ0VBTUFvR0NDcUdTTTQ5QkFNQ01DTXhJVEFmQmdOVkJBTU1HR3N6Y3kxelpYSjIKWlhJdFkyRkFNVFU1T1RrNE9EYzFNekFlRncweU1EQTVNVE13T1RFNU1UTmFGdzB6TURBNU1URXdPVEU1TVROYQpNQ014SVRBZkJnTlZCQU1NR0dzemN5MXpaWEoyWlhJdFkyRkFNVFU1T1RrNE9EYzFNekJaTUJNR0J5cUdTTTQ5CkFnRUdDQ3FHU000OUF3RUhBMElBQk9rc2IvV1FEVVVXczJacUlJWlF4alN2MHFseE9rZXdvRWdBMGtSN2gzZHEKUzFhRjN3L3pnZ0FNNEZNOU1jbFBSMW1sNXZINUVsZUFOV0VTQWRZUnhJeWpJekFoTUE0R0ExVWREd0VCL3dRRQpBd0lDcERBUEJnTlZIUk1CQWY4RUJUQURBUUgvTUFvR0NDcUdTTTQ5QkFNQ0EwZ0FNRVVDSVFERjczbWZ4YXBwCmZNS2RnMTF1dCswd3BXcWQvMk5pWE9HL0RvZUo0SnpOYlFJZ1JPcnlvRXMrMnFKUkZ5WC8xQmIydnoyZXpwOHkKZ1dKMkxNYUxrMGJzNXcwPQotLS0tLUVORCBDRVJUSUZJQ0FURS0tLS0tCg==
    server: https://127.0.0.1:6443
  name: default
contexts:
- context:
    cluster: default
    user: default
  name: default
current-context: default
kind: Config
preferences: {}
users:
- name: default
  user:
    password: 485084ed2cc05d84494d5893160836c9
    username: admin
```

Type exit to leave the virtual OS and you will find yourself back in your computer's session. Create the file ~/.kube/config (or replace it if it already exists) and paste the contents of the k3s.yaml output into it.

Afterwards, you can test that kubectl works by running a command like kubectl describe services. It should not return any errors.

Before building, replace the DockerHub username (maxritter in the original setup) with your own. Then run sh scripts/docker_build.sh to build all the Docker images and push them to DockerHub.

Then install Kafka and ZooKeeper on the cluster using Helm:

helm repo add bitnami https://charts.bitnami.com/bitnami

helm install kafka-local bitnami/kafka --set persistence.enabled=true,zookeeper.persistence.enabled=true

Afterwards, install the K8s Layer 2 Components. In the last command, replace <POSTGRES_POD_NAME> with the name of the PostgreSQL pod, which you can find with kubectl get pods:

kubectl apply -f deployment/kafka-configmap.yaml

kubectl apply -f deployment/db-configmap.yaml

kubectl apply -f deployment/db-secret.yaml

kubectl apply -f deployment/postgres.yaml

sh scripts/run_db_command.sh <POSTGRES_POD_NAME>
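Rather than copying the PostgreSQL pod name by hand, you can read it from the JSON that kubectl get pods -o json prints. The helper below is a sketch that assumes the pod name starts with postgres (this depends on how deployment/postgres.yaml names its resources) and returns the first pod in the Running phase.

```python
import json
import subprocess


def find_pod(pods_json: str, prefix: str = "postgres") -> str:
    """Return the name of the first Running pod whose name starts with `prefix`."""
    for item in json.loads(pods_json).get("items", []):
        name = item["metadata"]["name"]
        if name.startswith(prefix) and item.get("status", {}).get("phase") == "Running":
            return name
    raise LookupError(f"no running pod with prefix {prefix!r}")


def postgres_pod_name() -> str:
    """Ask the cluster via kubectl and return the PostgreSQL pod name."""
    output = subprocess.run(
        ["kubectl", "get", "pods", "-o", "json"],
        check=True, capture_output=True, text=True,
    ).stdout
    return find_pod(output)
```

The result can then be passed to the script, for example with subprocess.run(["sh", "scripts/run_db_command.sh", postgres_pod_name()], check=True).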

Finally, install K8s Layer 3 Components:

kubectl apply -f deployment/udaconnect-app.yaml

kubectl apply -f deployment/udaconnect-connection.yaml

kubectl apply -f deployment/udaconnect-location-consumer.yaml

kubectl apply -f deployment/udaconnect-location-producer.yaml

kubectl apply -f deployment/udaconnect-person.yaml

Once the project is up and running, kubectl get pods should show running pods for postgres, for Kafka and ZooKeeper, and for each UdaConnect component applied above, and kubectl get services should list the services that expose them.

These pages should also load on your web browser:

- http://localhost:30000/ - Frontend ReactJS Application

- http://localhost:30010/ - OpenAPI Documentation for Person API

- http://localhost:30010/api/ - Base path for Person API

- http://localhost:30040/ - OpenAPI Documentation for Connection API

- http://localhost:30040/api/ - Base path for Connection API
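A quick way to confirm that these pages respond is a small smoke test using only the Python standard library. The URL list mirrors the one above; treating any HTTP status below 400 as healthy is an assumption about what "should load" means here.

```python
import urllib.error
import urllib.request

ENDPOINTS = [
    "http://localhost:30000/",
    "http://localhost:30010/",
    "http://localhost:30010/api/",
    "http://localhost:30040/",
    "http://localhost:30040/api/",
]


def check_endpoints(urls, timeout: float = 3.0) -> dict:
    """Map each URL to its HTTP status code, or None if it could not be reached."""
    results = {}
    for url in urls:
        try:
            with urllib.request.urlopen(url, timeout=timeout) as response:
                results[url] = response.status
        except urllib.error.HTTPError as err:
            results[url] = err.code  # the server answered, but with an error status
        except OSError:
            results[url] = None  # connection refused, timeout, DNS failure, ...
    return results


def report(results: dict) -> bool:
    """Print one line per endpoint and return True only if every status is below 400."""
    all_ok = True
    for url, status in results.items():
        healthy = status is not None and status < 400
        all_ok = all_ok and healthy
        print(f"{'OK  ' if healthy else 'FAIL'} {status} {url}")
    return all_ok
```

Running report(check_endpoints(ENDPOINTS)) after deployment prints one line per endpoint; HTTPError is caught before OSError because it is a subclass of URLError, which is itself an OSError.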

You may notice the odd port numbers being served to localhost. By default, Kubernetes services are only exposed to one another on an internal cluster network. This means that the UdaConnect services can talk to one another, but for us to connect to the cluster as an "outsider", we need a way to expose these services to localhost.

Connections to the Kubernetes services have been set up through a NodePort. (In production we would use a technology like an Ingress Controller to expose our Kubernetes services, but a NodePort will suffice for development.)
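The odd port numbers come from how NodePort works: the service is given a port on every cluster node, by default chosen from the 30000 to 32767 range, and traffic to that port is forwarded to the matching pods. The sketch below builds such a Service as a Python dict and prints it as JSON, which kubectl apply -f also accepts. The service name, the service label used as selector, and container port 5000 (Flask's default development port) are hypothetical placeholders, not values read from the project's deployment files.

```python
import json

NODE_PORT_RANGE = range(30000, 32768)  # Kubernetes default service node port range


def node_port_service(name: str, app_label: str, port: int, target_port: int, node_port: int) -> dict:
    """Build a v1 Service manifest of type NodePort (hypothetical names and labels)."""
    if node_port not in NODE_PORT_RANGE:
        raise ValueError(f"nodePort {node_port} is outside the default range 30000-32767")
    return {
        "apiVersion": "v1",
        "kind": "Service",
        "metadata": {"name": name, "labels": {"service": name}},
        "spec": {
            "type": "NodePort",
            "selector": {"service": app_label},  # pods carrying this label receive the traffic
            "ports": [
                {"port": port, "targetPort": target_port, "nodePort": node_port},
            ],
        },
    }


# Example: expose a Person API container listening on 5000 at node port 30010.
manifest = node_port_service("udaconnect-person-api", "udaconnect-person-api", 5000, 5000, 30010)
print(json.dumps(manifest, indent=2))
```

Piping this output into kubectl apply -f - would create the service; an Ingress Controller would instead route external traffic through a single entry point rather than one node port per service.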