Welcome to our introductory session on Nextflow!

Nextflow is a powerful workflow language designed to streamline complex computational workflows, often used in fields like bioinformatics. The goal of this training workshop is not to transform you overnight into coding experts or bioinformatics scientists. Instead, we aim to highlight the key features and capabilities of Nextflow through a set of very simple, relatable examples that should be digestible by anyone!

By the end of this workshop, you will understand why Nextflow is a leading solution for managing large-scale data analysis and how it empowers users to achieve remarkable scientific breakthroughs with efficiency and flexibility.

More specifically, this session will cover the following key capabilities of Nextflow:

- Scalability

- Parallelism

- Reproducibility

- Resumability

- Reporting

- Flexibility

If you walk away from this workshop able to understand these advantages and communicate them to others, then we will consider the workshop a success!

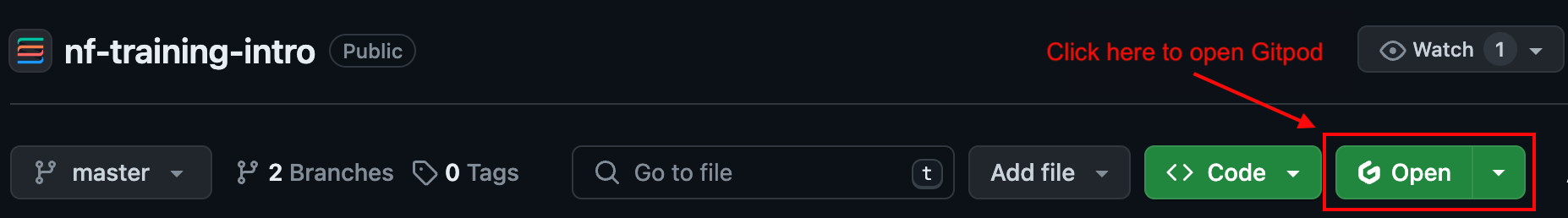

For this workshop we are going to be using a tool called GitPod, which provides us with a fully managed environment to deliver the training. You will need a GitHub account, so if you don't have one, go to GitHub and create one for yourself.

Installing a browser extension will give you easier access to GitPod. Go here and follow the link to install the extension for your preferred browser. Restart your browser if required.

At the top of the nf-training-intro GitHub repository, you should see a green button with a funny-shaped 'G'. Click that button, follow any prompts, wait for the environment to initialize, and you should get a GitPod workspace with everything pre-installed for this training workshop.

Please don't worry if you are unfamiliar with command-line environments; you will just be copying commands to follow along.

The major difference between a command-line environment and conventional interfaces like Windows or macOS is that you have to issue commands to do even the simple things you would normally take for granted in a graphical user interface.

For example, you would issue the following commands:

- Enter a directory or folder called `data/`:

  `cd data`

- List files in a directory/folder:

  `ls`

- Go back up a level to a parent directory:

  `cd ..`

In the different sections of this tutorial, we will explore how to run an existing classification model using OpenAI's Contrastive Language–Image Pre-training (CLIP). It is a versatile tool that can understand and classify images based on natural language descriptions. Again, don't worry: the previous sentence is a mouthful of random words to most of us, but all will become clear as we progress through the tutorial!

We have intentionally picked a use case quite different to the usual scientific workloads, to make the transferability of the concepts more relatable as we progress through the workshop.

You have been given a set of pictures of your colleagues' animals, and you want to be able to classify them, so you can make attractive collages of them to present at your company retreat. Unfortunately you have developed cat/dog/spider-blindness and can't classify the critters with the naked eye. Although we are not quite yet in the days of an adult John Connor, fortunately for you this is 2024, so you can still enlist the help of AI.

To make this workshop palatable for everyone, we have already:

- Dumped a bunch of animal pictures in the `data/` folder.

- Written a simple Python script called `classify.py`, which uses CLIP to classify the bloomin' critters.

Your mission, should you choose to accept it, is to:

- Use CLIP to assign critters to classes based on a list of labels you provide.

- Make a collage of the critters in each class.

- Combine the collages to create a single, glorious critter cornucopia.
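If it helps to see the shape of the task, the three steps above can be sketched in plain Python. This is only an illustration of how data moves from one step to the next, not the workshop's actual code. Everything in it is an assumption made for the example: `classify` is a hypothetical stand-in that guesses a class from the file name instead of calling CLIP, the collage functions return text rather than images, and the file names are made up.

```python
from __future__ import annotations

from collections import defaultdict
from pathlib import Path


def classify(image: Path, labels: list[str]) -> str:
    """Hypothetical stand-in for classify.py: match a label against the file name."""
    name = image.stem.lower()
    for label in labels:
        if label in name:
            return label
    return "unknown"


def group_by_class(images: list[Path], labels: list[str]) -> dict[str, list[Path]]:
    """Step 1: assign every picture to a class."""
    groups: dict[str, list[Path]] = defaultdict(list)
    for image in images:
        groups[classify(image, labels)].append(image)
    return dict(groups)


def make_collage(label: str, images: list[Path]) -> str:
    """Step 2: one collage per class (a text placeholder here, not a real image)."""
    return f"{label}: " + ", ".join(sorted(p.name for p in images))


def combine(collages: list[str]) -> str:
    """Step 3: merge the per-class collages into a single result."""
    return "\n".join(collages)


if __name__ == "__main__":
    # Made-up file names, used only to exercise the three steps.
    pictures = [Path("data/cat_1.jpg"), Path("data/dog_1.jpg"), Path("data/cat_2.jpg")]
    groups = group_by_class(pictures, ["cat", "dog", "spider"])
    collages = [make_collage(label, members) for label, members in sorted(groups.items())]
    print(combine(collages))
```

Note that each picture can be classified independently of the others, and each collage depends only on the pictures in its own class. That kind of independence between steps is what a workflow tool can exploit to run work in parallel.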

This workshop has been split up into 4 sections with decreasing manual intervention to highlight the key strengths of Nextflow. The last section will help to contextualize how we can leverage Nextflow in combination with the Seqera Platform to solve challenges like scalability and

Please complete the following critter classification sections in order: