I'm working on two Information Retrieval courses at the Vienna University of Technology (TU Wien), mainly focusing on the master-level Advanced Information Retrieval course. I try to create engaging, fun, and informative lectures and exercises.

- The Introduction to Information Retrieval course focuses on IR basics and engineering skills of the students.

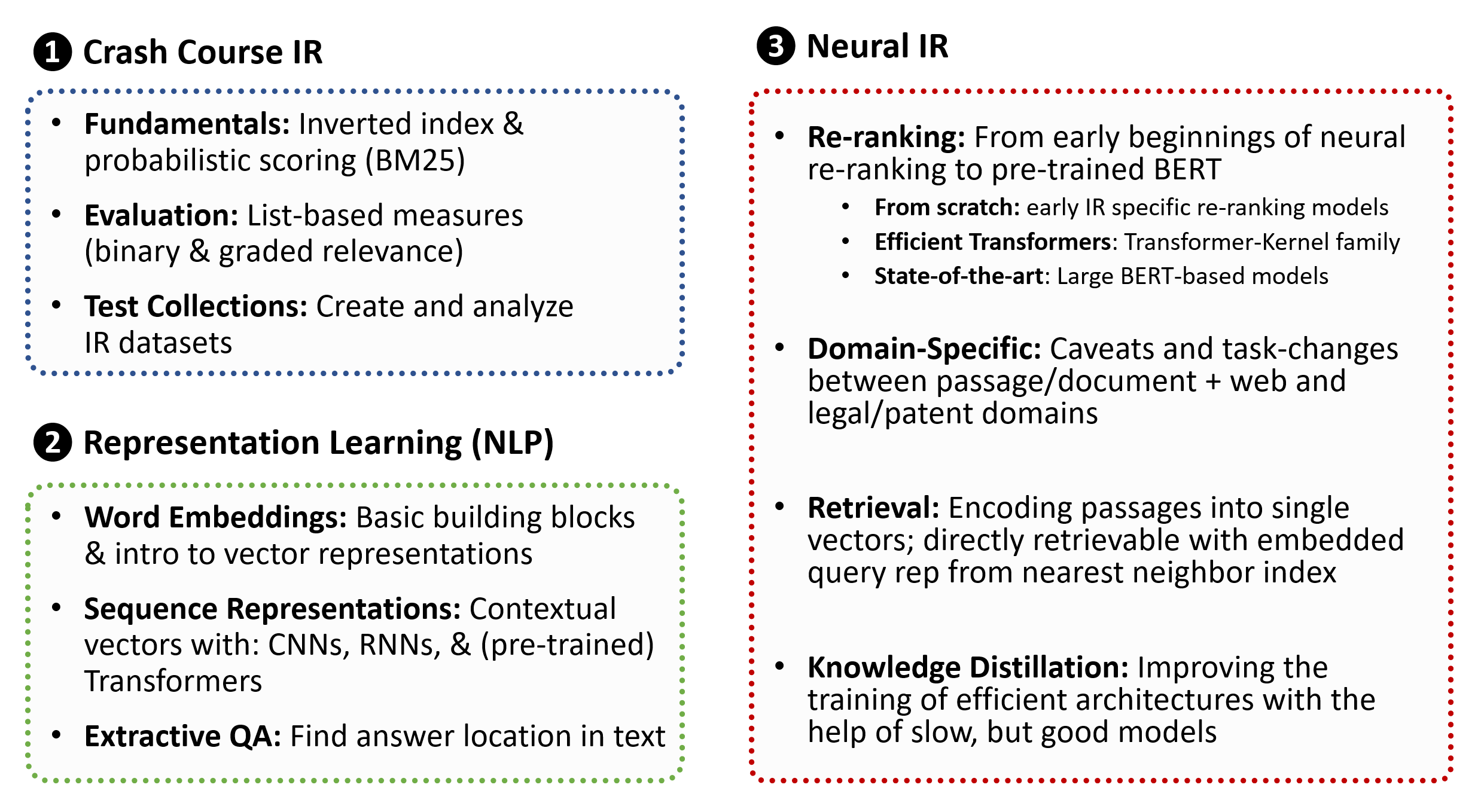

- The Advanced Information Retrieval course focuses on machine learning & neural IR techniques and tries to follow the state-of-the-art in IR research.

Please feel free to open up an issue or a pull request if you want to add something, find a mistake, or think something should be explained better!

Information Retrieval is the science behind search technology. Certainly, the most visible instances are the large Web Search engines, the likes of Google and Bing, but information retrieval appears everywhere we have to deal with unstructured data (e.g. free text).

A paradigm shift. Starting in 2019, the Information Retrieval research field began an enormous paradigm shift towards BERT-based language models in various forms. Trained on large-scale data, these models have brought huge leaps in the quality of search results. This course aims to showcase a slice of these advances in state-of-the-art IR research towards the next generation of search engines.

In the following we provide links to recordings, slides, and closed captions for our lectures. Here is a complete playlist on YouTube.

| Topic | Description | Recordings | Slides | Text |
|---|---|---|---|---|
| 0: Introduction | Information on requirements, topics, organization | YouTube | | Transcript |
| 1: Crash Course IR Fundamentals | We explore two fundamental building blocks of IR: indexing and ranked retrieval | YouTube | | Transcript |
| 2: Crash Course IR Evaluation | We explore how we evaluate ranked retrieval results and common IR metrics (MRR, MAP, NDCG) | YouTube | | Transcript |
| 3: Crash Course IR Test Collections | We get to know existing IR test collections, look at how to create your own, and survey potential biases & their effect in the data | YouTube | | Transcript |
| 4: Word Representation Learning | We take a look at word representations and basic word embeddings, including a usage example in Information Retrieval | YouTube | | Transcript |
| 5: Sequence Modelling | We look at CNNs and RNNs for sequence modelling, including the basics of the attention mechanism. | YouTube | | Transcript |
| 6: Transformer & BERT | We study the Transformer architecture, pre-training with BERT, and the HuggingFace ecosystem where the community can share models, and give an overview of Extractive Question Answering (QA). | YouTube | | Transcript |
| 7: Introduction to Neural Re‑Ranking | We look at the workflow (including training and evaluation) of neural re-ranking models and some basic neural re-ranking architectures. | YouTube | | Transcript |
| 8: Transformer Contextualized Re‑Ranking | We learn how to use Transformers (and the pre-trained BERT model) for neural re-ranking, both for the best possible results and for more efficient approaches, where we trade off quality for performance. | YouTube | | Transcript |
| 9: Domain Specific Applications (guest lecture by @sophiaalthammer) | We learn about different task settings, challenges, and solutions in domains other than web search. | YouTube | | Transcript |
| 10: Dense Retrieval ❤ Knowledge Distillation | We learn about the (potential) future of search: dense retrieval. We study the setup, specific models, and how to train DR models. Then we look at how knowledge distillation greatly improves the training of DR models and at topic-aware sampling to get state-of-the-art results. | YouTube | | Transcript |
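
As a small taste of the evaluation metrics named in lecture 2, here is a minimal Python sketch of MRR and nDCG. It is not taken from the course material: the function names are made up for illustration, the DCG uses the linear-gain variant (`rel / log2(rank + 1)`), and the ideal ranking is approximated from the labels of the retrieved list only, which is a simplifying assumption.

```python
import math


def reciprocal_rank(relevance):
    """Return 1/rank of the first relevant result, or 0.0 if none is relevant."""
    for rank, rel in enumerate(relevance, start=1):
        if rel > 0:
            return 1.0 / rank
    return 0.0


def mean_reciprocal_rank(rankings):
    """Average the reciprocal rank over a list of per-query relevance lists."""
    return sum(reciprocal_rank(r) for r in rankings) / len(rankings)


def dcg(relevance, k):
    """Discounted cumulative gain at cutoff k (linear gain, log2 discount)."""
    return sum(
        rel / math.log2(rank + 1)
        for rank, rel in enumerate(relevance[:k], start=1)
    )


def ndcg(relevance, k):
    """DCG normalized by the DCG of the same labels sorted in descending order.

    Assumption: the ideal ranking is built only from the labels in `relevance`,
    not from all judged documents for the query.
    """
    ideal = dcg(sorted(relevance, reverse=True), k)
    return dcg(relevance, k) / ideal if ideal > 0 else 0.0


if __name__ == "__main__":
    # Each inner list holds graded relevance labels in ranked order for one query.
    print(mean_reciprocal_rank([[0, 1, 0], [1, 0, 0]]))
    print(ndcg([0, 1], k=2))
```

Each input list holds the relevance labels of the returned documents in ranked order, so the metrics can be computed without access to the documents themselves.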

In this exercise, your group implements neural network re-ranking models, uses pre-trained extractive QA models, and analyzes their behavior with respect to our FiRA data.