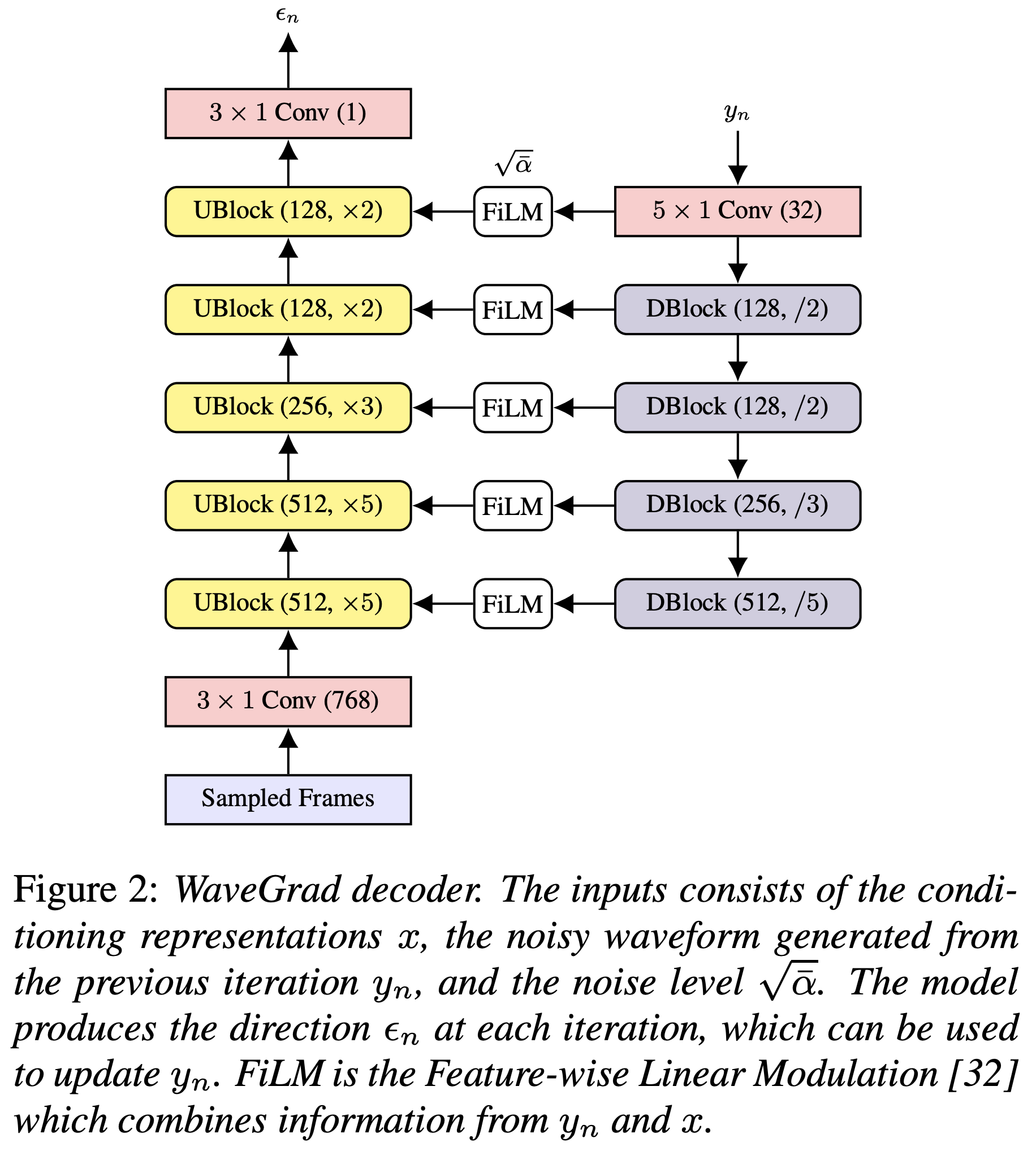

PyTorch Implementation of Google Brain's WaveGrad 2: Iterative Refinement for Text-to-Speech Synthesis.

- Working on

You can install the Python dependencies with

pip3 install -r requirements.txt

You have to download the pretrained models and put them in output/ckpt/LJSpeech/.

For English single-speaker TTS, run

python3 synthesize.py --text "YOUR_DESIRED_TEXT" --restore_step 900000 --mode single -p config/LJSpeech/preprocess.yaml -m config/LJSpeech/model.yaml -t config/LJSpeech/train.yaml

The generated utterances will be put in output/result/.

Batch inference is also supported, try

python3 synthesize.py --source preprocessed_data/LJSpeech/val.txt --restore_step 900000 --mode batch -p config/LJSpeech/preprocess.yaml -m config/LJSpeech/model.yaml -t config/LJSpeech/train.yaml

to synthesize all utterances in preprocessed_data/LJSpeech/val.txt

The speaking rate of the synthesized utterances can be controlled by specifying the desired duration ratios. For example, one can increase the speaking rate by 20 % by

python3 synthesize.py --text "YOUR_DESIRED_TEXT" --restore_step 900000 --mode single -p config/LJSpeech/preprocess.yaml -m config/LJSpeech/model.yaml -t config/LJSpeech/train.yaml --duration_control 0.8

The supported datasets are

- LJSpeech: a single-speaker English dataset consists of 13100 short audio clips of a female speaker reading passages from 7 non-fiction books, approximately 24 hours in total.

- (will be added more)

First, run

python3 prepare_align.py config/LJSpeech/preprocess.yaml

for some preparations.

As described in the paper, Montreal Forced Aligner (MFA) is used to obtain the alignments between the utterances and the phoneme sequences.

Alignments for the LJSpeech datasets are provided here (thanks to ming024's FastSpeech2).

You have to unzip the files in preprocessed_data/LJSpeech/TextGrid/.

After that, run the preprocessing script by

python3 preprocess.py config/LJSpeech/preprocess.yaml

Alternately, you can align the corpus by yourself. Download the official MFA package and run

./montreal-forced-aligner/bin/mfa_align raw_data/LJSpeech/ lexicon/librispeech-lexicon.txt english preprocessed_data/LJSpeech

or

./montreal-forced-aligner/bin/mfa_train_and_align raw_data/LJSpeech/ lexicon/librispeech-lexicon.txt preprocessed_data/LJSpeech

to align the corpus and then run the preprocessing script.

python3 preprocess.py config/LJSpeech/preprocess.yaml

Train your model with

python3 train.py -p config/LJSpeech/preprocess.yaml -m config/LJSpeech/model.yaml -t config/LJSpeech/train.yaml

Use

tensorboard --logdir output/log/LJSpeech

to serve TensorBoard on your localhost.

- Use

22050Hzinstead of24KHzand follow general LJSpeech configurations. - Add

nn.ReLU()activation at the end of the duration predictor to force the value positive. - Follow the Aligher of EATS: End-to-End Adversarial Text-to-Speech for the Gaussian upsampling, rather than that of Non-Attentive Tacotron.

@misc{lee2021wavegrad2,

author = {Lee, Keon},

title = {WaveGrad2},

year = {2021},

publisher = {GitHub},

journal = {GitHub repository},

howpublished = {\url{https://github.com/keonlee9420/WaveGrad2}}

}