A Python/PyTorch app for easily synthesising human voices

- Windows 10 or Ubuntu 20.04+ operating system

- 5GB+ Disk space

- NVIDIA GPU with at least 4GB of memory & driver version 450.36+ (optional)
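The requirements above can be checked before installing. The following is a minimal, standalone sketch that uses only the Python standard library; it is not part of the app itself. It assumes that `nvidia-smi` (installed with the NVIDIA driver) is on the PATH, reads "5GB" as 5 × 10⁹ bytes and "4GB" as 4096 MiB, and all function names are hypothetical. Some 4GB cards may report slightly less than 4096 MiB, so treat a borderline result as approximate.

```python
import shutil
import subprocess

# Minimums taken from the requirements list; the unit interpretations are assumptions.
MIN_DISK_GB = 5                 # "5GB" read as 5 * 10**9 bytes
MIN_GPU_MEMORY_MIB = 4 * 1024   # "4GB" read as 4096 MiB
MIN_DRIVER_VERSION = "450.36"


def parse_version(version: str) -> tuple[int, ...]:
    """Turn a dotted driver version such as '470.57.02' into (470, 57, 2)."""
    return tuple(int(part) for part in version.strip().split("."))


def meets_driver_requirement(version: str, minimum: str = MIN_DRIVER_VERSION) -> bool:
    """Compare versions numerically, so '450.100' counts as newer than '450.36'."""
    return parse_version(version) >= parse_version(minimum)


def has_enough_disk(path: str = ".", required_gb: float = MIN_DISK_GB) -> bool:
    """Check the free space on the drive that holds `path`."""
    return shutil.disk_usage(path).free >= required_gb * 10**9


def parse_nvidia_smi_line(line: str) -> tuple[str, int]:
    """Split one 'driver_version, memory.total' CSV line printed by nvidia-smi."""
    driver, memory = (field.strip() for field in line.split(","))
    return driver, int(memory)


def query_nvidia_gpus() -> list[tuple[str, int]]:
    """Return (driver_version, total_memory_MiB) per GPU, or [] if none are found."""
    if shutil.which("nvidia-smi") is None:
        return []
    try:
        result = subprocess.run(
            ["nvidia-smi", "--query-gpu=driver_version,memory.total",
             "--format=csv,noheader,nounits"],
            capture_output=True, text=True, check=True,
        )
    except (OSError, subprocess.CalledProcessError):
        return []
    gpus = []
    for line in result.stdout.splitlines():
        if not line.strip():
            continue
        try:
            gpus.append(parse_nvidia_smi_line(line))
        except ValueError:
            continue  # skip lines with unexpected fields such as "[N/A]"
    return gpus


if __name__ == "__main__":
    print("Enough disk space:", has_enough_disk())
    gpus = query_nvidia_gpus()
    if not gpus:
        print("No NVIDIA GPU detected (a GPU is optional)")
    for index, (driver, memory_mib) in enumerate(gpus):
        ok = meets_driver_requirement(driver) and memory_mib >= MIN_GPU_MEMORY_MIB
        print(f"GPU {index}: driver {driver}, {memory_mib} MiB, meets requirements: {ok}")
```

Comparing the driver version as a tuple of integers avoids the trap of plain string comparison, where "450.100" would wrongly sort before "450.36".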

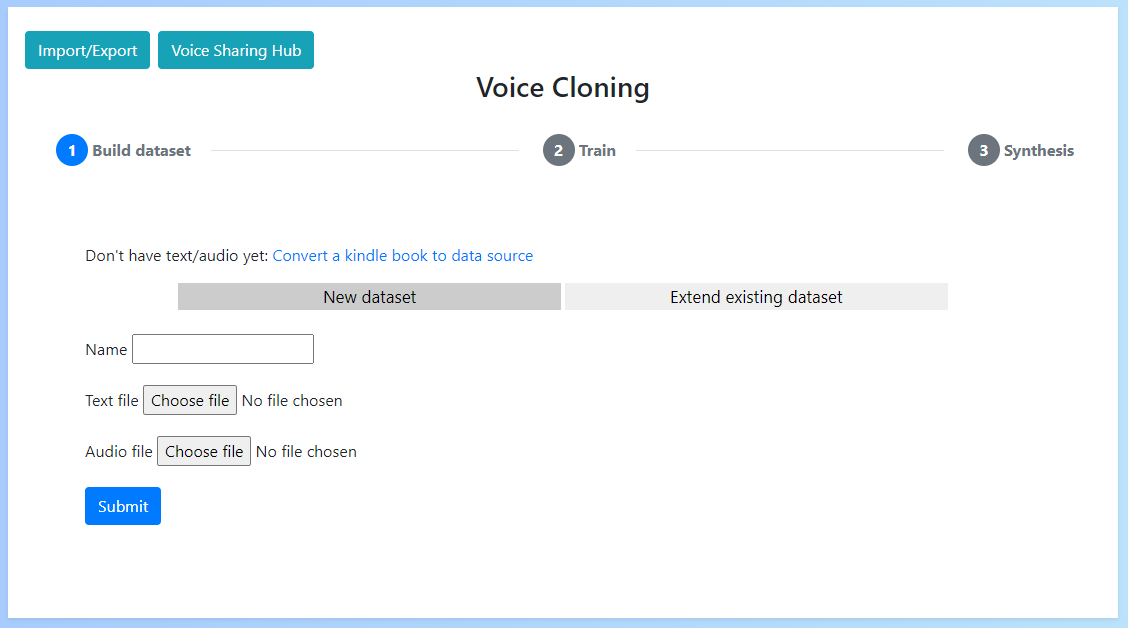

- Automatic dataset generation

- Additional language support

- Local & remote training

- Easy train start/stop

- Tools for extracting Kindle & Audible content as data sources

- Data importing/exporting

- Word replacement suggestion

- Multi GPU support

- Add support for alternative models

- Improved batch size estimation

- AMD GPU support

- Try out existing voices at uberduck.ai and Vocodes

- Synthesize in Colab (created by mega b#6696)

- Generate YouTube transcriptions (created by mega b#6696)

- Wit.ai transcription

This project uses a reworked version of Tacotron2 & WaveGlow. All rights for these belong to NVIDIA, and their use follows the requirements of NVIDIA's BSD-3 licence.

Additionally, the project uses DSAlign, Silero, DeepSpeech & HiFi-GAN.

Thank you to Dr. John Bustard at Queen's University Belfast for his support throughout the project.

Supported by uberduck.ai; reach out to them for live model hosting.

Also a big thanks to the members of the VocalSynthesis subreddit for their feedback.

Finally, thank you to everyone who has raised issues and contributed to the project.