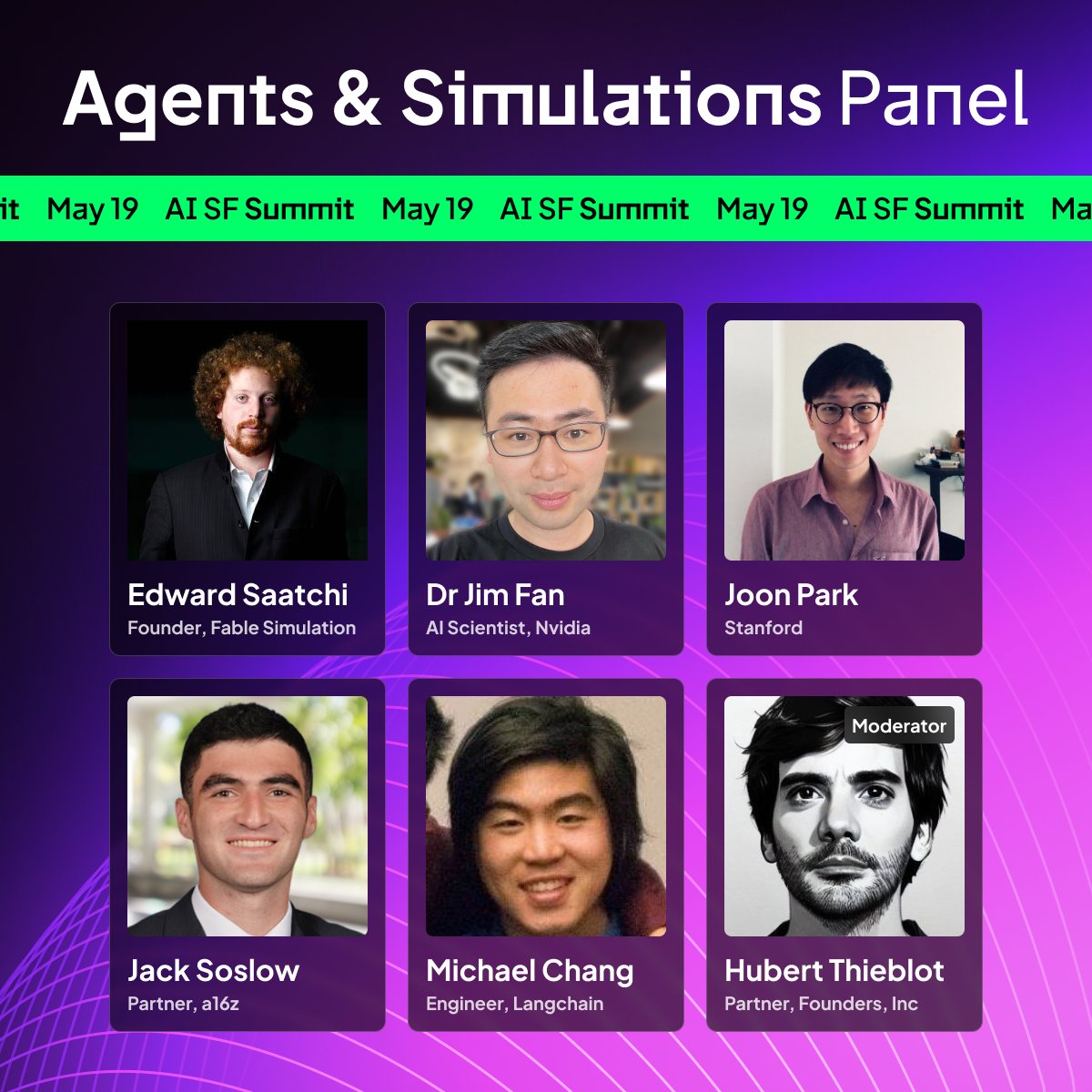

Missed the panel discussion at the AI SF Summit (https://aisf.co/) on May 19, 2023?

Never fear: with this repo, you can re-simulate the panel discussion on any topic at your leisure.

Play with it here.

A key challenge in building multi-agent simulations is determining the order in which the agents speak. This application addresses it with a "director" agent that decides which agent speaks next. You can find an example of how to implement this method, as well as other speaker selection methods, in the LangChain docs.
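The director idea can be illustrated with a minimal, library-free sketch. This is not the code used in this repo or in the LangChain docs: `Panelist`, `least_recent_director`, and `run_panel` are hypothetical names, the panelist replies are placeholders for LLM calls, and the director here is a simple deterministic rule standing in for an LLM that would read the conversation and choose the next speaker.

```python
from dataclasses import dataclass
from typing import Callable, List, Tuple

# One (speaker name, message) pair per turn.
History = List[Tuple[str, str]]


@dataclass
class Panelist:
    name: str

    def speak(self, topic: str, history: History) -> str:
        # Placeholder: a real panelist would call an LLM with its persona and the history.
        return f"{self.name} comments on {topic} (turn {len(history) + 1})"


def least_recent_director(panelists: List[Panelist], history: History) -> int:
    """Hypothetical stand-in for an LLM director.

    Returns the index of the panelist who spoke least recently
    (panelists who have not spoken yet come first).
    """
    speakers = [name for name, _ in history]

    def last_turn(panelist: Panelist) -> int:
        # Index of the panelist's most recent turn, or -1 if they have not spoken.
        for i in range(len(speakers) - 1, -1, -1):
            if speakers[i] == panelist.name:
                return i
        return -1

    return min(range(len(panelists)), key=lambda i: last_turn(panelists[i]))


def run_panel(
    topic: str,
    panelists: List[Panelist],
    select_next: Callable[[List[Panelist], History], int],
    max_turns: int = 4,
) -> History:
    """Run the dialogue loop: the director picks a speaker, then that speaker talks."""
    history: History = []
    for _ in range(max_turns):
        speaker = panelists[select_next(panelists, history)]
        history.append((speaker.name, speaker.speak(topic, history)))
    return history


if __name__ == "__main__":
    panel = [Panelist("Alice"), Panelist("Bob"), Panelist("Carol")]
    for name, message in run_panel("open-source models", panel, least_recent_director):
        print(f"{name}: {message}")
```

Because the selection function is passed in as `select_next`, the deterministic rule could be swapped for an LLM-backed director without changing the dialogue loop.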

Created by Michael Chang (@mmmbchang) at LangChain.

Install the dependencies with: `pip install -r requirements.txt`

First, obtain an OpenAI API key. If you want audio playback of the dialogue, also obtain an ElevenLabs API key.

Run with: `python -m streamlit run main.py`

This application simulates a fake dialogue among fictitious renditions of real people. To protect the privacy of the individuals represented, note that:

- the content generated by this application is entirely synthetic and does not represent actual statements or opinions of the individuals represented;

- the voices used in this application are synthetically generated by ElevenLabs and do not reflect the voices of the individuals represented.

This application is intended for demonstration and entertainment purposes only. The creator of this application takes no responsibility for any actions taken based on its content.

This application is powered by OpenAI and created with LangChain, Streamlit, and ElevenLabs. The UI design was inspired by WhatIfGPT. Thank you to the LangChain team for their support and feedback.