Minimal unofficial implementation of Consistency Trajectory Models (CTM) as proposed in paper_link. There is a high chance that some implementation errors remain, since everything was implemented from the paper alone, without reference code. To run high-resolution image generation experiments, please also check out the original code from the authors: ctm.

Install the package in editable mode with `pip install -e .`

Consistency Trajectory Models are a new class of generative models, closely related to diffusion models, that learn to model the trajectory of Probability Flow ODEs directly. Diffusion models learn to predict the denoised action.

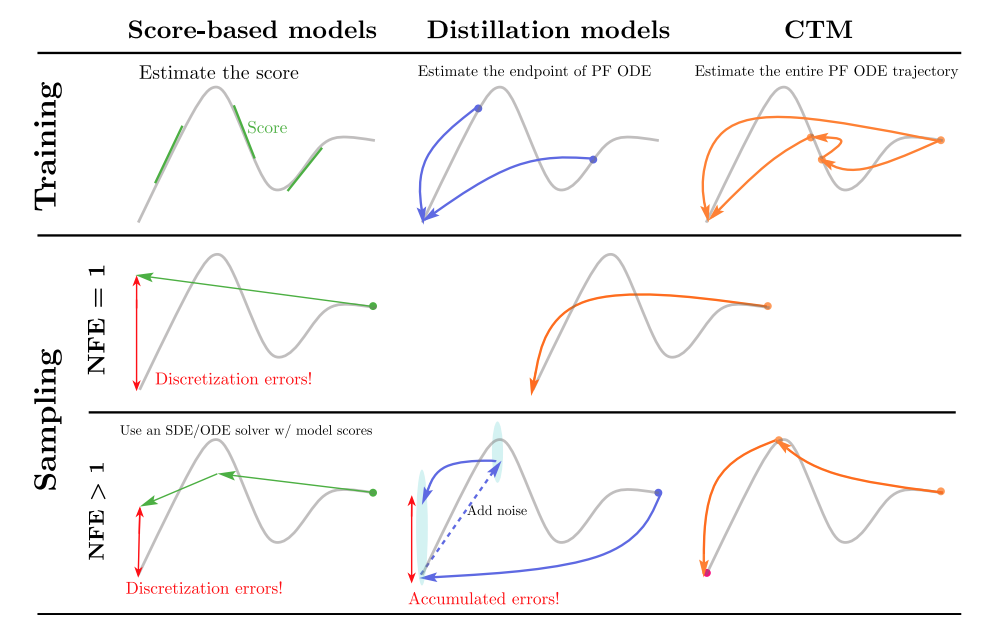

Overview of the different model classes with respect to sampling from the ODE

with the standard Karras et al. (2022) preconditioning functions inside
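For reference, the preconditioning functions from Karras et al. (2022) can be sketched as below. This is a minimal illustration rather than the code used in this repository: `raw_net`, `SIGMA_DATA` and both function names are hypothetical, and how the additional target time `s` is fed to the network is not covered here.

```python
import math

SIGMA_DATA = 0.5  # assumed data standard deviation (hypothetical default)


def karras_preconditioning(sigma, sigma_data=SIGMA_DATA):
    """Return (c_skip, c_out, c_in, c_noise) for a noise level sigma > 0."""
    total_var = sigma ** 2 + sigma_data ** 2
    c_skip = sigma_data ** 2 / total_var               # weight of the skip connection
    c_out = sigma * sigma_data / math.sqrt(total_var)  # scale of the network output
    c_in = 1.0 / math.sqrt(total_var)                  # scale of the network input
    c_noise = 0.25 * math.log(sigma)                   # noise-level conditioning value
    return c_skip, c_out, c_in, c_noise


def preconditioned_denoiser(raw_net, x, sigma, sigma_data=SIGMA_DATA):
    """Wrap a raw network raw_net(scaled_x, c_noise) into a denoiser."""
    c_skip, c_out, c_in, c_noise = karras_preconditioning(sigma, sigma_data)
    return c_skip * x + c_out * raw_net(c_in * x, c_noise)
```

For small `sigma` the skip weight approaches 1 and the output scale approaches 0, so the wrapped model returns its input almost unchanged at low noise levels.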

The original paper proposes a training objective that consists of a score matching objective and a consistency loss, combined with an additional GAN loss. However, since we do not want to use GAIL-style training (yet), we only use the score matching objective and the consistency loss.

The score matching objective is defined as follows:

The soft consistency matching loss is defined as:

where the

and

The soft consistency loss tries to enforce both local and global consistency of the model, which should enable small jumps for ODE-like solvers and large jumps for few-step generation.
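To make the two objectives more concrete, here is a scalar toy sketch. It assumes the parameterization G(x_t, t, s) = (s/t) x_t + (1 - s/t) g(x_t, t, s) from the CTM paper; all function names are hypothetical and this is not the code used in this repository. `denoiser` stands for whatever drives the ODE solver: a teacher model, or, when training from scratch, possibly the model's own `g(x, t, t)`.

```python
def G(g, x, t, s):
    """Jump from time t to time s (0 <= s <= t, t > 0) along the ODE trajectory."""
    return (s / t) * x + (1.0 - s / t) * g(x, t, s)


def dsm_loss(g, x_0, x_t, t):
    """Score matching term: g(x_t, t, t) should recover the clean sample x_0."""
    return (x_0 - g(x_t, t, t)) ** 2


def euler_solve(denoiser, x, t, u, n_steps=1):
    """Euler integration of dx/dt = (x - denoiser(x, t)) / t from t down to u."""
    for i in range(n_steps):
        t_cur = t + (u - t) * i / n_steps
        t_next = t + (u - t) * (i + 1) / n_steps
        x = x + (t_next - t_cur) * (x - denoiser(x, t_cur)) / t_cur
    return x


def soft_consistency_loss(g, denoiser, x_t, t, u, s):
    """Compare a direct jump t -> s with 'solve t -> u, then jump u -> s'.

    Requires t > u >= s > 0. Both results are mapped to time 0 before the
    comparison. In an autodiff framework, every model call except the inner
    G(g, x_t, t, s) would sit behind a stop-gradient.
    """
    x_est = G(g, G(g, x_t, t, s), s, 0.0)
    x_u = euler_solve(denoiser, x_t, t, u)
    x_target = G(g, G(g, x_u, u, s), s, 0.0)
    return (x_est - x_target) ** 2
```

Choosing `u` close to `t` compares against a short solver step (local consistency), while choosing `u` close to `s` compares against a long solver run (global consistency). For a dataset consisting of a single point, a model that always returns that point is exactly consistent, so both losses vanish for it.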

For our application the GAN loss is not usable, so I have not implemented it yet.

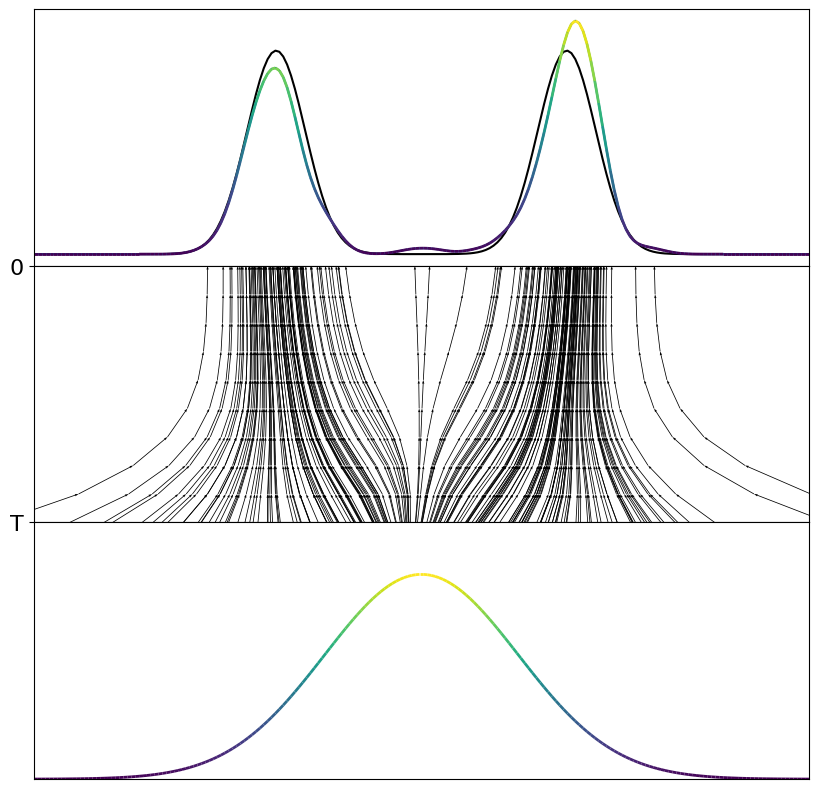

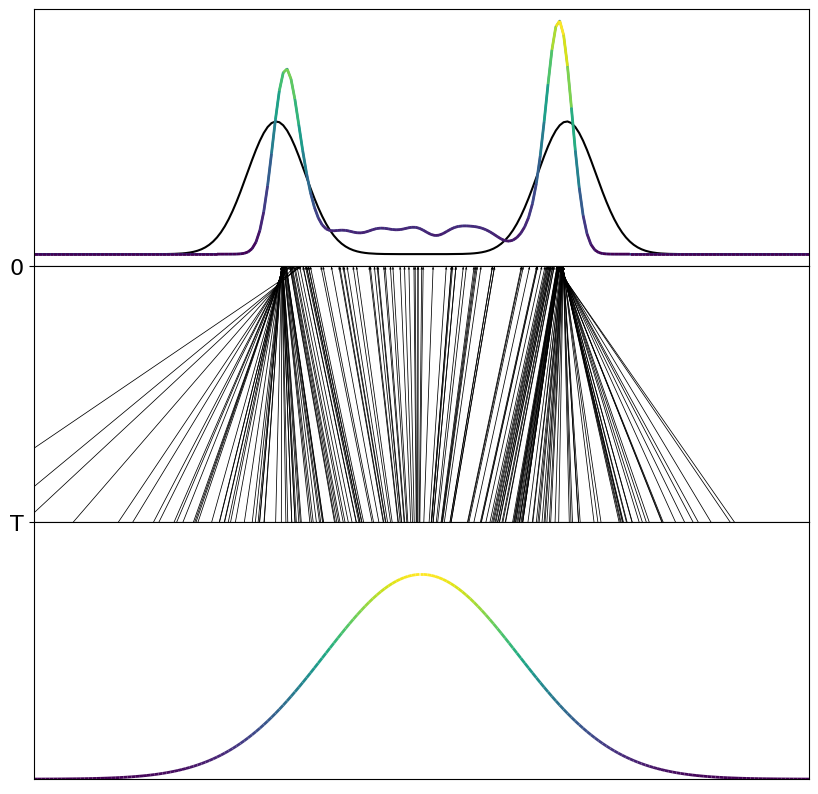

Here are some first results of CTM trained from scratch without a teacher model. Experiments with teacher models still need to be run.

From left to right: CTM with 10 Euler steps, multistep prediction, and single-step prediction with CTM.
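The three sampling modes named in the caption can be sketched roughly as follows, again on scalars and assuming the G(x_t, t, s) = (s/t) x_t + (1 - s/t) g(x_t, t, s) parameterization from the CTM paper. The function names and the linear time grid are hypothetical, and "multistep" is read here as deterministically chaining jumps, which may differ from what this repository does.

```python
def G(g, x, t, s):
    """Jump from time t to time s (0 <= s <= t, t > 0)."""
    return (s / t) * x + (1.0 - s / t) * g(x, t, s)


def time_grid(t_max, n_steps):
    """n_steps + 1 linearly spaced times from t_max down to 0 (assumed schedule)."""
    return [t_max * (1.0 - i / n_steps) for i in range(n_steps + 1)]


def sample_single_step(g, x_T, t_max):
    """One large jump from t_max directly to time 0."""
    return G(g, x_T, t_max, 0.0)


def sample_multistep(g, x_T, t_max, n_steps):
    """Chain n_steps smaller jumps t_i -> t_{i+1} down to time 0."""
    x = x_T
    ts = time_grid(t_max, n_steps)
    for t, s in zip(ts[:-1], ts[1:]):
        x = G(g, x, t, s)
    return x


def sample_euler(g, x_T, t_max, n_steps):
    """Euler steps on the ODE, using g(x, t, t) as the denoiser."""
    x = x_T
    ts = time_grid(t_max, n_steps)
    for t, s in zip(ts[:-1], ts[1:]):
        d = (x - g(x, t, t)) / t  # ODE derivative at the current time
        x = x + (s - t) * d
    return x
```

For a perfectly consistent model all three samplers return the same point. In practice they differ, which matches the gap between the 10-step and single-step results reported here.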

Right now the model performs significantly better with 10 steps. I am working on improving the single-step prediction. Let's see what's possible. However, even the main author of the consistency model paper noted in his recent rebuttal (link) that consistency models work better on high-dimensional datasets, so the same may hold for CTM.

...

- Implement the new sampling method

- Add new toy tasks

- Compare teacher and student models vs from scratch training

- Find good hyperparameters

- Work on better single step inference

- Add more documentation

- The model is based on the paper Consistency Trajectory Models

If you found this code helpful, please cite the original paper:

@article{kim2023consistency,
  title={Consistency Trajectory Models: Learning Probability Flow ODE Trajectory of Diffusion},
  author={Kim, Dongjun and Lai, Chieh-Hsin and Liao, Wei-Hsiang and Murata, Naoki and Takida, Yuhta and Uesaka, Toshimitsu and He, Yutong and Mitsufuji, Yuki and Ermon, Stefano},
  journal={arXiv preprint arXiv:2310.02279},
  year={2023}
}

---