
Despite the success of large-scale pretrained Vision-Language Models (VLMs), especially CLIP, in various open-vocabulary tasks, their application to semantic segmentation remains challenging, producing noisy segmentation maps with mis-segmented regions. In this paper, we carefully re-investigate the architecture of CLIP and identify residual connections as the primary source of noise that degrades segmentation quality. Through a comparative analysis of the statistical properties of the residual connection and the attention output across different pretrained models, we discover that CLIP's image-text contrastive training paradigm emphasizes global features at the expense of local discriminability, leading to noisy segmentation results. In response, we propose ClearCLIP, a novel approach that decomposes CLIP's representations to enhance open-vocabulary semantic segmentation. We introduce three simple modifications to the final layer: removing the residual connection, implementing self-self attention, and discarding the feed-forward network. ClearCLIP consistently generates clearer and more accurate segmentation maps and outperforms existing approaches across multiple benchmarks, affirming the significance of our discoveries.
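The three final-layer modifications described above can be illustrated with a small NumPy sketch. This is not the repository's implementation: it assumes a single attention head, uses query-query attention as one possible form of self-self attention, and the function and weight names (`final_layer_sketch`, `w_q`, `w_v`, `w_o`) are hypothetical, with random weights standing in for pretrained ones.

```python
import numpy as np

def softmax(x, axis=-1):
    # Numerically stable softmax along the given axis.
    x = x - x.max(axis=axis, keepdims=True)
    e = np.exp(x)
    return e / e.sum(axis=axis, keepdims=True)

def layer_norm(x, eps=1e-5):
    # Per-token normalization without learned scale/shift (simplification).
    mu = x.mean(axis=-1, keepdims=True)
    var = x.var(axis=-1, keepdims=True)
    return (x - mu) / np.sqrt(var + eps)

def final_layer_sketch(x, w_q, w_v, w_o):
    """Hypothetical final layer: x has shape (tokens, dim)."""
    h = layer_norm(x)
    q = h @ w_q
    v = h @ w_v
    # Self-self attention (assumed query-query): q attends to q, not to keys.
    attn = softmax(q @ q.T / np.sqrt(q.shape[-1]))
    # Output is the projected attention output only:
    # no residual "+ x" and no feed-forward network afterwards.
    return (attn @ v) @ w_o

rng = np.random.default_rng(0)
tokens, dim = 5, 8
x = rng.normal(size=(tokens, dim))
w_q, w_v, w_o = (rng.normal(size=(dim, dim)) * 0.1 for _ in range(3))
out = final_layer_sketch(x, w_q, w_v, w_o)
print(out.shape)  # (5, 8)
```

Because the residual path is dropped, the output depends on the input only through the normalized attention branch. A standard block would instead add `x` back to the attention output and then apply the feed-forward network.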

    # clone this repository
    git clone https://github.com/mc-lan/ClearCLIP.git
    cd ClearCLIP

    # create a new conda environment
    conda create -n ClearCLIP python=3.10
    conda activate ClearCLIP

    # install torch and dependencies
    pip install -r requirements.txt

We include the following dataset configurations in this repo:

- With background class: PASCAL VOC, PASCAL Context, Cityscapes, ADE20k, and COCO-Stuff164k.
- Without background class: VOC20, Context59 (i.e., PASCAL VOC and PASCAL Context without the background category), and COCO-Object.

Please follow the MMSeg data preparation document to download and pre-process the datasets. The COCO-Object dataset can be converted from COCO-Stuff164k by executing the following command:

    python datasets/cvt_coco_object.py PATH_TO_COCO_STUFF164K -o PATH_TO_COCO164K

Run the demo:

    python demo.py

Single-GPU:

    python eval.py --config ./config/cfg_DATASET.py --workdir YOUR_WORK_DIR

Multi-GPU:

    bash ./dist_test.sh ./config/cfg_DATASET.py

Evaluation on all datasets:

    python eval_all.py

Results will be saved in results.xlsx.

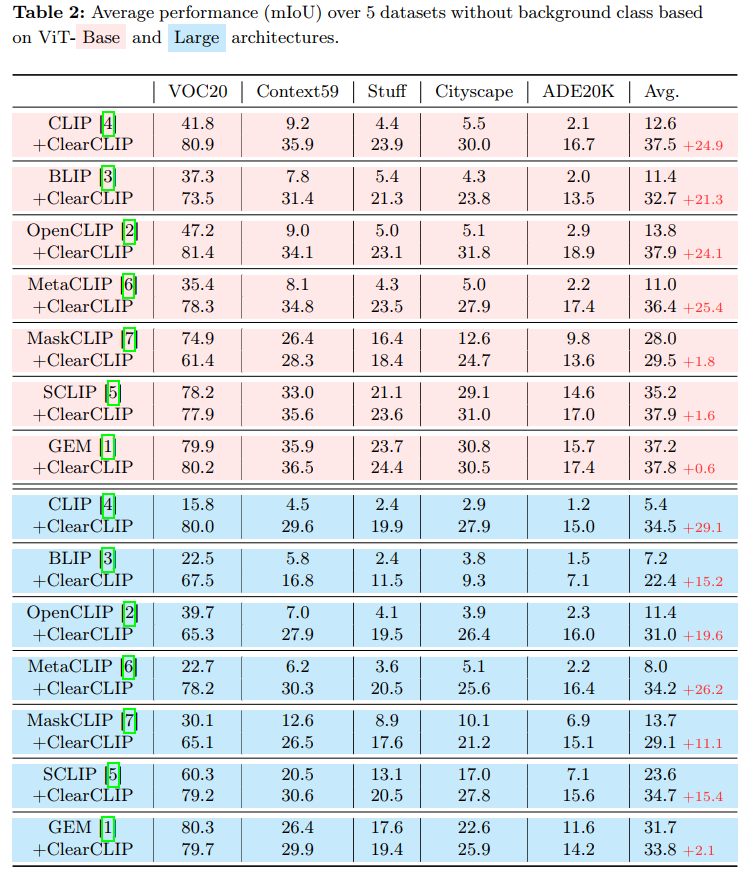

We provide comparison results (in the Appendix) on five datasets without a background class, obtained with our implementation.

    @inproceedings{lan2024clearclip,
      title={ClearCLIP: Decomposing CLIP Representations for Dense Vision-Language Inference},
      author={Mengcheng Lan and Chaofeng Chen and Yiping Ke and Xinjiang Wang and Litong Feng and Wayne Zhang},
      booktitle={ECCV},
      year={2024},
    }

This project is licensed under NTU S-Lab License 1.0. Redistribution and use should follow this license.

This study is supported under the RIE2020 Industry Alignment Fund – Industry Collaboration Projects (IAF-ICP) Funding Initiative, as well as cash and in-kind contribution from the industry partner(s).

This implementation is based on OpenCLIP and SCLIP. Thanks for the awesome work.

If you have any questions, please feel free to reach out at lanm0002@e.ntu.edu.sg.