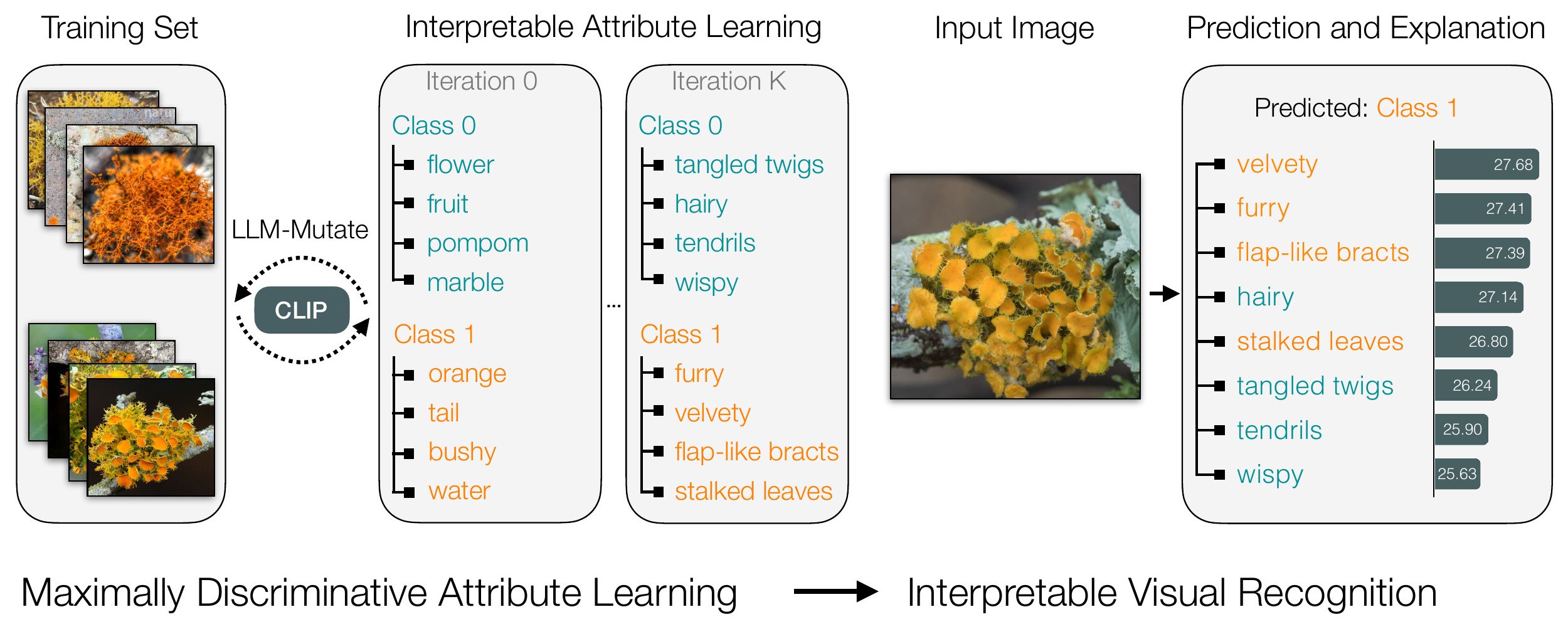

This is the code for the paper Evolving Interpretable Visual Classifiers with Large Language Models by Mia Chiquier, Utkarsh Mall and Carl Vondrick.

Clone recursively:

git clone --recurse-submodules https://github.com/mchiquier/llm-mutate.git

After cloning:

cd llm-mutate

export PATH=/usr/local/cuda/bin:$PATH

bash setup.sh # This may take a while. Make sure the llm-mutate environment is active

- Clone this repository with its submodules.

- Install the dependencies. See Dependencies.

- Install vLLM. See vLLM.

git clone --recurse-submodules https://github.com/mchiquier/llm-mutate.git

First, create a conda environment using setup_env.sh.

To do so, cd into the llm-mutate directory and run:

export PATH=/usr/local/cuda/bin:$PATH

bash setup_env.sh

conda activate llmmutate

Launch a server that hosts the LLM with vLLM in a tmux session.

python -m vllm.entrypoints.openai.api_server --model meta-llama/Llama-2-70b-chat-hf --tensor-parallel-size 8 --chat-template ./examples/template_chatml.jinja --trust-remote-code
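Once the server is up, it can be queried over vLLM's OpenAI-compatible HTTP API. The following is a minimal sketch, not code from this repository: it assumes the server listens on vLLM's default address (http://localhost:8000) and that the model name matches the --model flag above. The helper names build_chat_request and query_llm are hypothetical.

```python
import json
import urllib.request

# Assumption: the vLLM server runs locally on its default port (8000).
BASE_URL = "http://localhost:8000/v1"
# Must match the --model flag passed to the server.
MODEL = "meta-llama/Llama-2-70b-chat-hf"


def build_chat_request(prompt, model=MODEL, base_url=BASE_URL, max_tokens=64):
    """Build (but do not send) a chat-completion request for the server."""
    payload = {
        "model": model,
        "messages": [{"role": "user", "content": prompt}],
        "max_tokens": max_tokens,
    }
    return urllib.request.Request(
        url=f"{base_url}/chat/completions",
        data=json.dumps(payload).encode("utf-8"),
        headers={"Content-Type": "application/json"},
        method="POST",
    )


def query_llm(prompt, **kwargs):
    """Send the request and return the text of the first completion."""
    with urllib.request.urlopen(build_chat_request(prompt, **kwargs)) as resp:
        body = json.load(resp)
    return body["choices"][0]["message"]["content"]
```

With the server running, calling query_llm("Reply with one word.") should return the model's reply as a string. A connection error here usually means the server is still loading the model or is bound to a different port.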

Download the iNaturalist dataset (specifically, 'train' and 'validation') from: https://github.com/visipedia/inat_comp/tree/master/2021

In config.py, set the dataset path to the parent folder of train and val: self.dataset_path = YOUR_PATH.

Download the two KikiBouba datasets here:

- KikiBouba_v1: https://drive.google.com/file/d/1-a4FRS9N1DLf3_YIYq8150zN1uilapft/view?usp=sharing

- KikiBouba_v2: https://drive.google.com/file/d/17ibF3tzFiZrMb9ZnpYlLEh-xmWkPJpNH/view?usp=sharing

Remember to update the dataset_path in the config.py file.

Running the command below automatically launches both pre-training and joint training on the iNaturalist dataset for the Lichen synset. To change the synset, modify the config file. See the paper for an explanation of pre-training and joint training.

python src/llm-mutate.py

To run LLM-Mutate on your own dataset, update the dataset path in config.py and make sure it points to a folder with the following structure:

dataset-root/
│
├── train/
│   ├── class 1/
│   │   ├── img1.png
│   │   ├── img2.png
...
├── val/
│   ├── class 1/
│   │   ├── img1.png
│   │   ├── img2.png

NOTE: By default, we only run on the first 5 classes in your folder structure for time efficiency. If you'd like to change this, modify the num_classes attribute in config.py.

python src/llm-mutate.py
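Before launching a run on a custom dataset, it can help to confirm that the folder matches the layout described above. The sketch below is a hypothetical helper, not part of this repository. It assumes that "the first 5 classes" means the first five class folders in alphabetical order, which may differ from how the code actually orders them.

```python
from pathlib import Path


def list_classes(dataset_root, split, num_classes=5):
    """Return the first `num_classes` class folder names under dataset_root/split.

    Assumption: "first" means alphabetical order; the repository may order
    classes differently.
    """
    split_dir = Path(dataset_root) / split
    if not split_dir.is_dir():
        raise FileNotFoundError(f"missing split folder: {split_dir}")
    classes = sorted(p.name for p in split_dir.iterdir() if p.is_dir())
    return classes[:num_classes]


def check_layout(dataset_root, num_classes=5):
    """Check that train/ and val/ exist and the selected classes contain files."""
    selected = {}
    for split in ("train", "val"):
        names = list_classes(dataset_root, split, num_classes)
        if not names:
            raise ValueError(f"no class folders found in {split}/")
        for name in names:
            class_dir = Path(dataset_root) / split / name
            if not any(f.is_file() for f in class_dir.iterdir()):
                raise ValueError(f"no images in {class_dir}")
        selected[split] = names
    return selected
```

Calling check_layout(YOUR_PATH) with the same path used for dataset_path returns the class folders found in each split, or raises an error naming the missing folder.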

Specify which experiment to evaluate via the experiment attribute in config.py. You can pick from: zero_shot, clip_scientific, clip_common, cbd_scientific, cbd_common, ours. Note that for the KikiBouba datasets there is no difference between scientific and common, as there is only one class name per class.

python src/inference.py
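The experiment names above can be checked before they are written into config.py. This is a small illustrative sketch with hypothetical helper names; it only encodes the list of options and the note about KikiBouba from the text, and does not reflect how the repository itself parses the attribute.

```python
# Experiment names as listed in this README.
EXPERIMENTS = (
    "zero_shot",
    "clip_scientific",
    "clip_common",
    "cbd_scientific",
    "cbd_common",
    "ours",
)


def validate_experiment(name):
    """Return `name` unchanged if it is a listed experiment, else raise."""
    if name not in EXPERIMENTS:
        raise ValueError(f"unknown experiment {name!r}; choose from {EXPERIMENTS}")
    return name


def distinct_experiments(single_name_per_class=False):
    """List the experiments worth running.

    When each class has only one name (as in KikiBouba), the *_common
    variants duplicate the *_scientific ones, so they are dropped.
    """
    if not single_name_per_class:
        return list(EXPERIMENTS)
    return [e for e in EXPERIMENTS if not e.endswith("_common")]
```

For a KikiBouba run, distinct_experiments(single_name_per_class=True) leaves four settings to evaluate instead of six.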

If you use this code, please consider citing the paper as:

@article{chiquier2024evolving,
  title={Evolving Interpretable Visual Classifiers with Large Language Models},
  author={Chiquier, Mia and Mall, Utkarsh and Vondrick, Carl},
  journal={arXiv preprint arXiv:2404.09941},
  year={2024}
}