Shu Miao,Guang Chen,Xiangyu Ning,Yang Zi,Kejia Ren,Zhenshan Bing,Alois Knoll

Institute of Intelligent Vehicle, Tongji University, Shanghai, China

Technical University Munich, Munich, Germany

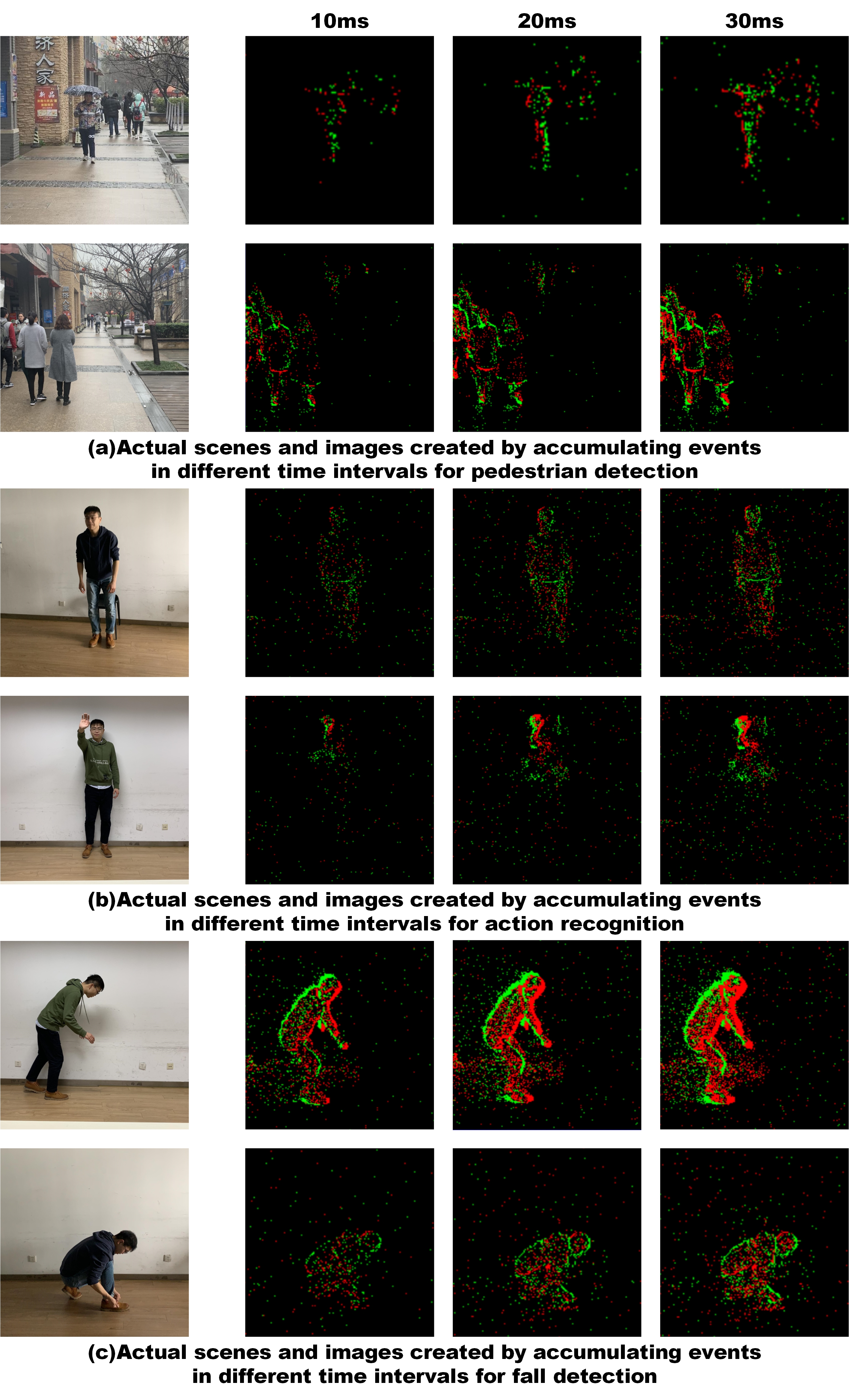

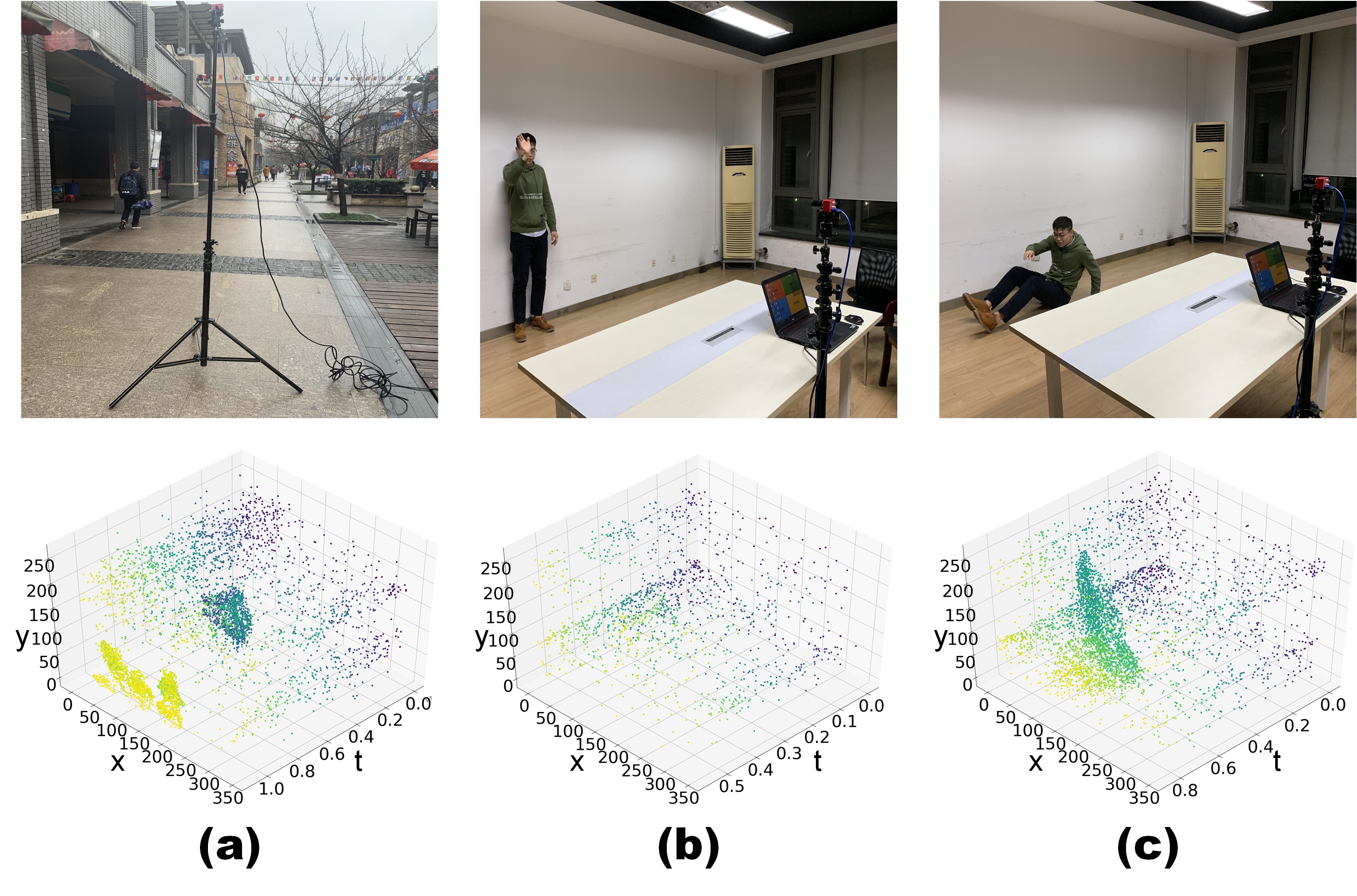

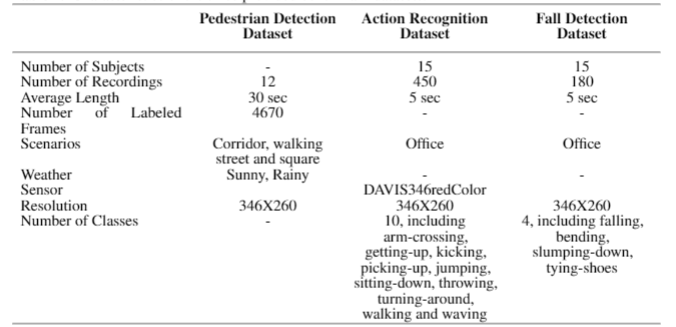

The characteristics of the three datasets are summarized in the following table and described in detail below.

All datasets are hosted on a cloud server and can be downloaded through the links below.

- Pedestrian Detection Dataset download
- Action Recognition Dataset download
- Fall Detection Dataset download

A large part of the raw pedestrian detection data was converted into 4670 frame images using the SAE encoding method with a frame interval of 20 ms. In our experiment, all of these images were labelled with the annotation tool labelImg.
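As a rough illustration of SAE encoding with 20 ms frames, the sketch below splits an event stream into fixed windows and, for each pixel, keeps the timestamp of its most recent event within the window. It is a minimal sketch, not the released code: it assumes events arrive as arrays `x`, `y`, `t` (timestamps in microseconds, sorted ascending), and the function name `sae_frames` and the 1 to 254 brightness scaling are our own illustrative choices.

```python
import numpy as np


def sae_frames(x, y, t, width, height, interval_us=20_000):
    """Build one Surface of Active Events (SAE) frame per fixed time window.

    Sketch only: x, y are integer pixel coordinates and t are timestamps in
    microseconds sorted in ascending order (an assumed input layout).
    """
    x = np.asarray(x, dtype=np.int64)
    y = np.asarray(y, dtype=np.int64)
    t = np.asarray(t, dtype=np.int64)
    if t.size == 0:
        return []
    start = t[0]
    window_idx = (t - start) // interval_us        # window each event falls into
    frames = []
    for w in range(int(window_idx[-1]) + 1):
        mask = window_idx == w
        t0 = start + w * interval_us                # start time of this window
        # -1 marks "no event"; otherwise keep the latest time offset in the window.
        surface = np.full((height, width), -1, dtype=np.int64)
        np.maximum.at(surface, (y[mask], x[mask]), t[mask] - t0)
        # Map offsets [0, interval_us) to brightness 1..254 (recent = brighter); 0 = no event.
        frame = np.where(surface >= 0, 1 + surface * 254 // interval_us, 0)
        frames.append(frame.astype(np.uint8))
    return frames
```

For example, `sae_frames([0, 1, 1], [0, 0, 0], [0, 5_000, 25_000], width=2, height=1)` returns two frames, `[[1, 64]]` and `[[0, 64]]`. `np.maximum.at` is used instead of plain fancy assignment because it is well defined when several events hit the same pixel.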

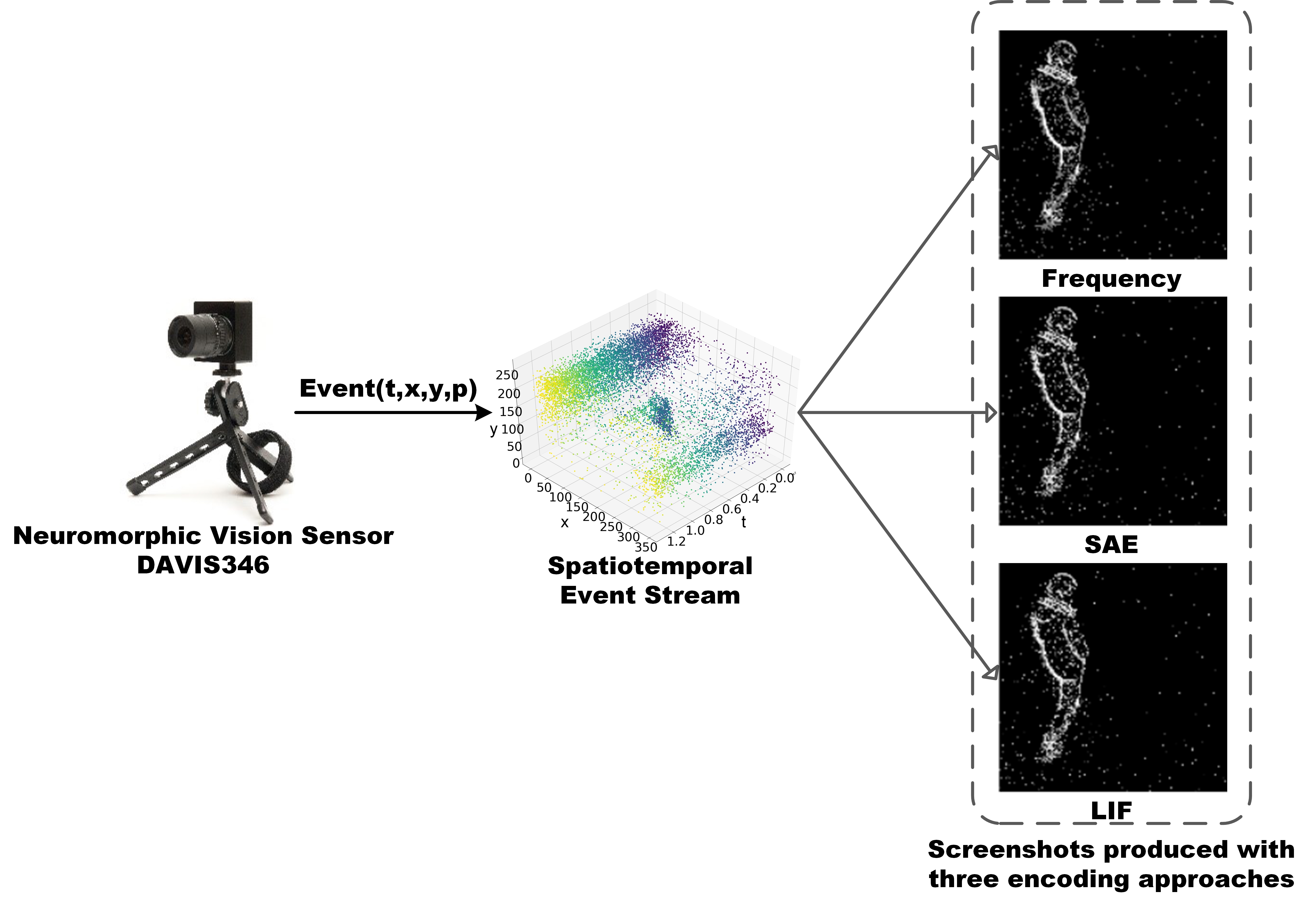
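labelImg can save annotations in several formats, including PASCAL VOC XML files and YOLO text files; this page does not state which one the released labels use. Assuming VOC XML, a minimal reader for the bounding boxes could look like the sketch below (`read_voc_boxes` is a hypothetical helper, and the `person` label in the example is illustrative).

```python
import xml.etree.ElementTree as ET


def read_voc_boxes(xml_text):
    """Parse a PASCAL VOC annotation into (label, xmin, ymin, xmax, ymax) tuples.

    Assumes the labelImg output was saved in VOC XML format.
    """
    root = ET.fromstring(xml_text)
    boxes = []
    for obj in root.iter("object"):
        bb = obj.find("bndbox")
        # Coordinates may be written as "10" or "10.0"; normalize to int.
        coords = [int(float(bb.find(tag).text)) for tag in ("xmin", "ymin", "xmax", "ymax")]
        boxes.append((obj.find("name").text, *coords))
    return boxes
```

To read an annotation file from disk, pass its contents, e.g. `read_voc_boxes(Path(p).read_text())` with `pathlib.Path`.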

Conventional methods cannot process event data directly. We therefore use three encoding approaches, namely Frequency, SAE (Surface of Active Events), and LIF (Leaky Integrate-and-Fire), to convert the continuous DVS event stream into a sequence of frame images suitable for conventional deep learning algorithms. Python code for each of the three encoding approaches is provided in the corresponding folders.
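The Frequency and LIF encodings can be sketched as follows. Both functions are illustrations under stated assumptions rather than the provided scripts: events are given as arrays `x`, `y`, `t` (microsecond timestamps, sorted, non-empty), frames are cut every `interval_us` microseconds, frequency frames are scaled so the busiest pixel reads 255, and the LIF parameters `tau_us`, `weight`, and `threshold` are placeholder values, not values taken from the paper.

```python
import math

import numpy as np


def _windows(t, interval_us):
    """Return the window index of every event and the number of windows."""
    t = np.asarray(t, dtype=np.int64)
    idx = (t - t[0]) // interval_us
    return idx, int(idx[-1]) + 1


def frequency_frames(x, y, t, width, height, interval_us=20_000):
    """Frequency encoding: count events per pixel in each window."""
    x = np.asarray(x, dtype=np.int64)
    y = np.asarray(y, dtype=np.int64)
    idx, n = _windows(t, interval_us)
    frames = []
    for w in range(n):
        mask = idx == w
        counts = np.zeros((height, width), dtype=np.int64)
        np.add.at(counts, (y[mask], x[mask]), 1)   # correct for repeated pixels
        peak = counts.max()
        # Assumed normalization: the busiest pixel in the window maps to 255.
        frames.append((counts * 255 // max(peak, 1)).astype(np.uint8))
    return frames


def lif_frames(x, y, t, width, height, interval_us=20_000,
               tau_us=10_000.0, weight=1.0, threshold=2.0):
    """LIF encoding: one leaky integrate-and-fire neuron per pixel.

    Each event adds `weight` to its pixel's potential, which decays with time
    constant `tau_us` between events; reaching `threshold` emits a spike and
    resets the potential to 0. Each frame holds spike counts for one window.
    """
    t = np.asarray(t, dtype=np.int64)
    idx, n = _windows(t, interval_us)
    potential = np.zeros((height, width))
    last_t = np.full((height, width), float(t[0]))  # time of last update per pixel
    frames = [np.zeros((height, width), dtype=np.int64) for _ in range(n)]
    for xi, yi, ti, w in zip(x, y, t, idx):
        # Exponential leak since this pixel was last updated.
        potential[yi, xi] *= math.exp(-(ti - last_t[yi, xi]) / tau_us)
        last_t[yi, xi] = ti
        potential[yi, xi] += weight
        if potential[yi, xi] >= threshold:
            frames[w][yi, xi] += 1
            potential[yi, xi] = 0.0
    return frames
```

With two events 1 ms apart at the same pixel, `lif_frames(..., threshold=1.5)` fires once, because the leaked potential 1·e^(-0.1) + 1 ≈ 1.90 exceeds the threshold; with the same two events 100 ms apart, the first contribution decays almost completely and no spike is emitted. The leak is what lets LIF frames emphasize pixels with dense recent activity, whereas frequency frames weight all events in a window equally.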

We provide a toy dataset file, toy.aedat, for verifying the encoding code. Download this file and put it in your destination folder.

We provide basic instructions for using the three encoding scripts.

To run snn.py, enter the following command:

python snn.py /path/to/aedat/file

To use the SAE or Frequency encoding instead, replace snn with sae or frequency.
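To produce all three encodings of the same recording in one go, a small driver can call the scripts one after another. This is a sketch that assumes `snn.py`, `sae.py`, and `frequency.py` sit in the current working directory and take the AEDAT path as their only argument, as in the command above; `build_commands` and `run_all` are hypothetical helper names.

```python
import subprocess
import sys

# Script names taken from the commands above; assumed to be in the working directory.
SCRIPTS = ("snn", "sae", "frequency")


def build_commands(aedat_path, scripts=SCRIPTS):
    """Return one argv list per encoding script, e.g. [python, 'sae.py', path]."""
    return [[sys.executable, f"{name}.py", str(aedat_path)] for name in scripts]


def run_all(aedat_path):
    """Run every encoding script in turn; stop on the first failure."""
    for cmd in build_commands(aedat_path):
        print("running:", " ".join(cmd))
        subprocess.run(cmd, check=True)
```

Calling `run_all("toy.aedat")` from the folder containing the scripts runs the three commands in turn and raises `subprocess.CalledProcessError` if any of them fails. Using `sys.executable` ensures the scripts run under the same Python interpreter as the driver.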

Questions about these datasets should be directed to: guang@in.tum.de

For further details, please refer to our paper. If you use these datasets, please cite our article:

@article{miao2019neuromorphic,
  title={Neuromorphic Benchmark Datasets for Pedestrian Detection, Action Recognition, and Fall Detection},
  author={Miao, Shu and Chen, Guang and Ning, Xiangyu and Zi, Yang and Ren, Kejia and Bing, Zhenshan and Knoll, Alois C},
  journal={Frontiers in neurorobotics},
  volume={13},
  pages={38},
  year={2019},
  publisher={Frontiers}
}