Important

This repository is archived. We're working on turning this process into a standalone deliberation platform for any topic. You can see this fork here.

Developed by the Meaning Alignment Institute, funded by OpenAI. Live deployment available at dft.meaningalignment.org.

- Overview

- Setting Up a New Environment

- Setting Up a New Deliberation

- Contributing

- Additional Documentation

Democratic Fine-Tuning (DFT) is an initiative aimed at achieving a fine-tuned model that bridges political, cultural, and ideological boundaries. More info can be found in our paper.

This repository hosts code for an application with a new democratic process that takes ~15 minutes to complete.

Participants go through the following steps:

- Dialogue: Participants interact with a chatbot, discussing values they believe ChatGPT should have when responding to contentious questions.

- Vote on Values: Participants vote on values proposed by their peers.

- Vote on Wisdom Transition: Participants vote on whether the transition from one value to another represents an increase in wisdom.

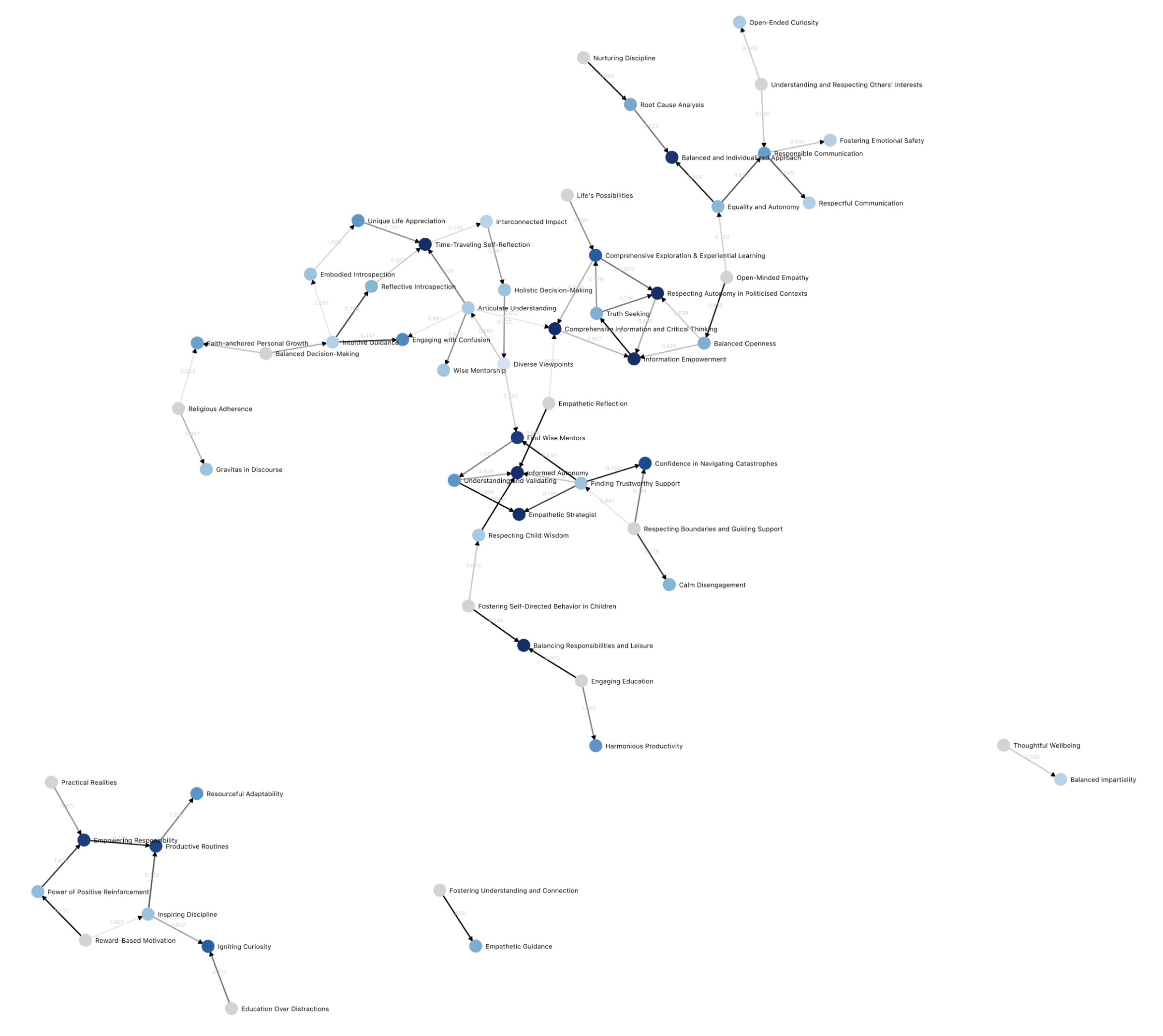

This process generates a moral graph, which can be used to find convergence in which values ChatGPT should have in contentious scenarios, while remaining legible and democratically legitimated.

The intricacies of the graph can be explored here.
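To make the idea of a moral graph more concrete, here is a minimal sketch of how such a graph could be represented and summarized. All type names, field names, and the example data are hypothetical and chosen for illustration; they are not the repository's database schema or export format. The scoring rule (net votes on incoming transitions) is a simple stand-in, and the repository's own graph generation code may aggregate votes differently.

```typescript
// Illustrative types only: names are assumptions, not the repository's schema.
interface ValueNode {
  id: number;
  title: string;
}

interface WisdomEdge {
  fromId: number; // the value a transition starts from
  toId: number; // the value proposed as wiser
  wiserVotes: number; // votes saying the transition is an increase in wisdom
  notWiserVotes: number; // votes saying it is not
}

interface MoralGraph {
  values: ValueNode[];
  edges: WisdomEdge[];
}

// Score each value by the net votes on transitions that end at it,
// then sort from highest to lowest score.
function rankValues(graph: MoralGraph): { title: string; score: number }[] {
  const scores = new Map<number, number>();
  for (const v of graph.values) {
    scores.set(v.id, 0);
  }
  for (const e of graph.edges) {
    const net = e.wiserVotes - e.notWiserVotes;
    scores.set(e.toId, (scores.get(e.toId) ?? 0) + net);
  }
  return graph.values
    .map((v) => ({ title: v.title, score: scores.get(v.id) ?? 0 }))
    .sort((a, b) => b.score - a.score);
}

// Hypothetical example data.
const example: MoralGraph = {
  values: [
    { id: 1, title: "Give direct advice" },
    { id: 2, title: "Help the user reflect" },
    { id: 3, title: "Point to trusted support" },
  ],
  edges: [
    { fromId: 1, toId: 2, wiserVotes: 8, notWiserVotes: 2 },
    { fromId: 2, toId: 3, wiserVotes: 3, notWiserVotes: 1 },
    { fromId: 1, toId: 3, wiserVotes: 1, notWiserVotes: 2 },
  ],
};

console.log(rankValues(example));
```

Values that many participants see as a wiser destination rise to the top of the ranking, which is one simple way to look for convergence.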

Our aspiration with DFT is to craft a model universally regarded as "wise." Such a model would resonate with Republicans and Democrats alike, and with people of any ideological or cultural background. The ultimate goal is to reduce the risk of ideological conflict amplified by models that are individually fine-tuned to group or individual preferences. Two novel techniques are employed:

- Value Alignment: Rather than aligning with preferences, the model is aligned with values. These values are sourced from an expansive and diverse demographic. For more on how we define values, please read our paper.

- Moral Graph Creation: This graph helps find convergent values.

Subsequent endeavors will focus on fine-tuning the LLM based on these values.

- Development Language: TypeScript

- Framework: Remix

- Database: PostgreSQL

- Event Queue: Inngest

- Deployment Platform: Vercel

The moral graph, survey data and demographics data we collected can be found here.

- Database Schema: The data collated during the process adheres to our database schema.

- Moral Graph Generation: The code responsible for generating the moral graph is available here.

- Data Export: A moral graph can be exported in JSON format via this endpoint. The export schema is detailed here.

To initialize a new environment, follow these steps:

- Environment Variables: Begin by duplicating the `.env.example` file to create a `.env` file.

Our application relies on several external services for various functionalities. You'll need to set up accounts and obtain API keys for the following services:

- Mailgun (For Sending Login Emails):

  - Create an account on Mailgun.

  - Obtain your API key from the Mailgun dashboard.

  - Add your Mailgun API key and related settings to the `.env` file.

- OpenAI (For OpenAI APIs):

  - Add your OpenAI API key to the `.env` file.

- Inngest (Event Queue for Background Jobs):

  - Create an account on Inngest.

  - Follow the Inngest setup process to initialize your event queue.

  - No immediate `.env` configuration is required. Once your Vercel project is configured, you can connect your Inngest account to Vercel by clicking "Connect to Vercel" in the Inngest dashboard.

We recommend using Vercel PostgreSQL for the database:

- Vercel PostgreSQL:

  - If you haven't already, sign up or log in to Vercel.

  - Navigate to the Integrations or Database section and create a new PostgreSQL database.

  - Once your database is created, Vercel will provide you with the necessary environment variables.

  - Copy these POSTGRES environment variables into your `.env` file.

  - Set up the database schema by running `npx prisma generate && npx prisma db push`.

- Create a New Vercel Project:

  - In your Vercel dashboard, create a new project by importing this repository.

  - During the import process, Vercel will automatically detect the project settings. Make sure to review them for accuracy.

  - Add the variables from your `.env` file to the project's environment variables in Vercel.

- Link Inngest to Your Vercel Project:

  - Log in to your Inngest account.

  - Navigate to the section where you can connect to Vercel and select the option to "Connect to Vercel".

  - Follow the prompts to authorize and link Inngest with your Vercel project. This action will automatically populate your Vercel project with the necessary environment variables for Inngest.

After completing these steps, your environment should be set up and ready.
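As a quick sanity check on the setup above, a small helper can report which expected variables are still missing. This is a minimal sketch: the variable names in `REQUIRED_VARS` are hypothetical placeholders, and the authoritative list is whatever `.env.example` contains.

```typescript
// Returns the names from `required` that are missing or blank in `env`.
function missingEnvVars(
  required: readonly string[],
  env: Record<string, string | undefined>,
): string[] {
  return required.filter((name) => {
    const value = env[name];
    return value === undefined || value.trim() === "";
  });
}

// Hypothetical variable names; replace them with the keys from .env.example.
const REQUIRED_VARS: readonly string[] = [
  "OPENAI_API_KEY",
  "MAILGUN_API_KEY",
  "POSTGRES_PRISMA_URL",
];

// Example with an in-memory object standing in for the real environment.
const missing = missingEnvVars(REQUIRED_VARS, {
  OPENAI_API_KEY: "sk-example",
  MAILGUN_API_KEY: "",
});
console.log(missing);
```

In a Node.js script you could pass `process.env` as the second argument and exit early if the returned list is not empty.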

Deliberations are conducted by creating a case. Here are the steps to set up a new deliberation:

- Make yourself an admin user: This can be done by setting `isAdmin = true` for your user in the database.

- Creating a Case: Deliberations are initiated by creating a case. This can be done by navigating to `/admin/cases` on your site.

- Generating Seed Values and Upgrade Stories: After a case is created, some seed values and upgrade stories are automatically generated in the background, assuming Inngest is set up correctly.
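The first step above (setting `isAdmin = true`) can be done with any database tool. As one option, here is a minimal sketch of doing it from a script. It assumes the user table is exposed as a `user` model with a unique `email` field, which is a guess and not confirmed by this README; only the `isAdmin` field comes from the text above. The `UserStore` interface is a hypothetical stand-in that mirrors the shape of a Prisma-style `update` call, so the function can be exercised without a database.

```typescript
// Hypothetical minimal interface mirroring a Prisma-style `user.update` call.
// The `user` model name and `email` field are assumptions.
interface UserStore {
  user: {
    update(args: {
      where: { email: string };
      data: { isAdmin: boolean };
    }): Promise<{ email: string; isAdmin: boolean }>;
  };
}

// Marks the user with the given email as an admin.
async function makeAdmin(
  db: UserStore,
  email: string,
): Promise<{ email: string; isAdmin: boolean }> {
  return db.user.update({ where: { email }, data: { isAdmin: true } });
}

// In-memory fake, useful for trying the function without a real database.
function createFakeStore(log: string[]): UserStore {
  return {
    user: {
      update: async (args) => {
        log.push(JSON.stringify(args));
        return { email: args.where.email, isAdmin: args.data.isAdmin };
      },
    },
  };
}
```

With a real client, you would pass an object of the same shape in place of the fake store; check the actual model and field names against the database schema first.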

Once a case has been added, participants can begin the deliberation process by navigating to /start on the site. As values and upgrades are populated, the resulting moral graph can be viewed at /data/edges, providing insights into the convergence of values through the deliberation process.

- Install Dependencies: `npm i`

- Generate Prisma Client: `npx prisma generate`

- Environment Configuration: Duplicate `.env.example` to create `.env` and populate it with relevant values.

- Run Development Server: `npm run dev`

To update the database schema, execute `npx prisma db push`. The schema can be found here.

Thank you for your engagement with Democratic Fine-Tuning. We value your contributions and insights.