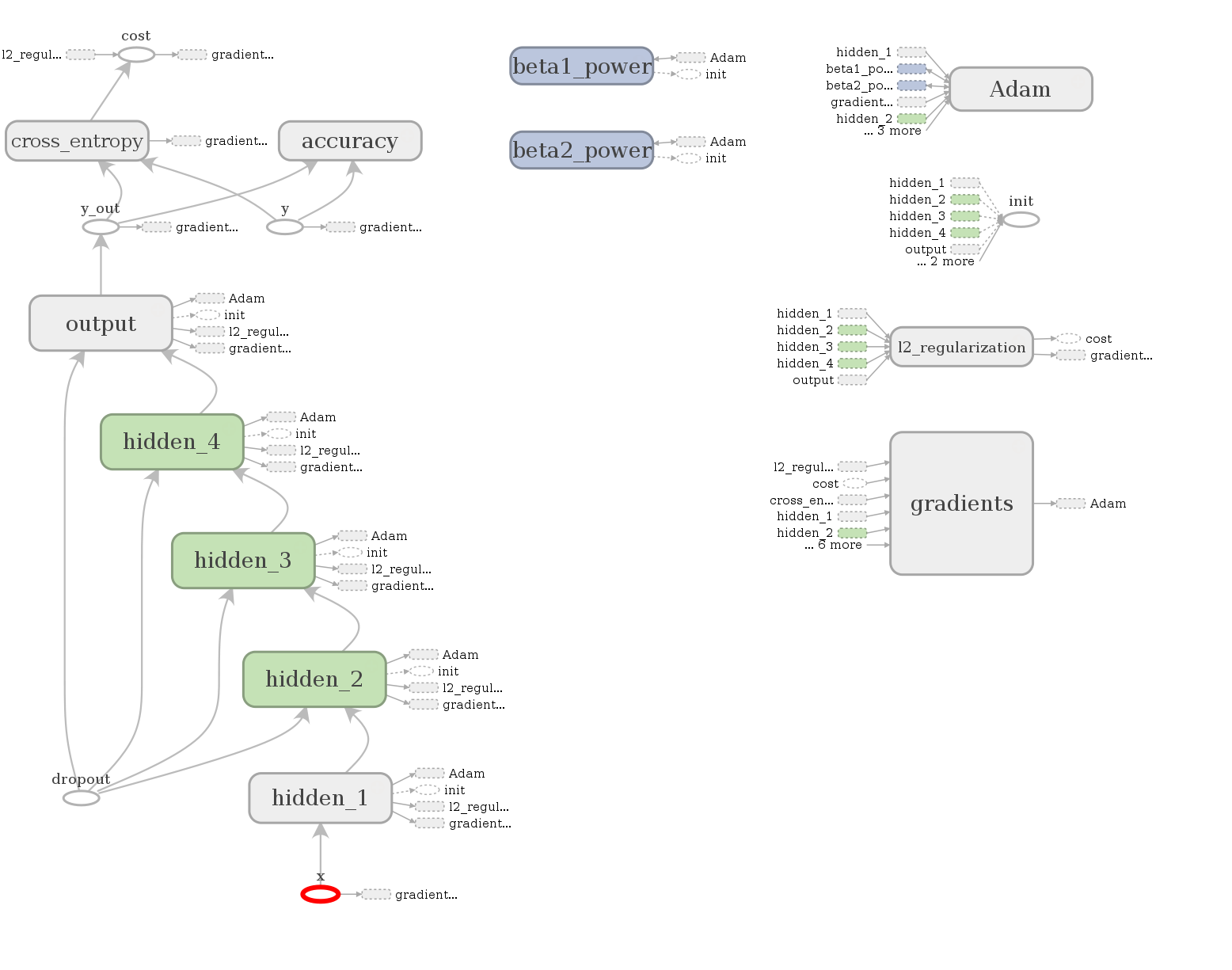

tl;dr --> 🍨 "vanilla" artificial neural net classifier, implemented in TensorFlow

Modularized for convenient model-building, tuning, and training.

(1) Install dependencies

$ pip install -r requirements.txt

(2) Configure model by editing config.py, including hyperparameters (learning rate, dropout, L2-regularization...) and layer architecture, as well as paths/to/{train,test}data and target label header

Config may be a simple Config - i.e. a single setting for each hyperparameter (dictionary) and a single architecture (list of Layer namedtuples) - OR a GridSearchConfig - i.e. a hyperparameter dictionary with lists of candidate settings as values, and an architecture dictionary with a list of activation functions (tf.nn.relu, tf.nn.sigmoid, tf.nn.tanh...) and a list of hidden layer architectures (list of lists of nodes per hidden layer).
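For illustration, the two config styles might look roughly like the sketch below. This is not the actual contents of config.py: the Layer field names, the hyperparameter keys, the variable names, and all values are assumptions made up for the example. Activations are written as strings to keep the sketch free of a TensorFlow import.

```python
from collections import namedtuple

# Hypothetical Layer namedtuple; the real field names in config.py may differ.
Layer = namedtuple("Layer", ["name", "nodes", "activation"])

# Simple Config style: one setting per hyperparameter, one fixed architecture.
HYPERPARAMS = {
    "learning_rate": 0.01,
    "dropout": 0.8,          # illustrative value only
    "lambda_l2_reg": 1e-5,   # illustrative value only
}
ARCHITECTURE = [
    Layer("input", None, None),        # size left unset here (assumption)
    Layer("hidden_1", 50, "relu"),
    Layer("hidden_2", 20, "relu"),
    Layer("output", None, "softmax"),
]

# GridSearchConfig style: each hyperparameter maps to a list of candidates,
# and the architecture dictionary lists activations and hidden layer layouts.
HYPERPARAM_GRID = {
    "learning_rate": [0.05, 0.01],
    "dropout": [0.5, 0.8, 1.0],
    "lambda_l2_reg": [1e-5, 1e-3],
}
ARCHITECTURE_GRID = {
    "activation": ["relu", "sigmoid", "tanh"],
    "hidden_nodes": [[10], [50, 20], [100, 50, 20]],  # nodes per hidden layer
}
```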

(3) Train and save best model, while logging performance

Input data must be CSV, with quantitative and/or qualitative features and qualitative labels.

$ python train.py

(4) Test model

$ python test.py

By default, categorical features are encoded as one-hots (in N dimensions, unless N-1 specified to avoid collinearity), and quantitative features are Gaussian-normalized based on mean & stdev of train set.
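The preprocessing described above can be sketched with NumPy as follows. The function names and toy data are hypothetical and not taken from this repo; the key point is that the mean and standard deviation come from the train set only and are then reused for the test set.

```python
import numpy as np

def one_hot(values, categories, drop_last=False):
    """Encode categorical values as one-hot rows (N columns, or N-1 if drop_last)."""
    index = {c: i for i, c in enumerate(categories)}
    encoded = np.zeros((len(values), len(categories)))
    encoded[np.arange(len(values)), [index[v] for v in values]] = 1.0
    return encoded[:, :-1] if drop_last else encoded

def fit_gaussian(train_col):
    """Compute mean and standard deviation from the train set only."""
    return float(np.mean(train_col)), float(np.std(train_col))

def normalize(col, mean, std):
    """Shift and scale a column using previously fitted statistics."""
    return (np.asarray(col, dtype=float) - mean) / std

# Toy categorical feature
colors_train = ["red", "green", "blue", "green"]
categories = sorted(set(colors_train))
full = one_hot(colors_train, categories)                     # N dimensions
reduced = one_hot(colors_train, categories, drop_last=True)  # N-1 dimensions

# Toy quantitative feature: fit on train, apply to both train and test
heights_train = np.array([150.0, 160.0, 170.0, 180.0])
heights_test = np.array([165.0, 190.0])
mean, std = fit_gaussian(heights_train)
train_z = normalize(heights_train, mean, std)
test_z = normalize(heights_test, mean, std)
```

Dropping one column (N-1) removes the redundancy among one-hot columns, since the dropped category is implied when all remaining columns are zero. The sketch does not guard against a constant column, where the standard deviation would be zero.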

Training runs in two modes. If config is flagged as:

- Config --> train.py uses early-stopping based on single train/validate split to save optimal model.
- GridSearchConfig --> train.py uses nested cross-validation to tune hyperparameters and hidden layer architecture (by selecting optimal settings via k-fold cross-validation), followed by early-stopping with held-out validation set to train and save optimal model.
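As a rough sketch of the ideas behind the two modes (not the code in train.py), the snippet below expands a grid of settings, picks the best one by mean score over k folds, and finds an early-stopping epoch from a list of validation costs. All function names, the patience rule, and the toy scoring function are assumptions; only the inner selection loop is shown, not the full nested procedure.

```python
import itertools

def expand_grid(grid):
    """Yield one dict per combination of settings in a dict of lists."""
    keys = sorted(grid)
    for combo in itertools.product(*(grid[k] for k in keys)):
        yield dict(zip(keys, combo))

def k_fold_indices(n_rows, k):
    """Yield (train_idx, validate_idx) pairs for k contiguous folds."""
    fold_sizes = [n_rows // k + (1 if i < n_rows % k else 0) for i in range(k)]
    start = 0
    for size in fold_sizes:
        validate = list(range(start, start + size))
        train = list(range(0, start)) + list(range(start + size, n_rows))
        yield train, validate
        start += size

def select_best(grid, score_fn, n_rows, k):
    """Pick the setting with the best mean validation score across k folds."""
    def mean_score(setting):
        scores = [score_fn(setting, tr, va) for tr, va in k_fold_indices(n_rows, k)]
        return sum(scores) / len(scores)
    return max(expand_grid(grid), key=mean_score)

def early_stopping_epoch(validation_costs, patience):
    """Return the epoch with the lowest validation cost, stopping once
    `patience` epochs pass without improvement."""
    best_epoch, best_cost = 0, float("inf")
    for epoch, cost in enumerate(validation_costs):
        if cost < best_cost:
            best_epoch, best_cost = epoch, cost
        elif epoch - best_epoch >= patience:
            break
    return best_epoch

# Toy stand-in for "train on train_idx, score on validate_idx"
def toy_score(setting, train_idx, validate_idx):
    return -abs(setting["learning_rate"] - 0.01) - abs(setting["dropout"] - 0.8)

grid = {"learning_rate": [0.1, 0.01], "dropout": [0.5, 0.8]}
best = select_best(grid, toy_score, n_rows=10, k=5)
stop_at = early_stopping_epoch([1.0, 0.8, 0.9, 0.7, 0.75, 0.8, 0.85, 0.6], patience=3)
```

In a real run, the scoring function would train a model on the training fold and return its validation accuracy, and the model weights from the early-stopping epoch would be the ones saved.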

During training, weights and biases are updated to minimize cross-entropy cost using the Adam algorithm (AdamOptimizer). Performance is logged to STDOUT and to an output file; the trained model is saved as a TensorFlow graph_def binary.
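To make the update rule concrete, here is a minimal NumPy sketch of cross-entropy cost and the standard Adam update, applied to a single softmax layer on toy data. It is an illustration of the technique only: it does not reproduce the TensorFlow implementation or this repo's model, it omits biases, dropout, and L2-regularization, and all names and values are assumptions.

```python
import numpy as np

def softmax(logits):
    shifted = logits - logits.max(axis=1, keepdims=True)  # numerical stability
    exp = np.exp(shifted)
    return exp / exp.sum(axis=1, keepdims=True)

def cross_entropy(probs, one_hot_labels):
    """Mean cross-entropy between predicted probabilities and one-hot labels."""
    return float(-np.mean(np.sum(one_hot_labels * np.log(probs + 1e-12), axis=1)))

def adam_step(param, grad, m, v, t, lr=0.001, beta1=0.9, beta2=0.999, eps=1e-8):
    """One Adam update; returns the new parameter and moment estimates."""
    m = beta1 * m + (1 - beta1) * grad        # running mean of gradients
    v = beta2 * v + (1 - beta2) * grad ** 2   # running mean of squared gradients
    m_hat = m / (1 - beta1 ** t)              # bias correction
    v_hat = v / (1 - beta2 ** t)
    return param - lr * m_hat / (np.sqrt(v_hat) + eps), m, v

# Toy problem: 2 features, 2 classes, weights only
x = np.array([[1.0, 0.0], [0.0, 1.0], [1.0, 0.2], [0.1, 1.0]])
y = np.array([[1.0, 0.0], [0.0, 1.0], [1.0, 0.0], [0.0, 1.0]])
w = np.zeros((2, 2))
m, v = np.zeros_like(w), np.zeros_like(w)

initial_cost = cross_entropy(softmax(x @ w), y)
for t in range(1, 201):
    probs = softmax(x @ w)
    grad = x.T @ (probs - y) / len(x)  # gradient of mean cross-entropy w.r.t. w
    w, m, v = adam_step(w, grad, m, v, t, lr=0.05)
final_cost = cross_entropy(softmax(x @ w), y)
```

With zero-initialized weights the model predicts equal probabilities for both classes, so the starting cost is ln 2; the Adam steps then drive the cost down from there.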

test.py restores the trained model, saves predictions to file, and outputs accuracy if test data is labeled.