This guidance focuses on payment processing subsystems responsible for posting payments to receiving accounts. In this phase of payment processing, inbound transactions are evaluated, have accounting rules applied to them, and are then posted into receiving accounts. The accounting rules dictate the work that needs to happen to successfully process the transaction. Inbound transactions are assumed to have been authorized by an upstream process.

In traditional architectures, the upstream system writes transactions to a log. The log is periodically picked up by a batch-based processing system, processed in bulk, then eventually posted to customer accounts. Transactions (and customers!) must wait for the next batch iteration before being processed, which can take multiple days.

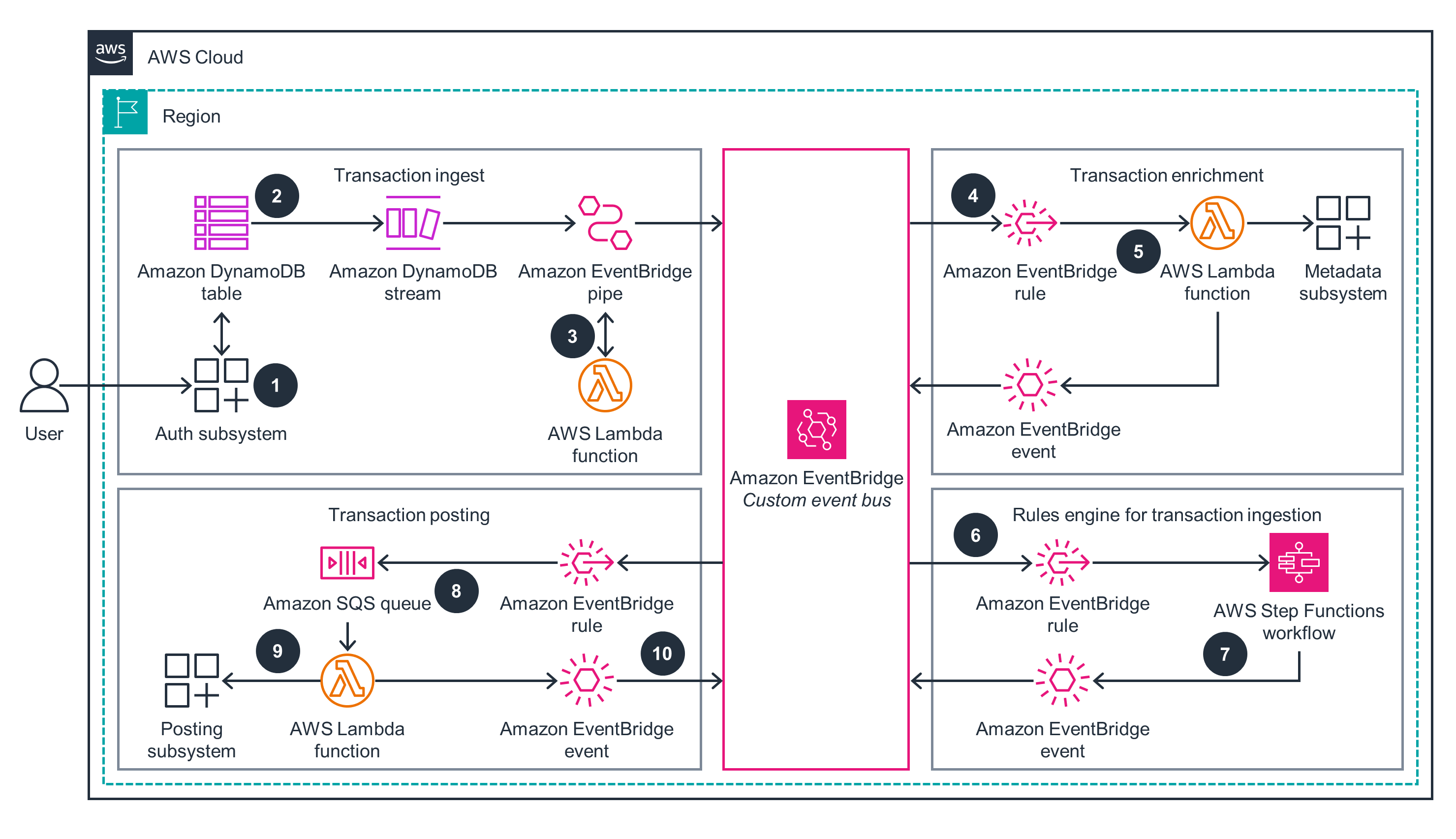

Instead, this sample architecture uses event-driven patterns to post transactions in near real-time rather than in batches. Transaction lifecycle events are published to an Amazon EventBridge event bus. Rules forward the events to processors, which act on the events, then emit their own events, moving the transaction through its lifecycle. Processors can be easily added or removed as organization needs change. Customers get a more fine-grained account balance and can dispute transactions much sooner. Processing load is offloaded from batch systems during critical hours.

- Overview

- Prerequisites

- Deployment Instructions

- Next Steps

- FAQ, known issues, additional considerations, and limitations

- Revisions

- Notices

- Authors

This guidance focuses on the part of payment processing systems that posts payments to receiving accounts. In this phase, inbound transactions are evaluated, have accounting rules applied to them, and are then posted into customer accounts.

Inbound transactions are assumed to have been authorized by an upstream process.

In traditional architectures, the upstream system writes transactions to a log. The log is periodically picked up by a batch-based processing system, then eventually posted to customer accounts. Transactions (and customers!) must wait for the next batch iteration before being processed, which can take multiple days.

This sample architecture uses event-driven patterns to post transactions in near real-time rather than in batches. In this system, customers get a more fine-grained account balance, they can dispute transactions much sooner, and processing load is offloaded from batch systems during critical hours.

- A user initiates a payment, which the authorization application approves and persists to an Amazon DynamoDB table.

- An Amazon EventBridge Pipe reads approved authorization records from the DynamoDB table stream and publishes events to an EventBridge custom bus.

- Duplicate-checking logic can be added to the EventBridge Pipe through a deduplication AWS Lambda function.

- An EventBridge rule invokes the enrichment Lambda function for matching events to add context such as account type and bank details.

- The enrichment Lambda function queries metadata and publishes a new event, carrying the extra information, back to the EventBridge custom bus.

- An EventBridge rule watching for enriched events invokes an AWS Step Functions workflow to apply business rules to the event as part of a rules engine. In this case the Step Functions state machine is representative of any rules engine, such as Drools or similar.

- When an event passes all business rules, the Step Functions workflow publishes a new event back to the EventBridge bus.

- An EventBridge rule enqueues a message in an Amazon Simple Queue Service (Amazon SQS) queue as a buffer to avoid overrunning the downstream posting subsystem.

- The posting Lambda function reads from the SQS queue and invokes the downstream posting subsystem to post the transaction.

- The posting Lambda function publishes a final event back to the EventBridge custom event bus.
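The enrichment step in the flow above can be sketched as follows. This is a minimal illustration under stated assumptions, not the code shipped under `/src`: the field names (`accountId`, `accountType`, `bankDetails`), the source `payments.enrichment`, the detail type `TransactionEnriched`, the bus name `payments-bus`, and the in-memory lookup table are all hypothetical. The `put_events` argument stands in for the `put_events` method of a boto3 EventBridge client, so the sketch can run without AWS access.

```python
import json

# Hypothetical metadata lookup; a real function would query a data store.
ACCOUNT_METADATA = {
    "acct-001": {"accountType": "checking", "bankDetails": {"sortCode": "00-00-00"}},
}


def build_enriched_entry(event, bus_name="payments-bus"):
    """Build one PutEvents entry carrying the original detail plus metadata."""
    detail = dict(event["detail"])  # copy so the inbound event is not mutated
    metadata = ACCOUNT_METADATA.get(detail.get("accountId"), {})
    detail.update(metadata)
    return {
        "Source": "payments.enrichment",      # hypothetical source name
        "DetailType": "TransactionEnriched",  # hypothetical detail type
        "Detail": json.dumps(detail),         # Detail is sent as a JSON string
        "EventBusName": bus_name,             # hypothetical bus name
    }


def handler(event, context=None, put_events=None):
    """Lambda-style entry point; put_events is injected for local runs."""
    entry = build_enriched_entry(event)
    if put_events is not None:
        put_events(Entries=[entry])
    return entry
```

In a deployed function, `put_events` would typically be bound once at module load to a real client rather than passed per invocation. Publishing a new event instead of mutating the original keeps each processor within the boundary of its source event.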

| AWS service | Role | Description |
|---|---|---|
| Amazon EventBridge | Core | An EventBridge custom event bus is paired with EventBridge rules to route transaction processing events to subscribed components. The emitted events describe the lifecycle of transactions as they move through the system. Additionally, an EventBridge pipe is used to consume the inbound transaction stream and publish events to the event bus. |
| AWS Lambda | Core | Runs custom code in response to events. This guidance includes a sample duplication detection function, a transaction enrichment function, a sample posting system integration function, and others. |
| Amazon Simple Queue Service (Amazon SQS) | Core | Used as a durable buffer for when you need to capture events from rules and also need to govern scale-out. |
| Amazon Simple Storage Service (Amazon S3) | Core | Stores audit and transaction logs captured by EventBridge archives. |
| Amazon DynamoDB | Core | Acts as a possible ingest method for inbound transactions. Transactions are written to a DynamoDB table, which pushes records onto a DynamoDB stream. The stream records are published to the EventBridge event bus to start the processing lifecycle. |
| AWS Step Functions | Supporting | Implements a simple business rules system, initiating alternate processing paths for transactions with unique characteristics. This could be implemented by alternate business rules systems like Drools. |
| Amazon CloudWatch | Supporting | Monitors system health through metrics and logs. |
| AWS X-Ray | Supporting | Traces transaction processing across components. |
| AWS Identity and Access Management (IAM) | Supporting | Defines roles and access policies between components in the system. |
| AWS Key Management Service (AWS KMS) | Supporting | Manages encryption of transaction data. |

You are responsible for the cost of the AWS services used while running this guidance. As of April 2024, the cost for running this guidance with the default settings in the US East (N. Virginia) Region is approximately $1 per month, assuming 3,000 transactions.

This guidance uses serverless services, which use a pay-for-value billing model. Costs are incurred only with usage of the deployed resources. Refer to the sample cost table for a service-by-service cost breakdown.

We recommend creating a budget through AWS Cost Explorer to help manage costs. Prices are subject to change. For full details, refer to the pricing webpage for each AWS service used in this guidance.

The following table provides a sample cost breakdown for deploying this guidance with the default parameters in the US East (N. Virginia) Region for one month assuming "non-production" level traffic volume.

| AWS service | Dimensions | Cost [USD] |
|---|---|---|
| Amazon DynamoDB | 1 GB Data Storage, 1 KB avg item size, 3,000 DynamoDB Streams per month | $ 0.25 |
| AWS Lambda | 3,000 requests per month with 200 ms avg duration, 128 MB memory, 512 MB ephemeral storage | $ 0.00 |
| Amazon SQS | 0.03 million requests per month | $ 0.00 |
| AWS Step Functions | 3,000 workflow requests per month with 3 state transitions per workflow | $ 0.13 |
| Amazon SNS | 3,000 requests users and 3,000 Email notifications per month | $ 0.04 |
| Amazon EventBridge | 3,000 custom events per month with 3,000 events replay and 3,000 requests in the pipes | $ 0.00 |
| Total estimated cost per month: | | $1 |

A sample cost breakdown for production-scale load (around 20 million requests per month) can be found in this AWS Pricing Calculator estimate, and is estimated at around $1,811.15 USD per month.
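The arithmetic behind the figures above can be checked with a few lines of Python. This is only a sanity check on the numbers quoted in this section, not a pricing tool: the itemized rows sum to $0.42, which the table reports as approximately $1, and the per-million figure is derived purely from the quoted production estimate.

```python
# Monthly line items from the sample cost table above (USD).
line_items = {
    "Amazon DynamoDB": 0.25,
    "AWS Lambda": 0.00,
    "Amazon SQS": 0.00,
    "AWS Step Functions": 0.13,
    "Amazon SNS": 0.04,
    "Amazon EventBridge": 0.00,
}

subtotal = round(sum(line_items.values()), 2)

# Production-scale estimate quoted above: $1,811.15 for about 20 million requests.
production_monthly = 1811.15
production_requests = 20_000_000
cost_per_million = production_monthly / (production_requests / 1_000_000)

print(f"Itemized subtotal: ${subtotal:.2f}")
print(f"Production cost per million requests: about ${cost_per_million:.0f}")
```

At the quoted production volume this works out to roughly $91 per million requests. Actual costs depend on current prices and on the traffic profile.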

These deployment instructions are optimized to work on Amazon Linux 2 or macOS.

This solution builds Lambda functions using Python. The build process currently supports Linux and macOS. It was tested with Python 3.11. You will need Python and pip to build and deploy.

This solution uses Terraform as an Infrastructure-as-Code provider. You will need Terraform installed to deploy. These instructions were tested with Terraform version 1.7.1.

You can install Terraform on Linux (such as a CodeBuild build agent) with commands like this:

    curl -o terraform_1.7.1_linux_amd64.zip https://releases.hashicorp.com/terraform/1.7.1/terraform_1.7.1_linux_amd64.zip
    unzip -o terraform_1.7.1_linux_amd64.zip && mv terraform /usr/bin

The solution is deployed as one Terraform config. The root HCL config file (main.tf) dictates the flow, and all the submodules are bundled under this repo in individual folders (for example, /sqs for the SQS module). Lambda code can be found under the /src folder.

The solution uses a local Terraform backend for deployment simplicity. You may want to switch to a shared backend like S3 for collaboration, or when using a CI/CD pipeline.

These instructions require AWS credentials configured according to the Terraform AWS Provider documentation.

The credentials must have IAM permission to create and update resources in the target account.

Services include:

- Amazon EventBridge Custom Event Bus, Pipes, Rules

- AWS Lambda functions

- Amazon Simple Queue Service (Amazon SQS) queues

- Amazon DynamoDB tables and streams

- AWS Step Functions workflows

- Amazon Simple Notification Service (SNS) topics

Experimental workloads should fit within default service quotas for the involved services.

See the Implementation Guide for detailed deployment, validation, and cleanup instructions.

Consider subscribing your own business rules engine to the EventBridge event bus and processing inbound transactions using your own logic.

- Visit ServerlessLand for more information on building with AWS Serverless services

- Visit What is an Event-Driven Architecture? in the AWS documentation for more information about Event-Driven systems

- Given a successful authorization has completed, when the request and matching response has been logged in the authorization log/file/table then a single post auth event should be created with the details of the authorization req/response in the payload.

- Given a post-auth event is received from the event source, when the event has the correct payload, then the event must be checked for duplicates.

- Given a post-auth event is run through duplicate checks when the search indicates that the event is a duplicate then the event must be parked in a separate bucket and the event must not be processed further

- Given a post-auth event is run through duplicate checks when the search indicates that the event is not a duplicate then a new event should be sent to the event system and the new event must have the authorization req/response as its payload

- Given the event engine receives a de-dup event from the event source, when the payload has the necessary details, then the payload must be standardized into ISO 20022 format and a new event written in the standardized format.

- Given the event engine receives a standard event from the event source, when the payload conforms to the new format then the payload must be enriched with Account, Branch /Sort-Code, Account Holder Information, Bank Product Type, Transaction Description, Merchant Information, Currency Conversion and a new enriched event gets created with this payload.

- Given the event engine receives the enriched event from the event source, when the enriched event payload has all the details required then the event must be passed on to the Account Interface and the Account Interface must produce the related events conforming to the posting event and each event must have a connecting indicator to indicate they are from the same posting sequence

- Given the posting engine receives the posting events from the event source, when the event sequence is complete, then the sequence events must be reassembled and the final payload should match the issuing bank's posting input, which it processes as business as usual (BAU).

- Given events sources create new events within the new flow, when the events are processed by their respective subscribers / destinations then the events must be idempotent.

- Given events sources create new events within the new flow, when the events are processed by their respective subscribers / destinations then the events must be reconciled and any mismatches should be auto patched.

- Given the various event sources which can generate events within the new flow, when the events are ready to be created then suitable configuration should be available for the customer and the configurations must ensure events can be batched, real-time and near-realtime.

- Given the events being generated by the various event sources when the events are being processed then the platform should be able to process a million posting events in 15 minutes.

- Given the events being generated by the various event sources when the events are being processed in batches, then the biggest batch should not take more than 15 minutes to process.

- Given the events being generated by the various event sources, when the events are being processed, then the platform should be able to fail gracefully and have built-in mechanisms for retries and replays.

- Given the events being generated by the various event sources, when the events are being processed, then the platform should be able to process a single event in less than a millisecond.

- Given the events being generated by the various event sources when the events are being processed then the platform should be able to withstand network or infrastructure outages and maintain data integrity

- Given the events being generated by the various event sources, when the events are being processed, then the platform should be able to process the events in a secure manner, with PCI-DSS fields completely tokenized.

- Given the events being generated by the various event sources when the events are being processed then the platform should be able to process the events and have sufficient logging to log the important messages from the platform and have sufficient monitoring & alerting in place to invoke the SRE teams in the event of a failure.
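The duplicate-check criteria in the list above can be sketched as a simple partition of inbound events into those forwarded for processing and those parked. This is a minimal sketch under stated assumptions: the `authorizationId` key is hypothetical, and the in-memory set stands in for whatever persistent store a deployment would use to remember previously seen events.

```python
def check_duplicates(events, seen_ids=None):
    """Split events into those to forward and those to park as duplicates."""
    seen = set() if seen_ids is None else set(seen_ids)
    forward, parked = [], []
    for event in events:
        key = event["authorizationId"]  # hypothetical unique key
        if key in seen:
            parked.append(event)   # duplicate: park it, do not process further
        else:
            seen.add(key)
            forward.append(event)  # first sighting: emit a new event downstream
    return forward, parked


forward, parked = check_duplicates(
    [
        {"authorizationId": "a-1", "amount": 10},
        {"authorizationId": "a-2", "amount": 25},
        {"authorizationId": "a-1", "amount": 10},
    ]
)
print(len(forward), len(parked))
```

Parked events stay available for inspection while never reaching the enrichment or posting steps.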

This system is designed to not be sensitive to event ordering.

- This is downstream from transaction settlement, so we can assume the customer is in good financial standing for the transaction.

- Event consumers must not assume previous events have happened or have been processed in a certain way. Instead they must stay within the boundary of their source event.

- Event consumers must process idempotently within their time window. To put this another way, they must accept replay of transactions and not re-perform work against those transactions within the time window.

- Events are scoped to not be dependent on each other.
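The requirement that consumers process idempotently within their time window can be sketched as a small guard around the consumer's work. The class name, the `transaction_id` key, and the in-memory dictionary are hypothetical. A deployed consumer would more likely keep this record in a shared store with expiry, which is an assumption rather than something this guidance prescribes.

```python
import time


class IdempotencyWindow:
    """Remember processed transaction IDs for a fixed number of seconds."""

    def __init__(self, window_seconds, clock=time.monotonic):
        self.window_seconds = window_seconds
        self.clock = clock  # injectable so the window can be tested
        self._processed_at = {}

    def run_once(self, transaction_id, work):
        """Call work() unless this ID was already processed inside the window."""
        now = self.clock()
        # Drop entries that have aged out of the window.
        self._processed_at = {
            tid: ts
            for tid, ts in self._processed_at.items()
            if now - ts < self.window_seconds
        }
        if transaction_id in self._processed_at:
            return False  # replayed event: skip the work
        work()
        # Record only after success so a failed attempt can be retried.
        self._processed_at[transaction_id] = now
        return True
```

Because the ID is recorded only after `work()` returns, a replay that follows a failure still performs the work, while a replay that follows a success is ignored until the window expires.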

Accepting event ordering as a requirement would likely force us off of EventBridge, or at least require complex ordering checks with event rejection/replay. Queues would need to be FIFO which would have lower throughput limits.

This is a question of tradeoff for responsibility. In this iteration we use an event router. This gives us flexible dynamic routing via expressive rules, sufficient throughput and latency, and simple onboarding/offboarding of consumers. We can also enforce decoupling of event consumers more easily. Consumers do not need to manage checkpointing.

The major drawback is that the responsibility for event durability and buffering is offloaded to consumers. Events are pushed through the router at a single point in time (replay/retry notwithstanding) and consumers must be online and ready to receive at that time. Consumers are therefore recommended to implement buffering (e.g. a queue) as needed in their own domain. We see this in the sample architecture in the “External Consumer Pattern”.
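A queue-buffered consumer of this kind can be sketched as a Lambda-style handler that reads an SQS batch and reports only the failed messages, so the rest of the batch is not reprocessed. This sketch assumes the event source mapping has `ReportBatchItemFailures` enabled and that the rule delivers the full EventBridge event as the message body. The `post_transaction` function is a hypothetical stand-in for the downstream posting call.

```python
import json


def post_transaction(detail):
    """Hypothetical stand-in for the call into the downstream posting subsystem."""
    if "transactionId" not in detail:
        raise ValueError("missing transactionId")


def handler(event, context=None):
    """Process an SQS batch and report only the failed messages for retry."""
    failures = []
    for record in event.get("Records", []):
        try:
            envelope = json.loads(record["body"])  # EventBridge event envelope
            post_transaction(envelope["detail"])
        except Exception:
            # Returning the message ID asks SQS to redeliver just this message.
            failures.append({"itemIdentifier": record["messageId"]})
    return {"batchItemFailures": failures}
```

Since failed messages are redelivered, the posting call should itself be idempotent, in line with the consumer requirements described earlier.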

We do have a fallback to an archive in place and can replay that if needed, though at a non-trivial cost (assumed, not calculated).

We also avoid ordering requirements, which would push us towards a stream-based solution that provides ordering per shard.

This may be a legitimate consideration. However, doing so would fail our goal to decouple consumers. In this case we have a single consumer per event type (plus logging), but a stated tenet is that we are able to support multiple consumers as needed, and can onboard and offboard them easily without disruption to others. If they were coupled via an orchestrator like a Step Functions workflow, a deployment to the workflow would be required to add or remove consumers. This could be non-trivial to coordinate with the inbound event flow. Also, though the ceiling is soft and high, we could face an overall cap on transaction throughput from Step Functions quotas.

We do not make any assumptions about producer architecture. The boundary of this system starts at a PutEvents call to EventBridge.
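A producer at this boundary can be sketched as a function that wraps transactions in PutEvents entries and sends them in chunks, since a single PutEvents request accepts at most 10 entries. The source `payments.authorization`, the detail type `TransactionAuthorized`, and the bus name `payments-bus` are hypothetical. The `put_events` argument stands in for the `put_events` method of a boto3 EventBridge client so the sketch runs without AWS access.

```python
import json

MAX_ENTRIES_PER_CALL = 10  # PutEvents accepts at most 10 entries per request


def to_entry(transaction, bus_name="payments-bus"):
    """Wrap one transaction in a PutEvents entry."""
    return {
        "Source": "payments.authorization",     # hypothetical source name
        "DetailType": "TransactionAuthorized",  # hypothetical detail type
        "Detail": json.dumps(transaction),      # Detail is sent as a JSON string
        "EventBusName": bus_name,               # hypothetical bus name
    }


def publish(transactions, put_events):
    """Send transactions in chunks and return the number of failed entries."""
    entries = [to_entry(t) for t in transactions]
    failed = 0
    for start in range(0, len(entries), MAX_ENTRIES_PER_CALL):
        chunk = entries[start:start + MAX_ENTRIES_PER_CALL]
        response = put_events(Entries=chunk)
        # PutEvents can partially fail; the response reports how many entries did.
        failed += response.get("FailedEntryCount", 0)
    return failed
```

A non-zero return value means some entries were not accepted and should be retried by the producer, because the event bus never saw them.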

We do provide suggested producer patterns across stream, API, and batch systems. Producers may choose to use alternate architectures as their needs dictate.

We have one in place at this time, but are only using it for duplicate checks for inbound events. We are not updating a “state” throughout the flow. Ideally this will not be required. We do not have a known use case for it at this time.

At this time, we assume that Amazon CloudWatch and AWS CloudTrail are sufficient for logging and auditing purposes, unless requirements change.

We assume all inbound records are pre-tokenized. This will be described in our event schemas. For an example of how to achieve this, customers can refer to this blog post.

All included services are in scope for PCI-DSS. Customer-managed keys should be used for encryption wherever possible. Data is encrypted both at rest and in transit. DynamoDB streams are encrypted with a table-level encryption key. For Amazon SQS, customers can protect data in transit using Secure Sockets Layer (SSL) or client-side encryption. By default, Amazon SQS stores messages and files using disk encryption. Customers can protect data at rest by configuring Amazon SQS to encrypt messages before saving them to the encrypted file system; Amazon SQS recommends server-side encryption (SSE) for optimized data encryption. Additionally, Amazon SNS lets customers store sensitive data in encrypted topics by protecting the contents using keys managed in AWS Key Management Service (AWS KMS). When SSE is configured for Amazon SNS, messages are encrypted as soon as Amazon SNS receives them. The messages are stored in encrypted form and decrypted only when they are sent.

As key fields from the inbound authorization are fully tokenized, they pass through the workflow using the controls mentioned above.

Additional security guidance can be found in the Security pillar of the AWS Well-Architected Framework and the Architecture Center.

The architecture as designed is single region, multi-AZ due to inherited multi-AZ characteristics of the chosen services.

We see financial services customers using mostly Java and Python. For simplicity/readability we will use Python.

- 1.0.0: Initial Version

- 1.0.1: Reviewed and updated version 5/15/24

- 1.0.2: Pre publication revision 5/29/24

- 1.0.3: Publication version 6/11/24

Customers are responsible for making their own independent assessment of the information in this guidance. This guidance: (a) is for informational purposes only, (b) represents AWS current product offerings and practices, which are subject to change without notice, and (c) does not create any commitments or assurances from AWS and its affiliates, suppliers or licensors. AWS products or services are provided “as is” without warranties, representations, or conditions of any kind, whether express or implied. AWS responsibilities and liabilities to its customers are controlled by AWS agreements, and this guidance is not part of, nor does it modify, any agreement between AWS and its customers.

- Ramesh Mathikumar, Principal DevOps Consultant

- Rajdeep Banerjee, Senior Solutions Architect

- Brian Krygsman, Senior Solutions Architect

- Daniel Zilberman, Senior Solutions Architect Technical Solutions