This repository is the implementation of the paper Feature Space Singularity for Out-of-Distribution Detection.

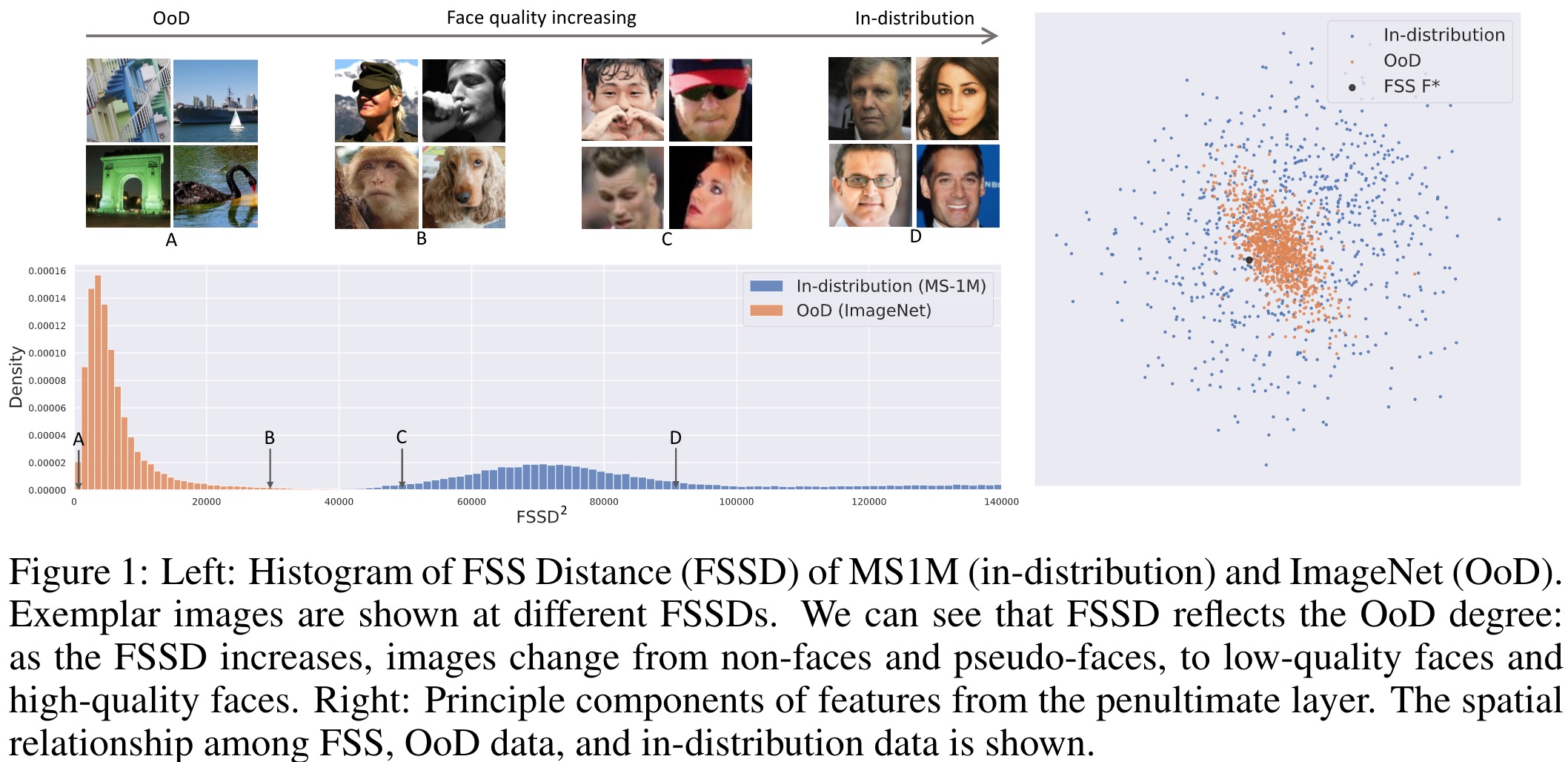

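The central idea of the FSSD algorithm is to use the following FSSD score to tell OoD samples from in-distribution ones:

$$\mathrm{FSSD}(x) := \lVert F_\theta(x) - F^* \rVert,$$

where $F_\theta$ is the feature extractor and $F^*$ is the Feature Space Singularity (FSS).

We approximate the FSS simply by calculating the mean of uniform noise features:

$$F^* \approx \frac{1}{M} \sum_{i=1}^{M} F_\theta\left(x_{\text{noise}}^{(i)}\right).$$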
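The score described above can be illustrated with a small, dependency-free sketch. It is not the repository's implementation: the feature extractor here is a hypothetical fixed random linear map followed by a ReLU (in practice it would be a trained network), and the function names, the noise sample count, and the use of the Euclidean norm are assumptions made for illustration.

```python
import math
import random


def make_toy_extractor(in_dim, feat_dim, seed=0):
    """Return a hypothetical feature extractor: fixed random linear map + ReLU."""
    rng = random.Random(seed)
    weights = [[rng.gauss(0.0, 1.0) for _ in range(in_dim)] for _ in range(feat_dim)]

    def extract(x):
        return [max(0.0, sum(w * xi for w, xi in zip(row, x))) for row in weights]

    return extract


def estimate_fss(extract, in_dim, num_noise=256, seed=1):
    """Approximate the FSS as the mean feature of uniform-noise inputs in [0, 1)."""
    rng = random.Random(seed)
    feats = [extract([rng.random() for _ in range(in_dim)]) for _ in range(num_noise)]
    return [sum(col) / num_noise for col in zip(*feats)]


def fssd_score(extract, fss, x):
    """Distance (assumed Euclidean) between the feature of x and the FSS estimate."""
    return math.sqrt(sum((f - c) ** 2 for f, c in zip(extract(x), fss)))


if __name__ == "__main__":
    extract = make_toy_extractor(in_dim=8, feat_dim=4)
    fss = estimate_fss(extract, in_dim=8)
    print(fssd_score(extract, fss, [0.5] * 8))
```

The FSS estimate is computed once per model and reused for every test input, so scoring a sample costs one forward pass plus one distance computation.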

In this repository, we implement the following algorithms.

Note that OE shares the same implementation with Baseline. To test these methods, see the Evaluation section. We also welcome contributions to this repository for more SOTA OoD detection methods.

Python: 3.6+

To install required packages:

pip install -r requirements.txt

You can download the FashionMNIST and CIFAR10 datasets directly from the links offered in torchvision. For the ImageNet-dogs-non-dogs dataset, please download the dataset from this link and find the dataset description in this issue. (We will add more datasets and corresponding pre-trained models in the future.)

The default dataset root is /data/datasets/.

To train the model(s) in the paper, see lib/training/. We provide the training code for the FashionMNIST and CIFAR10 datasets.

To evaluate OoD detection performance of FSSD, run:

python test_fss.py --ind fmnist --ood mnist --model_arch lenet --inp_process

You can also evaluate OoD performance of other methods:

Baseline:

python test_baseline.py --ind fmnist --ood mnist --model_arch lenet

ODIN:

python test_odin.py --ind fmnist --ood mnist --model_arch lenet

Mahalanobis distance:

python test_maha.py --ind fmnist --ood mnist --model_arch lenet

Deep Ensemble:

python test_de.py --ind fmnist --ood mnist --model_arch lenet

Outlier Exposure:

python test_baseline.py --ind fmnist --ood mnist --model_arch lenet --test_oe
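The commands above differ only in the script name and at most one extra flag, so they can be driven from a single loop. The following is a minimal sketch of such a driver, not part of the repository: the `METHODS` table, `build_command`, and `run_all` are hypothetical helpers, while the script names and flags are the ones listed above. It defaults to a dry run that only prints the commands.

```python
import subprocess
import sys

# Script name followed by any method-specific flags, as listed in this README.
METHODS = {
    "FSSD": ["test_fss.py", "--inp_process"],
    "Baseline": ["test_baseline.py"],
    "ODIN": ["test_odin.py"],
    "Mahalanobis": ["test_maha.py"],
    "Deep Ensemble": ["test_de.py"],
    "Outlier Exposure": ["test_baseline.py", "--test_oe"],
}


def build_command(method, ind="fmnist", ood="mnist", model_arch="lenet"):
    """Assemble the argument list for one evaluation script."""
    script, *extra = METHODS[method]
    return [sys.executable, script,
            "--ind", ind, "--ood", ood, "--model_arch", model_arch, *extra]


def run_all(dry_run=True):
    """Print every command; execute them only when dry_run is False."""
    for method in METHODS:
        cmd = build_command(method)
        print(method + ":", " ".join(cmd))
        if not dry_run:
            subprocess.run(cmd, check=True)


if __name__ == "__main__":
    run_all(dry_run=True)
```

Calling `run_all(dry_run=False)` from the repository root would run each script in turn and stop at the first failure, since `check=True` raises on a non-zero exit code.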

You can download pretrained models here:

- Google Cloud links for models trained on FashionMNIST, CIFAR10, and the ImageNet-dogs-non-dogs dataset, using the parameters specified in our supplements. More pre-trained models will be added in the future.

Please download the pre-trained models and put them in the pre_trained directory.

Our model achieves the following performance on these benchmarks:

FashionMNIST vs. MNIST:

| | Base | ODIN | Maha | DE | MCD | OE | FSSD |
|---|---|---|---|---|---|---|---|
| AUROC | 77.3 | 87.9 | 99.6 | 83.9 | 76.5 | 99.6 | 99.6 |
| AUPRC | 79.2 | 88.0 | 99.7 | 83.3 | 79.3 | 99.6 | 99.7 |
| FPR80 | 42.5 | 20.0 | 0.0 | 27.5 | 42.3 | 0.0 | 0.0 |

CIFAR10 vs. SVHN:

| | Base | ODIN | Maha | DE | MCD | OE | FSSD |
|---|---|---|---|---|---|---|---|
| AUROC | 89.9 | 96.6 | 99.1 | 96.0 | 96.7 | 90.4 | 99.5 |
| AUPRC | 85.4 | 96.7 | 98.1 | 93.9 | 93.9 | 89.8 | 99.5 |
| FPR80 | 10.1 | 4.8 | 0.3 | 1.2 | 2.4 | 12.5 | 0.4 |
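For readers unfamiliar with the metrics in these tables, here is a small sketch of how AUROC and FPR80 can be computed from detector scores. It assumes that FPR80 means the false positive rate at the threshold where the true positive rate reaches 80%, and that higher scores indicate the positive class; which class counts as positive, and the function names, are assumptions rather than the repository's evaluation code. The tables appear to report percentages, whereas this sketch returns fractions.

```python
import math


def auroc(pos_scores, neg_scores):
    """Probability that a random positive outscores a random negative (ties count 0.5)."""
    wins = 0.0
    for p in pos_scores:
        for n in neg_scores:
            if p > n:
                wins += 1.0
            elif p == n:
                wins += 0.5
    return wins / (len(pos_scores) * len(neg_scores))


def fpr_at_tpr(pos_scores, neg_scores, tpr=0.8):
    """False positive rate at the highest threshold that still reaches the given TPR."""
    ranked = sorted(pos_scores, reverse=True)
    k = max(1, math.ceil(tpr * len(ranked)))  # positives that must be accepted
    threshold = ranked[k - 1]
    return sum(s >= threshold for s in neg_scores) / len(neg_scores)


if __name__ == "__main__":
    pos = [0.9, 0.8, 0.7, 0.6, 0.1]   # made-up scores for the positive class
    neg = [0.65, 0.3, 0.2, 0.05]      # made-up scores for the negative class
    print(auroc(pos, neg), fpr_at_tpr(pos, neg))
```

The pairwise AUROC loop is quadratic in the number of samples, which is fine for illustration; a rank-based computation scales better for full test sets.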

If you find this repository helpful, please cite:

@inproceedings{huang2021feature,
  title={Feature Space Singularity for Out-of-Distribution Detection},
  author={Huang, Haiwen and Li, Zhihan and Wang, Lulu and Chen, Sishuo and Dong, Bin and Zhou, Xinyu},
  booktitle={Proceedings of the Workshop on Artificial Intelligence Safety 2021 (SafeAI 2021)},
  year={2021}
}

Use of and contributions to this repository are covered by the MIT license.