This project is a code template for a production-ready knowledge graph API.

Please note that this project does not train an information extraction model. It uses the REBEL library and processes its output.

The basic code template for this project is derived from the code template in another of my repos.

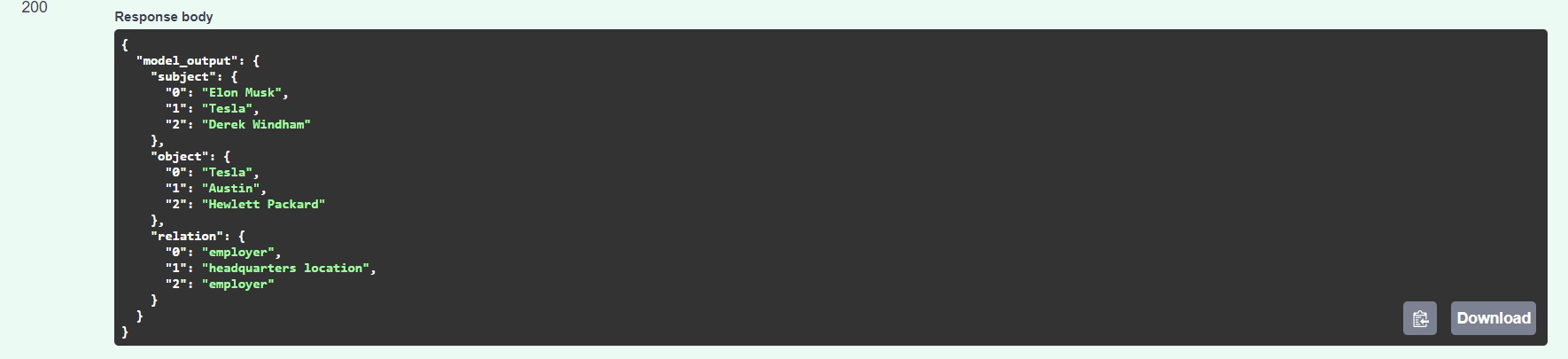

Create a knowledge graph API that accepts text data and returns information extraction triplets in the form of subject, object, relation.

Steps in the creation of a knowledge graph:

- Coreference Resolution

- Named Entity Recognition

- Entity Linking

- Relationship Extraction

- Knowledge Graph Creation

1. Coreference Resolution

Convert pronouns to the nouns they refer to. Refer to my Coreference Resolution project.

2. Named Entity Recognition (NER)

We can skip this step and simply extract all relationships. However, sometimes you'll need only certain entity types and their relationships. We can extract default entities such as NAME and PERSON using one of the many available libraries, or we can build our own NER model. I've created a project to build a custom NER model for PERSON, ORG, PLACE, and ROLE. For the knowledge graph, however, I extract all relationships.

Refer to my Custom NER project.

3. Entity Linking / Entity Disambiguation

The same entity can appear under different words or nouns, for example U.S., United States of America, and America. All of these should be treated as one entity. We can achieve this by resolving each mention to its root ID in a knowledge base. Here, we use Wikipedia as the knowledge base, which is why entity linking is often also called wikification.
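To make the idea concrete, here is a minimal sketch of alias-based linking. It is not the code used in this project: the `ALIAS_TO_ENTITY` table, the `link_entity` function, and the use of a page title as the root ID are all assumptions for illustration. A real linker would query a knowledge base instead of a hand-written table.

```python
from typing import Dict

# Hypothetical alias table: normalized surface form -> canonical entity (root ID).
# A real system would look these up in a knowledge base such as Wikipedia.
ALIAS_TO_ENTITY: Dict[str, str] = {
    "us": "United States",
    "usa": "United States",
    "united states": "United States",
    "united states of america": "United States",
    "america": "United States",
}


def normalize(mention: str) -> str:
    """Drop periods, trim whitespace, and lowercase so 'U.S' and 'US' match."""
    return mention.replace(".", "").strip().lower()


def link_entity(mention: str) -> str:
    """Return the canonical entity for a mention, or the mention itself if unknown."""
    return ALIAS_TO_ENTITY.get(normalize(mention), mention.strip())


if __name__ == "__main__":
    for mention in ["U.S", "United States of America", "America", "Paris"]:
        print(mention, "->", link_entity(mention))
```

Unknown mentions fall through unchanged, so triplets are never dropped just because the table has no entry for them.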

4. Relationship Extraction

This step extracts the relationships expressed in the text.

I explored a couple of libraries: Stanford Open IE and REBEL.

I selected REBEL for the final implementation because Stanford Open IE's output was somewhat redundant and it was slow.
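As a rough illustration of what processing REBEL's output can involve, the sketch below parses one decoded generation into subject/object/relation triplets. It assumes the linearized format described on the REBEL model card (`<triplet> subject <subj> object <obj> relation`, with further `<subj> ... <obj> ...` pairs sharing the same subject). The function name `parse_rebel_output` and the sample string are illustrative and are not taken from this repo.

```python
from typing import Dict, List


def parse_rebel_output(decoded: str) -> List[Dict[str, str]]:
    """Parse one decoded REBEL generation into subject/object/relation triplets."""
    # Remove sequence-level special tokens so only the REBEL markers remain.
    for special in ("<s>", "</s>", "<pad>"):
        decoded = decoded.replace(special, " ")

    triplets: List[Dict[str, str]] = []
    parts: Dict[str, List[str]] = {"subject": [], "object": [], "relation": []}
    state = None  # which part the next plain token belongs to

    def flush() -> None:
        # Emit a triplet only when all three parts have been collected.
        if all(parts.values()):
            triplets.append({name: " ".join(words) for name, words in parts.items()})

    for token in decoded.split():
        if token == "<triplet>":
            # A new subject starts: emit the pending triplet and reset everything.
            flush()
            parts = {"subject": [], "object": [], "relation": []}
            state = "subject"
        elif token == "<subj>":
            # A new object for the same subject: emit the pending triplet first.
            flush()
            parts["object"], parts["relation"] = [], []
            state = "object"
        elif token == "<obj>":
            parts["relation"] = []
            state = "relation"
        elif state is not None:
            parts[state].append(token)

    flush()  # emit the last triplet
    return triplets


if __name__ == "__main__":
    sample = (
        "<s><triplet> Punta Cana <subj> La Altagracia Province <obj> located in "
        "the administrative territorial entity <subj> Dominican Republic <obj> country</s>"
    )
    for triplet in parse_rebel_output(sample):
        print(triplet)
```

The parser works on the decoded string only, so it can be unit tested without loading the model.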

5. Knowledge Graph Creation

I explored py2neo (a Python wrapper for neo4j) and networkx in a notebook and selected networkx simply for its ease of use for visualization. The more powerful neo4j is the better choice if you want to use a graph database and perform further analysis, but we are not doing that here.
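The structure such a graph holds can be sketched without any dependency: entities become nodes and each triplet becomes a directed, labeled edge. The example below uses a plain adjacency dictionary rather than networkx so that it runs anywhere. The `build_graph` name and the sample triplets are assumptions for illustration, not this project's code.

```python
from collections import defaultdict
from typing import Dict, List, Tuple

Edge = Tuple[str, str]  # (relation, object)


def build_graph(triplets: List[Dict[str, str]]) -> Dict[str, List[Edge]]:
    """Turn subject/object/relation triplets into a directed adjacency mapping."""
    graph: Dict[str, List[Edge]] = defaultdict(list)
    for triplet in triplets:
        edge = (triplet["relation"], triplet["object"])
        # Skip exact duplicates so repeated sentences do not add repeated edges.
        if edge not in graph[triplet["subject"]]:
            graph[triplet["subject"]].append(edge)
        # Touch the object so it exists as a node even with no outgoing edges.
        graph[triplet["object"]]
    return dict(graph)


if __name__ == "__main__":
    sample = [
        {"subject": "Punta Cana", "object": "Dominican Republic", "relation": "country"},
        {"subject": "Punta Cana", "object": "Dominican Republic", "relation": "country"},
        {"subject": "Dominican Republic", "object": "Santo Domingo", "relation": "capital"},
    ]
    for node, edges in build_graph(sample).items():
        print(node, edges)
```

With networkx, the same triplets could be loaded into a `networkx.MultiDiGraph` by calling `add_edge(subject, object, relation=relation)` for each one, which also gives access to its drawing helpers.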

There are 2 ways to deploy this application:

- API using FastAPI

- Streamlit application

Unit test cases are included.

Deployment is done locally using Docker.

Like any production code, this code is organized in the following way:

- Keep all requirement gathering documents in the docs folder.

- Write and keep inference code in src/inference.

- Write logging and configuration code in src/utility.

- Write unit test cases in the tests folder (pytest, pytest-cov).

- Write performance test cases in the tests folder (locust).

- Build a Docker image (Docker).

- Use and configure a code formatter (black).

- Use and configure a code linter (pylint).

- Use CircleCI for CI/CD.

Clone this repo locally and add/update/delete as per your requirement.

├── README.md <- top-level README for developers using this project.

├── pyproject.toml <- black code formatting configurations.

├── .dockerignore <- Files to be ignored in docker image creation.

├── .gitignore <- Files to be ignored in git check in.

├── .circleci/config.yml <- CircleCI configurations.

├── .pylintrc <- Pylint code linting configurations.

├── Dockerfile <- A file to create docker image.

├── environment.yml <- stores all the dependencies of this project

├── main.py <- A main file to run API server.

├── main_streamlit.py <- A main file to run the Streamlit application.

├── src <- Source code files to be used by project.

│ ├── inference <- model output generator code

│ ├── training <- model training code

│ ├── utility <- contains utility and constant modules.

├── logs <- log file path

├── config <- config file path

├── docs <- documents from requirements, team collaboration etc.

├── tests <- unit and performance test case files.

│ ├── cov_html <- Unit test cases coverage report

Development Environment used to create this project:

Operating System: Windows 10 Home

Anaconda: 4.8.5 (Anaconda installation)

Go to the location of the environment.yml file and run:

conda env create -f environment.yml

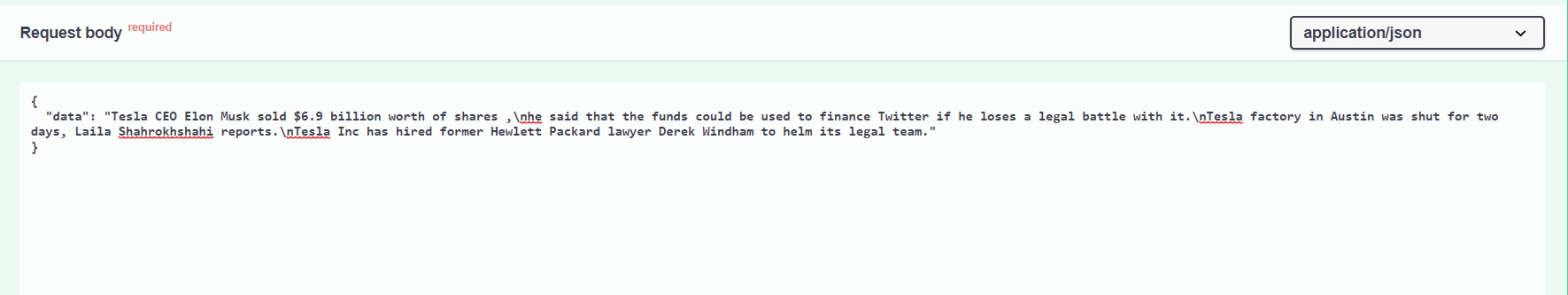

Here we have set up ML inference on a FastAPI server with dummy model output.

- Go inside the 'knowledge_graph_api' folder on the command line.

Run:

conda activate knowledge_graph_api

python main.py

Open 'http://localhost:5000/docs' in a browser.

- Or, to start the Streamlit application, run:

conda activate knowledge_graph_api

streamlit run main_streamlit.py


- Go inside 'tests' folder on command line.

- Run:

pytest -vv

pytest --cov-report html:tests/cov_html --cov=src tests/

- Open 2 terminals and start the main application in the first terminal:

python main.py

- In the second terminal, go inside the 'tests' folder on the command line.

- Run:

locust -f locust_test.py

- Go inside 'knowledge_graph_api' folder on command line.

- Run:

black src

- Go inside 'knowledge_graph_api' folder on command line.

- Run:

pylint src

- Go inside 'knowledge_graph_api' folder on command line.

- Run:

docker build -t myimage .

docker run -d --name mycontainer -p 5000:5000 myimage

- Add the project on the CircleCI website, then monitor the build on every commit.

1. The spaCy model is not included; you can train your own custom NER model using my Custom NER project.

Please create a pull request for any change.

NOTE: This software depends on other packages that are licensed under different open source licenses.