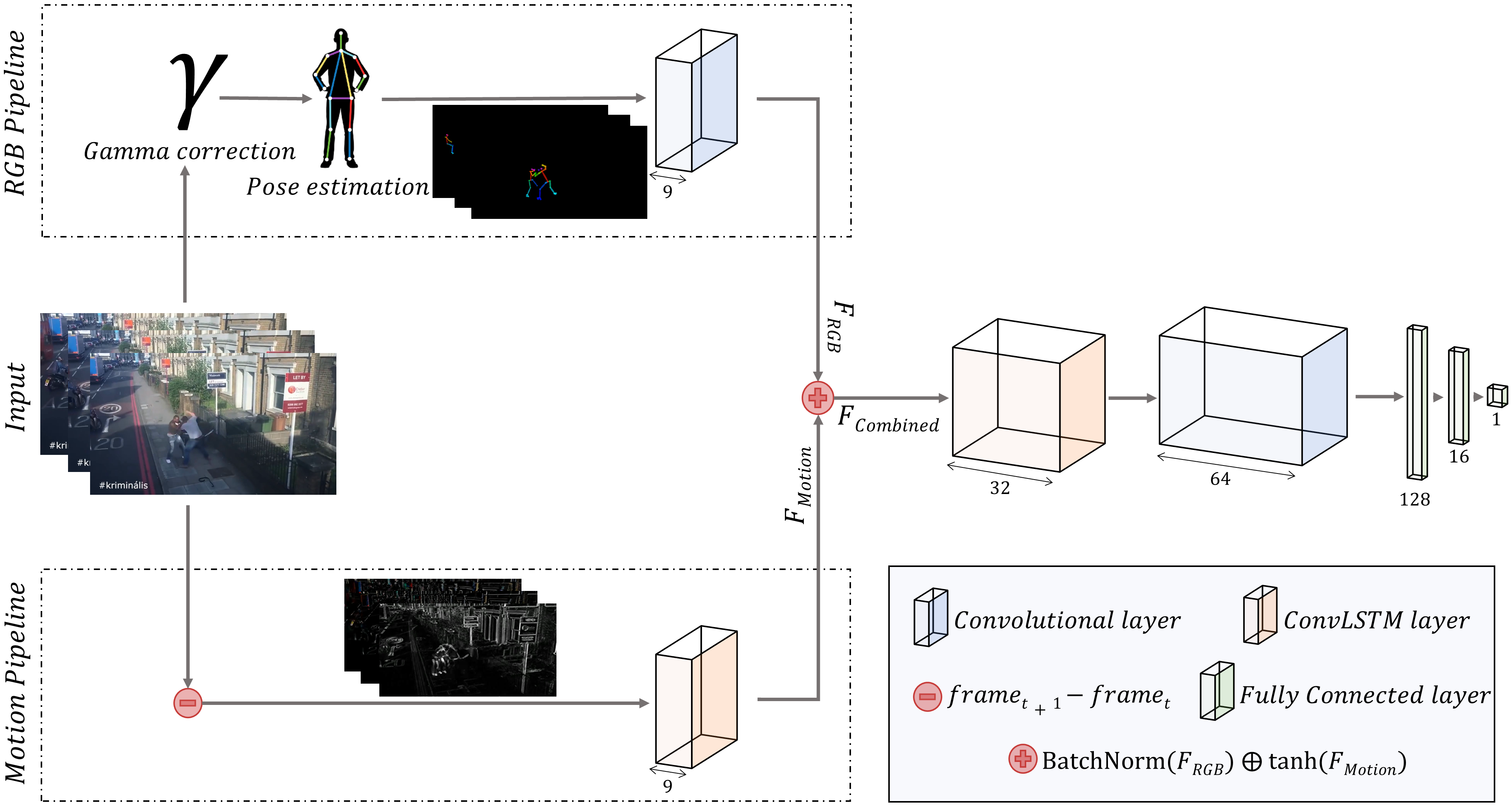

This is the GitHub repository associated with the paper Human Skeletons and Motion Estimation for Efficient Violence Detection in Surveillance Videos (currently under review by a Q1 journal), which achieves 90.25% accuracy on the RWF-2000 validation set with only 60k trainable parameters.

The purpose of this repository is to provide the code needed to replicate the results of the paper. Moreover, the training logs of all the experiments are also included.


To demonstrate that our proposal can detect violence in real-life scenarios, we ran our best model on two videos that do not appear in any of the datasets. In the second video, a girl is brutally beaten outside a bar in Murcia, a city in southern Spain (news report).

The environment.yml file contains all the dependencies used during the development of this project. You can create the corresponding conda environment by running the following command:

    conda env create -f environment.yml

If you would like to train or validate a model, you will need one of the datasets used in the paper. Their links are listed below:

- RWF-2000: as stated in the paper, this is the main dataset that we have used to train and validate our model. The authors of the original dataset require a signed Agreement Sheet before granting access.

Apart from the data, one of the essential components of our proposal is the skeleton detector. As mentioned in the paper, we use OpenPose. Please follow their installation guide to compile the library so that you can extract skeletons. A complementary installation guide is available at this link.

Once you have compiled the OpenPose library, you can use our preprocess.py script to extract the skeletons from the videos of your choice. The script also applies a gamma correction before extracting the skeletons, which can be disabled.
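For readers unfamiliar with gamma correction, the following is a minimal Python sketch of the general technique on 8-bit pixel intensities. It is not the code in preprocess.py: the function names, the exponent convention (1/gamma, so values above 1 brighten the image), and the example gamma value are all assumptions made for illustration.

```python
def build_gamma_lut(gamma: float) -> bytes:
    """Build a 256-entry lookup table for 8-bit gamma correction.

    Assumed convention: out = 255 * (in / 255) ** (1 / gamma),
    so gamma > 1 brightens dark regions and gamma < 1 darkens them.
    """
    if gamma <= 0:
        raise ValueError("gamma must be positive")
    inv_gamma = 1.0 / gamma
    return bytes(
        min(255, round(255.0 * (value / 255.0) ** inv_gamma))
        for value in range(256)
    )


def apply_gamma(pixels: bytes, gamma: float) -> bytes:
    """Apply gamma correction to raw 8-bit pixel data via the lookup table."""
    return pixels.translate(build_gamma_lut(gamma))


# Hypothetical usage: brighten a few sample intensities with gamma = 2.0.
sample = bytes([0, 16, 64, 128, 255])
corrected = apply_gamma(sample, gamma=2.0)
print(list(corrected))
```

Precomputing the table means the power function is evaluated only 256 times, regardless of how many pixels a frame contains. The same idea carries over to array-based image libraries.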

All the notebooks required to reproduce the training and validation results of the paper are included in the experiments directory. The training logs with the results reported in our paper are provided inside each notebook. For the RWF-2000 dataset, a summary of the results is also provided in the experiments/RWF-2000/results_summary directory. Note that, for RWF-2000, these directories also include some additional, less important experiments that are not reported in the paper.

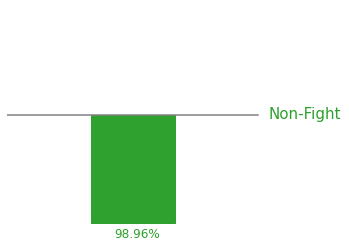

Once you have trained a model, you can try to detect violence in a video of your choice. First, use the preprocess.py script to extract the skeletons. Afterward, use the inference.ipynb notebook to perform the inference. This notebook will output a sequence of frames with the predicted probabilities of violence. Find an example of a frame with a predicted probability below:

With these frames, you can render a video with the aggregated predictions using FFmpeg, which can then be merged with the original video to obtain what is shown in the Demo. The command is:

    ffmpeg -i predictions/VIDEO_NAME-%d.png -r 10 output.mp4

Please cite our paper if this work helps your research:

TODO: citation