This work focuses on recovering sharp text images from blurry ones.

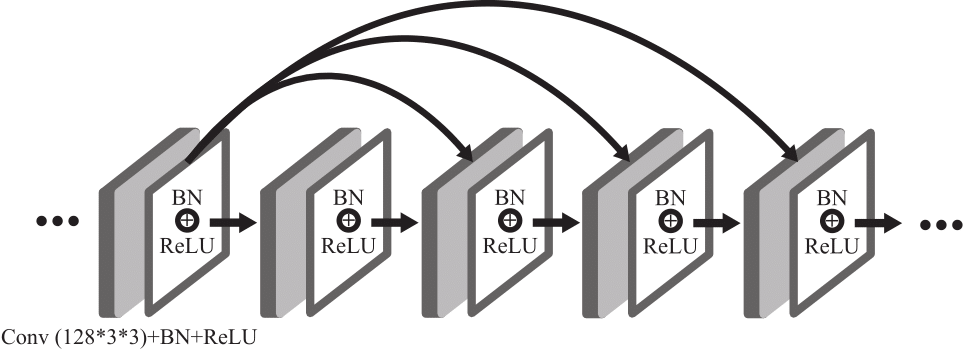

Based on a deep neural network, this work uses a new short connection scheme. Trained with pixel-wise regression, the network recovers an image of higher visual quality from a blurry input.
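To make "pixel regression" concrete, here is a minimal sketch of a per-pixel regression loss. It assumes mean squared error between the network output and the sharp target; the exact loss used in this repository is not stated here. The function name `pixel_regression_loss` is hypothetical, and plain Python lists are used only to keep the example free of dependencies.

```python
def pixel_regression_loss(predicted, sharp):
    """Mean squared error over all pixels of two same-sized grayscale images.

    Each image is a list of rows, and each row is a list of float intensities.
    Assumption: MSE stands in for the pixel regression objective.
    """
    same_height = len(predicted) == len(sharp)
    same_widths = all(len(p) == len(s) for p, s in zip(predicted, sharp))
    if not (same_height and same_widths):
        raise ValueError("images must have the same shape")

    squared_errors = [
        (p - s) ** 2
        for pred_row, sharp_row in zip(predicted, sharp)
        for p, s in zip(pred_row, sharp_row)
    ]
    return sum(squared_errors) / len(squared_errors)


# Toy 2x2 images with intensities in [0, 1].
sharp = [[0.0, 1.0], [1.0, 0.0]]
blurry = [[0.5, 0.5], [0.5, 0.5]]

loss_blurry = pixel_regression_loss(blurry, sharp)   # every pixel is off by 0.5
loss_perfect = pixel_regression_loss(sharp, sharp)   # identical images
```

A lower value means the output is closer to the sharp target pixel by pixel, which is the quantity a regression-trained network is driven to reduce.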

-

Sequential Highway Connection (SHC) structure

-

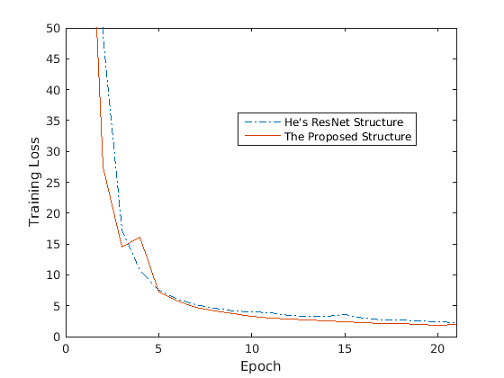

Loss curve comparing with the ResNet Structure:

-

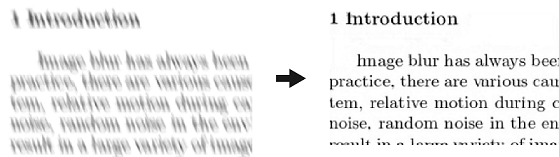

Visualization

If you find this work useful in your research or related work, please consider citing:

@Article{Mei2019,
  author="Mei, Jianhan and Wu, Ziming and Chen, Xiang and Qiao, Yu and Ding, Henghui and Jiang, Xudong",
  title="DeepDeblur: text image recovery from blur to sharp",
  journal="Multimedia Tools and Applications",
  year="2019",
  issn="1573-7721",
  doi="10.1007/s11042-019-7251-y",
  url="https://doi.org/10.1007/s11042-019-7251-y"
}

- Ubuntu 16.04

- Python 2/3. If you need a full set of scientific computing packages, we recommend installing Anaconda.

- TensorFlow >= 1.5.0

- Keras >= 2.2.0

- Optional: for GPU acceleration, install CUDA >= 9.0.
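The version requirements above can be checked before training with a small script. This is a sketch, not part of the repository: the helper names (`parse_version`, `meets_minimum`, `check_requirements`) are hypothetical, and it assumes each package exposes a `__version__` attribute, as TensorFlow and Keras do.

```python
import importlib

# Minimum versions taken from the requirements list above.
MINIMUMS = {"tensorflow": "1.5.0", "keras": "2.2.0"}


def parse_version(text):
    """Turn '2.2.4' or '1.15.0rc1' into a tuple of leading integers."""
    parts = []
    for piece in text.split("."):
        digits = ""
        for ch in piece:
            if not ch.isdigit():
                break
            digits += ch
        if not digits:
            break
        parts.append(int(digits))
    return tuple(parts)


def meets_minimum(installed, minimum):
    """Compare two version strings, padding the shorter one with zeros."""
    a, b = parse_version(installed), parse_version(minimum)
    width = max(len(a), len(b))
    a = a + (0,) * (width - len(a))
    b = b + (0,) * (width - len(b))
    return a >= b


def check_requirements(minimums=MINIMUMS):
    """Return {package: status} without raising if a package is missing."""
    report = {}
    for name, minimum in minimums.items():
        try:
            module = importlib.import_module(name)
        except ImportError:
            report[name] = "not installed"
            continue
        installed = getattr(module, "__version__", None)
        if installed is None:
            report[name] = "unknown version"
        elif meets_minimum(installed, minimum):
            report[name] = "ok ({})".format(installed)
        else:
            report[name] = "too old ({} < {})".format(installed, minimum)
    return report
```

Calling `check_requirements()` and printing the returned dictionary shows which packages are missing or older than the stated minimum. The CUDA version is not checked here, since it is optional and not a Python package.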

- Check the MATLAB script "Matlab/RunProcess.m": the text image path set in the script should point to a directory of raw sharp text images. You can build your own dataset by converting PDF files into raw image files and saving them to that path.

  Then run "Matlab/RunProcess.m" to build the training dataset.

- Check the training data and model saving paths in "train.py"; the training data path should match the output of the previous step. Then run the following script:

python train.py

- Check the testing model and data paths in "test.py". Then run the following script:

python test.py
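For readers without MATLAB, the idea behind the dataset-building step can be illustrated in Python. This is only a sketch under stated assumptions: the blur model used by "Matlab/RunProcess.m" is not described here, so a simple box (mean) blur stands in for it, and the function names `box_blur` and `make_training_pair` are hypothetical.

```python
def box_blur(image, radius=1):
    """Blur a grayscale image with a (2*radius+1) x (2*radius+1) mean filter.

    The image is a list of rows of floats. Pixels outside the image are
    ignored, so averages near the border use fewer samples.
    """
    height, width = len(image), len(image[0])
    blurred = []
    for y in range(height):
        row = []
        for x in range(width):
            total, count = 0.0, 0
            for ny in range(max(0, y - radius), min(height, y + radius + 1)):
                for nx in range(max(0, x - radius), min(width, x + radius + 1)):
                    total += image[ny][nx]
                    count += 1
            row.append(total / count)
        blurred.append(row)
    return blurred


def make_training_pair(sharp, radius=1):
    """Return (blurry_input, sharp_target) for one sharp image."""
    return box_blur(sharp, radius), sharp


# A 3x3 "image" with a single bright pixel in the centre.
sharp = [[0.0, 0.0, 0.0],
         [0.0, 9.0, 0.0],
         [0.0, 0.0, 0.0]]
blurry, target = make_training_pair(sharp)
```

Each pair gives the network a blurry input and the sharp image it should regress to. The bright centre pixel is spread across its neighbours in `blurry`, while `target` is the untouched original.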

This work is released under the MIT License (refer to the LICENSE file for details).