This repository contains the PyTorch code for the paper "Effective Use of Graph Convolution Network and Contextual Sub-Tree for Commodity News Event Extraction" (accepted at the ECONLP workshop at EMNLP 2021).

For a summary, see the presentation materials:

- Presentation Slides

- Poster

- Presentation Recording (.mp4)

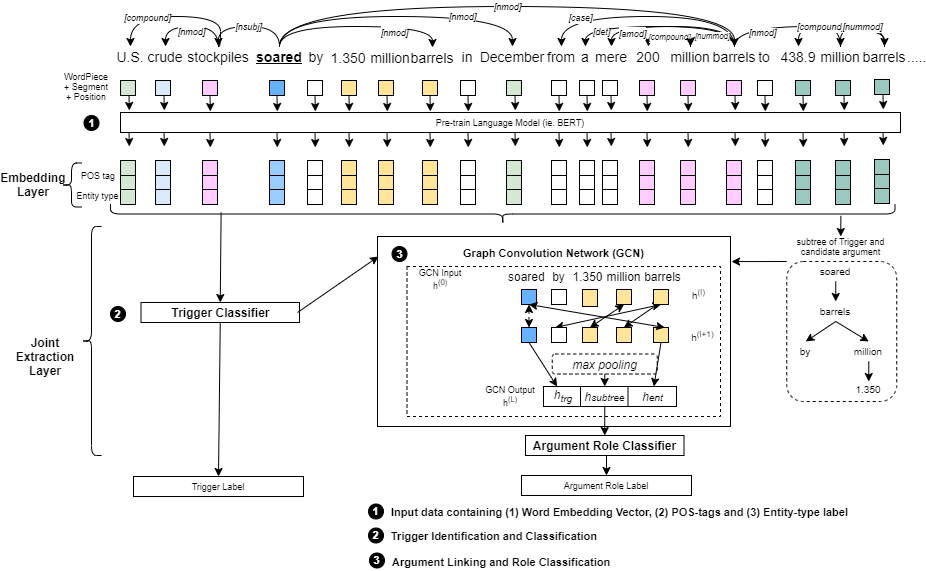

This paper introduces the use of pre-trained language models, eg: BERT and Graph Convolution Network (GCN) over a sub-dependency parse tree, termed here as Contextual Sub-Tree for event extraction in Commodity News. Below is a diagram showing the overall architecture of the proposed solution.
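As a rough illustration of what a graph convolution over a dependency sub-tree does, the sketch below applies one mean-normalized GCN step in plain Python. It is not the code in `model/graph_convolution.py`: the helper names (`adjacency_from_heads`, `gcn_layer`), the head-index encoding of the tree, the normalization, and the toy feature vectors are all assumptions made for this example. In the actual model the token features would come from the language model rather than being hand-written.

```python
from typing import List

Matrix = List[List[float]]


def adjacency_from_heads(heads: List[int]) -> Matrix:
    """Build a symmetric adjacency matrix with self-loops.

    heads[i] is the index of token i's head in the (sub-)tree, or -1 for the root.
    This encoding is an assumption for the example.
    """
    n = len(heads)
    adj = [[1.0 if i == j else 0.0 for j in range(n)] for i in range(n)]
    for child, head in enumerate(heads):
        if head >= 0:
            adj[child][head] = 1.0
            adj[head][child] = 1.0
    return adj


def matmul(a: Matrix, b: Matrix) -> Matrix:
    """Plain-Python matrix product."""
    return [[sum(x * y for x, y in zip(row, col)) for col in zip(*b)] for row in a]


def gcn_layer(adj: Matrix, features: Matrix, weight: Matrix) -> Matrix:
    """One graph convolution step: ReLU(D^-1 A H W)."""
    aggregated = matmul(adj, features)          # sum each node with its neighbours
    degrees = [sum(row) for row in adj]         # node degree, self-loop included
    normalized = [[v / d for v in row] for row, d in zip(aggregated, degrees)]
    projected = matmul(normalized, weight)      # linear projection
    return [[max(0.0, v) for v in row] for row in projected]


# Toy sub-tree with three tokens: token 1 is the root, tokens 0 and 2 depend on it.
heads = [1, -1, 1]
adj = adjacency_from_heads(heads)
features = [[1.0, 0.0], [0.0, 1.0], [1.0, 1.0]]  # stand-in for contextual embeddings
weight = [[1.0, 0.0], [0.0, 1.0]]                # identity weights, for readability
hidden = gcn_layer(adj, features, weight)
```

After one step, each token's vector is the average of its own features and those of its direct neighbours in the tree, which is why restricting the tree to a relevant sub-tree changes what context each token sees.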

The events found in Commodity News are grouped into the following main categories:

- Geo-political events

- Macro-economic events

- Commodity Price Movement events

- Supply-demand Related events

The code has the following requirements:

- Python 3 (version 3.7.4 is used here)

- PyTorch 1.2

- Hugging Face Transformers (version 2.11.0 is used here)

To install the requirements, run `pip install -r requirements.txt`.

- `ComBERT` folder contains the link to download the ComBERT model.

- `dataset` folder contains the training data (`event_extraction_train.json`) and the testing data (`event_extraction_test.json`).

- `data` folder contains (1) `const.py`, with Event Labels, Entity Labels, Argument Role Labels and other constants, and (2) `data_loader.py`, with functions for loading the data.

- `utils` folder contains helper functions and tree-structure-related functions.

- `model` folder contains the main Event Extraction Model (`event_extraction.py`) and the Graph Convolution Model (`graph_convolution.py`).

- `runs` folder contains the output of the executions (described below).

To train the model, run `run_train.bat`.

The results are written to (1) Tensorboard and (2) `runs/logfiles/output_XX.log`, where XX is the system date and timestamp. Results include:

- Training loss

- Evaluation loss

- Event Trigger classification Accuracy, Precision, Recall and F1 scores.

- Argument Role classification Accuracy, Precision, Recall and F1 scores.
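For readers unfamiliar with these metrics, the sketch below shows how accuracy and per-label precision, recall and F1 are conventionally computed from gold and predicted labels. It is only an illustration: the function names and the toy label sequences are invented for this example, and the README does not state how the repository itself aggregates the scores.

```python
from typing import Dict, List


def per_label_scores(gold: List[str], pred: List[str], label: str) -> Dict[str, float]:
    """Precision, recall and F1 for a single label."""
    tp = sum(1 for g, p in zip(gold, pred) if g == label and p == label)
    fp = sum(1 for g, p in zip(gold, pred) if g != label and p == label)
    fn = sum(1 for g, p in zip(gold, pred) if g == label and p != label)
    precision = tp / (tp + fp) if tp + fp else 0.0
    recall = tp / (tp + fn) if tp + fn else 0.0
    # F1 is the harmonic mean of precision and recall.
    f1 = 2 * precision * recall / (precision + recall) if precision + recall else 0.0
    return {"precision": precision, "recall": recall, "f1": f1}


def accuracy(gold: List[str], pred: List[str]) -> float:
    """Fraction of positions where the prediction matches the gold label."""
    return sum(g == p for g, p in zip(gold, pred)) / len(gold)


# Toy argument-role labels (hypothetical data, not taken from the dataset).
gold = ["Item", "NONE", "Item", "Place", "NONE"]
pred = ["Item", "Item", "Item", "NONE", "NONE"]

scores = per_label_scores(gold, pred, "Item")
overall_accuracy = accuracy(gold, pred)
```

Here the label `Item` is predicted three times and is correct twice, and both gold `Item` tokens are found, so precision is 2/3, recall is 1.0 and F1 is 0.8.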

To view the results on Tensorboard, first make sure Tensorboard is installed, then start it with this command: `tensorboard --logdir runs`

| Argument role | Precision | Recall | F1-score |
|---|---|---|---|
| NONE | 0.95 | 0.93 | 0.94 |
| Attribute | 0.75 | 0.94 | 0.83 |
| Item | 0.87 | 0.89 | 0.88 |
| Final_value | 0.75 | 0.81 | 0.79 |
| Initial_reference_point | 0.67 | 0.71 | 0.66 |
| Place | 0.76 | 0.71 | 0.74 |
| Reference_point_time | 0.83 | 0.81 | 0.80 |
| Difference | 0.87 | 0.85 | 0.89 |
| Supplier_consumer | 0.77 | 0.81 | 0.79 |
| Imposer | 0.80 | 0.78 | 0.81 |
| Contract_date | 0.75 | 0.71 | 0.80 |
| Type | 0.95 | 0.89 | 0.96 |
| Imposee | 0.66 | 0.75 | 0.68 |
| Impacted_countries | 0.77 | 0.75 | 0.76 |
| Initial_value | 0.83 | 0.71 | 0.77 |
| Duration | 0.82 | 0.86 | 0.84 |
| Situation | 0.79 | 0.75 | 0.66 |
| Participating_countries | 0.88 | 0.85 | 0.89 |
| Forecaster | 0.75 | 1.00 | 0.80 |
| Forecast | 0.95 | 0.87 | 0.91 |

If you find the code or the paper useful, please cite it as follows:

    @inproceedings{lee2021effective,
      title={Effective Use of Graph Convolution Network and Contextual Sub-Tree for Commodity News Event Extraction},
      author={Lee, Meisin and Soon, Lay-Ki and Siew, Eu-Gene},
      booktitle={Proceedings of the Third Workshop on Economics and Natural Language Processing},
      pages={69--81},
      year={2021}
    }