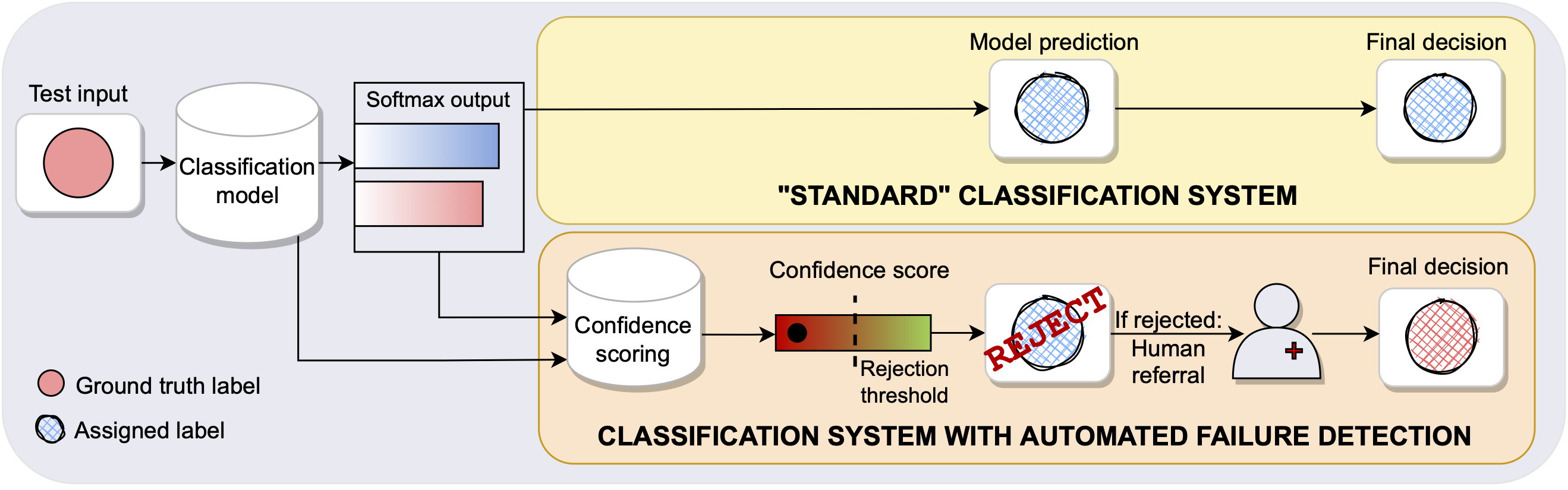

This repository contains the code to reproduce all the experiments in the paper "Failure Detection in Medical Image Classification: A Reality Check and Benchmarking Testbed". In particular, it allows benchmarking of 9 confidence scoring methods on 6 medical imaging datasets. We provide all the training, evaluation and plotting code needed to reproduce the results in the paper.

If you like this repository, please consider citing the associated paper:

@article{bernhardt2022failure,
  title={Failure Detection in Medical Image Classification: A Reality Check and Benchmarking Testbed},
  author={M{\'e}lanie Bernhardt and Fabio De Sousa Ribeiro and Ben Glocker},
  journal={Transactions on Machine Learning Research},
  year={2022},
  url={https://openreview.net/forum?id=VBHuLfnOMf}
}

The repository is divided into the following main folders:

- `data_handling` contains all the code related to data loading and augmentations.
- `configs` contains all the `.yml` configs for the experiments in the paper, as well as helper functions to load modules and data from a given config.
- `models` contains all the model architecture definitions and PyTorch Lightning modules.
- `classification` contains the main training script `train.py`, as well as all the files required to run SWAG in the `classification/swag` subfolder (code taken from the authors) and the files to run the DUQ baseline in the `classification/duq` subfolder (code adapted from the authors).
- `failure_detection` contains all the code for the various confidence scores.
  - The implementations of the individual scores are grouped in the `uncertainty_scores` folder. Note that the Laplace baseline is obtained with the Laplace package, and for TrustScore we used the code from the authors. Similarly, in the `ConfidNet` sub-subfolder you will find a PyTorch Lightning version of the code, adapted from the code of the authors.
  - The main runner for evaluation is `run_evaluation.py`, for which you need to provide the name of the config defining the model.
- `paper_plotting_notebooks` contains the notebooks that produce the plots summarizing the experiment results as in the paper.

All the outputs will be placed in {REPO_ROOT} / "outputs" by default.

- Start by creating our conda environment as specified by the `environment.yml` file at the root of the repository.
- Make sure you update the paths for the RSNA, EyePACS and BUSI datasets in `default_paths.py` to point to the root folder of each dataset, as downloaded from the Kaggle RSNA Pneumonia Detection Challenge for RSNA, from the Kaggle EyePACS Challenge for EyePACS, and from the BUSI dataset page for BUSI. For the EyePACS dataset you will also need to download the test labels and place them at the root of the dataset folder.
- Make sure the root directory of the repository is in your `PYTHONPATH` environment variable.

Ready to go!
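The setup steps above can be sanity-checked with a few lines of Python before launching any training. This is only a sketch and not part of the repository: `check_setup` and its arguments are hypothetical names, and the dataset folders are passed in explicitly rather than read from `default_paths.py`.

```python
import os
from pathlib import Path


def check_setup(repo_root, dataset_roots, env=None):
    """Return a list of problems found; an empty list means the setup looks fine.

    repo_root: root of the cloned repository.
    dataset_roots: mapping from dataset name to the folder configured for it
        (hypothetical argument; mirror whatever you set in default_paths.py).
    env: environment mapping, defaults to os.environ.
    """
    env = os.environ if env is None else env
    repo_root = Path(repo_root).resolve()
    problems = []

    # The repository root must be one of the PYTHONPATH entries.
    entries = [
        Path(p).resolve()
        for p in env.get("PYTHONPATH", "").split(os.pathsep)
        if p
    ]
    if repo_root not in entries:
        problems.append(f"{repo_root} is not in PYTHONPATH")

    # environment.yml is expected at the root of the repository.
    if not (repo_root / "environment.yml").is_file():
        problems.append("environment.yml not found at the repository root")

    # Each dataset root should point at an existing folder.
    for name, root in dataset_roots.items():
        if not Path(root).is_dir():
            problems.append(f"{name} dataset folder does not exist: {root}")

    return problems
```

For example, `check_setup(Path.cwd(), {"RSNA": "/path/to/rsna"})` run from the root of the repository returns the list of issues to fix, where `/path/to/rsna` is a placeholder for your own dataset location.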

In this section, we walk you through all the steps necessary to reproduce the experiments for OrganAMNIST and obtain the boxplots from the paper. The procedure is identical for all other experiments: you just need to change which yml config file you use (see the next section for a description of the configs).

Assuming your current work directory is the root of the repository:

1. Train the 5 classification models: `python classification/train.py --config medmnist/organamnist_resnet_dropout_all_layers.yml`. Note that if you just want to train one model, you can specify a single seed in the config instead of 5 seeds.
2. If you want the SWAG results, additionally train 5 SWAG models: `python classification/swag/train_swag.py --config medmnist/organamnist_resnet_dropout_all_layers.yml`
3. If you want the ConfidNet results, also run `python failure_detection/uncertainty_scorer/confidNet/train.py --config medmnist/organamnist_resnet_dropout_all_layers.yml`
4. If you want the DUQ results, also run `python classification/duq/train.py --config medmnist/organamnist_resnet_dropout_all_layers.yml`
5. You are ready to run the evaluation benchmark with `python failure_detection/run_evaluation.py --config medmnist/organamnist_resnet_dropout_all_layers.yml`
6. The outputs can be found in the `outputs/{DATASET_NAME}/{MODEL_NAME}/{RUN_NAME}` folder. Individual results for each seed are placed in separate subfolders named after the corresponding training seed. More importantly, in the `failure_detection` subfolder of this output folder you will find:
   - `aggregated.csv`: the aggregated tables with average and standard deviation over seeds.
   - `ensemble_metrics.csv`: the metrics for the ensemble model.
7. If you then want to reproduce the boxplot figures from the paper, you can run the `paper_plotting_notebooks/aggregated_curves.ipynb` notebook. Note that this will save the plots inside the `outputs/figures` folder.
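The training and evaluation commands above all take the same `--config` argument, so they can be generated from one config name. The sketch below is an illustration, not a script shipped with the repository: `build_commands` and `OPTIONAL_TRAINERS` are hypothetical names, while the script paths and the `--config` flag are copied from the steps above. It only prints the commands, and it assumes they are run from the root of the repository.

```python
import shlex
import sys

# Script paths exactly as given in the steps above.
TRAIN = "classification/train.py"
OPTIONAL_TRAINERS = {
    "swag": "classification/swag/train_swag.py",
    "confidnet": "failure_detection/uncertainty_scorer/confidNet/train.py",
    "duq": "classification/duq/train.py",
}
EVALUATE = "failure_detection/run_evaluation.py"


def build_commands(config, extras=("swag", "confidnet", "duq"), python=sys.executable):
    """Return the training and evaluation commands, in order, as argument lists.

    config: config name relative to the configs folder.
    extras: optional baselines to train before running the evaluation.
    python: interpreter used to launch each script.
    """
    unknown = [name for name in extras if name not in OPTIONAL_TRAINERS]
    if unknown:
        raise ValueError(f"unknown optional baselines: {unknown}")
    scripts = [TRAIN] + [OPTIONAL_TRAINERS[name] for name in extras] + [EVALUATE]
    return [[python, script, "--config", config] for script in scripts]


commands = build_commands(
    "medmnist/organamnist_resnet_dropout_all_layers.yml", python="python"
)
for cmd in commands:
    # Print only; swap in subprocess.run(cmd, check=True) to actually execute.
    print(shlex.join(cmd))
```

Passing `extras=()` keeps only the base training and the evaluation, which matches skipping the optional SWAG, ConfidNet and DUQ steps.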

In order to reproduce all plots in Figure 2 of the paper, you need to repeat all steps 1-7 for the following provided configs:

- PathMNIST: medmnist/pathmnist_resnet_dropout_all_layers.yml

- OrganAMNIST: medmnist/organamnist_resnet_dropout_all_layers.yml

- TissueMNIST: medmnist/tissuemnist_resnet_dropout_all_layers.yml

- RSNA Pneumonia: rsna/rsna_resnet50_dropout_all_layers.yml

- BUSI Dataset: BUSI/busi_resnet.yml

- EyePACS Dataset: EyePACS/retino_resnet.yml

Just replace the config name with one of the config names above and follow the steps from the previous section (note that the config name argument is expected to be a path relative to the `configs` folder).
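Since config names are resolved relative to the `configs` folder, it can help to check that every config exists before starting a long run over all datasets. The following sketch assumes the `configs` folder sits directly under the repository root. `CONFIGS`, `missing_configs` and `evaluation_commands` are hypothetical names introduced for illustration; the config names and the evaluation script path are copied from this README.

```python
from pathlib import Path

# Config names as listed above, relative to the configs folder.
CONFIGS = {
    "PathMNIST": "medmnist/pathmnist_resnet_dropout_all_layers.yml",
    "OrganAMNIST": "medmnist/organamnist_resnet_dropout_all_layers.yml",
    "TissueMNIST": "medmnist/tissuemnist_resnet_dropout_all_layers.yml",
    "RSNA Pneumonia": "rsna/rsna_resnet50_dropout_all_layers.yml",
    "BUSI": "BUSI/busi_resnet.yml",
    "EyePACS": "EyePACS/retino_resnet.yml",
}


def missing_configs(repo_root, configs=CONFIGS):
    """Return the dataset names whose config file is absent from <repo_root>/configs."""
    configs_dir = Path(repo_root) / "configs"
    return [
        name
        for name, relative_path in configs.items()
        if not (configs_dir / relative_path).is_file()
    ]


def evaluation_commands(configs=CONFIGS):
    """Map each dataset name to its evaluation command (to be run from the repository root)."""
    return {
        name: f"python failure_detection/run_evaluation.py --config {relative_path}"
        for name, relative_path in configs.items()
    }


for name, command in evaluation_commands().items():
    print(f"{name}: {command}")
```

Calling `missing_configs(".")` from the root of the repository should return an empty list if all six configs are in place.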

For this work, the following repositories were useful: