- 2023.07.14 SA-BEV is accepted by ICCV 2023. The paper is available here.

| Config | mAP | NDS | Baidu | |
|---|---|---|---|---|
| SA-BEV-R50 | 35.5 | 46.7 | link | link |
| SA-BEV-R50-MSCT | 37.0 | 48.8 | link | link |
| SA-BEV-R50-MSCT-CBGS | 38.7 | 51.2 | link | link |
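To make the step-to-step differences in the table explicit, the following is a small sketch that computes the mAP and NDS deltas between consecutive configs. The scores are copied from the table above; the `RESULTS` list and the `gains` helper are illustrative names, not part of the SA-BEV code base.

```python
# Scores copied from the results table: (config, mAP, NDS).
RESULTS = [
    ("SA-BEV-R50", 35.5, 46.7),
    ("SA-BEV-R50-MSCT", 37.0, 48.8),
    ("SA-BEV-R50-MSCT-CBGS", 38.7, 51.2),
]


def gains(results):
    """Return (from_config, to_config, delta_mAP, delta_NDS) for consecutive rows."""
    out = []
    for (prev, prev_map, prev_nds), (cur, cur_map, cur_nds) in zip(results, results[1:]):
        # Round to one decimal to match the precision reported in the table.
        out.append((prev, cur, round(cur_map - prev_map, 1), round(cur_nds - prev_nds, 1)))
    return out


for prev, cur, d_map, d_nds in gains(RESULTS):
    print(f"{prev} -> {cur}: mAP {d_map:+.1f}, NDS {d_nds:+.1f}")
```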

a. Create a conda virtual environment and activate it.

conda create -n sabev python=3.8 -y

conda activate sabev

b. Install PyTorch and torchvision following the official instructions.

pip install torch==1.10.0+cu111 torchvision==0.11.0+cu111 torchaudio==0.10.0 -f https://download.pytorch.org/whl/torch_stable.html

c. Install SA-BEV as mmdet3d.

pip install mmcv-full==1.5.3

pip install mmdet==2.27.0

pip install mmsegmentation==0.25.0

pip install -e .

2. Prepare the nuScenes dataset as introduced in nuscenes_det.md and create the pkl for SA-BEV by running:

python tools/create_data_bevdet.py

3. Download nuScenes-lidarseg from the nuScenes official site and put it under data/nuscenes/. Create depth and semantic labels from the point cloud by running:

python tools/generate_point_label.py

Train SA-BEV-R50 with 8 GPUs by running:

bash tools/dist_train.sh configs/sabev/sabev-r50.py 8 --no-validate
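Since training runs with `--no-validate`, checkpoints are only evaluated afterwards. The following is a minimal sketch for picking the most recent EMA checkpoint in a work directory, assuming checkpoints follow the `epoch_<N>_ema.pth` naming seen in the test command (`epoch_24_ema.pth`). The helper name `latest_ema_checkpoint` is hypothetical and not part of the repository.

```python
import re
from pathlib import Path
from typing import Optional

# Assumption: EMA checkpoints are named epoch_<N>_ema.pth, as in epoch_24_ema.pth.
_EMA_PATTERN = re.compile(r"^epoch_(\d+)_ema\.pth$")


def latest_ema_checkpoint(work_dir: str) -> Optional[Path]:
    """Return the EMA checkpoint with the highest epoch number, or None if absent."""
    best_epoch, best_path = -1, None
    for path in Path(work_dir).glob("epoch_*_ema.pth"):
        match = _EMA_PATTERN.match(path.name)
        # Compare epochs numerically so that epoch_10 ranks above epoch_2.
        if match and int(match.group(1)) > best_epoch:
            best_epoch, best_path = int(match.group(1)), path
    return best_path


if __name__ == "__main__":
    ckpt = latest_ema_checkpoint("work_dirs/sabev-r50")
    print(ckpt if ckpt else "no EMA checkpoint found")
```

The returned path can then be passed as the checkpoint argument of `tools/dist_test.sh`.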

Evaluate the trained model with 8 GPUs by running:

bash tools/dist_test.sh configs/sabev/sabev-r50.py work_dirs/sabev-r50/epoch_24_ema.pth 8 --eval bbox

This project would not be possible without multiple great open-source codebases. We list some notable examples below.

If SA-BEV is helpful for your research, please consider citing the following BibTeX entry.

@article{zhang2023sabev,

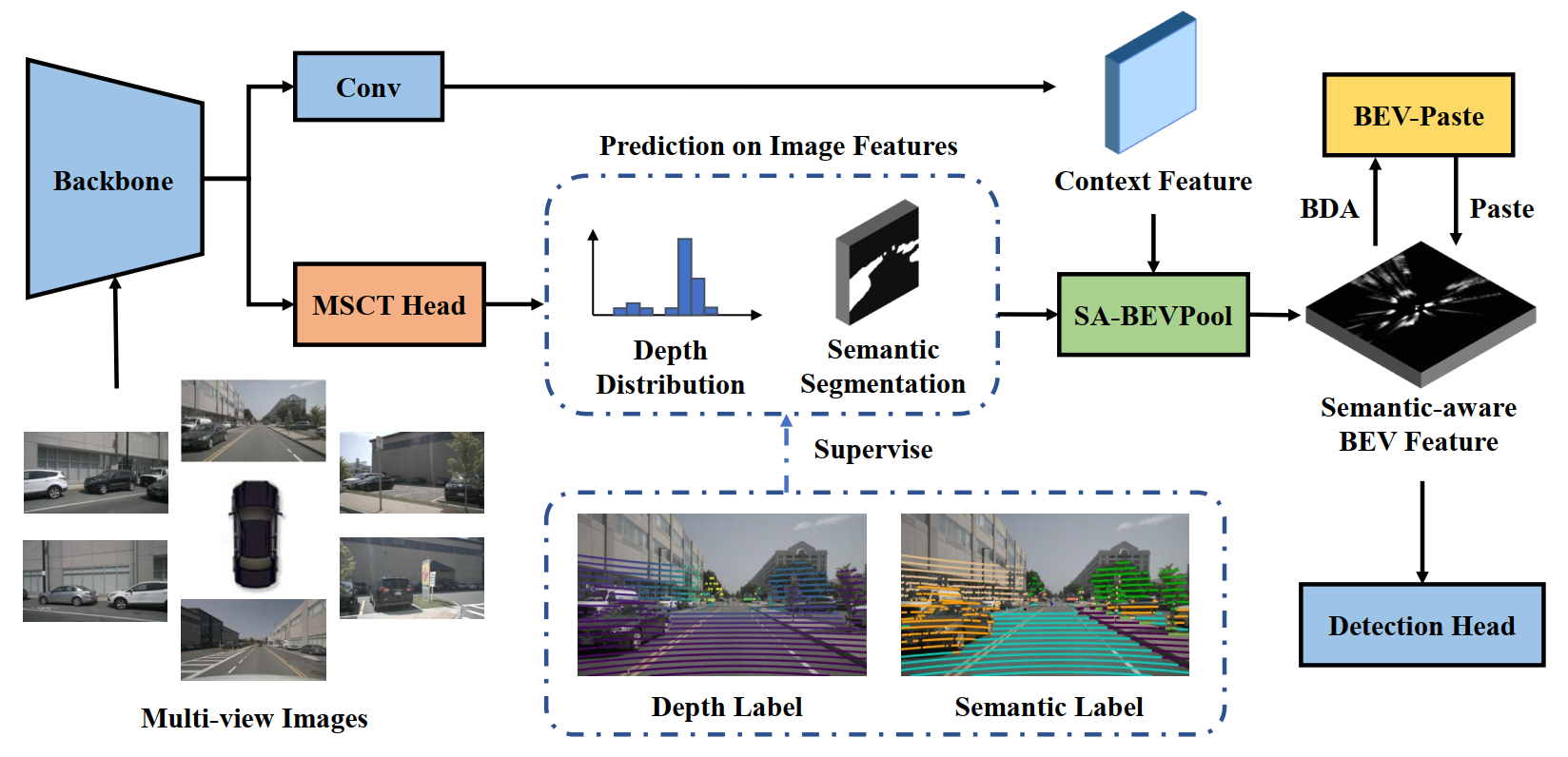

title={SA-BEV: Generating Semantic-Aware Bird's-Eye-View Feature for Multi-view 3D Object Detection},

author={Jinqing, Zhang and Yanan, Zhang and Qingjie, Liu and Yunhong, Wang},

journal={arXiv preprint arXiv:2307.11477},

year={2023},

}