Sewhan Choi1 *, Jungho Kim1 *, Hongjae Shin1, Junwon Choi2 **

1 Hanyang University, Korea 2 Seoul National University, Korea

(*) equal contribution, (**) corresponding author.

ECCV papers (ECCV2024)

ArXiv Preprint (arXiv 2208.05736)

- [2024-09] Release the model code and the trained weights for the 24-epoch and 110-epoch schedules on the nuScenes dataset.

- [2024-10] Trained weights on the Argoverse2 dataset.

- [2024-10] Trained weights for camera and LiDAR fusion on the nuScenes dataset.

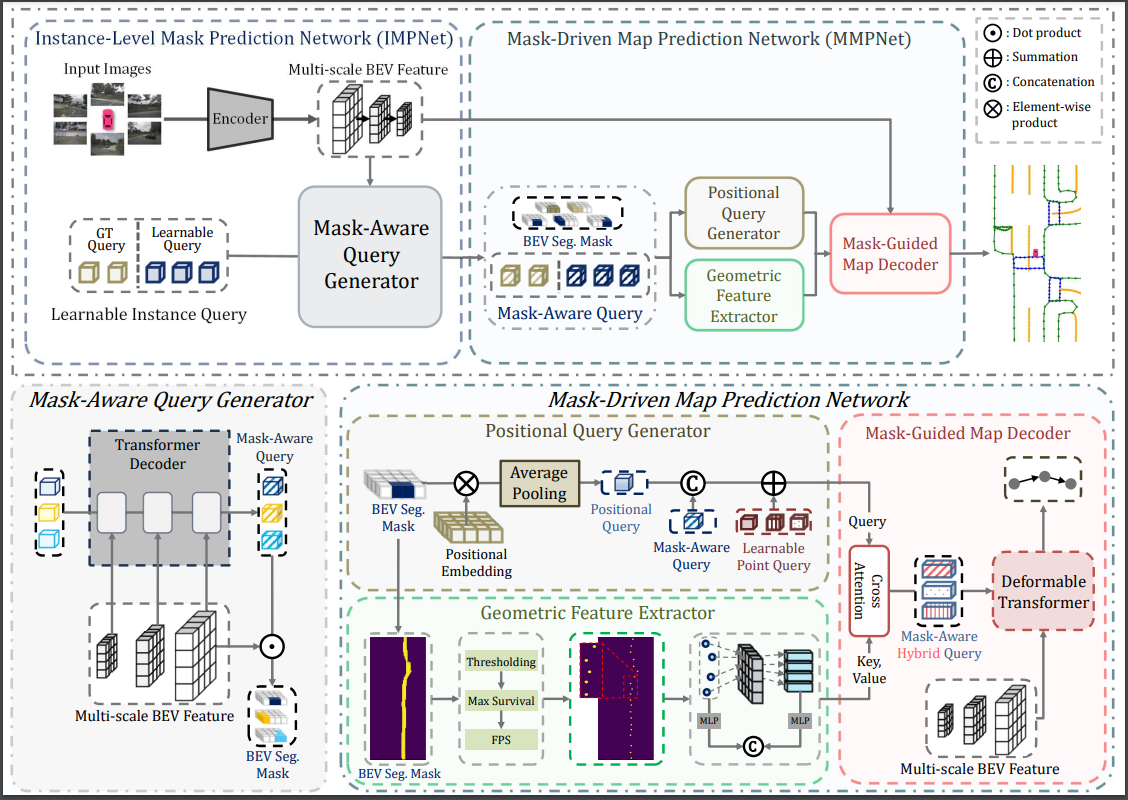

In this paper, we introduce Mask2Map, a novel end-to-end online HD map construction method designed for autonomous driving applications. Our approach focuses on predicting the class and ordered point set of each map instance within a scene, represented in the bird's-eye view (BEV). Mask2Map consists of two primary components: the Instance-Level Mask Prediction Network (IMPNet) and the Mask-Driven Map Prediction Network (MMPNet). IMPNet generates Mask-Aware Queries and BEV Segmentation Masks to capture comprehensive semantic information globally. Subsequently, MMPNet enhances these query features using local contextual information through two submodules: the Positional Query Generator (PQG) and the Geometric Feature Extractor (GFE). PQG extracts instance-level positional queries by embedding BEV positional information into Mask-Aware Queries, while GFE utilizes BEV Segmentation Masks to generate point-level geometric features. However, we observed that the performance of Mask2Map was limited by inter-network inconsistency: IMPNet and MMPNet each match their predictions to the Ground Truth (GT) separately, so the two matchings can differ. To tackle this challenge, we propose the Inter-network Denoising Training method, which guides the model to denoise the output affected by both noisy GT queries and perturbed BEV Segmentation Masks.
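As a rough illustration of the PQG idea described above (embedding BEV positional information into Mask-Aware Queries), the following NumPy sketch pools a positional encoding of the BEV grid under each instance mask and adds the result to the corresponding query. This is not the released implementation: the function names `sinusoidal_bev_positions` and `positional_queries`, the sinusoidal encoding, and the mask-weighted averaging are all assumptions chosen only to make the data flow concrete.

```python
import numpy as np


def sinusoidal_bev_positions(height, width, dim):
    """Hypothetical 2D sinusoidal encoding of a BEV grid, shape (H, W, dim)."""
    assert dim % 4 == 0, "dim must be divisible by 4 (sin/cos for x and y)"
    ys, xs = np.meshgrid(np.arange(height), np.arange(width), indexing="ij")
    k = dim // 4
    freqs = 1.0 / (10000.0 ** (np.arange(k) / k))  # (k,)
    x = xs[..., None] * freqs  # (H, W, k)
    y = ys[..., None] * freqs  # (H, W, k)
    return np.concatenate([np.sin(x), np.cos(x), np.sin(y), np.cos(y)], axis=-1)


def positional_queries(mask_aware_queries, bev_masks, pos_embed):
    """Add a mask-weighted average of BEV positional encodings to each query.

    mask_aware_queries: (N, C) one feature vector per map instance
    bev_masks:          (N, H, W) soft instance masks with values in [0, 1]
    pos_embed:          (H, W, C) positional encoding of the BEV grid
    """
    # Normalise each mask so its weights sum to 1 (all-zero masks stay zero).
    totals = np.clip(bev_masks.sum(axis=(1, 2), keepdims=True), 1e-6, None)
    weights = bev_masks / totals
    # Weighted average of the positional encoding under each mask: (N, C).
    pooled = np.einsum("nhw,hwc->nc", weights, pos_embed)
    return mask_aware_queries + pooled


if __name__ == "__main__":
    rng = np.random.default_rng(0)
    num_instances, height, width, channels = 3, 8, 16, 32  # assumed toy sizes
    queries = rng.normal(size=(num_instances, channels))
    masks = rng.uniform(size=(num_instances, height, width))
    pos = sinusoidal_bev_positions(height, width, channels)
    print(positional_queries(queries, masks, pos).shape)
```

In this sketch a mask concentrated on one BEV cell yields a query shifted by exactly that cell's positional encoding, which is the intuition behind an instance-level positional query; the actual PQG may use learned embeddings and a different pooling scheme.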

Results from the Mask2Map paper

| Method | Backbone | BEV Encoder | LR Schedule | mAP | Config | Download (phase 1) | Download (phase 2) |
|---|---|---|---|---|---|---|---|
| Mask2Map | R50 | bevpool | 24ep | 71.6 | config | model_phase1 | model_phase2 |
| Mask2Map | R50 | bevpool | 110ep | 75.4 | config | model_phase1 | model_phase2 |

Notes:

- All the experiments are performed on 4 NVIDIA GeForce RTX 3090 GPUs.

Mask2Map is based on mmdetection3d. It is also greatly inspired by the following outstanding contributions to the open-source community: MapTR, BEVFusion, BEVFormer, HDMapNet, GKT, VectorMapNet.

If you find Mask2Map useful in your research or applications, please consider giving us a star 🌟 and citing it with the following BibTeX entry.

@inproceedings{Mask2Map,
  title={Mask2Map: Vectorized HD Map Construction Using Bird’s Eye View Segmentation Masks},
  author={Choi, Sewhan and Kim, Jungho and Shin, Hongjae and Choi, Jun Won},
  booktitle={European Conference on Computer Vision},
  year={2024}
}