First, download OpenSCAD from here.

For Mac or Linux users, add the following line to your .zshrc or .bashrc, depending on the shell you're using:

export OPENSCAD_EXEC=<full_path_to_openscad_executable>

For Windows users, add an environment variable named OPENSCAD_EXEC with the path to the OpenSCAD executable.
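To confirm that the variable is visible from Python before running anything else, a quick check like the following may help. This is only a sketch: the helper name `find_openscad` is hypothetical and not part of this repository, and it assumes OPENSCAD_EXEC holds either a full path or a command name on your PATH.

```python
import os
import shutil


def find_openscad(env_var="OPENSCAD_EXEC"):
    """Return the OpenSCAD executable named by the environment variable, or None.

    `find_openscad` is an illustrative helper, not part of this repository.
    """
    path = os.environ.get(env_var)
    if not path:
        return None
    # A full path to an executable file is accepted as-is.
    if os.path.isfile(path) and os.access(path, os.X_OK):
        return path
    # Otherwise treat the value as a command name and look it up on PATH.
    return shutil.which(path)


if __name__ == "__main__":
    exe = find_openscad()
    if exe is None:
        print("OPENSCAD_EXEC is unset or does not point to an executable")
    else:
        print(f"Using OpenSCAD at {exe}")
```

If the script reports that the variable is unset, open a new terminal (or re-source your shell configuration file) so the updated environment is picked up.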

Ensure the modules variable is updated to the correct absolute path for your system.

Then, install the necessary packages in your Python environment:

pip install solidpython

pip install libigl

A working Python version is 3.8, but the latest version should also be compatible.

Activate your environment and run:

python viewer

Clone the libigl repository to your local machine:

git clone https://github.com/libigl/libigl.git

Build libigl with the following commands:

cd libigl

mkdir build

cd build

cmake ..

make

To run the tutorial files, for example, 106_ViewerMenu.cpp, navigate to the bin directory and run:

./106_ViewerMenu

For more detailed instructions on using the libigl repository, visit their tutorial page.

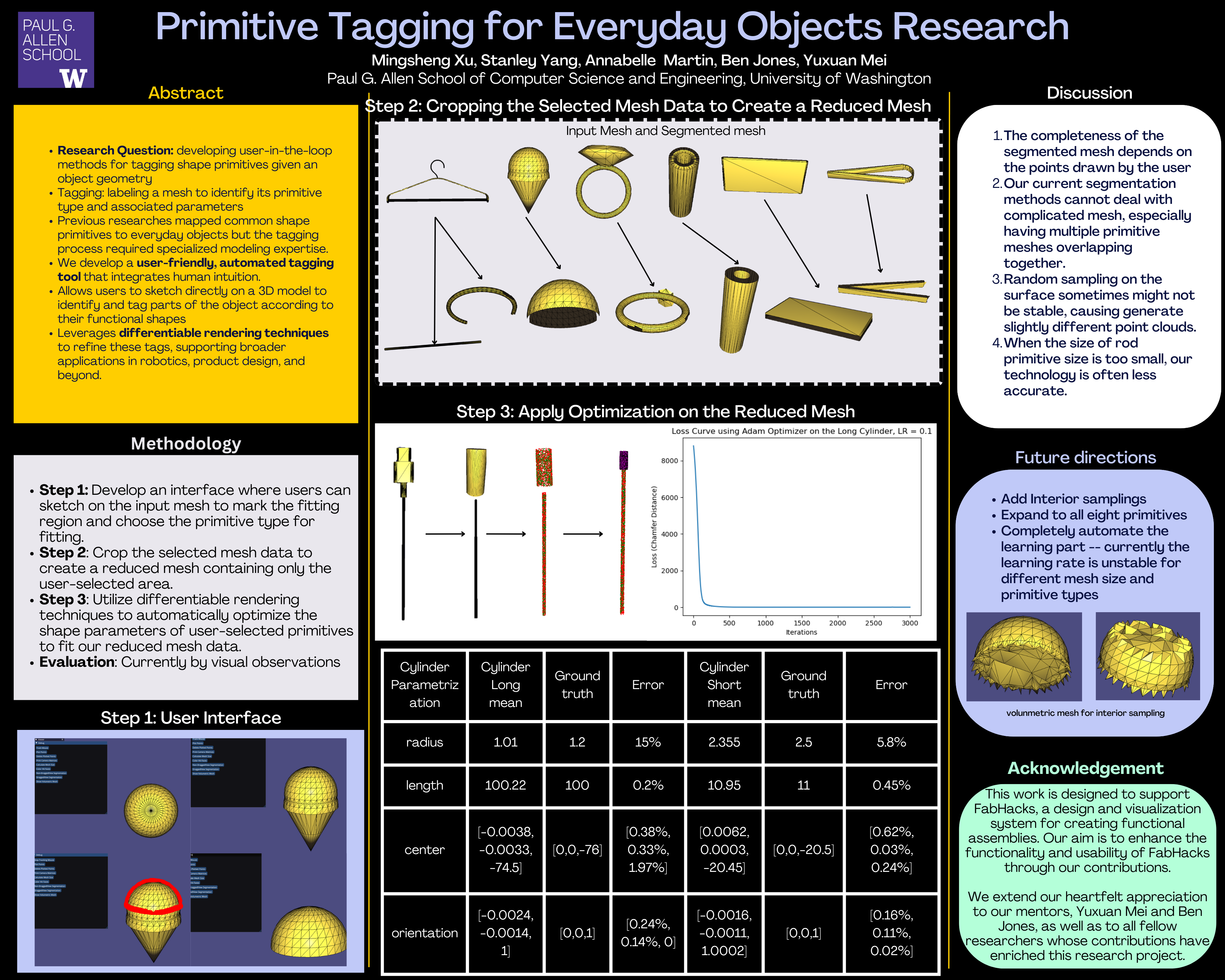

In the step2 directory, 106_ViewerMenu.cpp has been enhanced with additional functionalities, such as tracking mouse coordinates, plotting screen points, and segmenting the mesh for user interaction with the uploaded mesh. This directory also contains the original and reduced meshes.

We use the libigl repository to segment a primitive mesh (reduced mesh) from the original mesh and save it as an OBJ file. Follow these steps:

- Upload a mesh.

- Click the `track mouse` button to start tracking the mouse's screen coordinates.

- Use the `plot points` button to plot intersection points and the `color faces` button to visualize the hit faces.

- Click the `segmentation` button to segment and save the mesh from the original mesh based on the selected region.

Ensure that the selected region is precise and forms a tight boundary for the desired primitive type.
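Conceptually, the segmentation step keeps only the selected faces, re-indexes the vertices they use, and writes the result as an OBJ file. The sketch below illustrates that idea in plain Python. It is not the code in 106_ViewerMenu.cpp; the function names `extract_submesh` and `to_obj` are hypothetical, and it assumes a triangle mesh with 0-based face indices.

```python
def extract_submesh(vertices, faces, selected_faces):
    """Return (sub_vertices, sub_faces) containing only the selected faces.

    vertices: list of (x, y, z) tuples
    faces: list of 0-based vertex index triples
    selected_faces: iterable of indices into `faces`
    """
    remap = {}          # old vertex index -> new vertex index
    sub_vertices = []
    sub_faces = []
    for fi in selected_faces:
        new_face = []
        for vi in faces[fi]:
            if vi not in remap:
                # First time this vertex is seen: copy it into the submesh.
                remap[vi] = len(sub_vertices)
                sub_vertices.append(vertices[vi])
            new_face.append(remap[vi])
        sub_faces.append(tuple(new_face))
    return sub_vertices, sub_faces


def to_obj(vertices, faces):
    """Serialize a mesh as Wavefront OBJ text (OBJ face indices are 1-based)."""
    lines = [f"v {x} {y} {z}" for x, y, z in vertices]
    lines += ["f " + " ".join(str(i + 1) for i in face) for face in faces]
    return "\n".join(lines) + "\n"


if __name__ == "__main__":
    # A unit square split into two triangles; keep only the second one.
    V = [(0, 0, 0), (1, 0, 0), (1, 1, 0), (0, 1, 0)]
    F = [(0, 1, 2), (0, 2, 3)]
    sub_v, sub_f = extract_submesh(V, F, [1])
    print(to_obj(sub_v, sub_f))
```

Vertices not referenced by any selected face are dropped, which is why a loose selection that includes stray faces also pulls in unwanted geometry.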

In the step3_parameters_learning directory, we include seven different types of primitives along with corresponding parameter learning .ipynb files.

The Python environment for these Jupyter Notebook files requires Python 3.10, along with PyTorch and PyTorch3D dependencies.
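Before opening the notebooks, it can be useful to verify the environment. The following is a minimal sketch, assuming the packages are importable as `torch` and `pytorch3d` and treating Python 3.10 as a minimum version (that interpretation is an assumption). The helper `check_environment` is hypothetical and not part of this repository.

```python
import importlib.util
import sys


def check_environment(required=("torch", "pytorch3d"), min_python=(3, 10)):
    """Return a list of human-readable problems; an empty list means all checks passed."""
    problems = []
    if sys.version_info[:2] < min_python:
        found = ".".join(str(n) for n in sys.version_info[:2])
        wanted = ".".join(str(n) for n in min_python)
        problems.append(f"Python {wanted}+ expected, found {found}")
    for name in required:
        # find_spec returns None when a top-level package cannot be located.
        if importlib.util.find_spec(name) is None:
            problems.append(f"missing package: {name}")
    return problems


if __name__ == "__main__":
    issues = check_environment()
    print("Environment looks OK" if not issues else "\n".join(issues))
```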

Because randomly sampling points on the surface of each reduced mesh is unstable, the learning results for Clip, Hook, and Hole may occasionally be inaccurate. If the results are incorrect, rerun the file.
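To illustrate where the run-to-run variation comes from, here is a generic sketch of area-weighted random sampling on a triangle mesh, written in plain Python. It is not the sampling code used in the notebooks; the names `triangle_area` and `sample_surface` are hypothetical. Each call without a seed draws a different point set, while passing a fixed `seed` makes the draw repeatable.

```python
import math
import random


def triangle_area(a, b, c):
    """Area of a 3D triangle: half the norm of the cross product of two edges."""
    ux, uy, uz = (b[i] - a[i] for i in range(3))
    vx, vy, vz = (c[i] - a[i] for i in range(3))
    cx, cy, cz = uy * vz - uz * vy, uz * vx - ux * vz, ux * vy - uy * vx
    return 0.5 * math.sqrt(cx * cx + cy * cy + cz * cz)


def sample_surface(vertices, faces, n, seed=None):
    """Draw n points on the mesh surface, choosing faces in proportion to area."""
    rng = random.Random(seed)
    areas = [triangle_area(*(vertices[i] for i in f)) for f in faces]
    points = []
    for f in rng.choices(faces, weights=areas, k=n):
        a, b, c = (vertices[i] for i in f)
        # Square-root warp gives uniformly distributed barycentric coordinates.
        r1, r2 = rng.random(), rng.random()
        s = math.sqrt(r1)
        w0, w1, w2 = 1 - s, s * (1 - r2), s * r2
        points.append(tuple(w0 * a[i] + w1 * b[i] + w2 * c[i] for i in range(3)))
    return points


if __name__ == "__main__":
    V = [(0.0, 0.0, 0.0), (1.0, 0.0, 0.0), (1.0, 1.0, 0.0), (0.0, 1.0, 0.0)]
    F = [(0, 1, 2), (0, 2, 3)]
    # Same seed, same points; omit the seed to see the variation between runs.
    print(sample_surface(V, F, 3, seed=0) == sample_surface(V, F, 3, seed=0))
```

With few samples on a small or thin region, two unseeded draws can cover the surface quite differently, which is one plausible reason a rerun may give a better fit.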

For some small meshes, the results of our current techniques deviate slightly from the ground truth. As the size of the mesh increases, our techniques become more accurate and the margin of error shrinks.

We have uploaded the volumetric mesh, which includes interior faces, for the reduced mesh in the step2 directory. In future work, we aim to reconstruct the parametrization function for each primitive to sample points both on the surface and interior, improving the accuracy and stability of the learning part.

Our team has presented our research poster in two research showcase sessions. The poster highlights our findings and the methodologies used in the project. You can view the poster below: