This repository contains the implementation of the paper:

TI2V-Zero: Zero-Shot Image Conditioning for Text-to-Video Diffusion Models

Haomiao Ni, Bernhard Egger, Suhas Lohit, Anoop Cherian, Ye Wang, Toshiaki Koike-Akino, Sharon X. Huang, Tim K Marks

IEEE/CVF Conference on Computer Vision and Pattern Recognition (CVPR), 2024

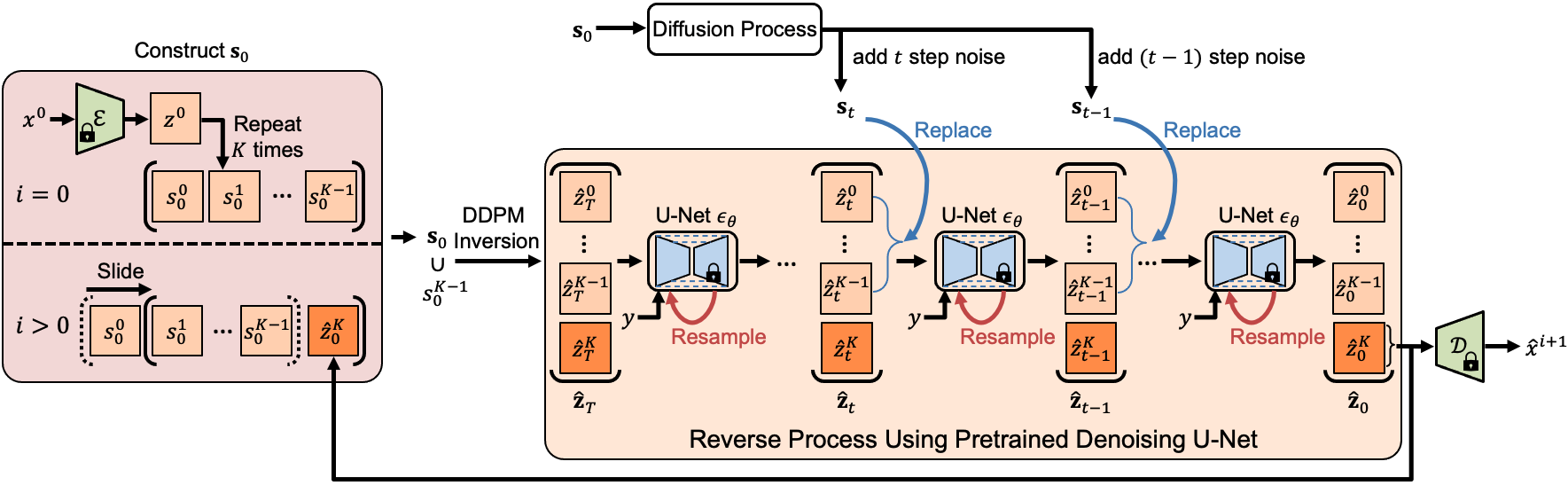

Text-conditioned image-to-video generation (TI2V) aims to synthesize a realistic video starting from a given image (e.g., a woman's photo) and a text description (e.g., "a woman is drinking water"). Existing TI2V frameworks often require costly training on video-text datasets and specific model designs for text and image conditioning. In this work, we propose TI2V-Zero, a zero-shot, tuning-free method that empowers a pretrained text-to-video (T2V) diffusion model to be conditioned on a provided image, enabling TI2V generation without any optimization, fine-tuning, or external modules. Our approach leverages a pretrained T2V diffusion foundation model as the generative prior. To guide video generation with the additional image input, we propose a "repeat-and-slide" strategy that modulates the reverse denoising process, allowing the frozen diffusion model to synthesize a video frame by frame, starting from the provided image. To ensure temporal continuity, we employ a DDPM inversion strategy to initialize the Gaussian noise for each newly synthesized frame, and a resampling technique to help preserve visual details. We conduct comprehensive experiments on both domain-specific and open-domain datasets, where TI2V-Zero consistently outperforms a recent open-domain TI2V model. Furthermore, we show that TI2V-Zero can seamlessly extend to other tasks, such as video infilling and prediction, when provided with more images. Its autoregressive design also supports long video generation.
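The repeat-and-slide strategy and the DDPM-style noise initialization described above can be illustrated with a toy sketch in plain Python. This is not the code in this repository: frames are arbitrary Python values, `denoise_last_frame` is a hypothetical stand-in for the frozen T2V model's modulated reverse process, and the window length `k` is illustrative. `ddpm_forward` shows only the standard closed-form DDPM forward (noising) step, x_t = sqrt(alpha_bar_t) * x_0 + sqrt(1 - alpha_bar_t) * eps, with the linear beta schedule from the original DDPM paper; exactly how TI2V-Zero uses such a step to initialize each new frame's noise is an assumption here.

```python
import math
import random
from collections import deque
from typing import Callable, List, Sequence, TypeVar

Frame = TypeVar("Frame")


def repeat_and_slide(
    image: Frame,
    num_new_frames: int,
    k: int,
    denoise_last_frame: Callable[[List[Frame]], Frame],
) -> List[Frame]:
    """Toy frame-by-frame generation with a sliding conditioning window.

    Hypothetical setup: the video model works on windows of k frames, and
    denoise_last_frame(context) takes the k-1 known frames and returns the
    next frame. In TI2V-Zero, that role is played by the frozen T2V model.
    """
    if k < 2:
        raise ValueError("window length k must be at least 2")
    # "Repeat": fill every conditioning slot with the provided image.
    context = deque([image] * (k - 1), maxlen=k - 1)
    video = [image]
    for _ in range(num_new_frames):
        new_frame = denoise_last_frame(list(context))
        video.append(new_frame)
        # "Slide": a full deque with maxlen drops its oldest frame on append.
        context.append(new_frame)
    return video


def linear_alpha_bars(num_steps: int = 1000, beta_start: float = 1e-4,
                      beta_end: float = 0.02) -> List[float]:
    """alpha_bar_t = prod_{s<=t} (1 - beta_s) for a linear beta schedule."""
    if num_steps < 2:
        raise ValueError("num_steps must be at least 2")
    alpha_bars, prod = [], 1.0
    for i in range(num_steps):
        beta = beta_start + (beta_end - beta_start) * i / (num_steps - 1)
        prod *= 1.0 - beta
        alpha_bars.append(prod)
    return alpha_bars


def ddpm_forward(x0: Sequence[float], t: int, alpha_bars: Sequence[float],
                 rng: random.Random) -> List[float]:
    """Sample x_t ~ q(x_t | x_0) = N(sqrt(a) * x_0, (1 - a) * I), a = alpha_bar_t."""
    a = alpha_bars[t]
    return [math.sqrt(a) * v + math.sqrt(1.0 - a) * rng.gauss(0.0, 1.0) for v in x0]


if __name__ == "__main__":
    # Dummy "model": the next frame is the sum of the context frames.
    print(repeat_and_slide(1, num_new_frames=4, k=3,
                           denoise_last_frame=lambda ctx: sum(ctx)))
    # -> [1, 2, 3, 5, 8]
    alpha_bars = linear_alpha_bars()
    # A previous "frame" (e.g., a flattened latent) noised to an intermediate step.
    print(ddpm_forward([0.5, -0.5, 1.0], t=500, alpha_bars=alpha_bars,
                       rng=random.Random(0)))
```

The dummy model makes the sliding visible: because each new frame depends only on the last `k - 1` frames, the output follows the Fibonacci pattern once the repeated copies of the input image have slid out of the window.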

- Install the required dependencies. First, create a conda environment using `conda create --name ti2v python=3.8`. Activate the conda environment using `conda activate ti2v`. Then use `pip install -r requirements.txt` to install the remaining dependencies.
- Run `python initialization.py` to download the pretrained ModelScope models from Hugging Face.
- Run `python demo_img2vid.py` to generate videos from an input image and a text input.

You can set the image path and text input manually in `demo_img2vid.py`. By default, the script uses the example images and text inputs. The example images in the `examples/` folder were generated using Stable Diffusion.

MUG Dataset

- Download the MUG dataset from its website.
- After installing the dependencies, run `python gen_video_mug.py` to generate videos. Please set the paths in the code files if needed.

UCF101 Dataset

- Download the UCF101 dataset from its website.
- Preprocess the dataset to sample frames from the videos. You may use our preprocessing function in `dataset/datasets_ucf.py`.
- After installing the dependencies, run `python gen_video_ucf.py` to generate videos. Please set the paths in the code files if needed.
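Sampling frames from a video for preprocessing usually comes down to choosing which frame indices to keep. The sketch below shows two common choices: a fixed-stride clip and evenly spaced frames across the whole video. Both function names and their parameters are hypothetical; this is not the preprocessing function in `dataset/datasets_ucf.py`, whose sampling scheme may differ. Decoding the selected frames (e.g., with OpenCV or PyAV) is left out.

```python
from typing import List


def strided_clip_indices(num_frames: int, clip_len: int, stride: int = 1,
                         start: int = 0) -> List[int]:
    """Indices of clip_len frames taken every `stride` frames from `start`."""
    if clip_len <= 0 or stride <= 0:
        raise ValueError("clip_len and stride must be positive")
    last = start + (clip_len - 1) * stride
    if start < 0 or last >= num_frames:
        raise ValueError("requested clip does not fit inside the video")
    return list(range(start, last + 1, stride))


def evenly_spaced_indices(num_frames: int, num_samples: int) -> List[int]:
    """num_samples indices spread from the first to the last frame (inclusive)."""
    if not 0 < num_samples <= num_frames:
        raise ValueError("need 0 < num_samples <= num_frames")
    if num_samples == 1:
        return [0]
    # Integer arithmetic avoids float rounding surprises.
    return [i * (num_frames - 1) // (num_samples - 1) for i in range(num_samples)]


if __name__ == "__main__":
    print(strided_clip_indices(num_frames=100, clip_len=4, stride=2, start=10))
    # -> [10, 12, 14, 16]
    print(evenly_spaced_indices(num_frames=100, num_samples=5))
    # -> [0, 24, 49, 74, 99]
```

A strided clip keeps local motion at a fixed frame rate, while evenly spaced sampling covers the whole action at the cost of larger, uneven gaps between frames.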

See CONTRIBUTING.md for our policy on contributions.

Released under the AGPL-3.0-or-later license, as found in the LICENSE.md file.

All files, except as noted below:

Copyright (c) 2024 Mitsubishi Electric Research Laboratories (MERL)

SPDX-License-Identifier: AGPL-3.0-or-later

The following files

autoencoder.py, diffusion.py, modelscope_t2v.py, unet_sd.py

were adapted from https://github.com/modelscope/modelscope/tree/57791a8cc59ccf9eda8b94a9a9512d9e3029c00b/modelscope/models/multi_modal/video_synthesis (license included in LICENSES/Apache-2.0.txt):

Copyright (c) 2024 Mitsubishi Electric Research Laboratories (MERL)

Copyright (c) 2021-2022 The Alibaba Fundamental Vision Team Authors

The following file

modelscope_t2v_pipeline.py

was adapted from https://github.com/modelscope/modelscope/blob/bedec553c17b7e297da9db466fee61ccbd4295ba/modelscope/pipelines/multi_modal/text_to_video_synthesis_pipeline.py (license included in LICENSES/Apache-2.0.txt):

Copyright (c) 2024 Mitsubishi Electric Research Laboratories (MERL)

Copyright (c) Alibaba, Inc. and its affiliates.

The following file

util.py

was adapted from https://github.com/modelscope/modelscope/blob/57791a8cc59ccf9eda8b94a9a9512d9e3029c00b/modelscope/models/cv/anydoor/ldm/util.py (license included in LICENSES/Apache-2.0.txt):

Copyright (c) 2024 Mitsubishi Electric Research Laboratories (MERL)

Copyright (c) 2021-2022 The Alibaba Fundamental Vision Team Authors. All rights reserved.

The following files:

dataset/datasets_mug.py, dataset/datasets_ucf.py

were adapted from LFDM (license included in LICENSES/BSD-2-Clause.txt):

Copyright (c) 2024 Mitsubishi Electric Research Laboratories (MERL)

Copyright (C) 2023 NEC Laboratories America, Inc. ("NECLA"). All rights reserved.

The following files

demo_img2vid.py, gen_video_mug.py, gen_video_ucf.py

were adapted from LFDM (license included in LICENSES/BSD-2-Clause.txt):

Copyright (c) 2024 Mitsubishi Electric Research Laboratories (MERL)

Copyright (C) 2023 NEC Laboratories America, Inc. ("NECLA"). All rights reserved.

If you use our work, please cite it using the following BibTeX entry:

@inproceedings{ni2024ti2v,
  title={TI2V-Zero: Zero-Shot Image Conditioning for Text-to-Video Diffusion Models},
  author={Ni, Haomiao and Egger, Bernhard and Lohit, Suhas and Cherian, Anoop and Wang, Ye and Koike-Akino, Toshiaki and Huang, Sharon X and Marks, Tim K},
  booktitle={Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition},
  year={2024}
}