ALET is an abbreviation for Automated Labeling of Equipment and Tools. In Turkish, "alet" also means "tool".

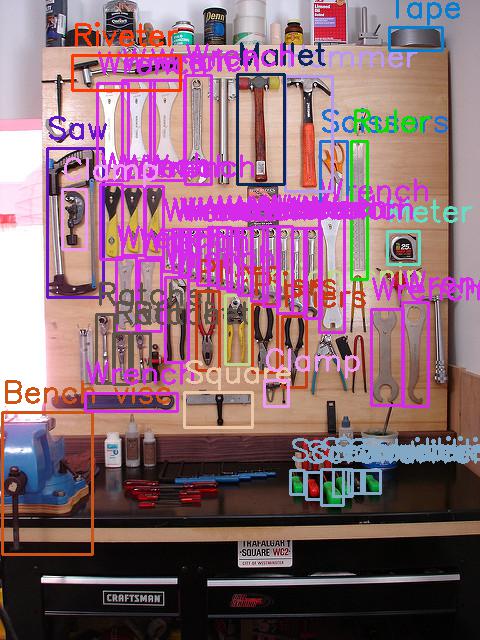

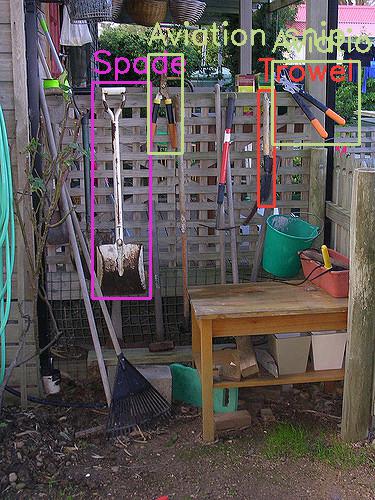

METU-ALET is an image dataset for detecting tools in the wild. It covers tools from categories such as farming, gardening, office, stonemasonry, vehicle, woodworking and workshop. The images contain a total of 22841 bounding boxes across 49 tool categories.

For more information, please check out the paper:

F. C. Kurnaz, B. Hocaoglu, M. K. Yilmaz, I. Sulo, S. Kalkan, "ALET (Automated Labeling of Equipment and Tools): A Dataset, a Baseline and a Usecase for Tool Detection in the Wild", ECCV 2020 International Workshop on Assistive Computer Vision and Robotics, 2020. [Arxiv], [Springer copy]

Most of the scenes in the dataset are not generated or staged; they are snapshots of real environments, with or without people using the tools. The remaining scenes are generated and therefore constitute synthetic data.

The images in the METU-ALET dataset fall into three categories:

Downloaded images were crawled from the Internet, using the following websites: Creativecommons, Wikicommons, Flickr, Pexels, Unsplash, Shopify, Pixabay, Everystock and Imfree. We took licensing into account while crawling and kept only royalty-free images from these websites. Images of this type contain 11114 bounding boxes.


Photographed images were taken by us and mostly show office and workshop scenes. Images of this type contain 765 bounding boxes.
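The per-source counts can be checked against the dataset total. The short sketch below assumes that the 22841 bounding boxes reported for the whole dataset are split exactly across the three image sources and that all counts refer to the same release; under that assumption, the synthesized images (the third source) account for the remainder. The implied figure is plain arithmetic, not a number reported by the authors.

```python
# Bounding-box counts as reported in this README.
TOTAL_BOXES = 22841
DOWNLOADED_BOXES = 11114
PHOTOGRAPHED_BOXES = 765


def implied_synthetic_boxes(total, downloaded, photographed):
    """Boxes left for synthesized images, assuming the three sources
    partition the total (an assumption, not a reported figure)."""
    remainder = total - downloaded - photographed
    if remainder < 0:
        raise ValueError("per-source counts exceed the reported total")
    return remainder


synthetic = implied_synthetic_boxes(TOTAL_BOXES, DOWNLOADED_BOXES, PHOTOGRAPHED_BOXES)
print(synthetic)  # 10962
```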


To ensure that there are at least 200 instances of each tool, we also developed a simulation environment and collected synthesized images. For this, we used the Unity3D platform with 3D models of tools acquired from UnityStore. Each scene is generated with the following steps:

- Scene Background: We created a room-like environment with 4 walls and 10 different random objects (such as a chair, sofa, corner piece and television) in static positions. At the center of the room, we spawned one of six different tables, chosen randomly from Uniform(1, 6). To introduce more randomness, we also randomly dropped unrelated objects such as mugs and bottles.

- Camera: Each coordinate of the camera position (x, y, z) was sampled from Uniform(-3, 3). The camera was oriented towards the center of the table top.

- Tools: In each scene, we spawned N ~ Uniform(5, 20) tools, each selected randomly from Uniform(1, 49), i.e. from the 49 tool categories. The spawned tools are dropped onto the table from a position [x, y, z] above the table, with each coordinate sampled from Uniform(0, 1). The initial orientation about each axis is an angle sampled from Uniform(0, 360).

Special cases, such as when the sampled camera does not see the table top, are handled with hand-designed rules.
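The sampling steps above can be sketched in Python. This is a minimal illustration under stated assumptions, not the authors' Unity code: it only draws the random parameters of one scene, reading Uniform(1, 6), Uniform(5, 20) and Uniform(1, 49) as discrete uniform draws and the other ranges as continuous ones. `TABLE_TOP_CENTER`, `ToolSpawn`, `SceneParams`, `look_at` and `sample_scene` are hypothetical names, the table-top height is made up, the drop offset is assumed to be relative to the table-top centre, and the hand-designed rules for special cases are not reproduced.

```python
import math
import random
from dataclasses import dataclass, field

NUM_TABLES = 6          # six table models; one is spawned per scene
NUM_TOOL_CLASSES = 49   # tool categories in METU-ALET
# Hypothetical table-top centre in scene coordinates (y is up, as in Unity).
TABLE_TOP_CENTER = (0.0, 0.75, 0.0)


@dataclass
class ToolSpawn:
    class_id: int          # tool category, 1..49
    drop_position: tuple   # point the tool is released from, above the table
    euler_degrees: tuple   # initial rotation about x, y, z in degrees


@dataclass
class SceneParams:
    table_id: int
    camera_position: tuple
    view_direction: tuple  # unit vector, or None if undefined
    tools: list = field(default_factory=list)


def look_at(origin, target):
    """Unit vector pointing from origin to target; None if the points coincide."""
    d = [t - o for o, t in zip(origin, target)]
    norm = math.sqrt(sum(c * c for c in d))
    if norm == 0.0:
        return None
    return tuple(c / norm for c in d)


def sample_scene(rng):
    """Draw one scene's parameters following the three steps listed above."""
    table_id = rng.randint(1, NUM_TABLES)                       # Uniform(1, 6)
    camera = tuple(rng.uniform(-3.0, 3.0) for _ in range(3))    # Uniform(-3, 3) per axis
    scene = SceneParams(table_id, camera, look_at(camera, TABLE_TOP_CENTER))
    for _ in range(rng.randint(5, 20)):                         # N ~ Uniform(5, 20)
        # Assumed: the Uniform(0, 1) offset is relative to the table-top centre.
        offset = tuple(rng.uniform(0.0, 1.0) for _ in range(3))
        scene.tools.append(ToolSpawn(
            class_id=rng.randint(1, NUM_TOOL_CLASSES),          # Uniform(1, 49)
            drop_position=tuple(c + o for c, o in zip(TABLE_TOP_CENTER, offset)),
            euler_degrees=tuple(rng.uniform(0.0, 360.0) for _ in range(3)),
        ))
    return scene


if __name__ == "__main__":
    scene = sample_scene(random.Random(0))  # seeded for reproducibility
    print(scene.table_id, len(scene.tools), scene.view_direction)
```

In a full pipeline, these parameters would be passed to the simulator, which places the objects, lets physics settle the dropped tools and renders the image. Because camera positions are sampled independently of the scene layout, some draws will not see the table top; one simple option for such cases is to reject and resample, although the original rules may differ.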

With recent advances in robotics, we are reaching a point where humans and robots perform tasks collaboratively. With this dataset, we aim to address the object detection problem for robots, so that they can detect tools that they can grasp or carry. Since the definition of a tool is very broad, we include in the dataset only tools that can be manipulated by robots.

Datasets used in robotics consider only a limited number of categories and instances and mainly focus on detecting tool affordances, so they are not suitable for training a deep object detector. With METU-ALET, we introduce a dataset of real-life scenes in which the tools are as unorganized as possible and appear in their natural settings or while people are using them.

The scenes we consider also pose several challenges for object detection, including the small scale of the tools, their articulated nature, occlusion and inter-class invariance.


For the human worker safety use case, we created a CNN consisting of three 2D convolutional layers and two fully connected layers. Each convolutional layer is followed by a batch normalization layer, and the layers are followed by ReLU activations except for the final layer, which has five outputs with sigmoid activation. The network thus performs five-class multi-label classification (one class per safety tool) with a binary cross-entropy loss. It is trained on the ALET Safety Dataset.
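Multi-label classification differs from ordinary multi-class classification in the output layer and loss: each of the five sigmoid outputs is an independent yes/no decision, so one image can be labeled with several safety tools at once, whereas a softmax output is normally used to pick a single class. The pure-Python sketch below illustrates only that output layer and loss for a single image. It is not the authors' implementation: the convolutional layers are omitted, the 0.5 decision threshold is an assumption, and class indices 0 to 4 stand in for the safety tools, which are not named here. Deep learning frameworks provide the same computation directly, e.g. PyTorch's `BCEWithLogitsLoss`.

```python
import math

NUM_SAFETY_CLASSES = 5  # one sigmoid output per safety tool


def sigmoid(x):
    """Numerically stable logistic function."""
    if x >= 0:
        return 1.0 / (1.0 + math.exp(-x))
    z = math.exp(x)
    return z / (1.0 + z)


def multilabel_bce(logits, targets, eps=1e-7):
    """Mean binary cross-entropy over independent per-class sigmoid outputs."""
    if not len(logits) == len(targets) == NUM_SAFETY_CLASSES:
        raise ValueError("expected one logit and one 0/1 target per safety class")
    total = 0.0
    for logit, y in zip(logits, targets):
        p = min(max(sigmoid(logit), eps), 1.0 - eps)  # clamp to avoid log(0)
        total += -(y * math.log(p) + (1 - y) * math.log(1.0 - p))
    return total / NUM_SAFETY_CLASSES


def predict(logits, threshold=0.5):
    """Threshold each output independently: several items may be present at once."""
    return [int(sigmoid(logit) >= threshold) for logit in logits]


# Hypothetical raw scores for one image, one per safety class index 0..4.
print(predict([2.0, -1.5, 0.3, -3.0, 4.0]))  # [1, 0, 1, 0, 1]
```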

An alternative approach would be to combine the outputs of the tool detector and a pose detector. However, given that the tool detection networks have only acceptable performance, we adopted a separate network for safety detection. Moreover, a tool detector would detect all 49 tool categories in a scene, 43 of which are irrelevant to our safety use case.

The first version of the ALET Dataset is accessible from:

- The revised ALET dataset is available here.

The METU-ALET dataset is copyright-free for research and commercial purposes, provided that it is properly cited (see below):

- The "downloaded" images were selected from copyright-free images on Creativecommons, Wikicommons, Flickr, Pexels, Unsplash, Shopify, Pixabay, Everystock and Imfree.

- Our additions to the images (photographed and synthesized) and our object annotations are also provided copyright-free.

If you use the METU-ALET dataset or the related resources shared here, please cite the following work:

@inproceedings{METU_ALET,
  title={ALET (Automated Labeling of Equipment and Tools): A Dataset for Tool Detection and Human Worker Safety Detection},
  author={Kurnaz, Fatih Can and Hocao{\u{g}}lu, Burak and Y{\i}lmaz, Mert Kaan and S{\"u}lo, {\.I}dil and Kalkan, Sinan},
  booktitle={European Conference on Computer Vision Workshop on Assistive Computer Vision and Robotics},
  pages={371--386},
  year={2020},
  organization={Springer}
}

For questions or comments, please contact Sinan Kalkan at "skalkan [@] ceng.metu.edu.tr" or visit http://kovan.ceng.metu.edu.tr/~sinan.