This repository contains examples and related resources that show how to preprocess data, train models, debug your training script with breakpoints, and serve models on your local machine, using Amazon SageMaker local mode for processing jobs, training, and serving.

The local mode in the Amazon SageMaker Python SDK can emulate CPU (single and multi-instance) and GPU (single instance) SageMaker training jobs by changing a single argument in the TensorFlow, PyTorch or MXNet estimators. To do this, it uses Docker Compose and NVIDIA Docker. It also pulls the Amazon SageMaker TensorFlow, PyTorch or MXNet containers from Amazon ECR, so you need to be able to access Amazon ECR from your local environment.
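In the SageMaker Python SDK, that single argument is the estimator's `instance_type`: `"local"` runs the container on your machine's CPU and `"local_gpu"` runs it on the GPU. The helper below is a minimal, hypothetical sketch (`local_mode_settings` is not part of the SDK) that encodes the limits described above: one or more instances on CPU, a single instance on GPU.

```python
def local_mode_settings(instance_count: int = 1, use_gpu: bool = False) -> dict:
    """Return estimator keyword arguments that select SageMaker local mode.

    Hypothetical helper for illustration; not part of the SageMaker Python SDK.
    """
    if instance_count < 1:
        raise ValueError("instance_count must be at least 1")
    if use_gpu and instance_count != 1:
        # Local mode emulates GPU training on a single instance only.
        raise ValueError("local GPU mode supports a single instance only")
    return {
        # "local" / "local_gpu" take the place of a real type such as "ml.m5.xlarge".
        "instance_type": "local_gpu" if use_gpu else "local",
        "instance_count": instance_count,
    }


if __name__ == "__main__":
    print(local_mode_settings())                  # CPU, one container
    print(local_mode_settings(instance_count=2))  # CPU, two containers
    print(local_mode_settings(use_gpu=True))      # GPU, one container
```

With the SDK installed, these settings would be unpacked into an estimator, e.g. `TensorFlow(entry_point="train.py", role=role, framework_version=..., py_version=..., **local_mode_settings())` with your own values; moving to a managed training job means passing a real `ml.*` instance type instead.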

For full details on how this works:

- Read the Machine Learning Blog post at: https://aws.amazon.com/blogs/machine-learning/use-the-amazon-sagemaker-local-mode-to-train-on-your-notebook-instance/

The examples in this repository work in any IDE on your local machine.

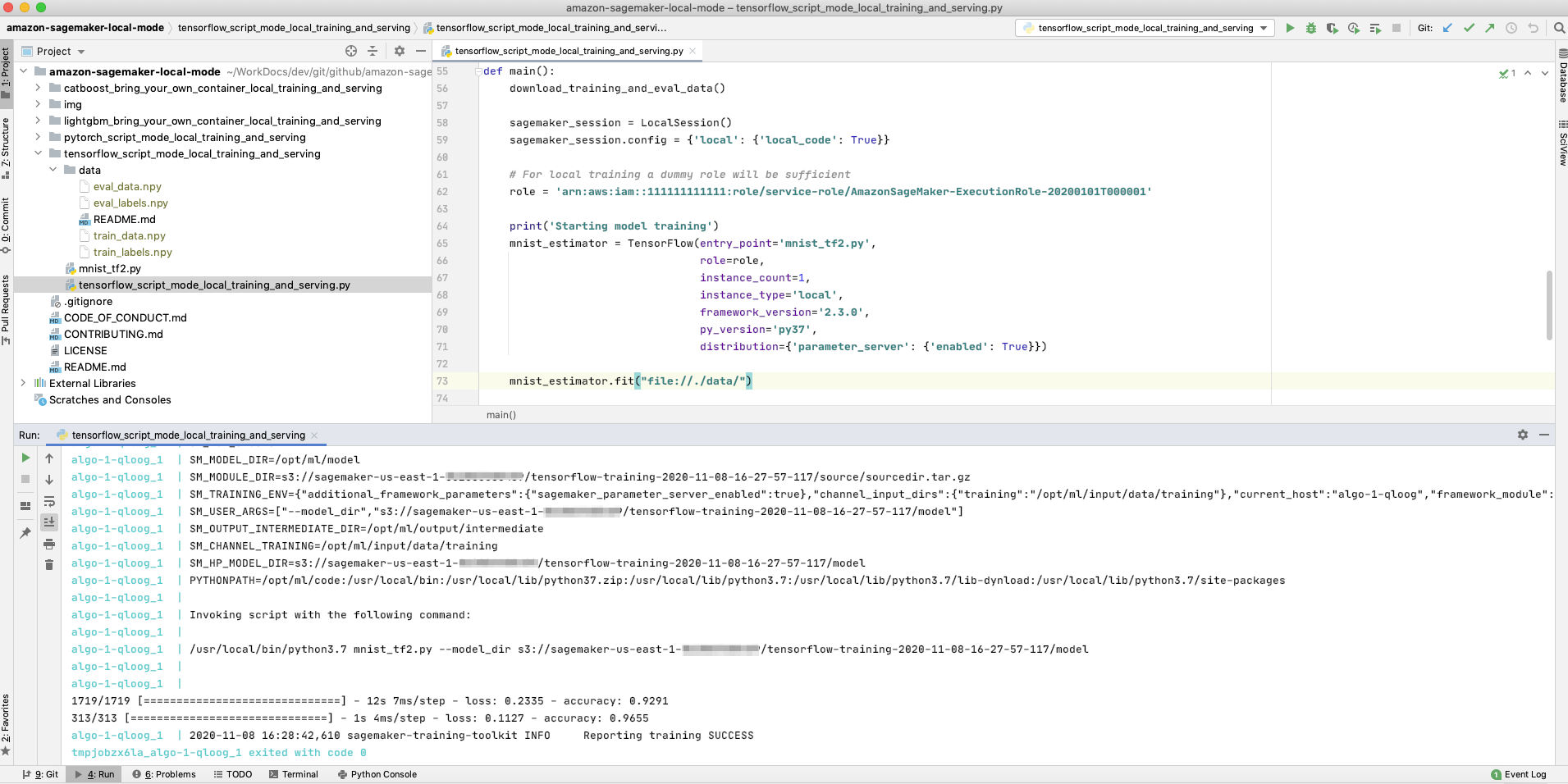

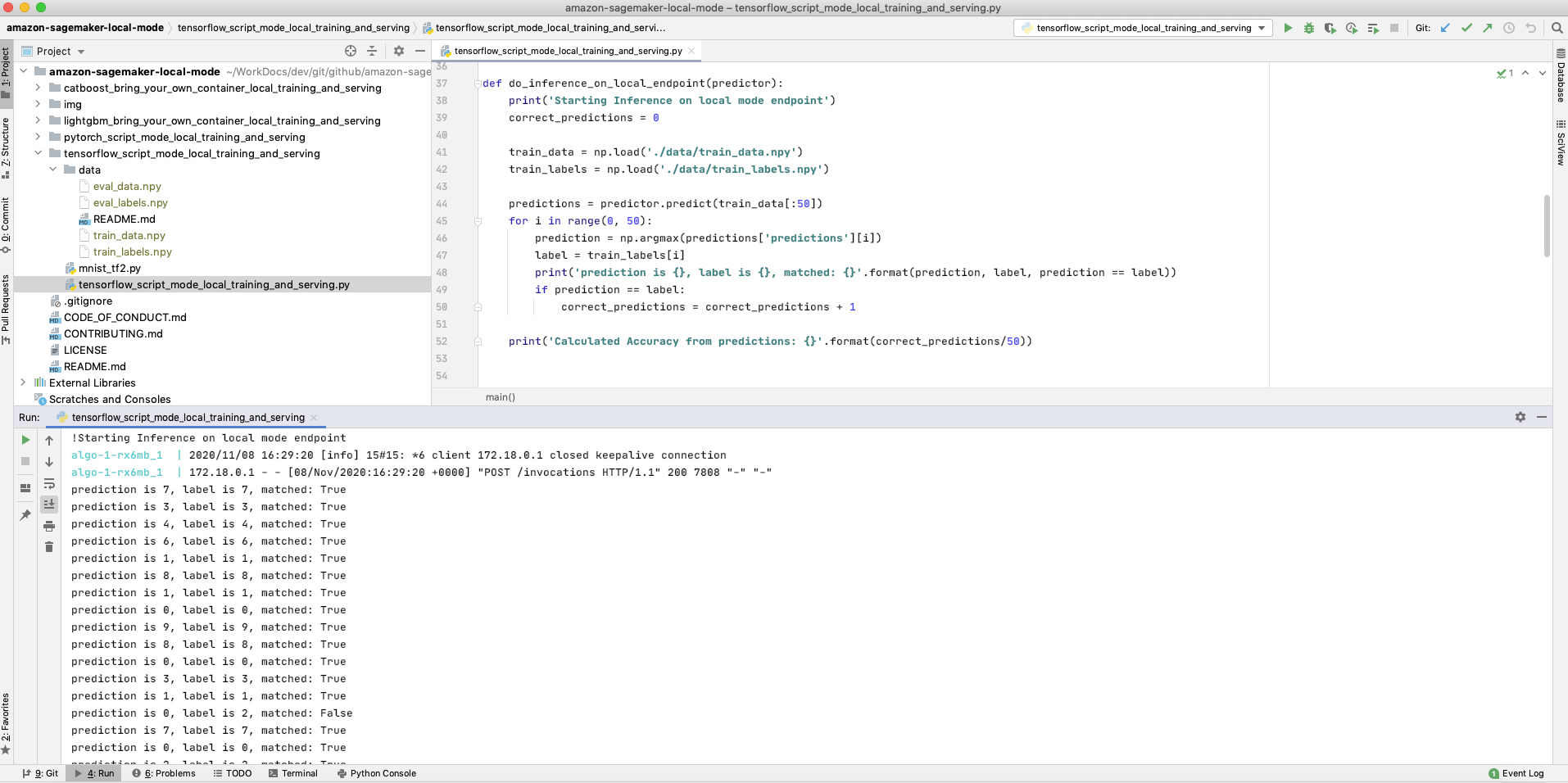

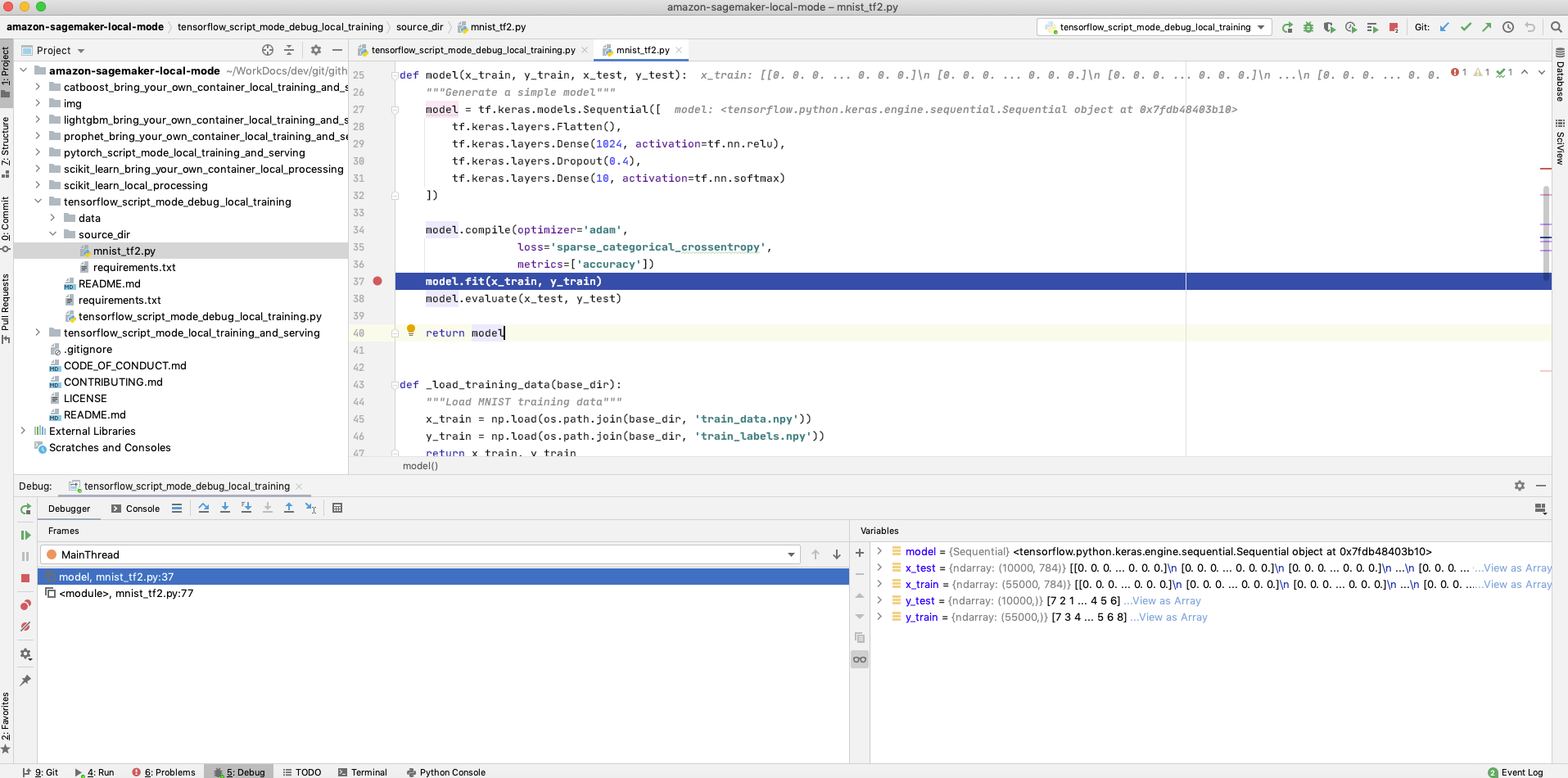

Here you can see a TensorFlow example running in PyCharm. The data for training and serving is also located on your local machine's file system.
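Because local mode runs the containers on your own machine, input channels can point at local directories through `file://` URIs instead of S3. A minimal sketch of building such a URI; `local_data_uri` and the `./data/train` path are hypothetical, and POSIX paths are assumed.

```python
from pathlib import Path


def local_data_uri(directory: str) -> str:
    """Turn a local directory into a file:// URI for a local-mode input channel.

    Hypothetical helper for illustration; not part of the SageMaker Python SDK.
    """
    path = Path(directory).expanduser().resolve()
    if not path.is_dir():
        raise FileNotFoundError(f"no such data directory: {path}")
    # An absolute POSIX path already starts with "/", which yields "file:///...".
    return "file://" + str(path)


if __name__ == "__main__":
    print(local_data_uri("."))
```

With the SageMaker Python SDK, the result would typically be passed as a channel, for example `estimator.fit({"train": local_data_uri("./data/train")})` on an estimator running in local mode. Setting `{"local": {"local_code": True}}` as the config of a `sagemaker.local.LocalSession` additionally keeps your source code local instead of uploading it to S3.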

The repository contains the following resources:

scikit-learn resources:

- scikit-learn Script Mode Training and Serving: This example shows how to train and serve your model with scikit-learn and SageMaker script mode, on your local machine using SageMaker local mode.

- CatBoost with scikit-learn Script Mode Training and Serving: This example shows how to train and serve a CatBoost model with scikit-learn and SageMaker script mode, on your local machine using SageMaker local mode.
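In script mode, SageMaker runs your own training script inside the prebuilt framework container, passes hyperparameters as command-line arguments, and exposes paths through `SM_*` environment variables such as `SM_MODEL_DIR` and `SM_CHANNEL_TRAIN`. A minimal sketch of the argument handling a scikit-learn training script might start with; the `--n-estimators` hyperparameter is hypothetical, and the fallback paths are the container's conventional `/opt/ml` locations.

```python
import argparse
import os


def parse_args(argv=None) -> argparse.Namespace:
    """Parse script-mode hyperparameters and the paths SageMaker provides."""
    parser = argparse.ArgumentParser()
    # Hypothetical hyperparameter; SageMaker forwards each one as "--name value".
    parser.add_argument("--n-estimators", type=int, default=100)
    # Paths default to environment variables set inside the training container.
    parser.add_argument(
        "--model-dir", default=os.environ.get("SM_MODEL_DIR", "/opt/ml/model")
    )
    parser.add_argument(
        "--train",
        default=os.environ.get("SM_CHANNEL_TRAIN", "/opt/ml/input/data/train"),
    )
    return parser.parse_args(argv)


if __name__ == "__main__":
    args = parse_args()
    print(args.n_estimators, args.model_dir, args.train)
```

For serving, the scikit-learn container loads the saved model through a `model_fn(model_dir)` function defined in the same script.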

XGBoost resources:

- XGBoost Script Mode Training and Serving: This example shows how to train and serve your model with XGBoost and SageMaker script mode, on your local machine using SageMaker local mode.
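In script mode, the `hyperparameters` dictionary passed to the estimator reaches your training script as command-line arguments. The function below is a rough, hypothetical approximation of that forwarding (sorted keys, `--name value` pairs); the exact encoding of non-string values in the SageMaker training toolkit is an assumption here, and the XGBoost hyperparameter names are only examples.

```python
import json


def to_cli_args(hyperparameters: dict) -> list:
    """Approximate how script mode forwards a hyperparameters dict to the script.

    Hypothetical sketch; not the SageMaker training toolkit's implementation.
    """
    args = []
    for key in sorted(hyperparameters):
        value = hyperparameters[key]
        # Strings pass through unchanged; other values are JSON-encoded (5 -> "5").
        text = value if isinstance(value, str) else json.dumps(value)
        args.extend([f"--{key}", text])
    return args


if __name__ == "__main__":
    print(to_cli_args({"max_depth": 5, "eta": 0.2, "objective": "reg:squarederror"}))
```

On the receiving side, the XGBoost training script would declare matching options, for example `parser.add_argument("--max_depth", type=int)`.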

TensorFlow resources:

- TensorFlow Script Mode Training and Serving: This example shows how to train and serve your model with TensorFlow and SageMaker script mode, on your local machine using SageMaker local mode.

- TensorFlow Script Mode Debug Training Script: This example shows how to debug your training script running inside a prebuilt SageMaker Docker image for TensorFlow, on your local machine using SageMaker local mode.

- TensorFlow Script Mode Deploy a Trained Model and inference on file from S3: This example shows how to deploy a trained model to a SageMaker endpoint on your local machine using SageMaker local mode, and run inference on a file in S3 instead of sending an HTTP payload to the SageMaker endpoint.

- TensorFlow Script Mode Training and Batch Transform: This example shows how to train your model and run a Batch Transform job with TensorFlow and SageMaker script mode, on your local machine using SageMaker local mode.
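The SageMaker TensorFlow serving container runs TensorFlow Serving, whose REST predict API accepts a JSON body of the form `{"instances": [...]}`. A minimal sketch that turns numeric CSV rows, such as the records a Batch Transform job reads, into that request body; the purely numeric CSV layout and the function name are assumptions.

```python
import csv
import io
import json


def csv_to_tfs_request(csv_text: str) -> str:
    """Convert numeric CSV rows into a TensorFlow Serving "instances" request body."""
    reader = csv.reader(io.StringIO(csv_text))
    # Each non-empty row becomes one instance, represented as a list of floats.
    instances = [[float(cell) for cell in row] for row in reader if row]
    return json.dumps({"instances": instances})


if __name__ == "__main__":
    print(csv_to_tfs_request("1.0,2.0\n3.0,4.0\n"))
```

Against a local endpoint, this body would be sent with the content type `application/json`.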

PyTorch resources:

- PyTorch Script Mode Training and Serving: This example shows how to train and serve your model with PyTorch and SageMaker script mode, on your local machine using SageMaker local mode.

- PyTorch Script Mode Deploy a Trained Model: This example shows how to deploy a trained model to a SageMaker endpoint on your local machine using SageMaker local mode, and serve your model from that endpoint.

- Deploy a pre-trained PyTorch HeBERT model from Hugging Face on Amazon SageMaker Endpoint: This example shows how to deploy a pre-trained PyTorch HeBERT model from Hugging Face to a SageMaker endpoint on your local machine using SageMaker local mode.
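The SageMaker PyTorch serving container lets an inference script override `model_fn`, `input_fn`, `predict_fn` and `output_fn`. A sketch of the two serialization hooks for JSON, kept free of PyTorch so it runs anywhere; in a real script `input_fn` would typically return a tensor, and the `{"inputs": ...}` / `{"outputs": ...}` shapes are hypothetical.

```python
import json


def input_fn(request_body, request_content_type):
    """Deserialize a JSON request into the model input (a plain list in this sketch)."""
    if request_content_type != "application/json":
        raise ValueError(f"unsupported content type: {request_content_type}")
    if isinstance(request_body, (bytes, bytearray)):
        request_body = request_body.decode("utf-8")
    payload = json.loads(request_body)
    # Hypothetical request shape: {"inputs": [...]}.
    return payload["inputs"]


def output_fn(prediction, accept):
    """Serialize the prediction for the HTTP response."""
    if accept != "application/json":
        raise ValueError(f"unsupported accept type: {accept}")
    # Hypothetical response shape: {"outputs": [...]}.
    return json.dumps({"outputs": prediction})
```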

Bring Your Own Container resources:

- Bring Your Own Container TensorFlow Algorithm - Train/Serve: This example provides a detailed walkthrough of how to package a TensorFlow 2.5.0 algorithm for training and production-ready hosting. We have also included a Python file for local training and serving that can run on your local computer, for faster development.

- Bring Your Own Container TensorFlow Algorithm - Train/Batch Transform: This example provides a detailed walkthrough of how to package a TensorFlow 2.5.0 algorithm for training, and then run a Batch Transform job on a CSV file. We have also included a Python file for local training and serving that can run on your local computer, for faster development.

- Bring Your Own Container CatBoost Algorithm: This example provides a detailed walkthrough of how to package a CatBoost algorithm for training and production-ready hosting. We have also included a Python file for local training and serving that can run on your local computer, for faster development.

- Bring Your Own Container LightGBM Algorithm: This example provides a detailed walkthrough of how to package a LightGBM algorithm for training and production-ready hosting. We have also included a Python file for local training and serving that can run on your local computer, for faster development.

- Bring Your Own Container Prophet Algorithm: This example provides a detailed walkthrough of how to package a Prophet algorithm for training and production-ready hosting. We have also included a Python file for local training and serving that can run on your local computer, for faster development.

- Bring Your Own Container HDBSCAN Algorithm: This example provides a detailed walkthrough of how to package an HDBSCAN algorithm for training. We have also included a Python file for local training that can run on your local computer, for faster development.
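A bring-your-own container has to follow the SageMaker container contract itself: at training time, channels are mounted under `/opt/ml/input/data/<channel>`, hyperparameters arrive in `/opt/ml/input/config/hyperparameters.json` with every value encoded as a string, and the model must be written to `/opt/ml/model`; at serving time, the container answers `GET /ping` and `POST /invocations` on port 8080. A minimal sketch of the hyperparameter-loading step with type conversion; the parameter names and defaults are hypothetical.

```python
import json
from pathlib import Path

HYPERPARAMETERS_PATH = Path("/opt/ml/input/config/hyperparameters.json")

# Hypothetical hyperparameters, each mapped to (type, default value).
DEFAULTS = {"iterations": (int, 100), "learning_rate": (float, 0.1)}


def load_hyperparameters(path: Path = HYPERPARAMETERS_PATH) -> dict:
    """Read SageMaker's string-valued hyperparameters and cast them to typed values."""
    raw = json.loads(path.read_text()) if path.exists() else {}
    params = {}
    for name, (cast, default) in DEFAULTS.items():
        # SageMaker stores every value as a string, e.g. {"iterations": "200"}.
        params[name] = cast(raw[name]) if name in raw else default
    return params


if __name__ == "__main__":
    print(load_hyperparameters())
```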

Built-in scikit-learn Processing Job:

- Built-in scikit-learn Processing Job: This example provides a detailed walkthrough of how to use the built-in scikit-learn Docker image for processing jobs. We have also included a Python file for processing jobs that can run on your local computer, for faster development.
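Inside a processing job, inputs are copied into the container at the local paths you choose with `ProcessingInput(destination=...)`, and anything written under a `ProcessingOutput(source=...)` path is collected when the job ends; `/opt/ml/processing/...` is the conventional prefix. A minimal, hypothetical preprocessing step using only the standard library (a CSV with a header row is assumed) that splits one input file into train and test outputs:

```python
import csv
import random
from pathlib import Path


def split_csv(input_file: Path, output_dir: Path, test_ratio: float = 0.2, seed: int = 42) -> tuple:
    """Split a CSV with a header row into train/train.csv and test/test.csv."""
    with input_file.open(newline="") as f:
        rows = list(csv.reader(f))
    header, records = rows[0], rows[1:]
    # Seeded shuffle so repeated local runs produce the same split.
    random.Random(seed).shuffle(records)
    n_test = int(len(records) * test_ratio)
    splits = {"test": records[:n_test], "train": records[n_test:]}
    for name, subset in splits.items():
        target = output_dir / name
        target.mkdir(parents=True, exist_ok=True)
        with (target / f"{name}.csv").open("w", newline="") as f:
            writer = csv.writer(f)
            writer.writerow(header)
            writer.writerows(subset)
    return len(splits["train"]), len(splits["test"])
```

In a job, the script would be called with paths matching your processor configuration, for example `split_csv(Path("/opt/ml/processing/input/data.csv"), Path("/opt/ml/processing/output"))`, where both paths are illustrative.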

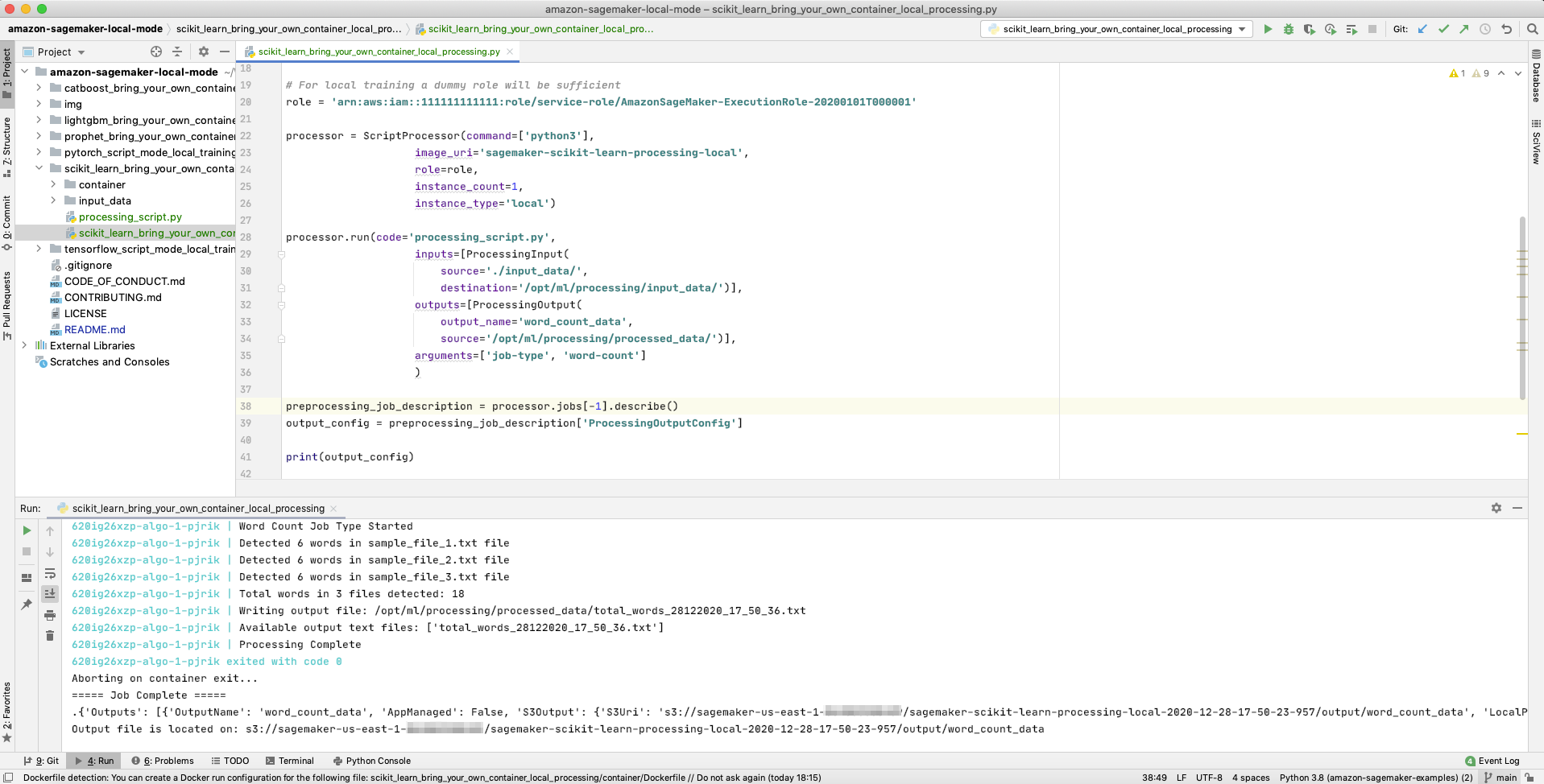

Bring Your Own Container scikit-learn Processing Job:

- Bring Your Own Container scikit-learn Processing Job: This example provides a detailed walkthrough of how to package a scikit-learn Docker image for processing jobs. We have also included a Python file for processing jobs that can run on your local computer, for faster development.

Note: These examples were tested on macOS and Linux.

- Create an AWS account if you do not already have one, and log in.

- Install Docker Desktop for Mac.

- Clone the repo onto your local development machine using `git clone`.

- Open the project in any IDE of your choice to run the example Python files.

- Follow the instructions in each example Python file on which Python packages to install.
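Local mode shells out to Docker and Docker Compose, so a quick way to confirm the setup steps worked is to look for those tools on your `PATH`. A hedged sketch using only the standard library; the function names are hypothetical, and whether your SageMaker Python SDK version calls the `docker compose` plugin or the standalone `docker-compose` binary is worth checking in its documentation, so both are accepted here.

```python
import shutil
import subprocess


def compose_available() -> bool:
    """Return True if the `docker compose` plugin or `docker-compose` is usable."""
    if shutil.which("docker") is not None:
        try:
            result = subprocess.run(
                ["docker", "compose", "version"],
                capture_output=True,
                check=False,
                timeout=30,
            )
            if result.returncode == 0:
                return True
        except (OSError, subprocess.SubprocessError):
            pass  # Fall back to the standalone binary below.
    return shutil.which("docker-compose") is not None


def missing_prerequisites() -> list:
    """List the command-line tools needed by the setup steps that are not found."""
    missing = [tool for tool in ("git", "docker") if shutil.which(tool) is None]
    if not compose_available():
        missing.append("docker compose")
    return missing


if __name__ == "__main__":
    problems = missing_prerequisites()
    print("missing: " + ", ".join(problems) if problems else "all prerequisites found")
```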

Please contact @e_sela or raise an issue on this repo.

This library is licensed under the MIT-0 License. See the LICENSE file.