This repository contains the corresponding training code for the project.

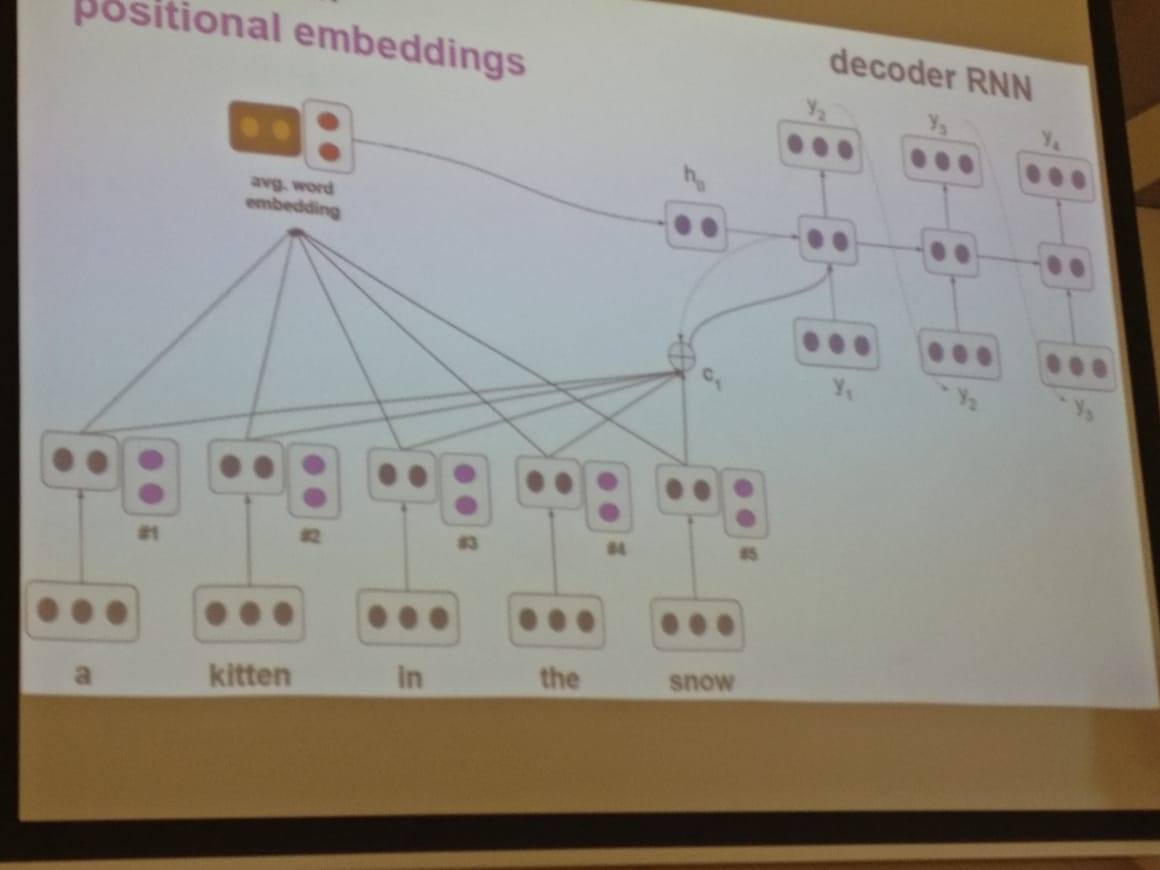

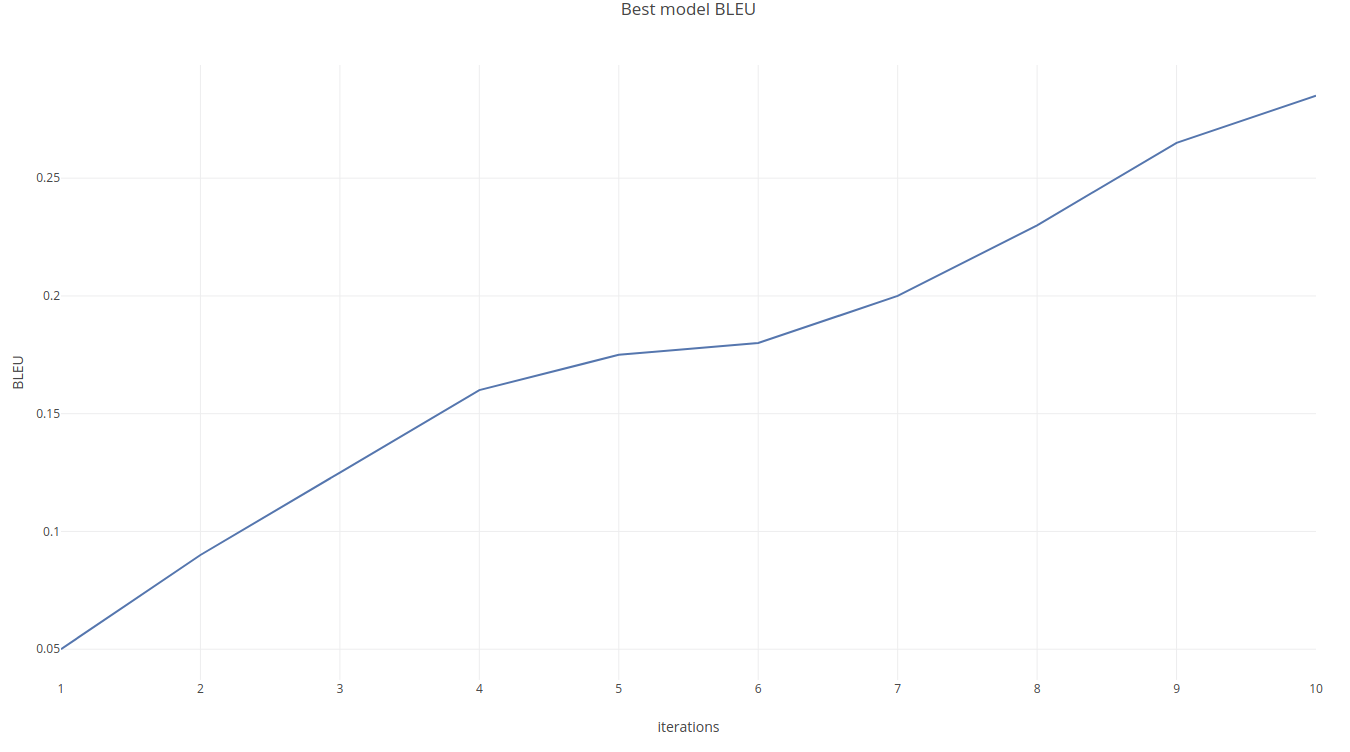

Neural Machine Translation (NMT) is an approach to machine translation that uses a large artificial neural network to predict the likelihood of a sequence of words, typically modeling entire sentences in a single integrated model. In this project we tackle NMT with a model inspired by the sequence-to-sequence approach. However, instead of encoding the source sentence with a Recurrent Neural Network, we make use of positional embeddings. We report our findings and the conclusions we draw from them.
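To illustrate how positional embeddings can replace a recurrent encoder, here is a minimal sketch in plain Python. It assumes that each token embedding is summed with a learned embedding of its position, which is one common formulation and not necessarily the one used in `main.py`. The function names, table sizes and random initial values are all hypothetical.

```python
import random


def make_table(rows, dim, rng):
    """Build a rows x dim table of small random values.

    Stand-in for an embedding matrix that would normally be learned.
    """
    return [[rng.uniform(-0.1, 0.1) for _ in range(dim)] for _ in range(rows)]


def encode(token_ids, token_table, position_table):
    """Encode a sentence by summing token and position embeddings.

    No recurrence is involved: word order is carried only by the
    position embedding added to each token vector.
    """
    if len(token_ids) > len(position_table):
        raise ValueError("sentence is longer than the position table")
    encoded = []
    for position, token_id in enumerate(token_ids):
        token_vec = token_table[token_id]
        position_vec = position_table[position]
        encoded.append([t + p for t, p in zip(token_vec, position_vec)])
    return encoded


# Hypothetical sizes, chosen only for the example.
VOCAB_SIZE, MAX_LEN, DIM = 10, 8, 4

rng = random.Random(0)
token_table = make_table(VOCAB_SIZE, DIM, rng)
position_table = make_table(MAX_LEN, DIM, rng)

# Token 3 appears at positions 0 and 2.
sentence = [3, 7, 3]
vectors = encode(sentence, token_table, position_table)
```

Because the position vector differs at each index, the two occurrences of token 3 receive different encodings, which is how the model can tell word order apart without a recurrent network.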

- Check the available settings.

- Train the networks using the provided file:

`python main.py`