This repository accompanies the ICCV 2025 paper "DAViD: Data-efficient and Accurate Vision Models from Synthetic Data" and contains instructions for downloading and using the SynthHuman dataset and the models described in the paper.

The SynthHuman dataset contains approximately 300,000 images of synthetic humans with ground-truth annotations for foreground alpha masks, absolute depth, surface normals and camera intrinsics. There are approximately 100,000 images for each of three camera scenarios: face, upper-body and full-body. The data is generated using the latest version of our synthetic data generation pipeline, which has been used to create a number of datasets: Face Synthetics, SimpleEgo and SynthMoCap. Ground-truth annotations are per-pixel with perfect accuracy due to the graphics-based rendering pipeline.

The dataset contains 298008 samples. The first 98040 samples feature the face, the next 99976 samples feature the full body and the final 99992 samples feature the upper body. Each sample is made up of:

- rgb_0000000.png - RGB image

- alpha_0000000.png - foreground alpha mask

- depth_0000000.exr - absolute z-depth image in cm

- normal_0000000.exr - surface normal image (XYZ)

- cam_0000000.txt - camera intrinsics (see below)
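The naming scheme and the scenario ordering above can be sketched in a few lines of Python. This is a minimal illustration, not part of the released scripts: the helper names `sample_paths` and `scenario_for_index` are hypothetical, and the sketch assumes that all files of a sample sit in one flat directory, that indices are zero-based, contiguous and zero-padded to seven digits as in the example file names, and that the scenarios appear in the order listed above.

```python
from pathlib import Path

# Scenario sizes in dataset order, taken from the counts stated above.
# Assumption: sample indices are 0-based and contiguous across scenarios.
SCENARIO_COUNTS = [("face", 98040), ("full_body", 99976), ("upper_body", 99992)]


def sample_paths(root, idx):
    """Return the five expected file paths for sample `idx`.

    Assumption: all files live directly in `root` and the index is
    zero-padded to 7 digits, as in `rgb_0000000.png`.
    """
    root = Path(root)
    stem = f"{idx:07d}"
    return {
        "rgb": root / f"rgb_{stem}.png",
        "alpha": root / f"alpha_{stem}.png",
        "depth": root / f"depth_{stem}.exr",
        "normal": root / f"normal_{stem}.exr",
        "cam": root / f"cam_{stem}.txt",
    }


def scenario_for_index(idx):
    """Map a sample index to its camera scenario name."""
    start = 0
    for name, count in SCENARIO_COUNTS:
        if start <= idx < start + count:
            return name
        start += count
    raise IndexError(f"sample index {idx} is outside the dataset")
```

For example, `sample_paths("SynthHuman", 42)["depth"]` points at `SynthHuman/depth_0000042.exr` under these assumptions. Check the layout of a downloaded chunk before relying on it.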

The camera text file includes the standard intrinsic matrix:

f_x 0.0 c_x

0.0 f_y c_y

0.0 0.0 1.0

Here f_x and f_y are the focal lengths and (c_x, c_y) is the principal point, all in pixel units.

This can be easily loaded with np.loadtxt(path_to_camera_txt).
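As a rough sketch of how the intrinsics combine with the z-depth image, the snippet below loads the matrix with `np.loadtxt` and back-projects a depth map into camera-space 3D points using the standard pinhole model. The function names are hypothetical, and the sketch assumes integer pixel coordinates without a half-pixel offset and a depth array that has already been loaded as a 2D float array in cm. The exact conventions used by the dataset should be checked against visualize_data.py.

```python
import numpy as np


def load_intrinsics(path_to_camera_txt):
    """Load the 3x3 intrinsic matrix from a cam_*.txt file."""
    K = np.loadtxt(path_to_camera_txt)
    if K.shape != (3, 3):
        raise ValueError(f"expected a 3x3 matrix, got shape {K.shape}")
    return K


def backproject(depth_cm, K):
    """Convert an (H, W) z-depth map in cm into (H, W, 3) camera-space points in cm.

    Assumption: pixel (u, v) is taken at integer column/row coordinates,
    with no half-pixel offset.
    """
    h, w = depth_cm.shape
    fx, fy = K[0, 0], K[1, 1]
    cx, cy = K[0, 2], K[1, 2]
    # u holds column indices, v holds row indices, both of shape (H, W).
    u, v = np.meshgrid(np.arange(w), np.arange(h))
    x = (u - cx) * depth_cm / fx
    y = (v - cy) * depth_cm / fy
    return np.stack([x, y, depth_cm], axis=-1)
```

Dividing the result by 100 gives points in metres.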

The dataset is split into 60 zip files to make downloading easier.

Each zip file contains 5000 samples and has a maximum size of 8.75GB.

The total download size is approximately 330GB.
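The figures above can be sanity-checked before starting a long download. The sketch below is only an illustration with hypothetical helper names: it confirms that 298008 samples at 5000 per zip need 60 chunks and checks free disk space against a required size. The extracted size is not stated here, so the 330GB download size is only a lower bound on the space actually needed.

```python
import math
import shutil


def chunks_needed(num_samples, samples_per_chunk):
    """Number of zip files needed when each holds at most `samples_per_chunk` samples."""
    return math.ceil(num_samples / samples_per_chunk)


def has_free_space(directory, required_gb):
    """Return True if `directory` has at least `required_gb` (decimal GB) free."""
    free_bytes = shutil.disk_usage(directory).free
    return free_bytes >= required_gb * 10**9


if __name__ == "__main__":
    print(chunks_needed(298008, 5000))
    # 330 GB covers the zip files only; extraction needs additional room.
    print(has_free_space(".", 330))
```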

To download the dataset, run download_data.py TARGET_DIRECTORY [--single-sample] [--single-chunk], which downloads the zip files and extracts them into the target folder.

You can optionally download a single sample or a single chunk to quickly take a look at the data.

You can visualize samples from the dataset using visualize_data.py SYNTHHUMAN_DIRECTORY [--start-idx N].

This script shows examples of how to load the image files correctly and display the data.

The SynthHuman dataset is licensed under the CDLA-2.0. The download and visualization scripts are licensed under the MIT License.

We release models for the following tasks:

| Task | Version | ONNX Model | Model Card |
|---|---|---|---|
| Soft Foreground Segmentation | Base | Download | Model Card |
| | Large | Download | |
| Relative Depth Estimation | Base | Download | Model Card |
| | Large | Download | |
| Surface Normal Estimation | Base | Download | Model Card |
| | Large | Download | |
| Multi-Task Model | Large | Download | Model Card |

This demo supports running:

- Relative depth estimation

- Soft foreground segmentation

- Surface normal estimation

To install the requirements for running the demo:

    pip install -r requirement.txt

You can run either:

- A multi-task model that performs all tasks simultaneously

      python demo.py \
        --image path/to/input.jpg \
        --multitask-model models/multitask.onnx

- Or individual models

      python demo.py \
        --image path/to/input.jpg \
        --depth-model models/depth.onnx \
        --foreground-model models/foreground.onnx \
        --normal-model models/normal.onnx

🧠 Notes:

- The script expects ONNX models. Ensure the model paths are correct.

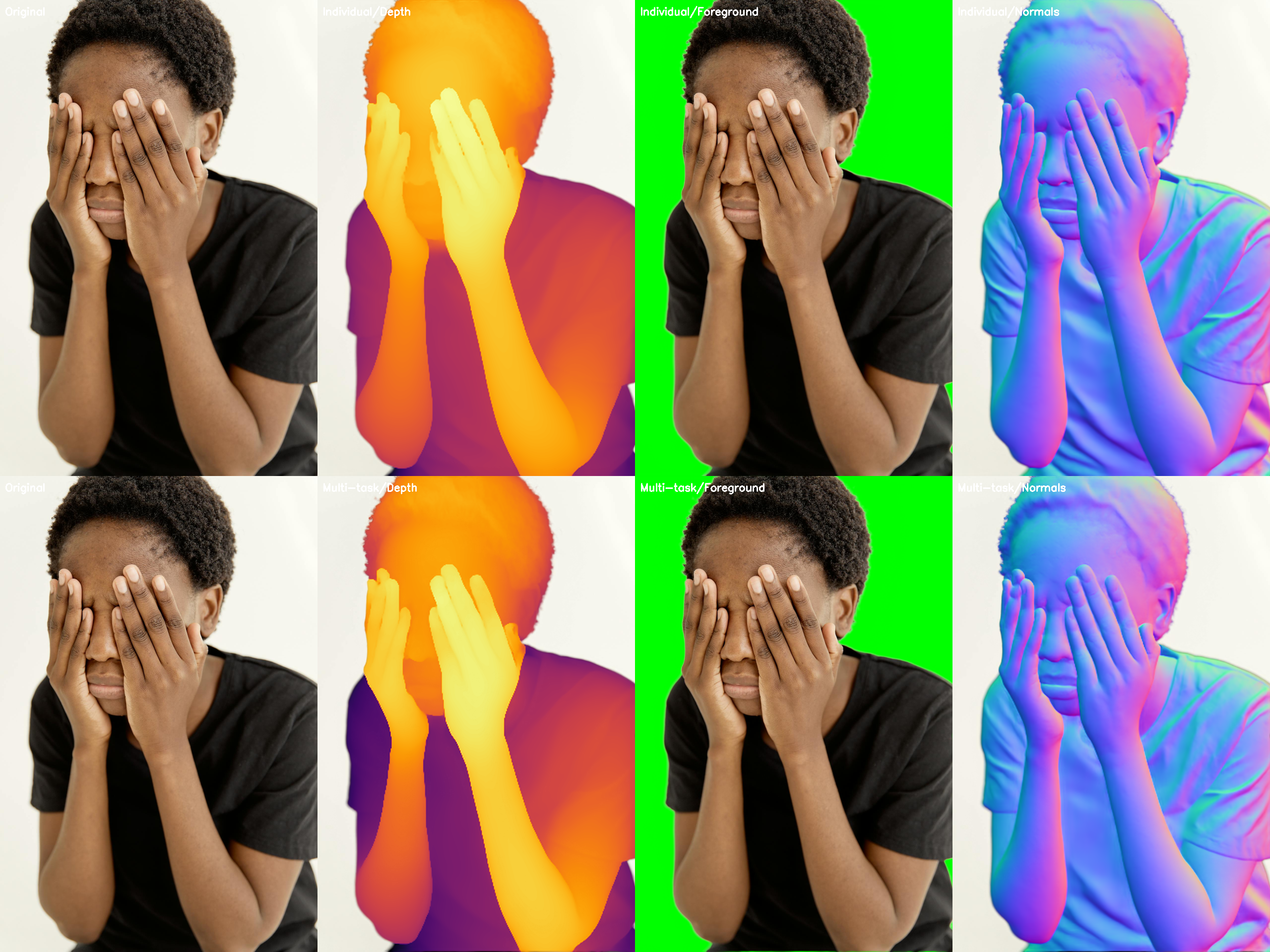

- If both multi-task and individual models are provided, results from both will be shown and compared.

- Foreground masks are used for improved visualization of depth and normals.

Here is an example output image after running the demo:
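To illustrate the note that foreground masks are used to improve the visualization of depth and normals, the sketch below shows one possible way to do this with NumPy. It is not the code used by demo.py: the function names are hypothetical, and it assumes an alpha mask in [0, 1], normal components in [-1, 1], and an arbitrary choice of rendering nearer pixels brighter.

```python
import numpy as np


def depth_to_gray(depth, alpha, threshold=0.5):
    """Normalize foreground depth to [0, 1] (nearest = 1) and zero out the background.

    Assumption: `alpha` is in [0, 1]; pixels above `threshold` count as foreground.
    """
    mask = alpha > threshold
    out = np.zeros(depth.shape, dtype=np.float32)
    if mask.any():
        fg = depth[mask]
        lo, hi = fg.min(), fg.max()
        scale = hi - lo if hi > lo else 1.0  # avoid division by zero on flat depth
        out[mask] = 1.0 - (fg - lo) / scale
    return out


def normals_to_rgb(normals, alpha):
    """Map (H, W, 3) normals in [-1, 1] to RGB in [0, 1], faded to black by the soft mask."""
    rgb = (normals + 1.0) / 2.0
    return rgb * alpha[..., None]
```

Restricting the depth normalization to foreground pixels keeps background values from compressing the range available to the person in the image.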

DAViD models and runtime code are licensed under the MIT License.

If you use the SynthHuman Dataset or any of the DAViD models in your research, please cite the following:

    @misc{saleh2025david,
      title={{DAViD}: Data-efficient and Accurate Vision Models from Synthetic Data},
      author={Fatemeh Saleh and Sadegh Aliakbarian and Charlie Hewitt and Lohit Petikam and Xiao-Xian and Antonio Criminisi and Thomas J. Cashman and Tadas Baltrušaitis},
      year={2025},
      eprint={2507.15365},
      archivePrefix={arXiv},
      primaryClass={cs.CV},
      url={https://arxiv.org/abs/2507.15365},
    }