A simple walk-through guide for how to integrate Kubernetes with Dex + LDAP.

In this experiment, we're going to use these major components:

- Kubernetes v1.21.x, powered by

kindv0.11.1; - Dex v2.30.x;

- OpenLDAP with osixia/openldap:1.5.x

A Medium article was posted too, here: https://brightzheng100.medium.com/kubernetes-dex-ldap-integration-f305292a16b9

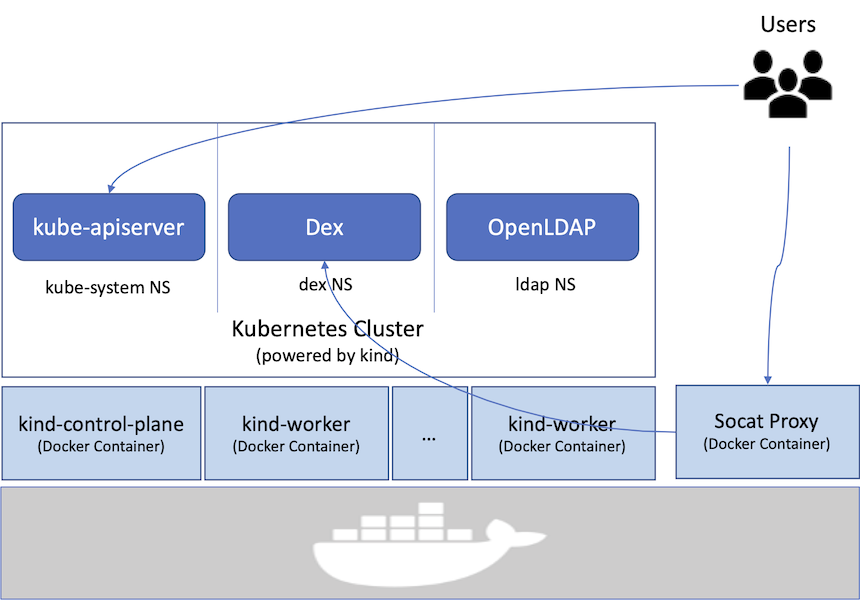

The overall idea can be illustrated as below:

git clone https://github.com/brightzheng100/kubernetes-dex-ldap-integration.git

cd kubernetes-dex-ldap-integrationThe TD;DR guide uses the script here: setup.sh, which will:

- check the required tools -- there are some of them:

docker,git,cfssl,cfssljson,kind,kubectl; - generate the necessary TLS certs/keys for both Kubernetes and Dex;

- create

kind-powered Kubernetes with OIDC configured with Dex; - deploy OpenLDAP in namespace

ldapas the LDAP Server with some dummy entities; - deploy Dex in namespace

dex; - create a proxy so that we can access Dex from our laptop (e.g. my MBP)

./setup.shNote: the populated dummy LDAP entities, all with password

secret, include:

admin1@example.orgadmin2@example.orgdeveloper1@example.orgdeveloper2@example.org

It's common to set up the kube config, e.g. ~/.kube/config, for daily use.

For that, we may simply follow these steps:

-

Bind some users, say "admin1@example.org", as the "cluster-admin"

$ kubectl create clusterrolebinding oidc-cluster-admin \ --clusterrole=cluster-admin \ --user="admin1@example.org" -

Use

kubeloginplugin to simplify the integration$ echo "127.0.0.1 dex.dex.svc" | sudo tee -a /etc/hosts $ SVC_PORT="$(kubectl get -n dex svc/dex -o json | jq '.spec.ports[0].nodePort')" $ kubectl config set-credentials oidc \ --exec-api-version=client.authentication.k8s.io/v1beta1 \ --exec-command=kubectl \ --exec-arg=oidc-login \ --exec-arg=get-token \ --exec-arg=--oidc-issuer-url=https://dex.dex.svc:$SVC_PORT \ --exec-arg=--oidc-redirect-url-hostname=dex.dex.svc \ --exec-arg=--oidc-client-id=example-app \ --exec-arg=--oidc-client-secret=ZXhhbXBsZS1hcHAtc2VjcmV0 \ --exec-arg=--oidc-extra-scope=email \ --exec-arg=--certificate-authority=`pwd`/tls-setup/_certs/ca.pem

-

Use the user to access Kubernetes

$ kubectl --user=oidc get nodes

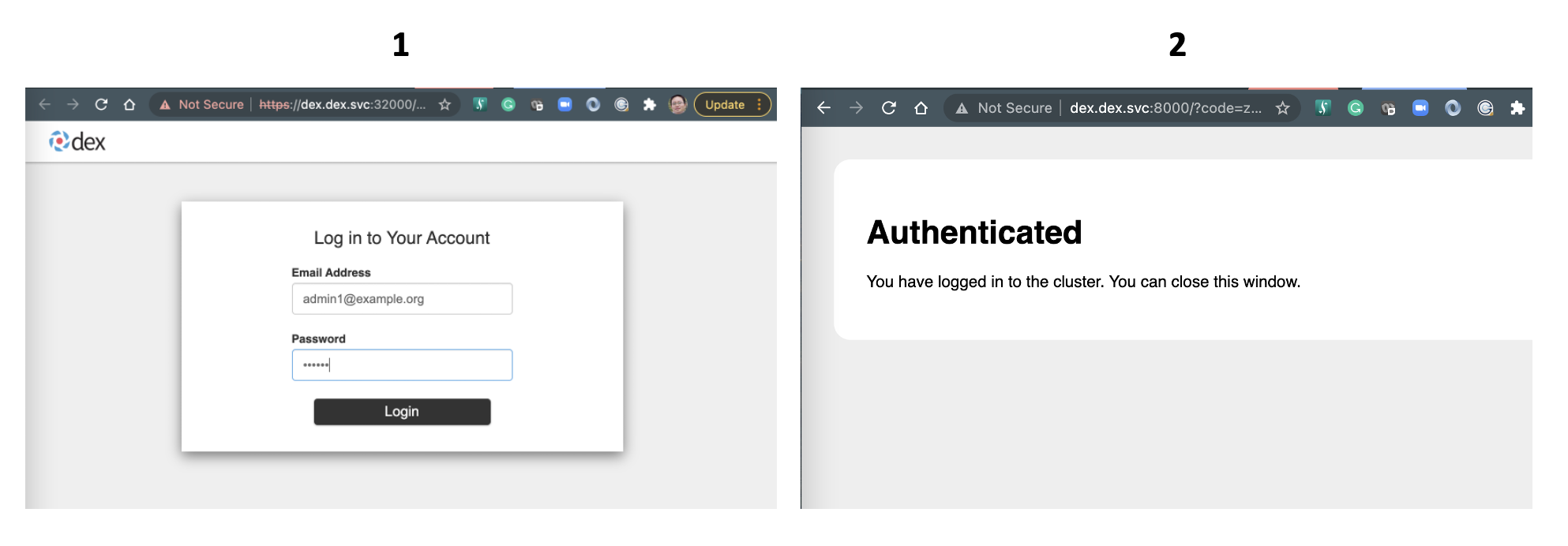

This will prompt us a authentication UI in our default browser, key in the credential of abovementioned LDAP user:

- Email Address:

admin1@example.org - Password:

secret

It will be authenticated by Dax + LDAP, and once the authentication is done we can see the output like:

$ kubectl --user=oidc get nodes NAME STATUS ROLES AGE VERSION dex-ldap-cluster-control-plane Ready control-plane,master 8m55s v1.21.1 dex-ldap-cluster-worker Ready <none> 8m30s v1.21.1Notes:

- as the login will be cached so the subsequent access will be transparent;

- if you want to change the user, you may remove the cache (

rm -rf ~/.kube/cache/oidc-login/*) first and you would be prompted again for what user you want to use;

- Email Address:

-

(optional) Keep multiple users logged in?

So far I haven't found a good way to have multiple users coexist and maintain logged in with

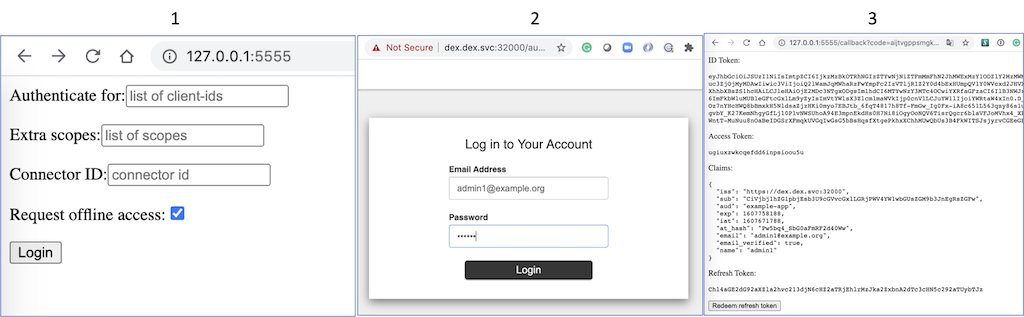

kubelogin. But we can try generate tokens for different users and set the credentials accordingly:cd example-app go run . --issuer https://dex.dex.svc:32000 --issuer-root-ca `pwd`/../tls-setup/_certs/ca.pem

Then open your browser and navigate to http://127.0.0.1:5555, log into it with the users you want, one at a time to generate different tokens, then add different users with the

ID TokenandRefresh Tokeninto your kubeconfig file, e.g~/.kube/config, like this:# Add user admin1@example.org as oidc-admin1 kubectl config set-credentials oidc-admin1 \ --auth-provider=oidc \ --auth-provider-arg=idp-issuer-url=https://dex.dex.svc:32000 \ --auth-provider-arg=client-id=example-app \ --auth-provider-arg=client-secret=ZXhhbXBsZS1hcHAtc2VjcmV0 \ --auth-provider-arg=refresh-token=<THE REFRESH TOKEN FOR admin1@example.org> \ --auth-provider-arg=idp-certificate-authority=`pwd`/tls-setup/_certs/ca.pem \ --auth-provider-arg=id-token=<THE ID TOKEN FOR admin1@example.org> # Add user developer1@example.org as oidc-developer1 kubectl config set-credentials oidc-developer1 \ --auth-provider=oidc \ --auth-provider-arg=idp-issuer-url=https://dex.dex.svc:32000 \ --auth-provider-arg=client-id=example-app \ --auth-provider-arg=client-secret=ZXhhbXBsZS1hcHAtc2VjcmV0 \ --auth-provider-arg=refresh-token=<THE REFRESH TOKEN FOR developer1@example.org> \ --auth-provider-arg=idp-certificate-authority=`pwd`/tls-setup/_certs/ca.pem \ --auth-provider-arg=id-token=<THE ID TOKEN FOR developer1@example.org>

Note:

cfsslandcfssljsonare required to generate certs/keys- You may try using cert-manager, if you want

cd tls-setup

make ca req-dex req-k8sOUTPUT: a folder

_certswill be created and a couple of pairs of certs/keys will be generated.

$ tree _certs

_certs

├── ca-key.pem

├── ca.csr

├── ca.pem

├── dex-key.pem

├── dex.csr

├── dex.pem

├── k8s-key.pem

├── k8s.csr

└── k8s.pem

0 directories, 9 filesNote: Here I'm going to use

kind, you may try any other ways too, likeminikube,k3s/k3d, but the process might have to tune a little bit.

# Make sure we're working from the Git repo's root folder

cd "$( git rev-parse --show-toplevel )"

PROJECT_ROOT="$(pwd)" envsubst < kind/kind.yaml | kind create cluster --name dex-ldap-cluster --config -# Make sure we're working from the Git repo's root folder

cd "$( git rev-parse --show-toplevel )"

kubectl create ns ldap

kubectl create secret generic openldap \

--namespace ldap \

--from-literal=adminpassword=adminpassword

kubectl create configmap ldap \

--namespace ldap \

--from-file=ldap/ldif

kubectl apply --namespace ldap -f ldap/ldap.yaml

# Load ldif data after the OpenLDAP is ready

# Note: by right, they should be loaded automatically but it doesn't work so we load them manually

LDAP_POD=$(kubectl -n ldap get pod -l "app.kubernetes.io/name=openldap" -o jsonpath="{.items[0].metadata.name}")

kubectl -n ldap exec $LDAP_POD -- ldapadd -x -D "cn=admin,dc=example,dc=org" -w adminpassword -H ldap://localhost:389 -f /ldifs/0-ous.ldif

kubectl -n ldap exec $LDAP_POD -- ldapadd -x -D "cn=admin,dc=example,dc=org" -w adminpassword -H ldap://localhost:389 -f /ldifs/1-users.ldif

kubectl -n ldap exec $LDAP_POD -- ldapadd -x -D "cn=admin,dc=example,dc=org" -w adminpassword -H ldap://localhost:389 -f /ldifs/2-groups.ldif

# Check the users loaded

kubectl -n ldap exec $LDAP_POD -- \

ldapsearch -LLL -x -H ldap://localhost:389 -D "cn=admin,dc=example,dc=org" -w adminpassword -b "ou=people,dc=example,dc=org" dnYou should see some users that have been created:

dn: ou=people,dc=example,dc=org

dn: cn=admin1,ou=people,dc=example,dc=org

dn: cn=admin2,ou=people,dc=example,dc=org

dn: cn=developer1,ou=people,dc=example,dc=org

dn: cn=developer2,ou=people,dc=example,dc=org

# Make sure we're working from the Git repo's root folder

cd "$( git rev-parse --show-toplevel )"

kubectl create ns dex

kubectl create secret tls dex-tls \

--namespace dex \

--cert=tls-setup/_certs/dex.pem \

--key=tls-setup/_certs/dex-key.pem

kubectl apply --namespace dex -f dex/dex.yamlAs the Kubernetes is powered by kind, we need to do something extra to access the Dex.

There are some other ways kind#702 but let's try this:

$ SVC_PORT="$(kubectl get -n dex svc/dex -o json | jq '.spec.ports[0].nodePort')"

# Create this proxy container

$ docker run -d --restart always \

--name dex-kind-proxy-$SVC_PORT \

--publish 127.0.0.1:$SVC_PORT:$SVC_PORT \

--link kind-control-plane:target \

--network kind \

alpine/socat -dd \

tcp-listen:$SVC_PORT,fork,reuseaddr tcp-connect:target:$SVC_PORTNow we can access Dex by: https://127.0.0.1:$SVC_PORT/.

For example, issuing an HTTPS request to the discovery endpoint can verify the installation of Dex:

$ curl -k https://127.0.0.1:$SVC_PORT/.well-known/openid-configuration

{

"issuer": "https://dex.dex.svc:32000",

"authorization_endpoint": "https://dex.dex.svc:32000/auth",

"token_endpoint": "https://dex.dex.svc:32000/token",

"jwks_uri": "https://dex.dex.svc:32000/keys",

"response_types_supported": [

"code"

],

"subject_types_supported": [

"public"

],

"id_token_signing_alg_values_supported": [

"RS256"

],

"scopes_supported": [

"openid",

"email",

"groups",

"profile",

"offline_access"

],

"token_endpoint_auth_methods_supported": [

"client_secret_basic"

],

"claims_supported": [

"aud",

"email",

"email_verified",

"exp",

"iat",

"iss",

"locale",

"name",

"sub"

]

}But the issuer has exposed its URL though domain of dex.dex.svc, so we have to edit the /etc/hosts as the easy fix.

$ echo "127.0.0.1 dex.dex.svc" | sudo tee -a /etc/hostsNote:

- this

example-appwas copied from Dex's repo, here.- I've enabled

go modsupport so the life of playing with it is much easier.

# Make sure we're working from the Git repo's root folder

cd "$( git rev-parse --show-toplevel )"

cd example-app

go run . \

--issuer https://dex.dex.svc:$SVC_PORT \

--issuer-root-ca ../tls-setup/_certs/ca.pem \

--debugNow open browser and access: http://127.0.0.1:5555/

- Leave the form as is and click "Login";

- In "Log in to Your Account" page:

- Email Address:

admin1@example.org - Password:

secret

- Email Address:

- A series of tokens will be generated, copy down the

ID Token, something like this:

eyJhbGciOiJSUzI1NiIsImtpZCI6IjkzMzBkOTRhNGIzZTYwNjNiZTFmMmFhN2JhMWExMzY1ODZlY2MzMWMifQ.eyJpc3MiOiJodHRwczovL2RleC5kZXguc3ZjOjMyMDAwIiwic3ViIjoiQ2lWamJqMWhaRzFwYmpFc2IzVTljR1Z2Y0d4bExHUmpQV1Y0WVcxd2JHVXNaR005YjNKbkVnUnNaR0Z3IiwiYXVkIjoiZXhhbXBsZS1hcHAiLCJleHAiOjE2MDc3NTgxODgsImlhdCI6MTYwNzY3MTc4OCwiYXRfaGFzaCI6IlB3NWJxNF9TYkcwYUZtUkYyZDQwV3ciLCJlbWFpbCI6ImFkbWluMUBleGFtcGxlLm9yZyIsImVtYWlsX3ZlcmlmaWVkIjp0cnVlLCJuYW1lIjoiYWRtaW4xIn0.D_7kzzwlT5u9eq0KYrL64K_az2sO7iQ_5-Oz7nYHcHWQ8bBmxkH5NldsaZjzHKi0myo7EBJtb_6fqT4817h8Tf-FmGw_Ig0Fx-iA8c651L563qsy86s1usrrKyxQo-B6nZi-gvbY_K27KemNhgyGfLjl0PlvNWSUhoA94E3mpnEkdHs0H7Ni8iOgyOoNQV6TisrQgcr6blaVFJoMVhx4_XP1WnC3YZBX3vbGMCamu67BUP1KgnRbUwGqsuWntT-MuNuu8nOaBeIDGSrXFmqkUVGqIwGsG5bBsHqsfXtgePkhxXChhMUwQbUs3B4FkWITSJsjyrvCGEeGBjRtEH1w7A

The screenshots are captured like this:

Now we have the token, let's access it through raw API first:

# Retrieve the API Endpoint

$ kubectl cluster-info

Kubernetes master is running at https://127.0.0.1:55662

KubeDNS is running at https://127.0.0.1:55662/api/v1/namespaces/kube-system/services/kube-dns:dns/prox

$ APISERVER=https://127.0.0.1:55662 && \

BEARER_TOKEN="eyJhbGciOiJSUzI1NiIsImtpZCI6IjkzMzBkOTRhNGIzZTYwNjNiZTFmMmFhN2JhMWExMzY1ODZlY2MzMWMifQ.eyJpc3MiOiJodHRwczovL2RleC5kZXguc3ZjOjMyMDAwIiwic3ViIjoiQ2lWamJqMWhaRzFwYmpFc2IzVTljR1Z2Y0d4bExHUmpQV1Y0WVcxd2JHVXNaR005YjNKbkVnUnNaR0Z3IiwiYXVkIjoiZXhhbXBsZS1hcHAiLCJleHAiOjE2MDc3NTgxODgsImlhdCI6MTYwNzY3MTc4OCwiYXRfaGFzaCI6IlB3NWJxNF9TYkcwYUZtUkYyZDQwV3ciLCJlbWFpbCI6ImFkbWluMUBleGFtcGxlLm9yZyIsImVtYWlsX3ZlcmlmaWVkIjp0cnVlLCJuYW1lIjoiYWRtaW4xIn0.D_7kzzwlT5u9eq0KYrL64K_az2sO7iQ_5-Oz7nYHcHWQ8bBmxkH5NldsaZjzHKi0myo7EBJtb_6fqT4817h8Tf-FmGw_Ig0Fx-iA8c651L563qsy86s1usrrKyxQo-B6nZi-gvbY_K27KemNhgyGfLjl0PlvNWSUhoA94E3mpnEkdHs0H7Ni8iOgyOoNQV6TisrQgcr6blaVFJoMVhx4_XP1WnC3YZBX3vbGMCamu67BUP1KgnRbUwGqsuWntT-MuNuu8nOaBeIDGSrXFmqkUVGqIwGsG5bBsHqsfXtgePkhxXChhMUwQbUs3B4FkWITSJsjyrvCGEeGBjRtEH1w7A"

$ curl -k $APISERVER/api/v1/namespaces/default/pods/ --header "Authorization: Bearer $BEARER_TOKEN"

{

"kind": "Status",

"apiVersion": "v1",

"metadata": {

},

"status": "Failure",

"message": "pods is forbidden: User \"admin1@example.org\" cannot list resource \"pods\" in API group \"\" in the namespace \"default\"",

"reason": "Forbidden",

"details": {

"kind": "pods"

},

"code": 403

}

$ kubectl auth can-i --as admin1@example.org -n dex list pods

noThe good news is that Kubernetes has recognized the login user as admin1@example.org but still declined the access with 403 Forbidden.

Why? It's because there is no permission, by default, granted to a new user like admin1@example.org.

As you may have seen, authentication is delegated to Dex but authorization is handled by Kubernetes itself.

# Make sure we're working from the Git repo's root folder

cd "$( git rev-parse --show-toplevel )"

kubectl apply -f manifests/authorization.yaml

kubectl auth can-i --as admin1@example.org -n dex list pods

yes

curl -k -s $APISERVER/api/v1/namespaces/dex/pods/ -H "Authorization: Bearer $BEARER_TOKEN" | jq '.items[].metadata.name'

"dex-5f97556766-kcfvl"Yes! We now can access pods within dex namespace, as per the permissions granted.

It's common to generate and distribute such a token by constructing a kubeconfig file.

# Generate a kubeconfig

$ cat > ~/.kube/config-kind <<EOF

apiVersion: v1

kind: Config

preferences: {}

clusters:

- cluster:

server: $APISERVER

insecure-skip-tls-verify: true

name: kind

contexts:

- context:

cluster: kind

namespace: default

user: admin1@example.org

name: admin1@example.org

current-context: admin1@example.org

users:

- name: admin1@example.org

user:

token: $BEARER_TOKEN

EOF

$ kubectl get pods -n dex --kubeconfig ~/.kube/config-kind

NAME READY STATUS RESTARTS AGE

dex-5f97556766-kcfvl 1/1 Running 1 1h28mPerfect!

# Delete the kind cluster

kind delete cluster --name dex-ldap-cluster

# Delete the Socat proxy container

docker rm -f dex-kind-proxy-32000