This repository contains tools to process and visualize the IMAR Vision Datasets: CHI3D, FlickrCI3D, HumanSC3D, FlickrSC3D and Fit3D. In addition, we release the code of the evaluation server for the 3D reconstruction challenges that we introduce: Close Interactions Reconstruction, Complex Self-Contact Reconstruction and Fitness Exercises Reconstruction.

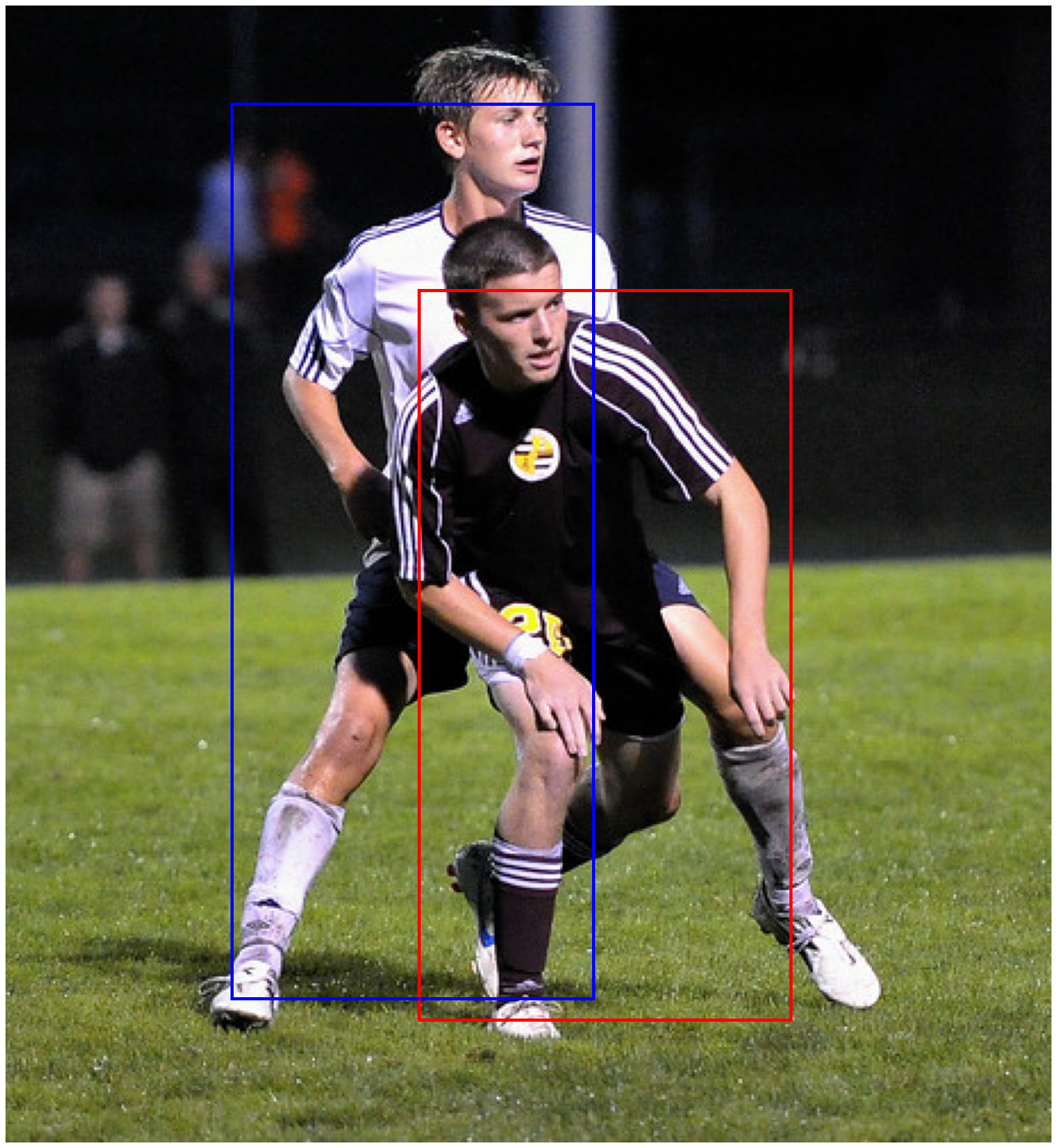

Three-Dimensional Reconstruction of Human Interactions [Paper] [Project / Data / Challenges]

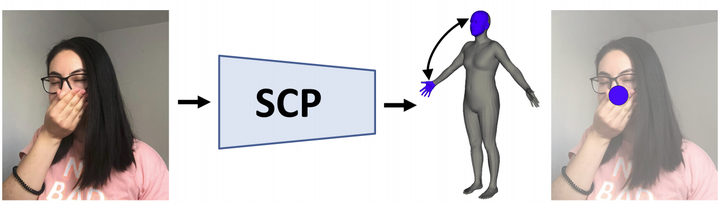

Learning Complex 3D Human Self-Contact [Paper] [Project / Data / Challenges]

AIFit: Automatic 3D Human-Interpretable Feedback Models for Fitness Training [Paper] [Project / Data / Challenges]

The terms of this software are available in LICENSE. Note that the license of this software shall not be confused with the licenses of the datasets it supports.

Please organize your project in the following structure:

project

├── imar_vision_datasets_tools

├── venv

├── ghumrepo

| ├── requirements.txt

| ├── ...

├── smplx_body_models

| ├── smplx

| | ├── SMPLX_NEUTRAL.npz

| | ├── SMPLX_NEUTRAL.pkl

├── datasets

| ├── chi3d

| | ├── train

| | ├── test

| | ├── info.json

| | ├── template.json

| ├── FlickrCI3D_classification

| | ├── train

| | ├── test

| ├── FlickrCI3D_signature

| | ├── train

| | ├── test

| ├── humansc3d

| | ├── train

| | ├── test

| | ├── info.json

| | ├── template.json

| ├── FlickrSC3D_classification

| | ├── train

| | ├── test

| ├── FlickrSC3D_signature

| | ├── train

| | ├── test

| ├── fit3d

| | ├── train

| | ├── test

| | ├── info.json

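| | ├── template.json

Clone this repository into the project folder:

cd project

git clone https://github.com/sminchisescu-research/imar_vision_datasets_tools.git imar_vision_datasets_tools

Then install the system packages and create a virtual environment:

sudo apt-get install python3-pip python3-venv git -y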

python3 -m venv venv

source venv/bin/activate

pip3 install --no-cache-dir --upgrade pip setuptools wheel

Please request access to the GHUM human body model. Then, clone the GHUM repository into the project folder:

git clone https://partner-code.googlesource.com/ghum ghumrepo

cp imar_vision_datasets_tools/info/ghum_requirements.txt ghumrepo/requirements.txt

pip3 install -e ghumrepo

If you want to use SMPLX, please register and download SMPL-X v1.1. From the archive, copy the SMPLX_NEUTRAL.npz and SMPLX_NEUTRAL.pkl body models to smplx_body_models/smplx/. Then:

pip3 install smplx[all]

For each dataset you want to use, you will need to request access from its respective project website: Close Interactions 3D / Self Contact 3D / Fit3D. Once access is granted, download the datasets, unzip them and place them under datasets/, keeping the project structure shown above.
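A quick way to confirm that the unzipped datasets ended up in the right place is a small check like the one below. It is only a sketch: the function name `missing_entries` and the `EXPECTED` table are illustrative and not part of this repository. The expected entries are copied from the project structure listed above, and nothing is assumed about the contents of the files themselves.

```python
from pathlib import Path

# Expected entries per dataset, copied from the project structure in this README.
# This table is a hypothetical helper, not something the repository provides.
LAB_ENTRIES = ["train", "test", "info.json", "template.json"]
FLICKR_ENTRIES = ["train", "test"]

EXPECTED = {
    "chi3d": LAB_ENTRIES,
    "humansc3d": LAB_ENTRIES,
    "fit3d": LAB_ENTRIES,
    "FlickrCI3D_classification": FLICKR_ENTRIES,
    "FlickrCI3D_signature": FLICKR_ENTRIES,
    "FlickrSC3D_classification": FLICKR_ENTRIES,
    "FlickrSC3D_signature": FLICKR_ENTRIES,
}


def missing_entries(project_root, datasets):
    """Return the expected paths (relative to project_root) that do not exist."""
    root = Path(project_root)
    missing = []
    for name in datasets:
        for entry in EXPECTED[name]:
            relative = Path("datasets") / name / entry
            if not (root / relative).exists():
                missing.append(relative.as_posix())
    return missing


if __name__ == "__main__":
    # Run from inside the `project` folder; list only the datasets you downloaded.
    for path in missing_entries(".", ["chi3d", "fit3d"]):
        print("missing:", path)
```

An empty result means every expected folder and file for the listed datasets is present. Only existence is checked, so a partially unzipped archive can still pass.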

To process and visualize any of the mocap datasets (CHI3D, HumanSC3D or Fit3D), run:

jupyter notebook imar_vision_datasets_tools/notebooks/visualize_lab_dataset.ipynb

To process and visualize any of the Flickr datasets (FlickrCI3D_classification, FlickrCI3D_signature, FlickrSC3D_classification or FlickrSC3D_signature), run:

jupyter notebook imar_vision_datasets_tools/notebooks/visualize_flickr.ipynb

To validate the format of your challenge submission file, run:

jupyter notebook imar_vision_datasets_tools/notebooks/validate_prediction_format.ipynb

To visualize the predictions of your challenge submission file, take a look at the example here:

jupyter notebook imar_vision_datasets_tools/notebooks/visualize_prediction.ipynb

For an example of how the evaluation server works, run:

jupyter notebook imar_vision_datasets_tools/notebooks/eval_predictions.ipynb

Note that this code will not run, as we do not provide ground truth data for the test set. Its purpose is to illustrate the evaluation protocol.

Depending on which dataset you use, please cite the relevant article where the dataset was introduced.

For CHI3D, FlickrCI3D_signature, FlickrCI3D_classification, please cite:

@InProceedings{Fieraru_2020_CVPR,

author = {Fieraru, Mihai and Zanfir, Mihai and Oneata, Elisabeta and Popa, Alin-Ionut and Olaru, Vlad and Sminchisescu, Cristian},

title = {Three-Dimensional Reconstruction of Human Interactions},

booktitle = {The IEEE/CVF Conference on Computer Vision and Pattern Recognition (CVPR)},

month = {June},

year = {2020}

}

For HumanSC3D, FlickrSC3D_signature, FlickrSC3D_classification, please cite:

@article{Fieraru_Zanfir_Oneata_Popa_Olaru_Sminchisescu_2021,

title={Learning Complex 3D Human Self-Contact},

volume={35},

url={https://ojs.aaai.org/index.php/AAAI/article/view/16223},

number={2},

journal={Proceedings of the AAAI Conference on Artificial Intelligence},

author={Fieraru, Mihai and Zanfir, Mihai and Oneata, Elisabeta and Popa, Alin-Ionut and Olaru, Vlad and Sminchisescu, Cristian},

year={2021},

month={May},

pages={1343-1351}

}

For Fit3D, please cite:

@InProceedings{Fieraru_2021_CVPR,

author = {Fieraru, Mihai and Zanfir, Mihai and Pirlea, Silviu-Cristian and Olaru, Vlad and Sminchisescu, Cristian},

title = {AIFit: Automatic 3D Human-Interpretable Feedback Models for Fitness Training},

booktitle = {The IEEE/CVF Conference on Computer Vision and Pattern Recognition (CVPR)},

month = {June},

year = {2021}

}