Benchmark of running Phi-2 LLM on MLX and PyTorch on MPS device

Code for the article comparing the speed of MLX and PyTorch on Apple's M1 Max GPU: https://medium.com/@koypish/mps-or-mlx-for-domestic-ai-the-answer-will-surprise-you-df4b111de8a0

Installation

```shell
pyenv install 3.11
pyenv local 3.11
```

1st option:

```shell
poetry shell
```

2nd option:

```shell
python -m venv .venv
source .venv/bin/activate
pip install -r requirements.txt
```

Run Phi-2 benchmarks

- `make mlx` - for MLX
- `make mps` - for PyTorch on the Metal GPU
- `make cpu` - for PyTorch on the CPU
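The Makefile targets are not reproduced here. As a rough sketch of the kind of measurement a speed benchmark makes, the following times a token-streaming generator and reports time to first token and tokens per second. `measure_throughput` and `fake_generate` are hypothetical names, not functions from this repo. To use it with MLX or PyTorch, the generator should force each token to be computed before yielding it (for example by converting it to a Python int with `.item()`), since MLX evaluates lazily and MPS kernels run asynchronously; otherwise the timings would mostly measure dispatch.

```python
import time
from typing import Callable, Iterable, Iterator


def measure_throughput(generate: Callable[[], Iterable[str]]) -> tuple[float, float, int]:
    """Consume a token stream and time it.

    Returns (time_to_first_token_s, tokens_per_second, n_tokens).
    `generate` is a hypothetical zero-argument callable yielding already-computed tokens.
    """
    start = time.perf_counter()
    first_token_s = None
    n_tokens = 0
    for _ in generate():
        if first_token_s is None:
            first_token_s = time.perf_counter() - start  # latency until the first token
        n_tokens += 1
    total_s = time.perf_counter() - start
    # Includes prompt processing; some benchmarks exclude the first token instead.
    tokens_per_s = n_tokens / total_s if total_s > 0 else 0.0
    # With an empty stream, report the total elapsed time as the "first token" latency.
    return (first_token_s if first_token_s is not None else total_s), tokens_per_s, n_tokens


def fake_generate() -> Iterator[str]:
    """Stand-in for a real MLX/PyTorch decoding loop: yields 3 tokens about 10 ms apart."""
    for token in ["Hello", ",", " world"]:
        time.sleep(0.01)
        yield token


ttft, tps, n = measure_throughput(fake_generate)
print(f"first token after {ttft:.3f}s, {tps:.1f} tokens/s over {n} tokens")
```

Passing a zero-argument callable rather than a ready-made iterator keeps the setup cost (creating the generator) inside the timed region, which matters when the first call triggers model compilation or weight loading.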

To track CPU/GPU usage, keep `make track` (which runs `powermetrics`) running while you perform the operation of interest.
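`plot_metrics.ipynb` parses the output of `powermetrics`. The sketch below is not that notebook's code; it is a minimal parser for the plain-text output, assuming per-sample lines such as `CPU Power: 1530 mW` and `GPU HW active residency:  97.45%` (older macOS versions print `GPU active residency`). The exact format varies across macOS versions, so check the patterns against your own capture. `parse_powermetrics` is a hypothetical name, and the sample text and numbers are made up for illustration.

```python
import re
from collections import defaultdict
from statistics import mean

# Assumed powermetrics text-output lines (verify against your own capture):
#   "CPU Power: 1530 mW", "GPU Power: 8120 mW", "ANE Power: 0 mW"
#   "GPU HW active residency:  97.45% (...)"  (older macOS: "GPU active residency:")
POWER_RE = re.compile(r"^\s*(CPU|GPU|ANE) Power:\s*(\d+(?:\.\d+)?)\s*mW", re.MULTILINE)
GPU_RESIDENCY_RE = re.compile(r"^\s*GPU (?:HW )?active residency:\s*(\d+(?:\.\d+)?)%", re.MULTILINE)


def parse_powermetrics(text: str) -> dict[str, list[float]]:
    """Collect the values of each metric in order of appearance (one per sample)."""
    series: dict[str, list[float]] = defaultdict(list)
    for unit, value in POWER_RE.findall(text):
        series[f"{unit.lower()}_power_mw"].append(float(value))
    for value in GPU_RESIDENCY_RE.findall(text):
        series["gpu_active_pct"].append(float(value))
    return dict(series)


# Made-up two-sample excerpt, for illustration only.
SAMPLE = """\
*** Sampled system activity ***
CPU Power: 1530 mW
GPU Power: 8120 mW
ANE Power: 0 mW
Combined Power (CPU + GPU + ANE): 9650 mW
GPU HW active residency:  97.45% (1296 MHz:  97%)
*** Sampled system activity ***
CPU Power: 1470 mW
GPU Power: 7980 mW
ANE Power: 0 mW
Combined Power (CPU + GPU + ANE): 9450 mW
GPU HW active residency:  96.55% (1296 MHz:  96%)
"""

metrics = parse_powermetrics(SAMPLE)
averages = {name: mean(values) for name, values in metrics.items()}
print(averages)
```

The `Combined Power` line is deliberately not matched, since it can be recomputed from the per-unit values.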

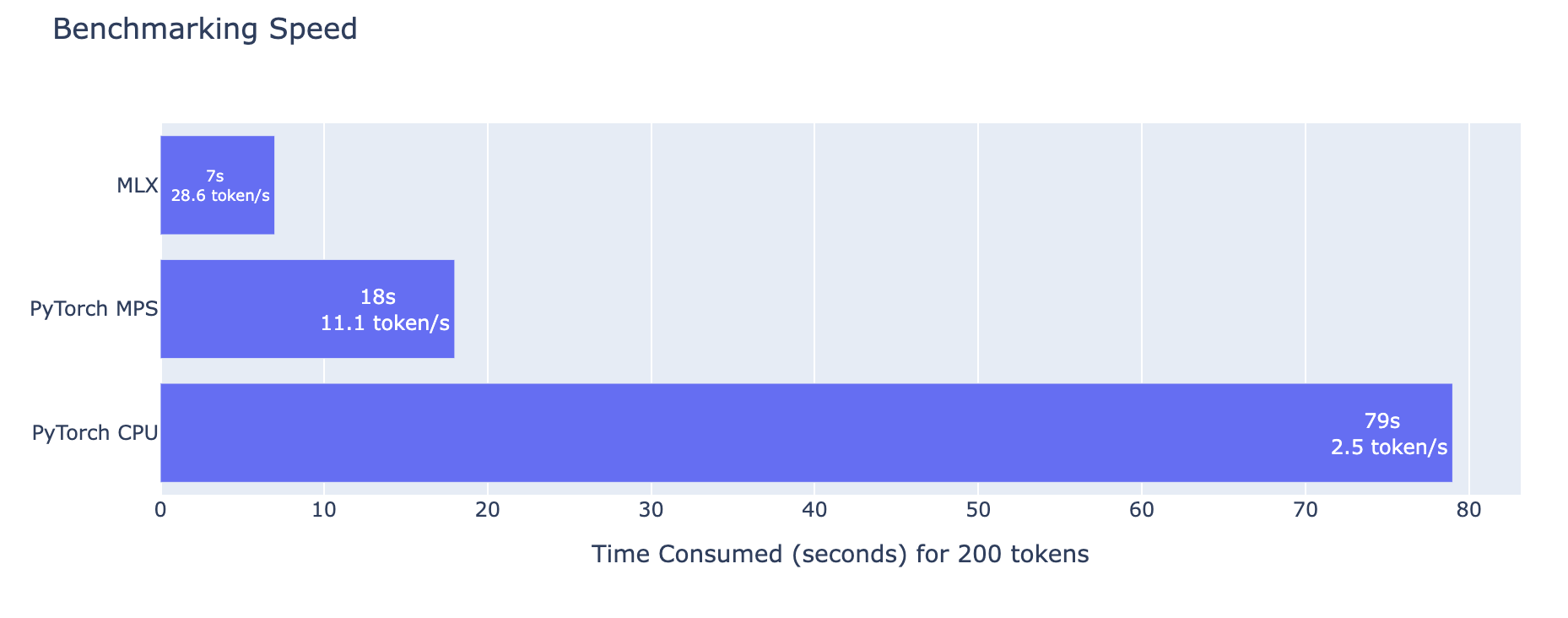

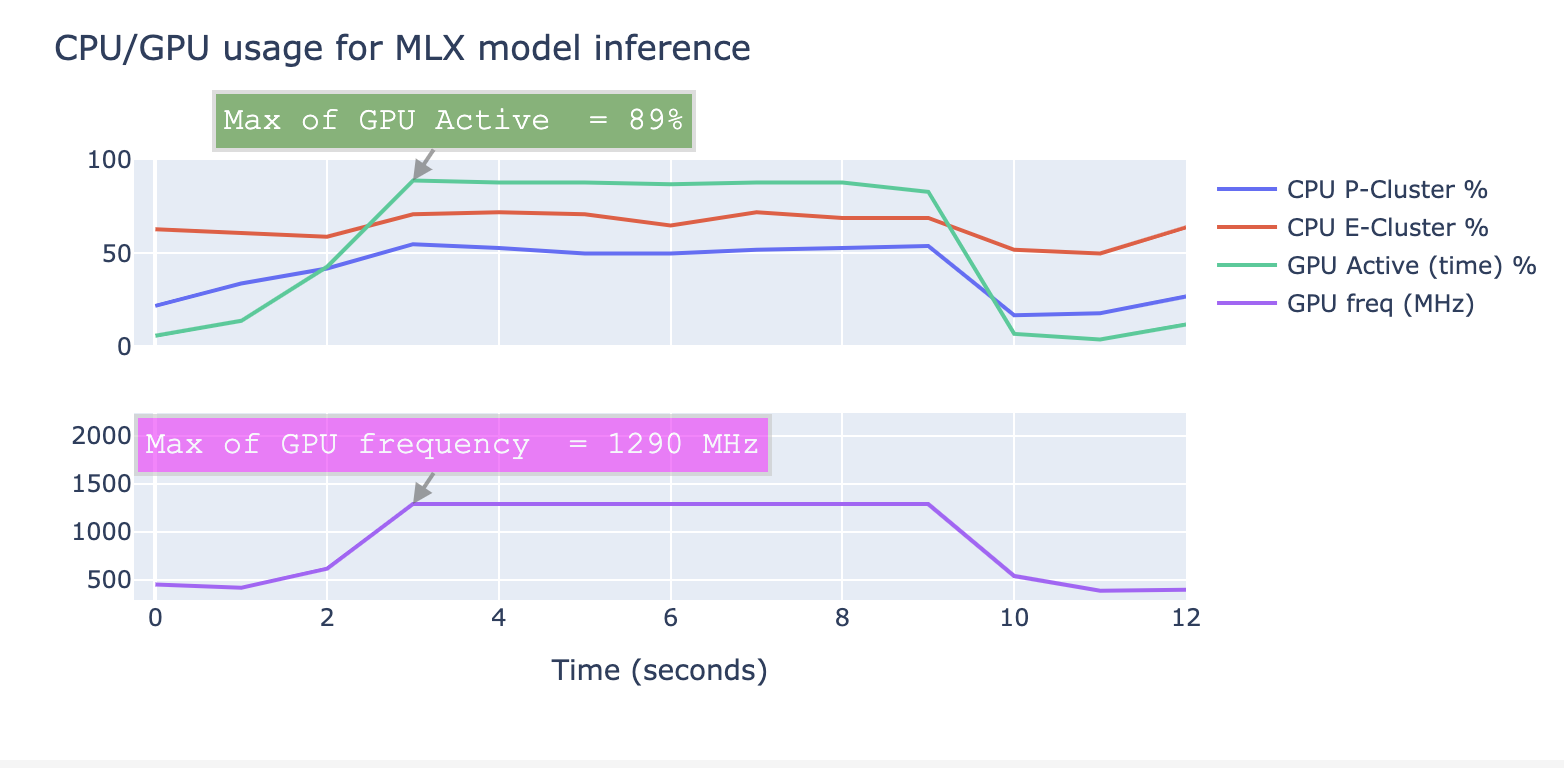

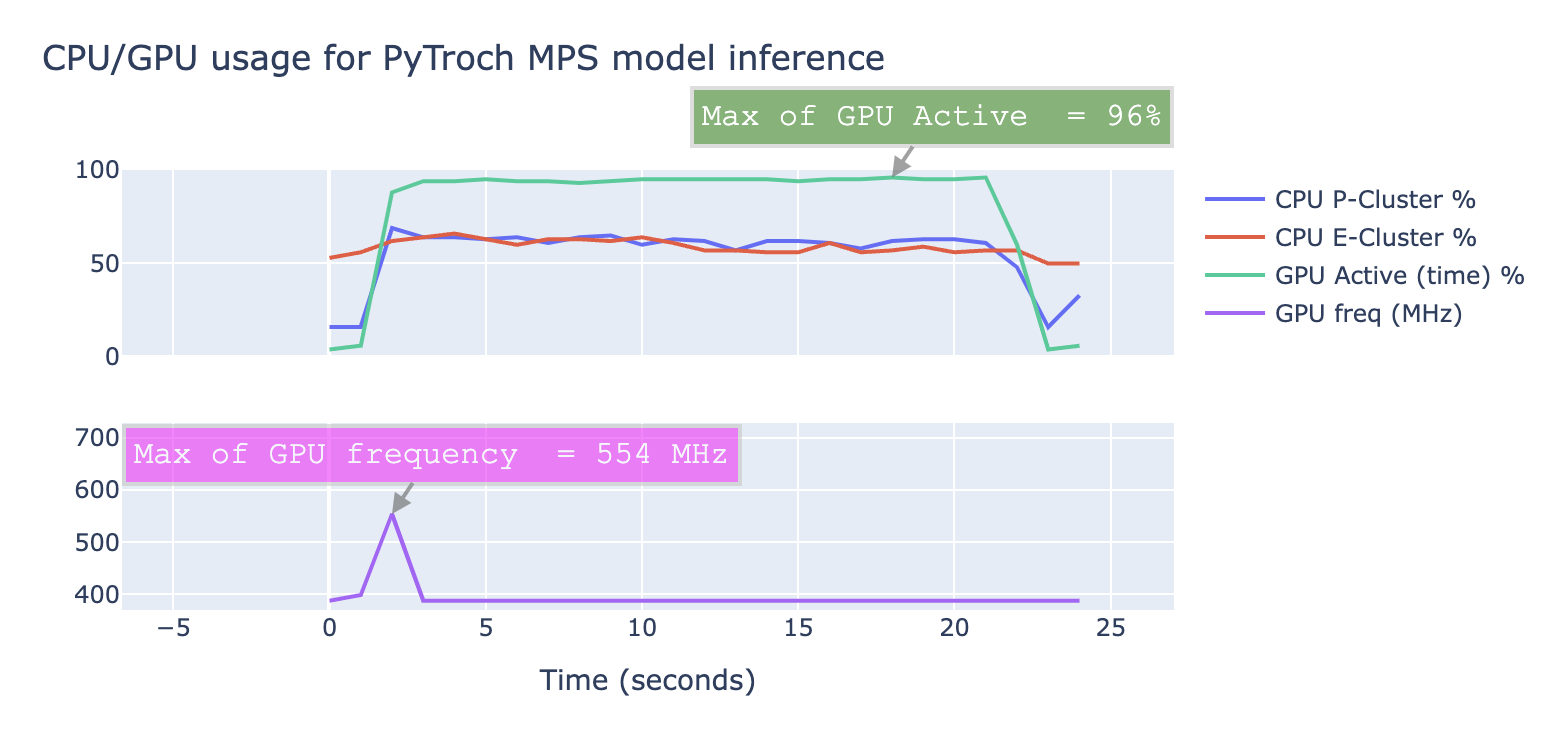

Results on M1 Max (macOS Sonoma 14.1.1)

Structure

- `utils.py` - memory measurement utilities
- `try_phi2_torch.ipynb`, `try_phi2_mlx.ipynb` - benchmark code
- `phi2_mlx.py` - code mainly copied from https://github.com/ml-explore/mlx-examples/tree/main/llms/phi2
- `plot_metrics.ipynb` - code to parse and analyze the output of `powermetrics` (run by `make track`)
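`utils.py` is described only as containing memory measurement utilities, so the following is a hypothetical stand-in rather than its actual contents. It reads the process's peak resident set size with the standard `resource` module (Unix only). Note that `ru_maxrss` is reported in bytes on macOS but in kilobytes on Linux. On Apple Silicon, memory held by GPU frameworks may not be fully reflected in this number; PyTorch, for instance, exposes `torch.mps.current_allocated_memory()` for the MPS allocator.

```python
import resource
import sys


def peak_rss_mb() -> float:
    """Peak resident set size of the current process, in MiB (Unix only)."""
    peak = resource.getrusage(resource.RUSAGE_SELF).ru_maxrss
    # ru_maxrss is in bytes on macOS but in kilobytes on Linux.
    bytes_per_unit = 1 if sys.platform == "darwin" else 1024
    return peak * bytes_per_unit / (1024 * 1024)


before = peak_rss_mb()
data = [float(i) for i in range(1_000_000)]  # stand-in for loading a model
after = peak_rss_mb()
print(f"peak RSS: {before:.1f} MiB -> {after:.1f} MiB")
```

Because this is a high-water mark that never decreases, comparing frameworks fairly means running each benchmark in a fresh process, which separate `make` targets naturally do.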