This is the official implementation of the paper:

Probabilistic Neural Programmed Networks for Scene Generation

Zhiwei Deng, Jiacheng Chen, Yifang Fu and Greg Mori

Published at NeurIPS 2018

If you find this code helpful in your research, please cite:

```bibtex
@inproceedings{deng2018probabilistic,
  title={Probabilistic Neural Programmed Networks for Scene Generation},
  author={Deng, Zhiwei and Chen, Jiacheng and Fu, Yifang and Mori, Greg},
  booktitle={Advances in Neural Information Processing Systems},
  pages={4032--4042},
  year={2018}
}
```

- Overview

- Environment Setup

- Data and Pre-trained Models

- Configurations

- Code Guide

- Training Model

- Evaluation

- Results

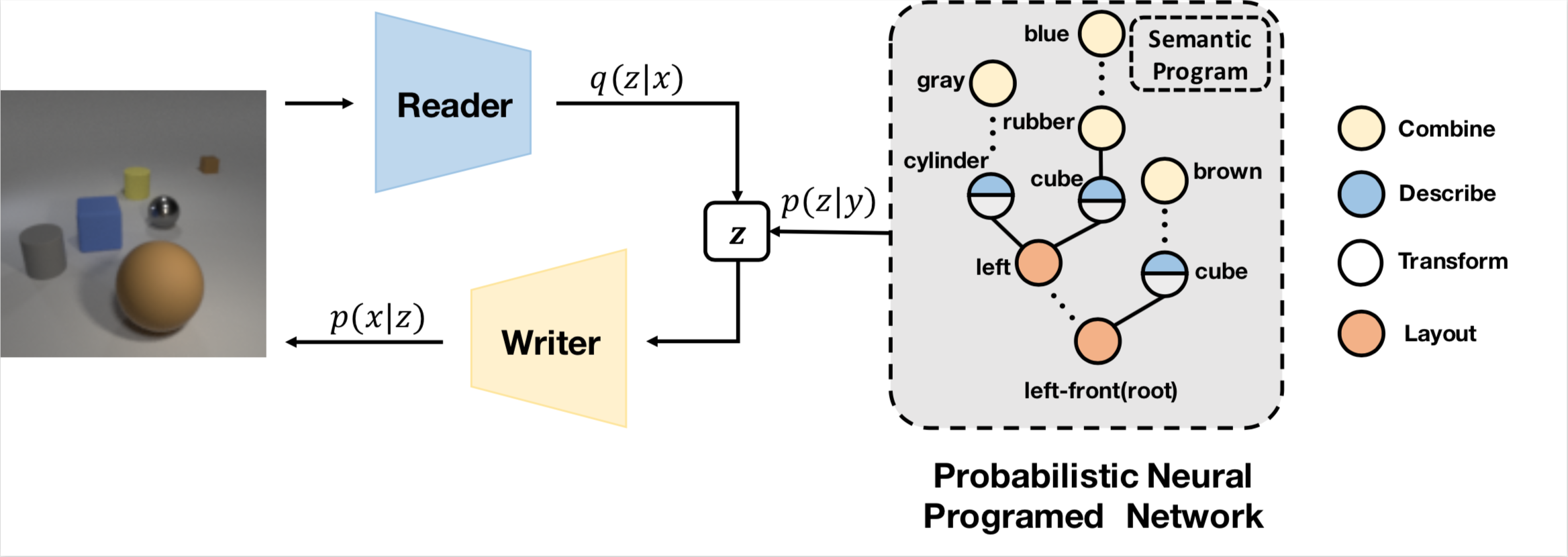

Generating scenes from rich and complex semantics is an important step towards understanding the visual world. The Probabilistic Neural Programmed Network (PNP-Net) brings symbolic methods into generative models: it uses a set of reusable neural modules to compose, in a programmatic manner, latent distributions for scenes described by complex semantics. A decoder can then sample from these latent scene distributions and generate realistic images. PNP-Net is naturally formulated as a learnable prior in the canonical VAE framework, which lets its parameters be learned efficiently.
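Because PNP-Net acts as a learnable prior, the KL term of the VAE objective compares the encoder's posterior with the distribution composed by the modules instead of a fixed standard normal. The sketch below computes that term in plain Python 3, assuming both distributions are diagonal Gaussians given by a mean and a log-variance per latent dimension. `kl_diag_gaussians` is a hypothetical helper for illustration, not a function from this repository.

```python
import math
from typing import Sequence


def kl_diag_gaussians(mu_q: Sequence[float], logvar_q: Sequence[float],
                      mu_p: Sequence[float], logvar_p: Sequence[float]) -> float:
    """Return KL(q || p) for two diagonal Gaussians.

    Each distribution is given as per-dimension means and log-variances.
    Here q stands for the encoder posterior and p for the prior composed
    by the modules (an assumption for illustration).
    """
    if not (len(mu_q) == len(logvar_q) == len(mu_p) == len(logvar_p)):
        raise ValueError("all inputs must have the same length")
    total = 0.0
    for mq, lq, mp, lp in zip(mu_q, logvar_q, mu_p, logvar_p):
        var_q, var_p = math.exp(lq), math.exp(lp)
        # Closed-form KL between two 1-D Gaussians, summed over dimensions.
        total += lp - lq + (var_q + (mq - mp) ** 2) / var_p - 1.0
    return 0.5 * total


print(kl_diag_gaussians([0.0, 1.0], [0.0, 0.0], [0.0, 1.0], [0.0, 0.0]))  # 0.0
print(kl_diag_gaussians([1.0], [0.0], [0.0], [0.0]))  # 0.5
```

In an autograd framework such as PyTorch, this value would be added to the reconstruction loss, so gradients reach both the encoder and the modules that produce the prior's mean and log-variance.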

All code was tested on Ubuntu 16.04 with Python 2.7 and PyTorch 0.4.0 (but the code should also work with Python 3). To install the required environment, run:

```bash
pip install -r requirements.txt
```

To run our evaluation metric (a detector-based semantic correctness score), check this submodule for full details (it has now been released).

We used the released CLEVR code (Johnson et al.) to generate a modified CLEVR dataset for the task of scene image generation, which we call CLEVR-G. The generation code is in the submodule.

We also provide the 64x64 version of CLEVR-G used in our experiments. Please download it and unzip it into ./data/CLEVR if you want to use it with our model.

Pre-trained Model

Please download pre-trained models from:

Coming soon...

We use global configuration files to set up all configurations, including the training settings and model hyper-parameters. Please check the config file and the corresponding code for more details.

The core of PNP-Net is a set of neural modular operators. We briefly introduce them here and provide pointers to the corresponding code.

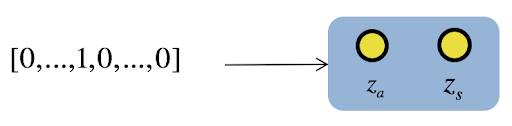

- Concept Mapping Operator

Converts the one-hot representation of a word concept into an appearance distribution and a scale distribution. code
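Multiplying a one-hot vector by a weight matrix selects a single row of that matrix, so concept mapping amounts to a learned lookup per word. The minimal Python 3 sketch below assumes each distribution is a diagonal Gaussian stored as (mean, log-variance) and flattens the appearance latent into a short vector, whereas the actual module works on spatial latent tensors. `concept_mapping`, the toy vocabulary, and all weight values are hypothetical.

```python
from typing import List, Tuple

Matrix = List[List[float]]
Gaussian = Tuple[List[float], List[float]]  # (mean, log-variance)


def one_hot(index: int, size: int) -> List[float]:
    vec = [0.0] * size
    vec[index] = 1.0
    return vec


def linear(x: List[float], weight: Matrix) -> List[float]:
    """Compute x @ weight, where weight has shape (len(x), out_dim)."""
    out_dim = len(weight[0])
    return [sum(x[i] * weight[i][j] for i in range(len(x))) for j in range(out_dim)]


def concept_mapping(index: int, vocab_size: int,
                    app_mu_w: Matrix, app_logvar_w: Matrix,
                    scale_mu_w: Matrix, scale_logvar_w: Matrix) -> Tuple[Gaussian, Gaussian]:
    """Map a word index to an appearance Gaussian and a scale Gaussian."""
    x = one_hot(index, vocab_size)
    appearance = (linear(x, app_mu_w), linear(x, app_logvar_w))
    scale = (linear(x, scale_mu_w), linear(x, scale_logvar_w))
    return appearance, scale


# Hypothetical 3-word vocabulary, 2-D appearance, and (height, width) scale.
app_mu = [[0.1, 0.2], [0.3, 0.4], [0.5, 0.6]]
app_lv = [[0.0, 0.0], [-1.0, -1.0], [0.5, 0.5]]
scale_mu = [[4.0, 4.0], [6.0, 8.0], [2.0, 2.0]]
scale_lv = [[-2.0, -2.0], [-2.0, -2.0], [-2.0, -2.0]]
appearance, scale = concept_mapping(1, 3, app_mu, app_lv, scale_mu, scale_lv)
print(appearance)  # ([0.3, 0.4], [-1.0, -1.0])
print(scale)       # ([6.0, 8.0], [-2.0, -2.0])
```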

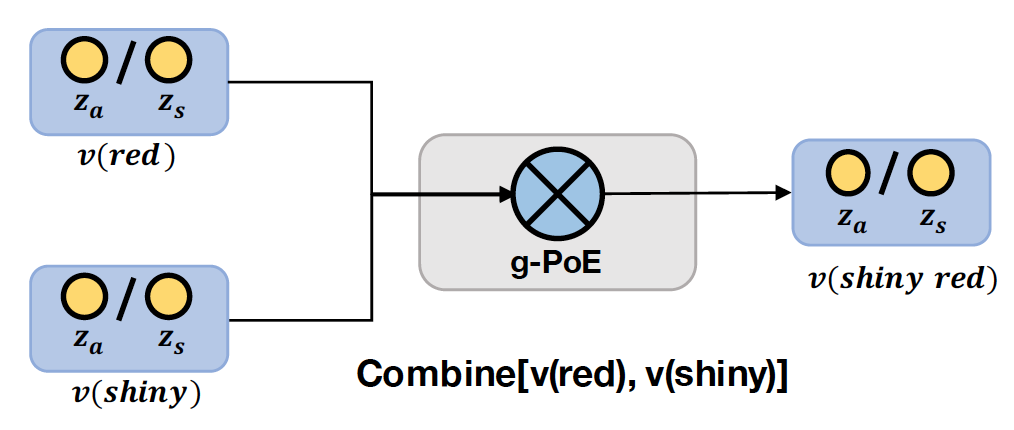

- Combine Operator

The Combine module merges the latent distributions of two attributes into a single distribution. code
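A simple way to merge two Gaussian attribute distributions is a product of Gaussian experts: precisions (inverse variances) add, and the merged mean is the precision-weighted average of the input means. The Python 3 sketch below uses this purely as an illustrative stand-in; it is an assumption and not necessarily how PNP-Net's Combine module is implemented. `combine_poe` is a hypothetical name, and distributions are assumed to be diagonal Gaussians stored as (mean, log-variance).

```python
import math
from typing import List, Tuple

Gaussian = Tuple[List[float], List[float]]  # (mean, log-variance)


def combine_poe(a: Gaussian, b: Gaussian) -> Gaussian:
    """Merge two diagonal Gaussians by a product of experts.

    Precisions add, and the merged mean is the precision-weighted
    average of the two input means.
    """
    mu_a, lv_a = a
    mu_b, lv_b = b
    mu: List[float] = []
    logvar: List[float] = []
    for ma, la, mb, lb in zip(mu_a, lv_a, mu_b, lv_b):
        prec_a, prec_b = math.exp(-la), math.exp(-lb)
        var = 1.0 / (prec_a + prec_b)
        mu.append(var * (prec_a * ma + prec_b * mb))
        logvar.append(math.log(var))
    return mu, logvar


# Two unit-variance "attribute" distributions centered at 0 and 2.
mu, logvar = combine_poe(([0.0], [0.0]), ([2.0], [0.0]))
print(mu)                                  # [1.0]
print(round(math.exp(logvar[0]), 6))       # 0.5
```

Under this choice the merged distribution is always at least as confident as either input, which matches the intuition that two attributes together describe a narrower set of appearances than either one alone.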

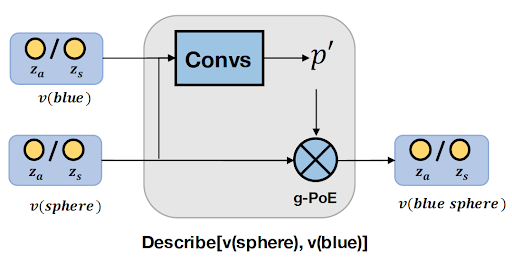

- Describe Operator

Attributes describe an object: this module takes the attribute distributions (merged by the Combine module) and uses them to render the distribution of an object. code

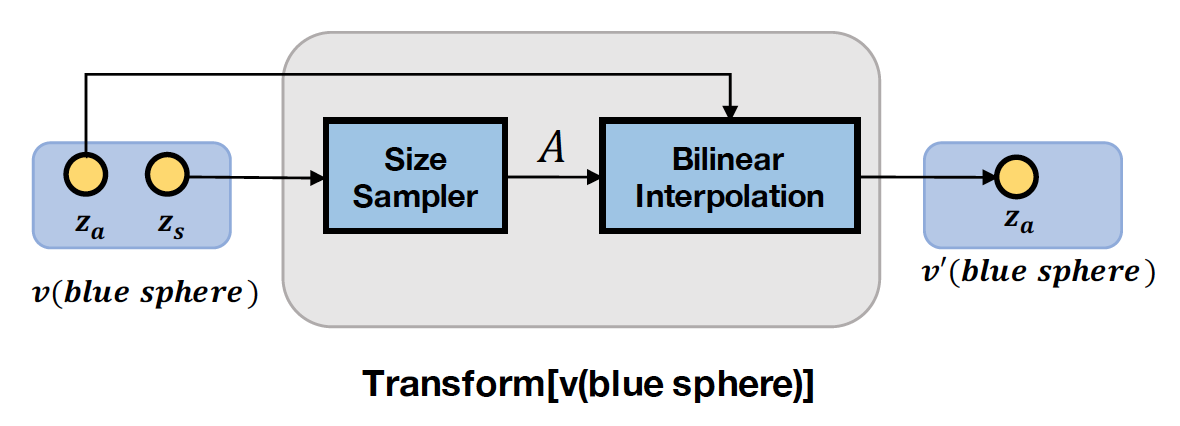

- Transform Operator

This module first samples a size instance from an object's scale distribution and then uses bilinear interpolation to resize the appearance distribution. code
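The two steps can be sketched in Python 3 as follows. The size is drawn in the reparameterized form mu + sigma * eps and rounded to whole latent cells, and a single 2-D map is then resized with corner-aligned bilinear interpolation. This assumes the scale distribution is a diagonal Gaussian over (height, width) in latent cells; in practice the same resize would be applied to every channel of the appearance mean and variance. `sample_size`, `bilinear_resize`, and `transform` are hypothetical names.

```python
import math
import random
from typing import List, Tuple

Grid = List[List[float]]


def sample_size(mu: Tuple[float, float], logvar: Tuple[float, float],
                rng: random.Random) -> Tuple[int, int]:
    """Sample (height, width) as mu + sigma * eps, rounded to whole cells (>= 1)."""
    h = mu[0] + math.exp(0.5 * logvar[0]) * rng.gauss(0.0, 1.0)
    w = mu[1] + math.exp(0.5 * logvar[1]) * rng.gauss(0.0, 1.0)
    return max(1, int(round(h))), max(1, int(round(w)))


def bilinear_resize(grid: Grid, out_h: int, out_w: int) -> Grid:
    """Resize a 2-D map with corner-aligned bilinear interpolation."""
    in_h, in_w = len(grid), len(grid[0])
    out: Grid = []
    for i in range(out_h):
        # Source row coordinate for output row i.
        y = i * (in_h - 1) / (out_h - 1) if out_h > 1 else 0.0
        y0 = int(math.floor(y))
        y1 = min(y0 + 1, in_h - 1)
        dy = y - y0
        row = []
        for j in range(out_w):
            x = j * (in_w - 1) / (out_w - 1) if out_w > 1 else 0.0
            x0 = int(math.floor(x))
            x1 = min(x0 + 1, in_w - 1)
            dx = x - x0
            # Interpolate along columns, then along rows.
            top = grid[y0][x0] * (1 - dx) + grid[y0][x1] * dx
            bottom = grid[y1][x0] * (1 - dx) + grid[y1][x1] * dx
            row.append(top * (1 - dy) + bottom * dy)
        out.append(row)
    return out


def transform(appearance: Grid, scale_mu: Tuple[float, float],
              scale_logvar: Tuple[float, float], rng: random.Random) -> Grid:
    """Sample a size from the scale distribution and resize the appearance map to it."""
    out_h, out_w = sample_size(scale_mu, scale_logvar, rng)
    return bilinear_resize(appearance, out_h, out_w)


print(bilinear_resize([[0.0, 1.0], [2.0, 3.0]], 3, 3))
# [[0.0, 0.5, 1.0], [1.0, 1.5, 2.0], [2.0, 2.5, 3.0]]
```

Rounding to whole cells is a simplification that makes the resize well defined; it also means the sampled size itself receives no gradient.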

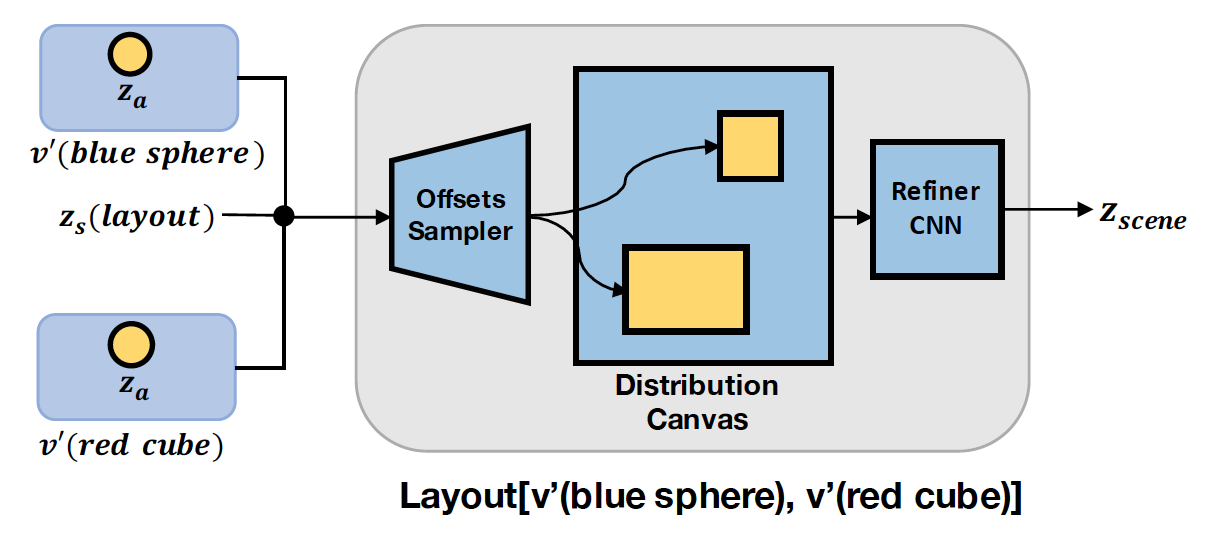

- Layout Operator

The Layout module places the latent distributions of two different objects (from its child nodes) on a background latent canvas according to the offsets of the two child objects. code
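A minimal Python 3 sketch of the placement step follows. It assumes a zero-initialized 2-D background canvas, an integer (row, col) offset for each object's top-left corner, and plain summation where objects overlap; these are illustrative assumptions, not a description of how the repository's Layout module resolves overlaps. `paste` and `layout` are hypothetical names.

```python
from typing import List, Tuple

Grid = List[List[float]]


def paste(canvas: Grid, obj: Grid, offset: Tuple[int, int]) -> None:
    """Add obj onto canvas in place, with obj's top-left corner at offset (row, col).

    Cells that fall outside the canvas are dropped.
    """
    top, left = offset
    for i, row in enumerate(obj):
        for j, value in enumerate(row):
            r, c = top + i, left + j
            if 0 <= r < len(canvas) and 0 <= c < len(canvas[0]):
                canvas[r][c] += value


def layout(obj_a: Grid, offset_a: Tuple[int, int],
           obj_b: Grid, offset_b: Tuple[int, int],
           height: int, width: int) -> Grid:
    """Place two object maps on a zero-initialized background canvas."""
    canvas = [[0.0] * width for _ in range(height)]
    paste(canvas, obj_a, offset_a)
    paste(canvas, obj_b, offset_b)
    return canvas


a = [[1.0, 1.0], [1.0, 1.0]]
b = [[2.0, 2.0], [2.0, 2.0]]
for row in layout(a, (0, 0), b, (1, 1), 4, 4):
    print(row)
# [1.0, 1.0, 0.0, 0.0]
# [1.0, 3.0, 2.0, 0.0]
# [0.0, 2.0, 2.0, 0.0]
# [0.0, 0.0, 0.0, 0.0]
```

With this simple scheme, overlapping objects mix their latents (the cell holding 3.0 above) and anything placed past the border is silently clipped, which mirrors the failure cases discussed at the end of this README.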

The default training can be started by:

```bash
python mains/pnpnet_main.py --config_path configs/pnp_net_configs.yaml
```

Make sure that you are in the project root directory when typing the above command.

The evaluation has two major steps:

- Generate images according to the semantics in the test set using a pre-trained model.
- Run our detector-based semantic correctness score to evaluate the quality of the images. Please check that repo for more details about our proposed metric for measuring the semantic correctness of scene images.

To generate test images with a pre-trained model, first set the code mode to test, then set the checkpoint path in the config file, and finally run the same command as for training:

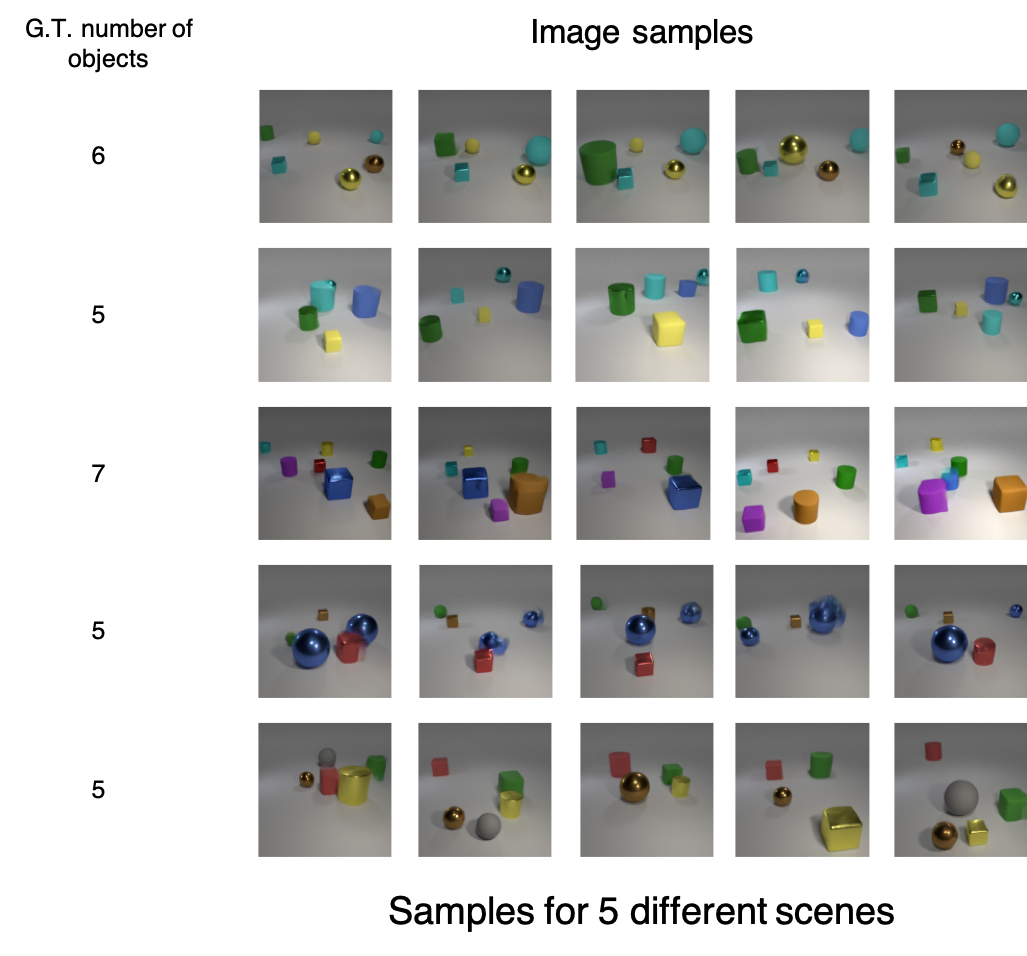

python mains/pnpnet_main.py --config_path configs/pnp_net_configs.yamlDetailed results can be checked in our paper. We provide some samples here to show PNP-Net's capability for generating images for different complex scenes. The dataset used here is CLEVR-G 128x128, every scene contains at most 8 objects.

When the scene becomes too complex, PNP-Net can suffer from the following problems:

- It might fail to handle occlusion between objects. When multiple objects overlap, their latents get mixed on the background latent canvas, and the appearance of the objects can be distorted.
- It might place some objects outside the image boundary, so some images do not contain the number of objects described by the semantics.