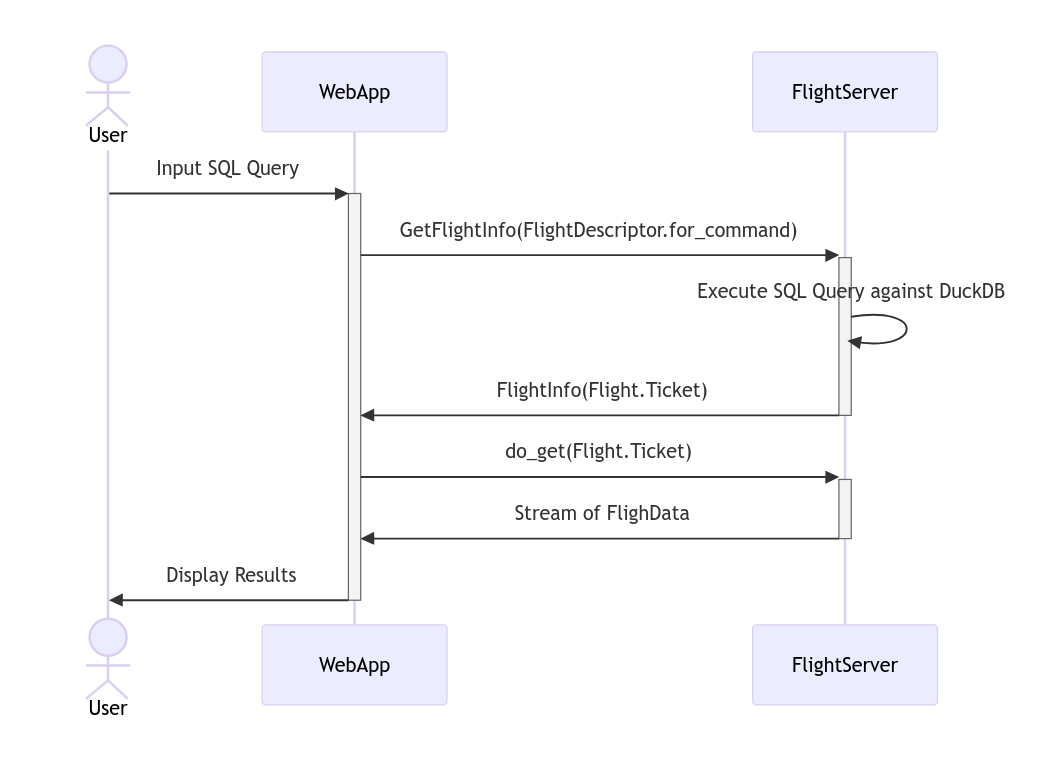

This project lets you run SQL queries against a remote DuckDB instance over Apache Arrow Flight RPC, and explore or download the results directly in a Streamlit web interface.

- Install the required Python modules:

  ```shell
  pip install -r requirements.txt
  ```

- Create or update the `.env` file with the settings below as needed:

  ```shell
  # CAUTION: If you load this file with the `source .env` command,
  # make sure there are no spaces before or after the '=' signs.

  # Flight Server
  SERVER_FLIGHT_HOST='0.0.0.0'
  SERVER_FLIGHT_PORT=8815

  # If using Local Storage for the Flight datasets
  SERVER_FLIGHT_DATA_DIR_TYPE='local' # Options: ['local', 's3', 'minio']
  SERVER_FLIGHT_DATA_DIR_BASE='data/datasets'

  # DuckDB file
  SERVER_DUCKDB_FILE='data/duck.db'
  ```

- Run the command below to launch the Apache Arrow Flight server:

  ```shell
  python apps/server/server.py
  ```

- Run the command below to launch the Streamlit web interface:

  ```shell
  streamlit run apps/client/web.py
  ```

- Browse to the Streamlit web link: http://localhost:8501
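The caution in the `.env` file comes from shell syntax: `KEY = value` is parsed as a command named `KEY` with the arguments `=` and `value`, not as an assignment. The sketch below is a hypothetical, stdlib-only loader that makes this rule explicit. `parse_env` and `load_env` are illustrative names, not part of this project, which may load the file differently. It uses `shlex` to split each line roughly the way a POSIX shell would, then rejects lines that are not a single `KEY=VALUE` word.

```python
import os
import shlex


def parse_env(text):
    """Hypothetical helper: parse KEY=VALUE lines the way `source .env` would accept them."""
    values = {}
    for lineno, line in enumerate(text.splitlines(), start=1):
        # POSIX-style splitting removes quotes and drops '#' comments, much like a shell.
        tokens = shlex.split(line, comments=True)
        if not tokens:
            continue  # blank or comment-only line
        key, sep, value = tokens[0].partition("=")
        # "KEY = value" splits into three tokens; a shell would try to run KEY as a command.
        if len(tokens) != 1 or not sep or not key:
            raise ValueError(f"line {lineno}: expected KEY=VALUE with no spaces around '='")
        values[key] = value
    return values


def load_env(path=".env", override=False):
    """Hypothetical helper: read a .env file and export its values to os.environ."""
    with open(path, encoding="utf-8") as fh:
        values = parse_env(fh.read())
    for key, value in values.items():
        # Keep variables already set in the environment unless override=True.
        if override or key not in os.environ:
            os.environ[key] = value
    return values
```

With this loader, `SERVER_FLIGHT_PORT = 8815` raises a `ValueError`, while `SERVER_FLIGHT_PORT=8815` loads as the string `'8815'`. Every value is a string, so convert types (for example `int(...)` for the port) where you read them.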

Features:

- Remote Query Execution
- View Previous Queries
- Authentication

Supported storage for the Apache Arrow Flight datasets (currently, only `local` works):

- `local`: result files are stored on the server's local disk.
- `s3`: result files are stored on Amazon S3. Update the `.env` file to set the access and secret keys.
- `minio`: result files are stored on a MinIO (S3-compatible) server. Update the `.env` file to set the access and secret keys, the endpoint, etc.
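The `SERVER_FLIGHT_DATA_DIR_TYPE` and `SERVER_FLIGHT_DATA_DIR_BASE` settings could be resolved roughly as follows. This is a hypothetical helper (`dataset_location` is not from this project, and its defaults are simply copied from the sample `.env`). It mirrors the current state described above: `local` resolves to a path under the base directory, while `s3` and `minio` are accepted as options but raise `NotImplementedError`.

```python
import os
from pathlib import Path

# Options listed for SERVER_FLIGHT_DATA_DIR_TYPE in the sample .env file.
SUPPORTED_DIR_TYPES = ("local", "s3", "minio")


def dataset_location(dataset_name, env=os.environ):
    """Hypothetical helper: resolve where a result dataset is stored from the .env settings."""
    dir_type = env.get("SERVER_FLIGHT_DATA_DIR_TYPE", "local")
    base = env.get("SERVER_FLIGHT_DATA_DIR_BASE", "data/datasets")
    if dir_type not in SUPPORTED_DIR_TYPES:
        raise ValueError(
            f"SERVER_FLIGHT_DATA_DIR_TYPE must be one of {SUPPORTED_DIR_TYPES}, got {dir_type!r}"
        )
    if dir_type == "local":
        # Local storage: the dataset is a file under the base directory on the server's disk.
        return Path(base) / dataset_name
    # s3 and minio are valid options, but only local storage works for now.
    raise NotImplementedError(f"{dir_type!r} storage is not supported yet")
```

Validating the option separately from dispatching on it keeps a typo in `.env` (a `ValueError`) distinguishable from a backend that is planned but not yet available (a `NotImplementedError`).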

To do:

- Add Docker Compose.
- Add support for ephemeral compute nodes (GCP, AWS, Azure): this could reduce the cost of running the Flight server with DuckDB.
- Add a NodeManager to manage the ephemeral compute nodes.

If you have any questions or would like to get in touch, please open an issue on GitHub, send me an email at mike.kenneth47@gmail.com, or reach out on Twitter/X.