Panoptic Soft Attention Image Captioning with beam search object proposal reinforcement, language model rescoring and caption augmentation

- 5/7/21: Updated README TODO's

- 6/7/21: Experimented with beam-width (increased performance). Implemented Joint embedding between HLAR (achieved through conv layers) and LLAR computed by using PanopticFPN.

- 10/7/21: Implemented Beam-search object class rescoring for both object proposals of PanopticFPN and Faster R-CNN (Lowered accuracies - Further investigation needed).

- 13/7/21: Implemented Beam-search language modelling rescoring using BERT. (Beam-width and language model influence not optimized).

- 16/7/22: Implemented augmented captions through paraphrase generation. Implementation details to be added.

- 18/7/22: Added config parameters to toggle between attention regions, language modelling rescoring, as well as object reinforcement and caption augmentation.

The following is a list of proposed improvements on the Hard Attention model:

- Introduce low-level attention regions by using the bounding boxes produced by the Faster R-CNN object detection and localization model

- Rescore the caption proposals during inference by utilizing beam search and reinforcing instances that contain object classes proposed by Faster R-CNN

- Introduce a language model that rescores the caption proposals of the beam search algorithm

- Augment more than 5 captions per image using a pre-trained language model such as BERT/GPT-2/GPT-3.

The majority of the above proposed improvements rely on the object classes and bounding boxes produced by the Faster R-CNN model. Therefore, a dataset annotated with bounding boxes and class labels is necessary. My previous models were trained on the Flickr8k dataset, which only consists of images paired with 5 captions per image. MSCOCO is an alternative and the standard dataset for the task of image caption generation, and consists of roughly 300,000 image-caption pairs. Unfortunately, training on such a large dataset demands more computational resources than a low-resource computer such as my own can provide.

Luckily, Flickr30k Entities provides a relatively small dataset consisting of ~30,000 image-caption pairs along with manually annotated object class labels and object localization bounding boxes. Flickr30k Entities will be used to fine-tune the Faster R-CNN. There is a large overlap between the images in the Flickr8k and Flickr30k datasets, so the fine-tuned Faster R-CNN will be used during the encoding stage when training on the Flickr8k dataset.

By improving the accuracy of the Hard Attention model on the Flickr8k dataset, we may identify techniques that carry over to more complex models trained on larger datasets, such as MSCOCO.

In this work, we adopt and extend the architecture proposed in Show, Attend and Tell since it is the most cited seminal work in the area of image captioning. It introduced the encoder-decoder architecture and the visual attention mechanism for image captioning in a simple yet powerful approach. For more information on this model, see my previous repo.

Compared to Show, Attend and Tell, other captioning approaches are task specific, more complex and derivative in nature. Moreover, we believe the simplicity of the Show, Attend and Tell model, compared to its counterparts, would help to add explainability to the captioning task. This approach uses a transparent attention mechanism which, in the domain of sequence-to-sequence tasks, translates to the ability to dynamically select important features in the image instead of maintaining one feature representation for the image at all times.

The proposed improvements on the Hard Attention model from Show, Attend and Tell are discussed in more detail below.

The purpose of an attention mechanism is for the model to shift its focus based on the next word that is being predicted. The attention regions therefore provide context to the decoder based on a partially generated caption. In doing so, these features often fail to attend to and provide direct visual cues, i.e., specific object details, to the decoder.

The Hard Attention model proposed in Show, Attend and Tell makes use of high-level image abstractions obtained from convolutional layers within a pre-trained object detection model, such as VGG-16. The image features obtained from the convolutional layers, which act as attention regions, lack low-level object-specific details.

We explore ways to make this attention mechanism more effective by introducing low-level attention regions. For each input image, we embed a constant number of localized bounding boxes from a fine-tuned Faster R-CNN model. In doing so, we augment the attention regions that the decoder can choose from when predicting the next word in the sequence. The produced attention regions will be object level. A possible drawback of this approach is that the model will not have the entire context available, since the Faster R-CNN only produces objects in the foreground and does not provide background context such as the location of the objects within the scene. For instance, consider an image where two boys are running on a beach: the Faster R-CNN will only produce the bounding boxes of the two boys, and not an attention region containing information about the beach.

To remedy this possible drawback, the joint embedding of the high-level attention regions produced by the convolutional layers and the low-level features produced by Faster R-CNN will also be explored.
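As a rough illustration of the joint embedding, the sketch below flattens a 14x14 grid of 2048-dimensional features (the HLARs) and appends ten 2048-dimensional region vectors (the LLARs), using the dimensions quoted in the figure captions. The function name `joint_embedding` and the random arrays are assumptions made for illustration only; they stand in for real ResNet-152 outputs and are not taken from the repository code.

```python
import numpy as np


def joint_embedding(feature_map, llar_features):
    """Flatten an (H, W, D) feature map and append (N, D) region features."""
    h, w, d = feature_map.shape
    hlar = feature_map.reshape(h * w, d)  # one row per grid cell
    if llar_features.shape[1] != d:
        raise ValueError("HLAR and LLAR feature sizes must match")
    # Stack grid cells first, object-level regions last.
    return np.concatenate([hlar, llar_features], axis=0)


# Random placeholders standing in for real encoder outputs.
rng = np.random.default_rng(0)
feature_map = rng.standard_normal((14, 14, 2048)).astype(np.float32)
llar = rng.standard_normal((10, 2048)).astype(np.float32)

regions = joint_embedding(feature_map, llar)
print(regions.shape)
```

With these inputs the decoder would attend over 196 grid regions plus 10 object regions, i.e. 206 candidates per decoding step.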

Figure 1 - Pipeline of the panoptic attention regions. The image is first fed to the Faster R-CNN to produce ten bounding boxes of the objects within the image. These bounding boxes are used as the low-level attention regions (LLARs). The bounding boxes are then fed to a CNN such as ResNet-152 in order to embed the augmented attention regions. The last fully connected layer (1x2048 dimension) is taken as the feature vector representation. The LLARs are then stacked and fed to the LSTM (decoder).

Figure 2 - Pipeline of the joint embedding of the high-level attention regions (HLAR) used in the original paper and the LLARs obtained through the procedure shown in Figure 1. The image is fed to the ResNet-152 CNN and the feature map of a convolutional layer is taken. A 14x14 feature map is generated with each grid cell being of dimension 1x2048. We flatten this out to give the embedding a dimension of 196x2048. The HLARs are then concatenated with the LLARs to form an embedding with dimension 206x2048.

The standard decoding technique is known as greedy search, which selects the most likely word at each step in the output sequence of the LSTM. This approach has the benefit that it is very fast, but the quality of the final output sequences may be far from optimal.

In contrast to greedy search, beam search expands all possible next steps and keeps the k most likely, where k is a user-specified parameter that controls the number of beams or parallel searches through the sequence of probabilities.
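The difference between the two decoding strategies can be sketched on a toy problem. In the example below, `toy_model` is a hypothetical stand-in for the LSTM decoder (it returns hand-picked next-word probabilities), and `<end>` is an assumed name for the terminating token. With `k=1` the search reduces to greedy decoding.

```python
import math


def beam_search(next_log_probs, k, max_len, end_token="<end>"):
    """Return finished hypotheses, best first, as (tokens, log_prob) pairs."""
    beams = [((), 0.0)]
    finished = []
    for _ in range(max_len):
        # Expand every live beam with every possible next token.
        candidates = []
        for tokens, score in beams:
            for token, log_p in next_log_probs(tokens).items():
                candidates.append((tokens + (token,), score + log_p))
        # Keep only the k most likely expansions.
        candidates.sort(key=lambda c: c[1], reverse=True)
        beams = []
        for tokens, score in candidates[:k]:
            if tokens[-1] == end_token:
                finished.append((tokens, score))
            else:
                beams.append((tokens, score))
        if not beams:
            break
    finished.sort(key=lambda c: c[1], reverse=True)
    return finished


def toy_model(prefix):
    """Hypothetical decoder: hand-picked next-word probabilities."""
    table = {
        (): {"a": 0.6, "the": 0.4},
        ("a",): {"dog": 0.5, "cat": 0.5},
        ("the",): {"dog": 0.9, "cat": 0.1},
    }
    probs = table.get(prefix, {"<end>": 1.0})
    return {token: math.log(p) for token, p in probs.items()}


greedy = beam_search(toy_model, k=1, max_len=5)[0]
beam = beam_search(toy_model, k=2, max_len=5)[0]
print(greedy[0], round(math.exp(greedy[1]), 2))  # ('a', 'dog', '<end>') 0.3
print(beam[0], round(math.exp(beam[1]), 2))      # ('the', 'dog', '<end>') 0.36
```

Greedy decoding commits to "a" because it is the most likely first word, and so misses the sequence with the higher overall probability that a beam width of 2 recovers.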

By using the object labels proposed by the Faster R-CNN, we can reinforce beam instances where the proposed object labels occur. This would ensure that instances that contain the correct objects are kept within the beam-width and chosen as the most likely generated caption. This would of course rely on the Faster R-CNN object labels being accurate.
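One possible way to reinforce beam instances is sketched below: each beam receives a fixed additive bonus on its log-score for every detected label it mentions. The additive form, the `bonus` value and the function name `reinforce_beams` are assumptions chosen for illustration; the scoring rule actually used in this project may differ.

```python
def reinforce_beams(beams, detected_labels, bonus=0.5):
    """Add `bonus` to a beam's log-score for each detected label it mentions."""
    labels = {label.lower() for label in detected_labels}
    rescored = []
    for tokens, score in beams:
        # Count distinct detected labels that appear in the caption.
        hits = labels.intersection(token.lower() for token in tokens)
        rescored.append((tokens, score + bonus * len(hits)))
    return sorted(rescored, key=lambda b: b[1], reverse=True)


beams = [
    (["a", "man", "on", "a", "bench"], -2.0),
    (["a", "dog", "on", "a", "beach"], -2.3),
]
reinforced = reinforce_beams(beams, ["dog", "beach"])
print(reinforced[0])
```

Here the second caption mentions both detected labels, so its score rises from -2.3 to -1.3 and it overtakes the caption the decoder originally preferred.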

Further, we could also reinforce words that are semantically similar to the proposed objects. This can be done by calculating the cosine similarity between the embeddings of the words proposed by the decoder and the object labels proposed by Faster R-CNN. A minimum cosine similarity threshold would have to be chosen to decide whether to reinforce the beam instance or not.
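The semantic matching step could look roughly like the sketch below, which compares word vectors by cosine similarity (one minus the cosine distance) against a threshold. The three-dimensional `toy_embeddings` and the threshold of 0.8 are made-up values for illustration; in practice the vectors would come from a pre-trained word embedding model.

```python
import math


def cosine_similarity(u, v):
    """Cosine of the angle between two equal-length vectors."""
    dot = sum(a * b for a, b in zip(u, v))
    norm = math.sqrt(sum(a * a for a in u)) * math.sqrt(sum(b * b for b in v))
    return dot / norm if norm else 0.0


def matches_label(word, labels, embeddings, threshold=0.8):
    """True if `word` is close enough to any detected label."""
    if word not in embeddings:
        return False
    return any(
        label in embeddings
        and cosine_similarity(embeddings[word], embeddings[label]) >= threshold
        for label in labels
    )


# Made-up vectors: "infant" and "baby" point in nearly the same direction.
toy_embeddings = {
    "infant": [0.9, 0.1, 0.0],
    "baby": [0.8, 0.2, 0.1],
    "beach": [0.0, 0.1, 0.9],
}

print(matches_label("infant", ["baby"], toy_embeddings))  # True
print(matches_label("beach", ["baby"], toy_embeddings))   # False
```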

During beam search inference, each fully generated caption will be rescored by combining the probability score produced by the LSTM and the probability score produced by a pre-trained language model such as BERT/GPT-2/GPT-3. This would suppress semantically unlikely captions generated during beam-search.

We only rescore once the beam instance has reached the terminating token. To evaluate a greater variety of proposals by the decoder, a larger beam-width is used.

Since access to GPT-3 is limited to a select few, we can try its open-source cousin GPT-J. The language model could also be fine-tuned on the annotations of image-captioning datasets.
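A minimal sketch of the rescoring step is given below, assuming the two scores are combined by linear interpolation of log-probabilities. The interpolation form, the `lm_weight` parameter (standing in for the "language model influence") and the `toy_lm` function are all assumptions for illustration; a real setup would query a pre-trained language model instead.

```python
def rescore(finished_beams, lm_log_prob, lm_weight=0.3):
    """Combine decoder and language-model log-probabilities for finished beams."""
    rescored = []
    for tokens, decoder_lp in finished_beams:
        combined = (1.0 - lm_weight) * decoder_lp + lm_weight * lm_log_prob(tokens)
        rescored.append((tokens, combined))
    return sorted(rescored, key=lambda b: b[1], reverse=True)


def toy_lm(tokens):
    """Hypothetical language model: prefers captions that start with an article."""
    return -1.0 if tokens[0] in {"a", "the"} else -6.0


finished = [
    (["a", "dog", "runs"], -3.0),
    (["dog", "a", "runs"], -2.8),
]
print(rescore(finished, toy_lm)[0][0])                 # ['a', 'dog', 'runs']
print(rescore(finished, toy_lm, lm_weight=0.0)[0][0])  # ['dog', 'a', 'runs']
```

Setting `lm_weight` to 0 recovers the decoder's own ranking, which makes the parameter a natural candidate for a grid search alongside the beam-width.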

For further improvement on the model, we test whether the model would benefit from augmented captions generated by a pre-trained language model. The Flickr8k dataset provides 5 human annotated captions per image. From the given captions, augmented captions could be generated by paraphrasing each given caption and doubling the captions per image from 5 to 10.
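The augmentation step can be pictured as follows. The `paraphrase` argument is a placeholder for whatever paraphrase generator is used; `toy_paraphrase` below is a trivial stand-in written only so that the sketch runs, and the image id is invented.

```python
def augment_captions(captions_by_image, paraphrase):
    """Append one paraphrase per original caption, doubling the caption count."""
    augmented = {}
    for image_id, captions in captions_by_image.items():
        augmented[image_id] = list(captions) + [paraphrase(c) for c in captions]
    return augmented


def toy_paraphrase(caption):
    """Trivial stand-in for a paraphrase model: swaps one word."""
    return caption.replace("running", "sprinting")


captions = {
    "example.jpg": [
        "two boys running on a beach",
        "boys are running near the sea",
        "children running along the shore",
        "two kids running on sand",
        "a pair of boys running outside",
    ]
}
augmented = augment_captions(captions, toy_paraphrase)
print(len(augmented["example.jpg"]))  # 10
```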

I've included the scores produced by the state-of-the-art models (on this dataset), as well as the models produced by my previous attempts. The baseline (Hard Attention) uses only high-level attention regions (HLAR) computed by the convolutional layers of a pre-trained object detection neural network, such as VGG or ResNet. The original paper uses VGG-16 to produce the attention regions; my implementation uses ResNet-152.

| Abbreviation | Description |
|---|---|
| JE | Joint embedding of the high-level attention regions produced by the convolutional layers and the low-level features produced by Faster R-CNN, Mask R-CNN or PanopticFPN |
| LLAR | Low-level attention regions represented by object-level bounding boxes or segmentation achieved by Faster R-CNN, Mask R-CNN or PanopticFPN |
| F/M/P | Faster R-CNN, Mask R-CNN and PanopticFPN respectively. Models used for localizing and cropping object-level attention features (LLAR), as well as for the object proposals used in rescoring during beam search. |
| OR | Indicates object class proposals reinforcement was implemented |
| BERT | Language model used to rescore beam instances |
| CA | Indicates additional captions were generated through paraphrase generation, increasing the captions per image from 5 to 10. |

| Model | BLEU-1 | BLEU-2 | BLEU-3 | BLEU-4 |
|---|---|---|---|---|
| Hard attention (Xu et al., 2016) | 67 | 45.7 | 31.4 | 21.3 |
| Google NIC (Vinyals et al., 2014) | 63 | 41 | 27 | - |
| Log Bilinear (Kiros et al., 2014) | 65.6 | 42.4 | 27.7 | 17.7 |
| Merge (Tanti et al., 2017) | 60.1 | 41.1 | 27.4 | 17.9 |
| Merge-EfficientNetB7-Glove-RV | 63.62 | 40.47 | 26.63 | 16.92 |
| Hard attention (my implementation) | 66.54 | 45.8 | 31.6 | 20.93 |
| Hard attention ResNet-101 | 66.73 | 45.45 | 31.81 | 22.14 |
| Hard Attention BERT | 67.67 | 46.56 | 32.35 | 22.40 |
| Hard attention CA | 67.57 | 47.32 | 32.97 | 22.94 |
| Hard Attention JE-F | 68.74 | 47.07 | 32.86 | 22.95 |
| Hard Attention JE-P | 68.43 | 47.77 | 33.63 | 24.42 |
| Hard Attention JE-P-BERT | 69.03 | 48.32 | 33.94 | 24.79 |
| Hard Attention JE-P-CA | 69.52 | 48.91 | 34.83 | 25.36 |
| Hard Attention JE-P-CA-BERT | 69.98 | 49.34 | 35.02 | 25.86 |

The following model variations did not improve on the original implementation.

| Model | BLEU-1 | BLEU-2 | BLEU-3 | BLEU-4 |
|---|---|---|---|---|
| Hard Attention LLAR-F | 56.52 | 39.42 | 28.34 | 11.14 |
| Hard Attention LLAR-P | 57.41 | 40.98 | 28.84 | 11.74 |
| Hard Attention JE-P-OR | 66.89 | 45.93 | 32.18 | 22.45 |
| Hard Attention JE-F-OR | 66.05 | 45.64 | 31.68 | 22.05 |

...

- Implement segmented attention regions from Faster R-CNN as LLARs

- Implement segmented attention regions from PanopticFPN (detectron2) as LLARs

- Implement segmented attention regions from Mask R-CNN as LLARs

- Beam search object class proposals reinforcement (using Faster R-CNN proposals)

- Beam search object class proposals reinforcement (using PanopticFPN proposals)

- Beam Search re-scoring by using language models

- Run GridSearch on beam-width and language model influence parameter

- Caption augmentation using language models

- Attention visualization for sample inference

- Use EfficientNet-B7 for encoding of low-level attention regions (Faster R-CNN bounding boxes) and extraction of feature maps for high-level attention regions.

- Add second layer of LSTM

- Experiment with different beam-search sizes

- Include additional evaluation metrics (CIDEr, METEOR, ROUGE-L, SPICE)

We make use of Faster R-CNN, which is an object detection network that produces bounding boxes along with class labels and probability scores.

We make use of a Faster R-CNN model pre-trained on MSCOCO and fine-tuned on the Flickr30k Entities dataset.

This paper/dataset presents Flickr30k Entities, which augments the 158k captions from Flickr30k with 244k coreference chains, linking mentions of the same entities across different captions for the same image, and associating them with 276k manually annotated bounding boxes. Such annotations are essential for continued progress in automatic image description and grounded language understanding. They enable us to define a new benchmark for localization of textual entity mentions in an image. This dataset was annotated using crowd-sourcing.

Due to a lack of datasets that provide not only paired sentences and images, but detailed grounding of specific phrases in image regions, most of these methods attempt to directly learn mappings from whole images to whole sentences. Not surprisingly, such models have a tendency to reproduce generic captions from the training data, and to perform poorly on compositionally novel images whose objects may have been seen individually at training time, but not in that combination.

Figure 3 - Example annotations from our dataset. In each group of captions describing the same image, coreferent mentions (coreference chains) and their corresponding bounding boxes are marked with the same color. On the left, each chain points to a single entity (bounding box). Scenes and events like “outside” or “parade” have no box. In the middle example, the people (red) and flags (blue) chains point to multiple boxes each. On the right, blue phrases refer to the bride, and red phrases refer to the groom. The dark purple phrases (“a couple”) refer to both of these entities, and their corresponding bounding boxes are identical to the red and blue ones.

Our new dataset, Flickr30k Entities, augments Flickr30k by identifying which mentions among the captions of the same image refer to the same set of entities, resulting in 244,035 coreference chains, and which image regions depict the mentioned entities, resulting in 275,775 bounding boxes. Figure 3 illustrates the structure of our annotations on three sample images.

Traditional object detection assumes a predefined list of semantically distinct classes with many training examples for each. By contrast, in phrase localization, the number of possible phrases is very large, and many of them have just a single example or are completely unseen at training time. Also, different phrases may be very semantically similar (e.g., infant and baby), which makes it difficult to train separate models for each. And of course, to deal with the full complexity of this task, we need to take into account the broader context of the whole image and sentence, for example, when disambiguating between multiple entities of the same type.

The comparison between MSCOCO and Flickr30k Entities is shown in the table below:

| Dataset | Images | Objects Per Image | Object Categories | Captions Per Image |
|---|---|---|---|---|
| Flickr30k Entities (Plummer et al., 2015) | 31,783 | 8.7 | 44,518 | 5 |
| MSCOCO (Lin et al., 2014) | 328,000 | 7.7 | 91 | 5 |

For our dataset, we define Object Categories as the set of unique phrases after filtering out non-nouns in our annotated phrases (note that Scene Graph and Visual Genome also have very large numbers in this column because they correspond essentially to the total numbers of unique phrases).

As the comparison shows, the Flickr30k Entities dataset has a far more diverse set of object categories than the MSCOCO dataset, even though MSCOCO contains roughly 10 times more images.

Object categories were grouped into 9 classes, namely: people, clothing, bodyparts, animals, vehicles, instruments, scene, other.

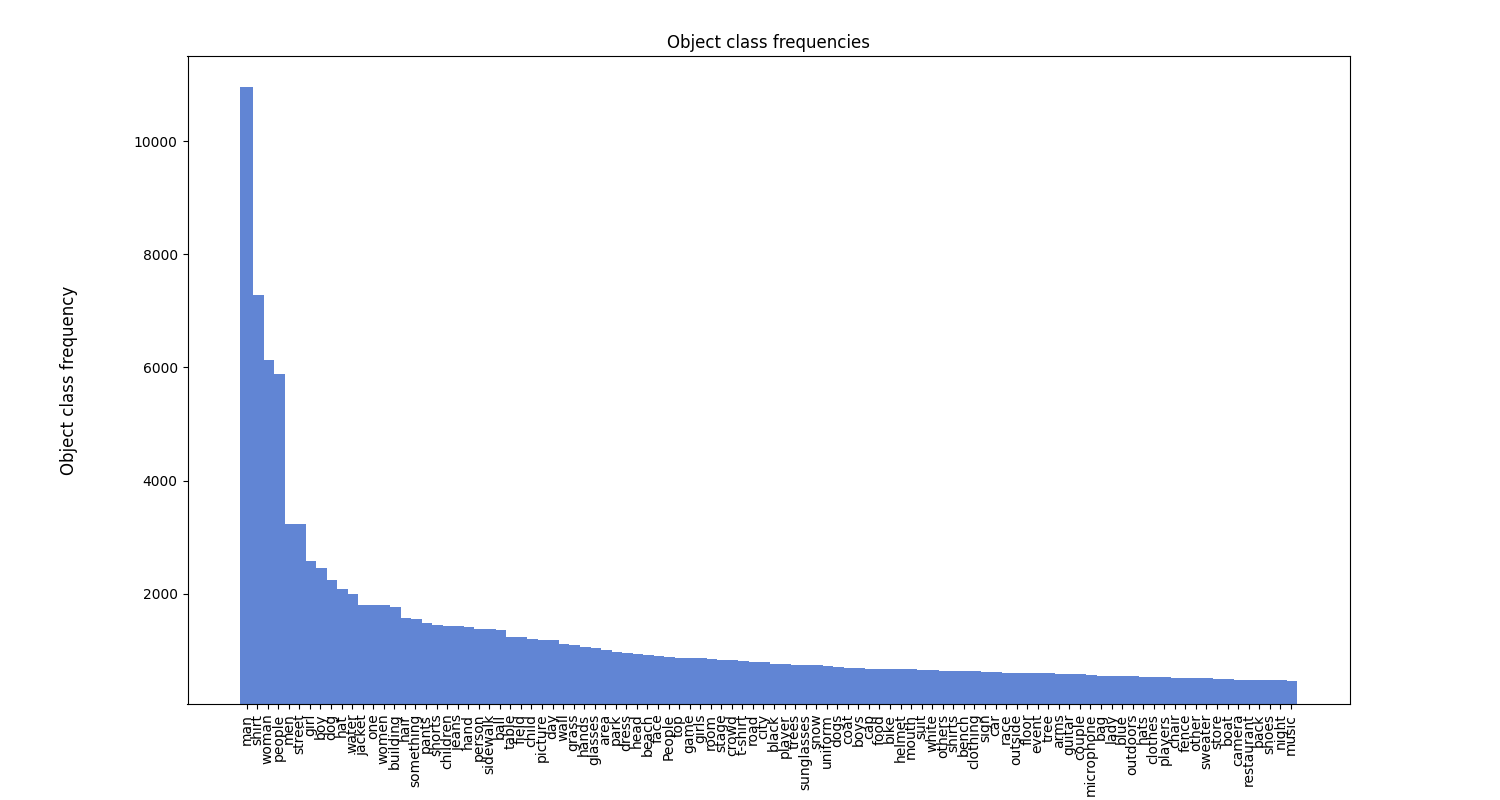

For our purpose of object detection and localization, these groupings are not useful, as we want the specific object class. We use the original 44,518 object classes, some of which are ambiguous. For instance, there are separate object classes for infant and baby, even though the two categories have the same semantic meaning. For this reason some pre-processing is needed. Below is the object class distribution of the top 100 object categories before pre-processing.

From observing the class labels above, we notice there are some non-visual or nonsensical classes such as one. We also note some semantically similar classes such as shirt and t-shirt.

The following pre-processing was done to the Flickr30k Entities annotations:

- Remove non-visual object class instances

- Remove nonsensical object class instances

- Convert all object class names to lowercase

- Limit the object classes to the top-100 most frequently occurring object classes and disregard the remaining object class instances

By keeping only the top-100 object classes, we significantly reduce the number of object classes Faster R-CNN has to predict, which will hopefully lead to more informative attention regions for the Hard Attention model.
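The pre-processing steps listed above could be sketched as follows. The `(image_id, label, box)` tuple layout, the `blocklist` of non-visual or nonsensical labels and the tiny sample data are assumptions for illustration; the real Flickr30k Entities annotations are stored in a different format and would need to be parsed first.

```python
from collections import Counter


def preprocess_labels(annotations, blocklist, top_k=100):
    """Lowercase labels, drop blocklisted ones, keep only the top_k classes."""
    cleaned = [
        (image_id, label.lower(), box)
        for image_id, label, box in annotations
        if label.lower() not in blocklist
    ]
    # Count class frequencies after cleaning, then keep the most frequent ones.
    counts = Counter(label for _, label, _ in cleaned)
    keep = {label for label, _ in counts.most_common(top_k)}
    return [annotation for annotation in cleaned if annotation[1] in keep]


# Invented sample annotations: (image id, class label, bounding box).
sample = [
    ("img1", "Man", (0, 0, 10, 10)),
    ("img1", "man", (5, 5, 20, 20)),
    ("img2", "man", (1, 1, 8, 8)),
    ("img2", "Shirt", (2, 2, 6, 6)),
    ("img3", "shirt", (3, 3, 9, 9)),
    ("img3", "one", (0, 0, 4, 4)),
    ("img3", "dog", (4, 4, 12, 12)),
]
filtered = preprocess_labels(sample, blocklist={"one"}, top_k=2)
print(sorted({label for _, label, _ in filtered}))  # ['man', 'shirt']
```

In this sample, "one" is removed as a nonsensical class and "dog" is discarded because it falls outside the two most frequent classes.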