FastFaces

A PyTorch implementation of a compressed deep learning architecture using low-rank factorization.

About

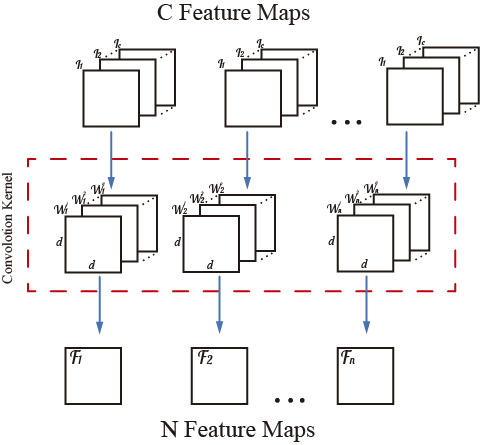

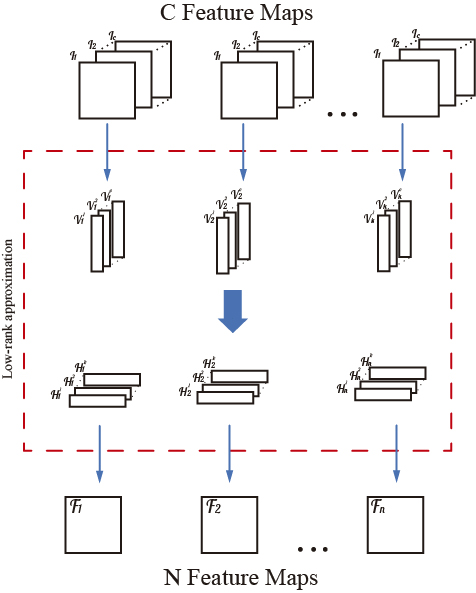

The goal is to speed up and compress a network by eliminating redundancy in the 4D tensors that serve as convolutional kernels. The proposed tensor decomposition replaces the convolutional kernel with two consecutive kernels of lower rank, as shown in the figure.

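More specifically, following the notation of [1], if the convolutional layer has $C$ inputs and $N$ outputs, with kernel $W \in \mathbb{R}^{N \times C \times d \times d}$, the main objective is to find two intermediate kernels $V \in \mathbb{R}^{K \times C \times d \times 1}$ and $H \in \mathbb{R}^{N \times K \times 1 \times d}$ such that the kernel they generate, $\tilde{W}$, approximates $W$, constraining the rank of the layer to be $K$.

The new proposed tensor has the form:

$$\tilde{W}_n^c = \sum_{k=1}^{K} H_n^k \left(V_k^c\right)^T$$

where $H_n^k$ and $V_k^c$ are the length-$d$ horizontal and vertical filters. Given that convolution is distributive over addition, we can produce the same number of feature maps by performing two separate convolutions, one after the other, on the input $Z$:

$$\tilde{W}_n * Z = \sum_{c=1}^{C} \sum_{k=1}^{K} H_n^k \left(V_k^c\right)^T * Z^c = \sum_{k=1}^{K} H_n^k * \left( \sum_{c=1}^{C} V_k^c * Z^c \right)$$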
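The two-step convolution described above can be sketched in PyTorch as follows. This is a minimal illustration, not the code of this repository: the class name `LowRankConv2d`, the helper `count_parameters`, and the sizes `C`, `N`, `d`, `K` (input channels, output channels, kernel size, rank) are assumptions chosen for the example.

```python
import torch
import torch.nn as nn


class LowRankConv2d(nn.Module):
    """Hypothetical module: a d x d convolution replaced by a vertical (d x 1)
    convolution with K outputs followed by a horizontal (1 x d) convolution."""

    def __init__(self, in_channels, out_channels, kernel_size, rank, padding=0):
        super().__init__()
        # Vertical filters: C -> K channels, kernel d x 1.
        self.vertical = nn.Conv2d(in_channels, rank, kernel_size=(kernel_size, 1),
                                  padding=(padding, 0), bias=False)
        # Horizontal filters: K -> N channels, kernel 1 x d (carries the bias).
        self.horizontal = nn.Conv2d(rank, out_channels, kernel_size=(1, kernel_size),
                                    padding=(0, padding), bias=True)

    def forward(self, x):
        return self.horizontal(self.vertical(x))


def count_parameters(module):
    """Total number of learnable values in a module."""
    return sum(p.numel() for p in module.parameters())


# Illustrative sizes only; they are not taken from the SSD model.
C, N, d, K = 64, 128, 3, 16
original = nn.Conv2d(C, N, kernel_size=d, padding=1)
compressed = LowRankConv2d(C, N, kernel_size=d, rank=K, padding=1)

x = torch.randn(1, C, 32, 32)
print(original(x).shape, compressed(x).shape)
ratio = 100 * count_parameters(compressed) / count_parameters(original)
print(f"compressed layer keeps {ratio:.1f} % of the original values")
```

Both layers produce feature maps of the same shape, while the factorized one stores roughly `K * d * (C + N)` weights instead of `N * C * d * d`, so the saving grows as `K` shrinks.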

As the main goal is to maintain the overall accuracy, it is required to keep the filter products as close as possible to the original ones. The Frobenius norm can be used to define the objective function to be minimized, leading to the problem:

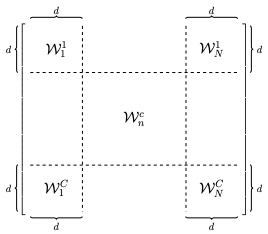

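This problem can be solved in a lower-dimensional space according to [1], using the linear mapping $T: \mathbb{R}^{C \times d \times d \times N} \to \mathbb{R}^{Cd \times dN}$ that sends tensor element $(i_1, i_2, i_3, i_4)$ to matrix entry $(j_1, j_2)$, with

$$j_1 = (i_1 - 1)d + i_2, \qquad j_2 = (i_4 - 1)d + i_3$$

Here $C$ and $N$ are the numbers of input and output channels and $d$ is the kernel size. This mapping corresponds to aligning all the filters together in a matrix, as shown in the picture.

Finally, the SVD of the matrix is computed, and the product of the first $K$ singular values and vectors is mapped back to the original space, where $K$ is the target rank. That is the solution to the original minimization problem. See [1] for a detailed proof.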
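The procedure above (align the filters in a matrix, truncate the SVD, map the factors back) can be sketched as follows. This is a hedged illustration under the PyTorch weight layout `(N, C, d, d)`; the function names `decompose_conv_weight` and `reconstruct` are hypothetical, and splitting the square root of each singular value between the two factors follows the construction in [1].

```python
import torch
import torch.nn.functional as F


def decompose_conv_weight(weight, rank):
    """Split an (N, C, d, d) kernel into a vertical and a horizontal kernel.

    Returns V with shape (rank, C, d, 1) and H with shape (N, rank, 1, d).
    """
    N, C, d, _ = weight.shape
    # Align all filters in a (C*d) x (N*d) matrix: row = (c, i), column = (n, j).
    M = weight.permute(1, 2, 0, 3).reshape(C * d, N * d)
    U, S, Qh = torch.linalg.svd(M, full_matrices=False)
    # Keep the first `rank` singular triplets; split sqrt(S) between both factors.
    scale = S[:rank].sqrt()
    U_k = U[:, :rank] * scale       # (C*d, rank)
    Q_k = Qh[:rank, :].T * scale    # (N*d, rank)
    # Map the factors back to convolution kernels.
    V = U_k.reshape(C, d, rank).permute(2, 0, 1).unsqueeze(-1)  # (rank, C, d, 1)
    H = Q_k.reshape(N, d, rank).permute(0, 2, 1).unsqueeze(2)   # (N, rank, 1, d)
    return V.contiguous(), H.contiguous()


def reconstruct(V, H):
    """Rebuild the approximate (N, C, d, d) kernel: sum_k V[k, c, i] * H[n, k, j]."""
    return torch.einsum("kci,nkj->ncij", V.squeeze(-1), H.squeeze(2))


torch.manual_seed(0)
N, C, d, K = 6, 4, 3, 5  # illustrative sizes only
W = torch.randn(N, C, d, d)
x = torch.randn(1, C, 8, 8)

V, H = decompose_conv_weight(W, K)
# Squared Frobenius error between the original and the low-rank kernel.
approx_error = (W - reconstruct(V, H)).pow(2).sum()

# Two consecutive convolutions give the same result as one convolution
# with the reconstructed low-rank kernel.
y_low_rank = F.conv2d(F.conv2d(x, V), H)
y_equiv = F.conv2d(x, reconstruct(V, H))
print(approx_error.item(), torch.allclose(y_low_rank, y_equiv, atol=1e-3))
```

With `rank` set to the full rank of the aligned matrix, `reconstruct(V, H)` recovers the original kernel up to floating-point error. For smaller ranks, the squared error equals the sum of the squared discarded singular values.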

Results.

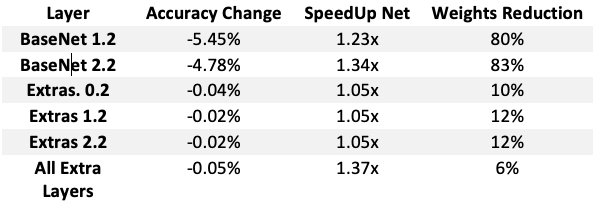

The method was tested using the public implementation of the Single Shot MultiBox Detector (SSD) models provided by https://github.com/qfgaohao/pytorch-ssd, on a private dataset provided by the public transportation system of Bogotá, Colombia, with the aim of developing efficient head-count software. We were concerned with the speedup and the change in performance for different layers. The performance change is relative to the baseline model, which has an accuracy of 81.05 % and a total of 6,601,011 parameters. The values in the weights reduction column represent the percentage of the original number of values in a particular layer that is used in the compressed model.

Reference.

[1] Cheng Tai, Tong Xiao, Yi Zhang, Xiaogang Wang, and Weinan E. Convolutional neural networks with low-rank regularization. CoRR, abs/1511.06067, 2016.