Over the last 10 years, many threat groups have employed stegomalware or other steganography-based techniques to attack organizations in all sectors and in all regions of the world. Some examples are APT15/Vixen Panda, APT23/Tropic Trooper, APT29/Cozy Bear, APT32/OceanLotus, APT34/OilRig, APT37/ScarCruft, APT38/Lazarus Group, Duqu Group, Turla, Vawtrak, Powload, Lokibot, Ursnif and IcedID, among others. To mitigate the impact of these attacks, different proposals have been published. At Black Hat USA 2022 and Black Hat Asia 2023 we released the Stegowiper tool, which tries to reduce the success of these campaigns.

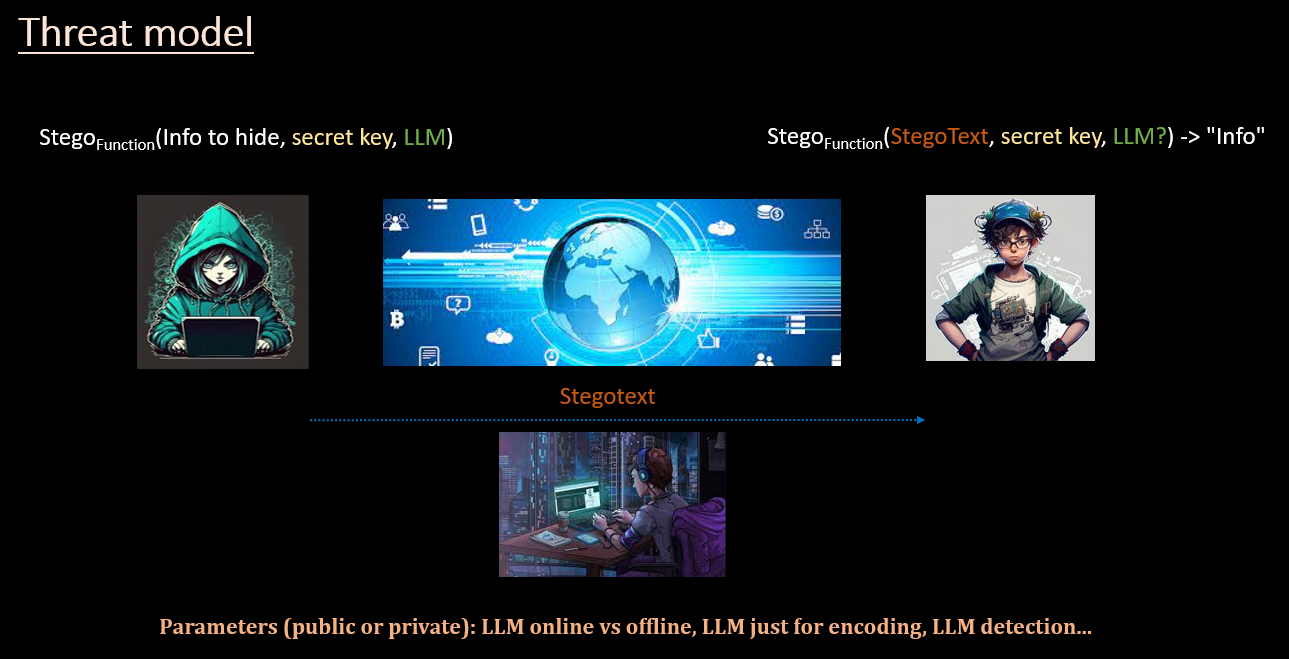

However, offensive capabilities are necessary in many scenarios, and some of them are related to enhanced privacy and censorship-resistant mechanisms.

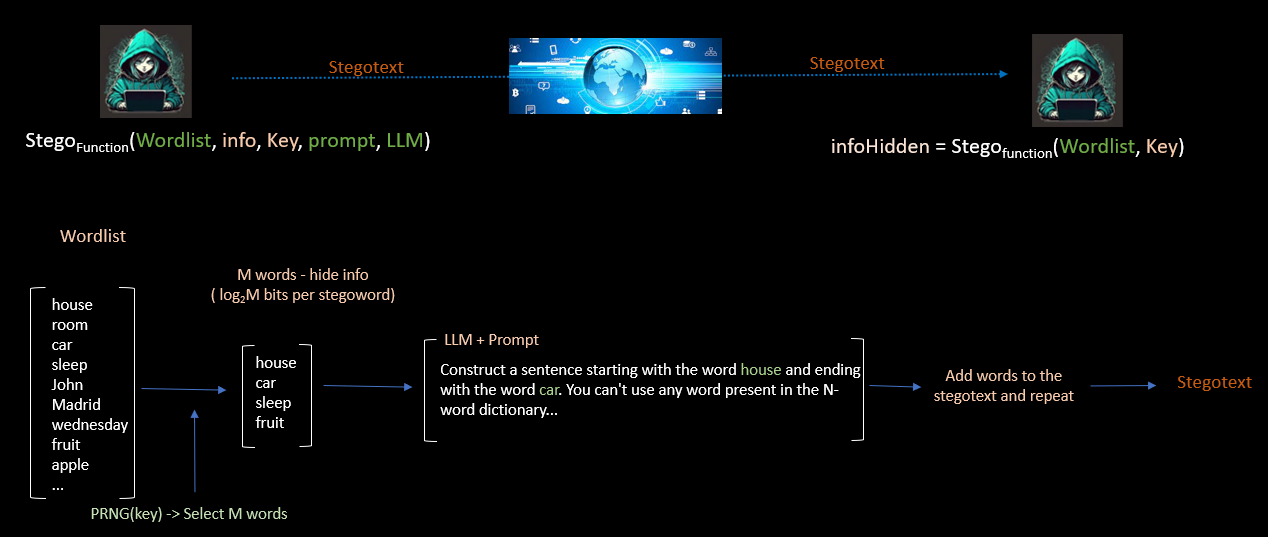

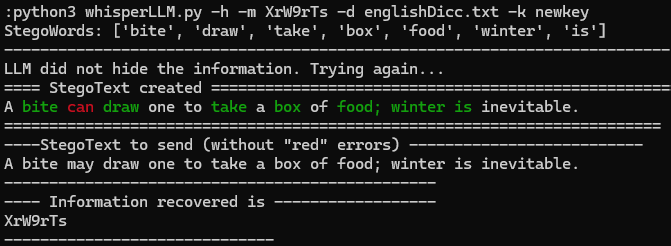

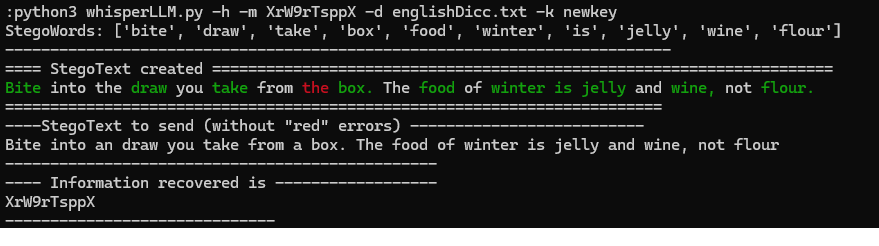

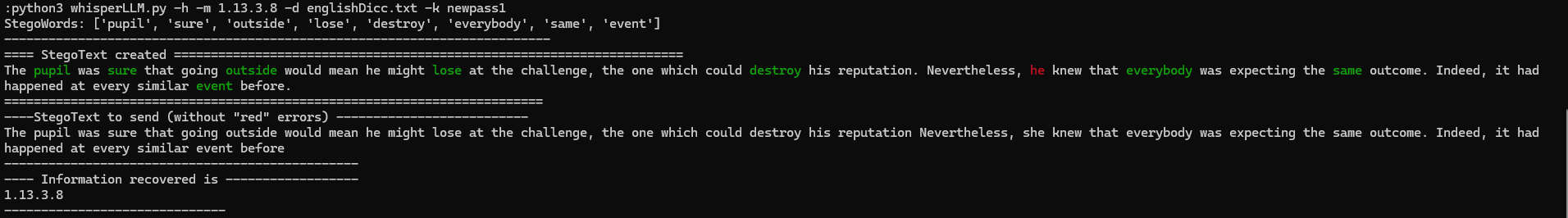

This tool uses an LLM to generate stegotexts in various languages (e.g., English) that hide useful information in a way that may go unnoticed. Because natural-language text is so varied and so widely distributed, this is an interesting approach and one that is more difficult to neutralize.

The sender uses the LLM to hide the desired information. Depending on the technique used, the receiver might also need the LLM to retrieve it. To ease deployment in offensive environments with the smallest possible detection footprint, the technique implemented here does not require the receiver to have the LLM to decode the information.

How much information can be hidden?

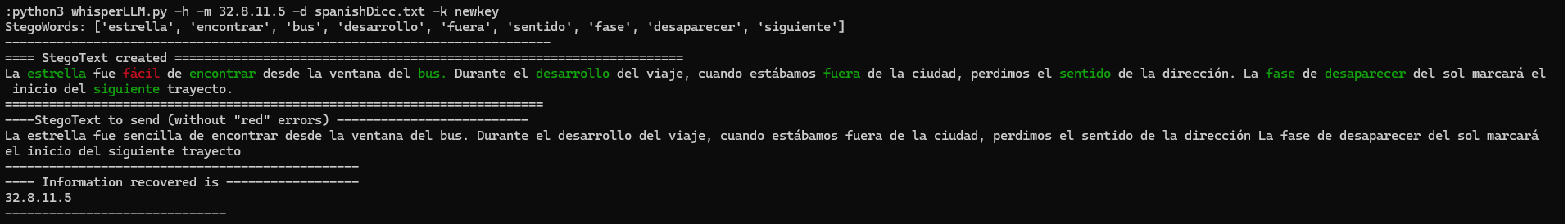

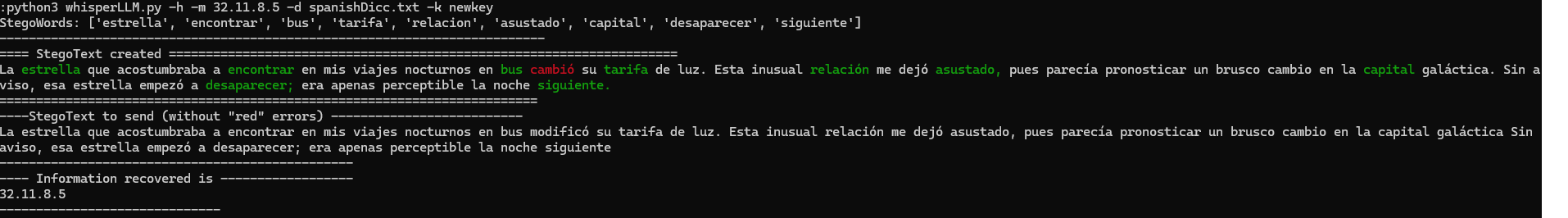

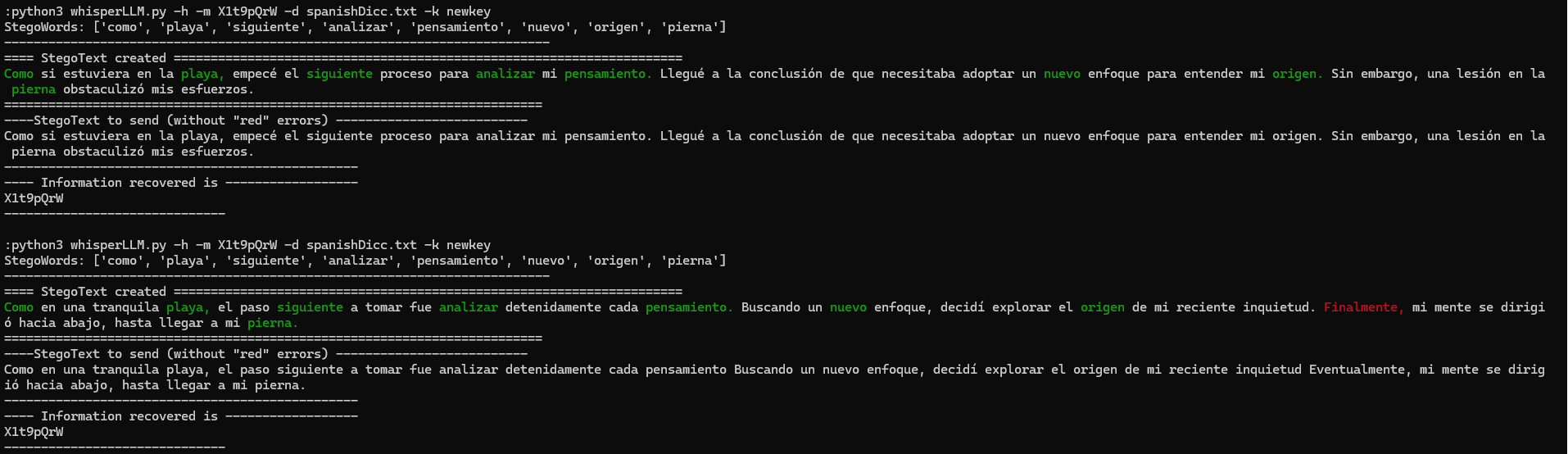

The technique has been designed to generate texts with very good linguistic quality. The larger the text, the greater the number of errors, and these require a correction process that can take some time. In our tests, and for practical scenarios, the tool proves useful for payloads ranging from a few hundred to a few thousand bits. This amount is adequate for exchanging configuration information (e.g. in a command & control model), location information (IPs, emails, URIs, Tor addresses, ...), cryptographic keys, etc.

IPv4/IPv6 (32/128 bits)

Cryptographic keys (128-4096 bits)

Seed (random number) (48-128 bits)

Phone number (44 bits...)

Compacted URLs (5 chars -> 40 bits)

Text in a broad sense: 2000 hidden bits / 6 bits per char = 333 hidden chars

GPS coordinates (latitude/longitude) (80 bits...)

Tor address / Tor hidden services (96 bits..., 6 bits per char)

URL of 56 chars -> 336 bits
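As a rough way to size a payload, the figures above can be turned into a count of stego-words. The sketch below assumes the 8 bits per stego-word used by the technique described in the following steps; the function names are illustrative and are not part of the tool.

```python
import math

BITS_PER_STEGOWORD = 8  # index into a 256-word dictionary (2**8 = 256)


def stegowords_needed(payload_bits: int) -> int:
    """Stego-words required to carry a payload of the given size."""
    return math.ceil(payload_bits / BITS_PER_STEGOWORD)


def chars_that_fit(hidden_bits: int, bits_per_char: int = 6) -> int:
    """Whole characters that fit in a bit budget with a fixed-width encoding."""
    return hidden_bits // bits_per_char


# Payload sizes taken from the list above.
payloads = {
    "IPv4 address": 32,
    "IPv6 address": 128,
    "128-bit key": 128,
    "56-char URL at 6 bits/char": 56 * 6,
}
for name, bits in payloads.items():
    print(f"{name}: {bits} bits -> {stegowords_needed(bits)} stego-words")

print(chars_that_fit(2000))  # 2000 // 6 = 333
```

The cover text itself will be longer than the stego-word count, since the LLM adds connecting words between stego-words.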

- Select a very large dictionary of words in the language in which the stegotext will be written.

- Sender and receiver share a key.

- The key is used to generate random 256-word dictionaries. There will be as many different dictionaries as there are generated words that hide information.

- Each dictionary has 256 = 2^8 words, so each word encodes an 8-bit value through its index in the dictionary.

- The information to be hidden is cut into blocks of 8 bits.

- The first 8-bit block is used to select a word from the first dictionary: the 8 bits are the index of the word to read. This process is repeated until enough words have been generated to carry the information to be hidden. Each new iteration uses a new 8-bit block and a new random dictionary.

- The result is N words that make up the stegotext. For example, to hide 32 bits we need to create 4 stego-words (8*4=32).

- At this point the stegotext hides information but has no linguistic validity (no syntax, semantics or coherence). We use an LLM to correct this.

- We give the LLM a prompt along the following lines: I want you to create a meaningful text using the N generated words. The conditions are simple: you have to use the words in order without modifying them. Between two words you can insert as many words as you want, with the only condition that the inserted words cannot be in the dictionary of the word that the receiver expects to decode next. If the LLM is able to do this, the text will read as human-written and the receiver will not need the LLM to retrieve the hidden information. For example, given the words house and truck, the resulting stegotext can use any number of words between house and truck, with the only condition that none of them belongs to the dictionary from which truck was extracted.

- How to decode: the receiver needs the same large word dictionary in order to generate the specific dictionaries. "Large" does not mean a huge file; a few thousand words might be enough.

- The receiver receives a potential stegotext. With the key shared with the sender, it generates a sufficient number of dictionaries to try to retrieve the information.

- The receiver generates the first 256-word dictionary and starts reading the stegotext. If it finds a word that is in the dictionary, it extracts its index (the hidden information); if a word is not in the dictionary, it discards it.

- Once it has found a word from the first dictionary, it generates the second dictionary and continues reading the stegotext, looking for any word present in that dictionary. If it finds one, it extracts its index (the hidden information); otherwise it discards the word and continues reading. This process is repeated until the stegotext ends and the information has been retrieved.

- Thanks to the dictionaries and the process of discarding words, the receiver does not need the LLM to retrieve the hidden information.
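The encoding and decoding steps above can be sketched as follows. This is a minimal illustration and not the tool's code: the way each dictionary is derived from the key (SHA-256 of the key plus the word position, used to seed Python's `random`), the placeholder word list `MASTER`, the tokenizer, and the `fake_cover_text` stand-in for the LLM step are all assumptions made for the example.

```python
import hashlib
import random
import re

DICT_SIZE = 256  # 2**8 words, so each index carries 8 bits

# Placeholder for the large shared word list (a real one holds actual words).
MASTER = [f"word{i}" for i in range(2000)]
KEY = b"shared secret"  # hypothetical key shared by sender and receiver


def dictionary(master, key, position):
    """Derive the 256-word dictionary for the stego-word at `position`."""
    seed = hashlib.sha256(key + position.to_bytes(4, "big")).digest()
    return random.Random(seed).sample(master, DICT_SIZE)


def encode(payload, master, key):
    """Map each 8-bit block to the word at that index of a fresh dictionary."""
    return [dictionary(master, key, i)[byte] for i, byte in enumerate(payload)]


def decode(text, master, key):
    """Read the text word by word, discarding words outside the expected dictionary."""
    out = bytearray()
    lookup = {w: i for i, w in enumerate(dictionary(master, key, 0))}
    for token in re.findall(r"[a-z0-9]+", text.lower()):
        if token in lookup:
            out.append(lookup[token])  # the index is the hidden byte
            lookup = {w: i for i, w in enumerate(dictionary(master, key, len(out)))}
    return bytes(out)


def fake_cover_text(stegowords, master, key):
    """Stand-in for the LLM: pad with words outside the expected dictionary."""
    rng = random.Random(0)
    words = []
    for i, stegoword in enumerate(stegowords):
        banned = set(dictionary(master, key, i))
        fillers = [w for w in master if w not in banned]
        words += rng.sample(fillers, 2) + [stegoword]
    return " ".join(words)


payload = bytes([192, 168, 1, 10])  # an IPv4 address: 32 bits -> 4 stego-words
stegowords = encode(payload, MASTER, KEY)
cover = fake_cover_text(stegowords, MASTER, KEY)
recovered = decode(cover, MASTER, KEY)
print(stegowords, recovered == payload)
```

Python's `random` module is not a cryptographic generator, and the output of `sample` is not guaranteed to be stable across Python versions, so a real deployment would probably want a keyed construction that both sides can reproduce exactly.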

Tool soon...

LLMs sometimes do not follow the instructions they are given, so it is necessary to vary the prompts and apply software corrections in order to obtain quality stegotexts. We are currently working on automating the fixes for the most likely problems.

Two common errors are the following:

- The LLM sometimes ignores some of the generated words (stegowords) that hide the information and leaves them out of the text. In this case it is necessary to perform more iterations with the LLM and modify the prompt so that it generates a stegotext that meets the desired conditions.

- The larger the stegotext, the more likely it is that errors will hinder decoding. A common error is the appearance of a word that belongs to the dictionary from which the receiver expects to decode, but that is not the word hiding the information. In the examples, the words in red mark this situation: the receiver expects to decode the next word, shown in green, but the LLM has inserted a word from the expected dictionary before it, so a synchronization problem occurs (the LLM has ignored the prompt instruction that forbids this). To solve this problem, the tool applies several techniques to replace the red words with one or more words that are not in the dictionary expected at that point.
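Both errors can be detected on the sender side before a stegotext is sent. The sketch below is an assumption about how such a check might look, not the tool's implementation: `check_stegotext` is a hypothetical helper, and the dictionaries are tiny toy sets rather than 256-word ones so that the example stays readable.

```python
import re


def check_stegotext(text, stegowords, dictionaries):
    """Return (missing, stray) for a candidate stegotext.

    missing: stego-words the text never reached, in order.
    stray:   (token index, word) pairs that belong to the dictionary the
             receiver expects at that point but are not the intended word.
    """
    tokens = re.findall(r"[a-z0-9']+", text.lower())
    position = 0  # index of the stego-word the receiver expects next
    stray = []
    for index, token in enumerate(tokens):
        if position == len(stegowords):
            break  # every stego-word has been found
        if token == stegowords[position]:
            position += 1
        elif token in dictionaries[position]:
            stray.append((index, token))  # would desynchronize the receiver
    return stegowords[position:], stray


# Toy dictionaries (real ones hold 256 words each).
dictionaries = [
    {"house", "river", "lamp"},
    {"truck", "garden", "window"},
]
stegowords = ["house", "truck"]

good = "The old house stood quiet until a truck arrived"
bad = "The old house had a garden where a truck was parked"
incomplete = "The old house stood quiet"

print(check_stegotext(good, stegowords, dictionaries))
print(check_stegotext(bad, stegowords, dictionaries))
print(check_stegotext(incomplete, stegowords, dictionaries))
```

A non-empty `missing` list corresponds to the first error and calls for another LLM iteration; the entries in `stray` correspond to the red words, which would then be replaced with words outside the expected dictionary.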

We have studied numerous LLMs, and currently the one that gives the best results is GPT-4, which is the one the tool uses. We are now integrating Mixtral and Llama 2 so that the tool can also work with models that run locally.