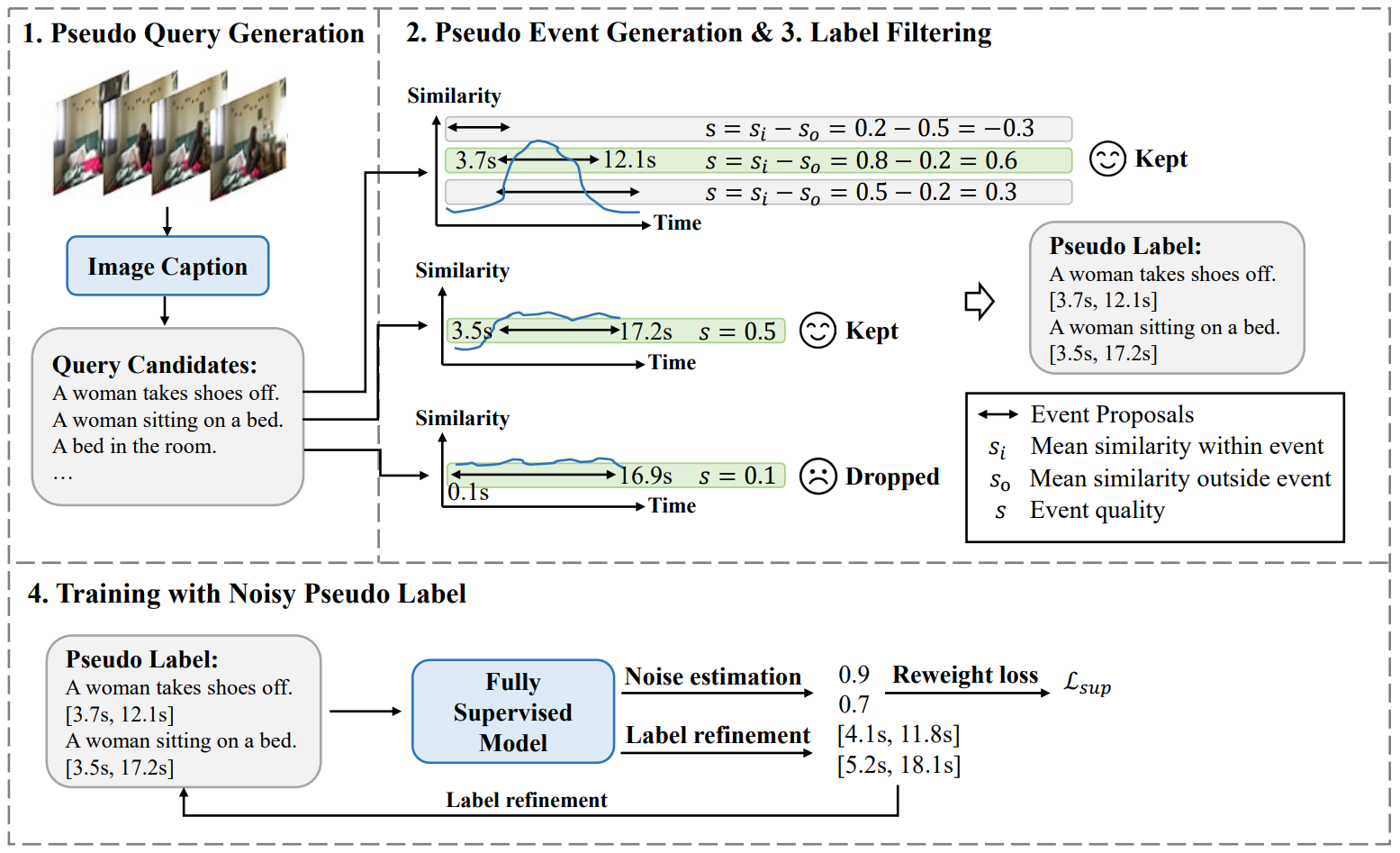

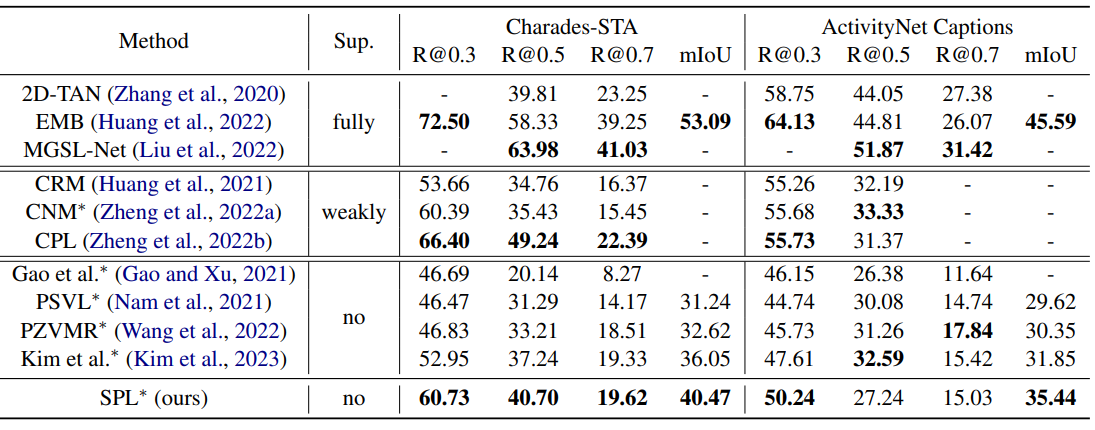

In this work, we propose the Structurebased Pseudo Label generation (SPL) framework for the zero-shot video sentence localization task, which learns with only video data without any annotation. We first generate free-form interpretable pseudo queries before constructing query-dependent event proposals by modeling the event temporal structure. To mitigate the effect of pseudolabel noise, we propose a noise-resistant iterative method that repeatedly re-weight the training sample based on noise estimation to train a grounding model and correct pseudo labels. Experiments on the ActivityNet Captions and Charades-STA datasets demonstrate the advantages of our approach.

Our paper was accepted by ACL-2023. [Paper] [Project]

We provide the generated pseudo labels for the ActivityNet Captions and Charades-STA datasets in EMB/data/dataset/activitynet/train_pseudo.json and EMB/data/dataset/charades/charades_sta_train_pseudo.txt. If you need to generate pseudo labels by yourself, please follow the instructions.

We use the BLIP model to generate pseudo labels. We provide pre-extracted BLIP captions and features at this link. If you download the features and captions we provide, please proceed directly to step 3.

Please clone BLIP and copy the following files into the directory for BLIP:

git clone git@github.com:salesforce/BLIP.git

cp captions_generator.py feature_extractor.py BLIP

cd BLIPPlease follow BLIP's instruction to prepare the python enviroment.

You can download our generated captions from here. You can also generate captions using the following script.

To generate captions, please run:

# Charades-STA

python captions_generator.py --video_root PATH_TO_VIDEO --save_root PATH_TO_SAVE_CAPTIONS

# ActivityNet Captions

python captions_generator.py --video_root PATH_TO_VIDEO --save_root PATH_TO_SAVE_CAPTIONS --stride 16 --num_stnc 5You can download our extracted features from here. You can also extract the features using the following scripts.

To extract the visual features, please run:

# Charades-STA

python feature_extractor.py --input_root PATH_TO_VIDEO --save_root PATH_TO_SAVE_VISUAL_FEATURES

# ActivityNet Captions

python feature_extractor.py --input_root PATH_TO_VIDEO --save_root PATH_TO_SAVE_VISUAL_FEATURES --stride 16To extract the caption features, please run:

python feature_extractor.py --input_root PATH_TO_SAVED_CAPTIONS --save_root PATH_TO_SAVE_CAPTION_FEATURES --extract_textTo generate the pseudo labels, please run:

# leave BLIP's directory

cd ..

# Charades-STA

python pseudo_label_generation.py --dataset charades --video_feat_path PATH_TO_SAVED_VISUAL_FEATURES --caption_feat_path PATH_TO_SAVED_CAPTION_FEATURES --caption_path PATH_TO_SAVED_CAPTIONS

# ActivityNet Captions

python pseudo_label_generation.py --dataset activitynet --num_stnc 4 --stnc_th 0.9 --stnc_topk 1 --video_feat_path PATH_TO_SAVED_VISUAL_FEATURES --caption_feat_path PATH_TO_SAVED_CAPTION_FEATURES --caption_path PATH_TO_SAVED_CAPTIONSNote: On the ActivityNet Captions dataset, due to the low efficiency of the sliding window method for generating event proposals, we pre-reduced the number of event proposals by clustering features. The processed proposals are stored in data/activitynet/events.pkl. The preprocessing script is event_preprocess.py.

We use EMB as our grounding model and train it using our generated pseudo labels. Please follow EMB's instruction to download features and prepare python envrionment.

To train EMB with generated pseudo labels, please run:

cd EMB

# Charades-STA

python main.py --task charades --mode train --deploy

# ActivityNet Captions

python main.py --task activitynet --mode train --deployDownload our trained models from here and put them in EMB/sessions/. Create the session folder if not existed.

To evaluate the trained model, please run:

cd EMB

# Charades-STA

python main.py --task charades --mode test --model_name SPL

# ActivityNet Captions

python main.py --task activitynet --mode test --model_name SPLWe appreciate EMB for its implementation. We use EMB as our grounding model.

@inproceedings{zheng-etal-2023-generating,

title = "Generating Structured Pseudo Labels for Noise-resistant Zero-shot Video Sentence Localization",

author = "Zheng, Minghang and

Gong, Shaogang and

Jin, Hailin and

Peng, Yuxin and

Liu, Yang",

booktitle = "Proceedings of the 61st Annual Meeting of the Association for Computational Linguistics (Volume 1: Long Papers)",

month = jul,

year = "2023",

address = "Toronto, Canada",

publisher = "Association for Computational Linguistics",

url = "https://aclanthology.org/2023.acl-long.794",

pages = "14197--14209",

}