This SiD-LSG repository contains the code and model checkpoints necessary to replicate the findings of Long and Short Guidance in Score identity Distillation for One-Step Text-to-Image Generation. The technique, Long and Short Guidance (LSG), is used with Score identity Distillation (SiD: ICML 2024 paper, Code) to distill Stable Diffusion models for one-step text-to-image generation.

If you find our work useful or incorporate our findings in your own research, please consider citing our papers:

- SiD:

@inproceedings{zhou2024score,
  title={Score identity Distillation: Exponentially Fast Distillation of Pretrained Diffusion Models for One-Step Generation},
  author={Mingyuan Zhou and Huangjie Zheng and Zhendong Wang and Mingzhang Yin and Hai Huang},
  booktitle={International Conference on Machine Learning},
  url={https://arxiv.org/abs/2404.04057},
  url_code={https://github.com/mingyuanzhou/SiD},
  year={2024}
}

- SiD-LSG:

@article{zhou2024long,
  title={Long and Short Guidance in Score identity Distillation for One-Step Text-to-Image Generation},
  author={Mingyuan Zhou and Zhendong Wang and Huangjie Zheng and Hai Huang},
  journal={ArXiv 2406.01561},
  url={https://arxiv.org/abs/2406.01561},
  url_code={https://github.com/mingyuanzhou/SiD-LSG},
  year={2024}
}

SiD-LSG is a data-free distillation method that generates photo-realistic images in a single step. With a relatively low guidance scale, such as 1.5, it achieves a lower zero-shot Fréchet Inception Distance (FID) than the teacher Stable Diffusion model, at the cost of a reduced CLIP score. The FID is computed by comparing 30k images generated from COCO2014 captions against the COCO2014 validation set.

The one-step generators distilled with SiD-LSG achieve the following FID and CLIP scores:

| Model | Guidance Scale | FID | CLIP |
|---|---|---|---|
| Stable Diffusion 1.5 | 1.5 | 8.71 | 0.302 |
| Stable Diffusion 1.5 | 1.5 (longer training) | 8.15 | 0.304 |
| Stable Diffusion 1.5 | 2 | 9.56 | 0.313 |
| Stable Diffusion 1.5 | 3 | 13.21 | 0.314 |
| Stable Diffusion 1.5 | 4.5 | 16.59 | 0.317 |
| Stable Diffusion 2.1-base | 1.5 | 9.52 | 0.308 |
| Stable Diffusion 2.1-base | 2 | 10.97 | 0.318 |
| Stable Diffusion 2.1-base | 3 | 13.50 | 0.321 |
| Stable Diffusion 2.1-base | 4.5 | 16.54 | 0.322 |
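The trade-off in the table above (FID worsens and CLIP improves as the guidance scale grows) can be inspected with a few lines of Python. This is only a convenience sketch: the numbers are copied from the table, while the `RESULTS` list and the `best_by` helper are illustrative names that do not exist in the repository.

```python
# (model, guidance scale label, FID, CLIP), copied from the table above.
RESULTS = [
    ("Stable Diffusion 1.5", "1.5", 8.71, 0.302),
    ("Stable Diffusion 1.5", "1.5 (longer training)", 8.15, 0.304),
    ("Stable Diffusion 1.5", "2", 9.56, 0.313),
    ("Stable Diffusion 1.5", "3", 13.21, 0.314),
    ("Stable Diffusion 1.5", "4.5", 16.59, 0.317),
    ("Stable Diffusion 2.1-base", "1.5", 9.52, 0.308),
    ("Stable Diffusion 2.1-base", "2", 10.97, 0.318),
    ("Stable Diffusion 2.1-base", "3", 13.50, 0.321),
    ("Stable Diffusion 2.1-base", "4.5", 16.54, 0.322),
]


def best_by(model, metric):
    """Return the row with the lowest FID or the highest CLIP for a model.

    Hypothetical helper for reading the table; not part of the SiD-LSG code.
    """
    rows = [row for row in RESULTS if row[0] == model]
    if metric == "fid":
        return min(rows, key=lambda row: row[2])  # lower FID is better
    if metric == "clip":
        return max(rows, key=lambda row: row[3])  # higher CLIP is better
    raise ValueError("metric must be 'fid' or 'clip'")


if __name__ == "__main__":
    for name in ("Stable Diffusion 1.5", "Stable Diffusion 2.1-base"):
        print(name, best_by(name, "fid"), best_by(name, "clip"))
```

For both teachers, the lowest FID is obtained at guidance scale 1.5 and the highest CLIP score at 4.5.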

To install the necessary packages and set up the environment, follow these steps:

First, clone the repository to your local machine:

git clone https://github.com/mingyuanzhou/SiD-LSG.git

cd SiD-LSG

To create the Conda environment with all the required dependencies and activate it, run:

conda env create -f sid_lsg_environment.yml

conda activate sid_lsg

To train the model, you must supply the training prompts. By default, we use Aesthetic6+, but you may instead choose Aesthetic6.25+, Aesthetic6.5+, or any list of prompts that does not include COCO captions. To obtain the Aesthetic6+ prompts from Hugging Face, follow their provided guidelines. Once you have prepared the Aesthetic6+ prompts, save them as '/data/datasets/aesthetics_6_plus/aesthetics_6_plus.txt'.
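If you assemble your own prompt list, one way to respect the "no COCO captions" requirement is to filter the list before writing it to the expected location. The sketch below assumes the prompt file is plain text with one prompt per line, which is not confirmed by this README; `normalize`, `filter_prompts`, and `write_prompts` are hypothetical helpers, not functions from the repository.

```python
from pathlib import Path


def normalize(text):
    """Lowercase and collapse whitespace so near-identical captions compare equal."""
    return " ".join(text.lower().split())


def filter_prompts(prompts, coco_captions):
    """Drop empty prompts and any prompt that matches a COCO caption.

    Matching is exact after normalization; this is an illustrative choice.
    """
    banned = {normalize(caption) for caption in coco_captions}
    kept = []
    for prompt in prompts:
        key = normalize(prompt)
        if key and key not in banned:
            kept.append(prompt.strip())
    return kept


def write_prompts(prompts, path):
    """Write one prompt per line (assumed format), creating parent folders."""
    path = Path(path)
    path.parent.mkdir(parents=True, exist_ok=True)
    path.write_text("\n".join(prompts) + "\n", encoding="utf-8")
```

A typical call would be `write_prompts(filter_prompts(my_prompts, coco_captions), '/data/datasets/aesthetics_6_plus/aesthetics_6_plus.txt')`, where `my_prompts` and `coco_captions` are lists of strings you have loaded yourself.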

To evaluate the zero-shot FID of the distilled one-step generator, you will first need to download the COCO2014 validation set from COCOdataset, and then prepare the COCO2014 validation set using the following command:

python cocodataset_tool.py --source=/path/to/COCO/val \
    --dest=MS-COCO-256/val --resolution=256x256

Once the images are prepared, place them in the /data/datasets/MS-COCO-256/val folder.

To make an apples-to-apples comparison with previous methods such as GigaGAN, you may use the captions.txt file, obtained from GigaGAN/COCOevaluation, to generate 30k images and use them to compute the zero-shot COCO2014 FID.

After activating the environment, you can run the scripts or use the modules provided in the repository. Example:

sh run_sid.sh 'sid1.5'

Adjust the --batch-gpu parameter according to your GPU memory limitations. To save memory, for example to fit training on a GPU with 24GB of memory, you may set --ema 0 to turn off EMA and set --fp16 1 to use mixed-precision training.
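The effect of lowering --batch-gpu can be estimated with a small calculation. This sketch assumes the training script keeps the convention of the NVlabs/edm codebase it builds on, where the total batch is split evenly across GPUs and --batch-gpu caps the per-GPU micro-batch, with the remainder handled by gradient accumulation. The function name and the example numbers are illustrative and are not taken from run_sid.sh.

```python
def accumulation_rounds(total_batch, num_gpus, batch_gpu):
    """Number of gradient-accumulation rounds per optimizer step.

    Assumes an EDM-style split: total_batch is divided evenly across GPUs,
    and each GPU processes its share in micro-batches of size batch_gpu.
    """
    if total_batch % num_gpus != 0:
        raise ValueError("total_batch must be divisible by num_gpus")
    per_gpu = total_batch // num_gpus
    if per_gpu % batch_gpu != 0:
        raise ValueError("per-GPU batch must be divisible by batch_gpu")
    return per_gpu // batch_gpu


if __name__ == "__main__":
    # Hypothetical setting: total batch 512 on 4 GPUs, 16 samples per micro-batch.
    print(accumulation_rounds(512, 4, 16))
```

Under this assumption, halving --batch-gpu doubles the number of accumulation rounds, trading speed for memory while leaving the effective batch size unchanged.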

The one-step generators produced by SiD-LSG are provided in huggingface/UT-Austin-PML/SiD-LSG.

You can first download the SiD-LSG one-step generators and place them into /data/Austin-PML/SiD-LSG/ or a folder you choose. Alternatively, you can replace /data/Austin-PML/SiD-LSG/ with 'https://huggingface.co/UT-Austin-PML/SiD-LSG/resolve/main/' to download the checkpoint directly from Hugging Face.
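Switching between a local folder and the Hugging Face URL only changes the prefix of the --network argument. A minimal sketch of that substitution, where `network_location` is a hypothetical helper and the two base strings are the ones quoted above:

```python
LOCAL_BASE = "/data/Austin-PML/SiD-LSG/"
HF_BASE = "https://huggingface.co/UT-Austin-PML/SiD-LSG/resolve/main/"


def network_location(filename, base=LOCAL_BASE):
    """Join a checkpoint filename onto a local folder or a Hugging Face base URL."""
    return base.rstrip("/") + "/" + filename.lstrip("/")


if __name__ == "__main__":
    name = "batch512_cfg1.51.51.5_t625_18789_v2.pkl"
    print(network_location(name))           # local copy
    print(network_location(name, HF_BASE))  # direct download
```

The resulting string can be passed as the value of --network in the generation commands.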

Generate example images using user-provided prompts and random seeds:

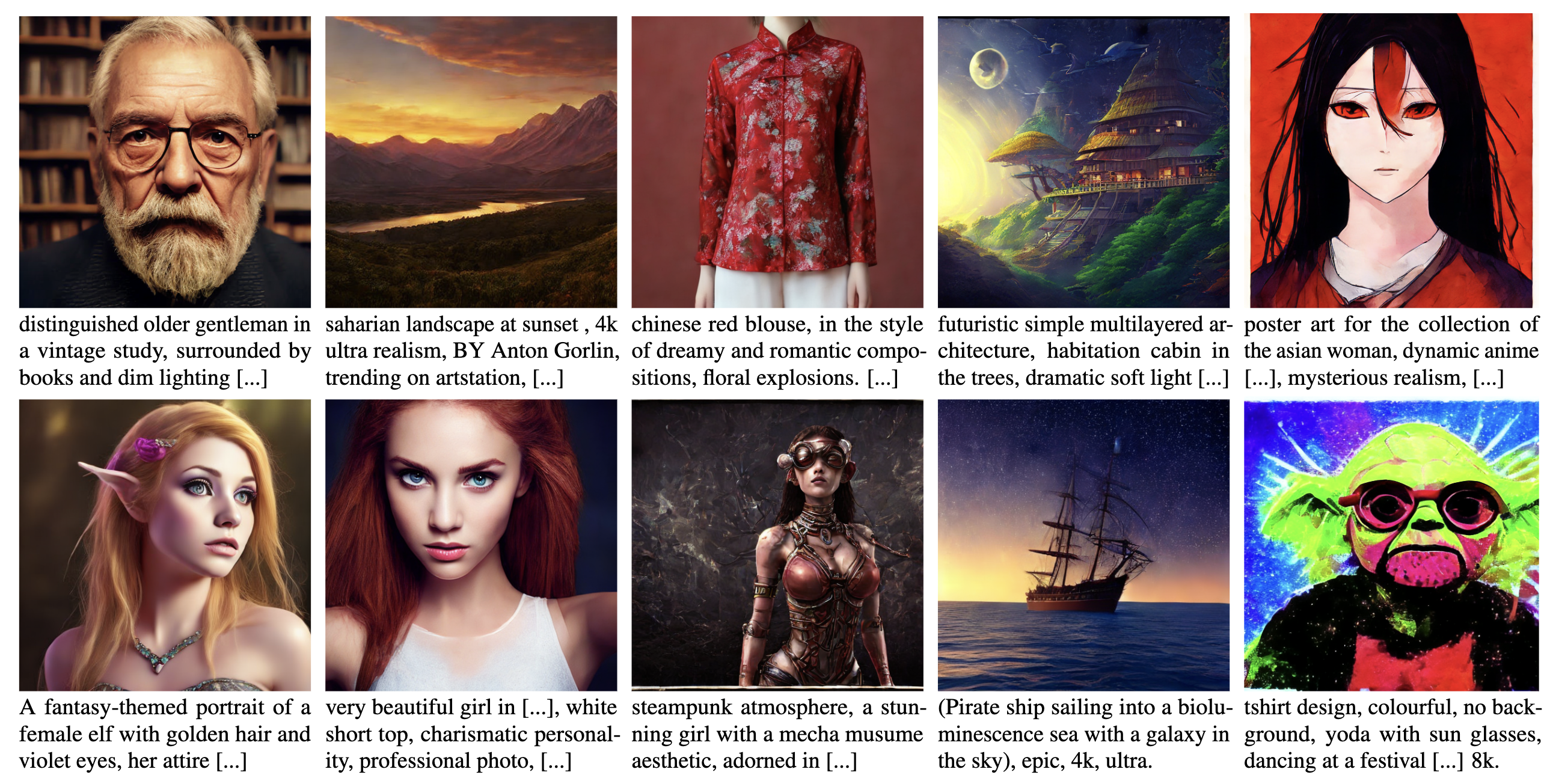
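The generation commands in this section pass --seeds either as a comma-separated list (one seed per prompt) or as an inclusive range such as 0-29999. The sketch below shows one way such a string can be expanded into a list of integers. It is an illustration of the format only; `parse_seeds` is a hypothetical name, and the actual parsing inside generate_onestep.py may differ.

```python
import re


def parse_seeds(spec):
    """Expand a seed specification such as '8,8,2,3' or '0-29999' into a list of ints.

    Ranges are inclusive on both ends; repeated seeds are kept in order.
    """
    seeds = []
    for part in spec.split(","):
        part = part.strip()
        match = re.fullmatch(r"(\d+)-(\d+)", part)
        if match:
            low, high = int(match.group(1)), int(match.group(2))
            seeds.extend(range(low, high + 1))
        else:
            seeds.append(int(part))  # int('057') parses as 57
    return seeds


if __name__ == "__main__":
    print(parse_seeds("668,329,291,288,057,165"))
    print(len(parse_seeds("0-29999")))
```

With a comma-separated list, the number of seeds should match the number of prompts in the file given to --text_prompts.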

- Reproduce Figure 1:

python generate_onestep.py --outdir='image_experiment/example_images/figure1' --seeds='8,8,2,3,2,1,2,4,3,4' --batch=16 --network='/data/Austin-PML/SiD-LSG/batch512_sd21_cfg4.54.54.5_t625_7168_v2.pkl' --repo_id='stabilityai/stable-diffusion-2-1-base' --text_prompts='prompts/fig1-captions.txt' --custom_seed=1

- Reproduce Figure 6 (the columns labeled SD1.5 and SD2.1), ensuring the seeds align with the positions of the prompts within the HPSV2 defined list of prompts:

python generate_onestep.py --outdir='image_experiment/example_images/figure6/sd1.5' --seeds='668,329,291,288,057,165' --batch=6 --network='/data/Austin-PML/SiD-LSG/batch512_cfg4.54.54.5_t625_8380_v2.pkl' --text_prompts='prompts/fig6-captions.txt' --custom_seed=1

python generate_onestep.py --outdir='image_experiment/example_images/figure6/sd2.1base' --seeds='668,329,291,288,057,165' --batch=6 --network='/data/Austin-PML/SiD-LSG/batch512_sd21_cfg4.54.54.5_t625_7168_v2.pkl' --repo_id='stabilityai/stable-diffusion-2-1-base' --text_prompts='prompts/fig6-captions.txt' --custom_seed=1

- Reproduce Figure 8:

python generate_onestep.py --outdir='image_experiment/example_images/figure8' --seeds='4,4,1,1,4,4,1,1,2,7,7,6,1,20,41,48' --batch=16 --network='/data/Austin-PML/SiD-LSG/batch512_sd21_cfg4.54.54.5_t625_7168_v2.pkl' --repo_id='stabilityai/stable-diffusion-2-1-base' --text_prompts='prompts/fig8-captions.txt' --custom_seed=1

- Generation: Generate 30k images to calculate the zero-shot COCO FID (see the comments inside generate_onestep.py for more detail):

# LSG guidance scale kappa1=kappa2=kappa3=kappa4=1.5, longer training

# FID 8.15, CLIP 0.304

torchrun --standalone --nproc_per_node=4 generate_onestep.py --outdir='image_experiment/sid_sd15_runs/sd1.5_kappa1.5_traininglonger/fake_images' --seeds=0-29999 --batch=16 --network='https://huggingface.co/UT-Austin-PML/SiD-LSG/resolve/main/batch512_cfg1.51.51.5_t625_18789_v2.pkl'

- Computing evaluation metrics: Follow GigaGAN to compute FID and CLIP using the 30k images generated with generate_onestep.py; you also need to place captions.txt into the user-defined path for fake_dir.

Download GigaGAN/evaluation

Place evaluate_SiD_t2i_coco256.sh into its folder: GigaGAN/evaluation/scripts

Modify fake_dir= inside evaluate_SiD_t2i_coco256.sh to point to the folder that contains captions.txt and the fake_images folder with 30k fake images, and run:

bash scripts/evaluate_SiD_t2i_coco256.sh

The SiD-LSG code integrates functionalities from Hugging Face/Diffusers into the mingyuanzhou/SiD repository, which was built on NVlabs/edm and pkulwj1994/diff_instruct.

- Mingyuan Zhou: Led the project, debugged and developed the integration of Stable Diffusion and Long-Short Guidance into the SiD codebase, wrote the evaluation pipelines, and performed the experiments.

- Zhendong Wang: Led the effort of integrating Stable Diffusion into the SiD codebase.

- Huangjie Zheng: Led the effort of evaluating the generation results and preparing the COCO dataset.

- Hai Huang: Led the effort in adapting the code for Google's internal computing infrastructure.

- Michael (Qijia) Zhou: Led the effort in preparing the data and participated in adapting the code to Google's internal computing infrastructure.

- All contributors worked closely together to co-develop essential components and write various subfunctions.

To contribute to this project, follow these steps:

- Fork this repository.

- Create a new branch:

git checkout -b <branch_name>

- Make your changes and commit them:

git commit -m '<commit_message>'

- Push to the original branch:

git push origin <project_name>/<location>

- Create the pull request.

Alternatively, see the GitHub documentation on creating a pull request.

If you want to contact me, you can reach me at mingyuan.zhou@mccombs.utexas.edu.

This project uses the following license: Apache-2.0 license.